Drifting Away: Testing ML Models in Production

- 1. Drifting Away: Testing ML Models in Production. Chengyin Eng, Niall Turbitt

- 2. About Chengyin Eng: Data Scientist @ Databricks ▪ Machine Learning Practice Team ▪ Experience: Life Insurance ▪ Teaching ML in Production, Deep Learning, NLP, etc. ▪ MS in Computer Science at University of Massachusetts, Amherst ▪ BA in Statistics & Environmental Studies at Mount Holyoke College, Massachusetts

- 3. About Niall Turbitt: Senior Data Scientist @ Databricks ▪ EMEA ML Practice Team ▪ Experience: Energy & Industrial Applications, e-Commerce, Recommender Systems & Personalisation ▪ MS Statistics, University College Dublin ▪ BA Mathematics & Economics, Trinity College Dublin

- 4. Outline • Motivation • Machine Learning System Life Cycle • Why Monitor? • Types of drift • What to Monitor? • How to Monitor? • Demo

- 5. ML is everywhere, but often fails to reach production • 85% of DS projects fail • 4% of companies succeed in deploying ML models to production Source: https://www.datanami.com/2020/10/01/most-data-science-projects-fail-but-yours-doesnt-have-to/

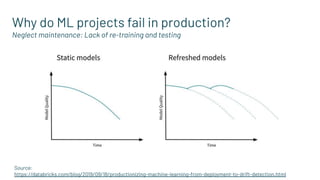

- 6. Why do ML projects fail in production? Neglected maintenance: lack of re-training and testing Source: https://databricks.com/blog/2019/09/18/productionizing-machine-learning-from-deployment-to-drift-detection.html

- 9. This talk focuses on two questions: What are the statistical tests to use when monitoring models in production? What tools can I use to coordinate the monitoring of data and models?

- 10. What this talk is not • A tutorial on model deployment strategies • An exhaustive walkthrough of how to robustly test your production ML code • A prescriptive list of when to update a model in production

- 11. Machine Learning System Life Cycle

- 12. ML system life cycle: Business Problem

- 18. Why Monitor?

- 19. Model deployment is not the end. It is the beginning of model measurement and monitoring ▪ Data distributions and feature types can change over time due to: Upstream Errors, Market Change, Human Behaviour Change ▪ Result: potential model performance degradation

- 20. Models will degrade over time. Challenge: catching this when it happens

- 21. Types of drift ▪ Feature Drift: input feature(s) distributions deviate ▪ Label Drift: label distribution deviates ▪ Prediction Drift: model prediction distribution deviates ▪ Concept Drift: external factors cause the label to evolve

- 22. Feature, Label, and Prediction Drift Sources: https://dataz4s.com/statistics/chi-square-test/ https://towardsdatascience.com/machine-learning-in-production-why-you-should-care-about-data-and-concept-drift-d96d0bc907fb

- 23. Concept drift Source: Krawczyk and Cano 2018. Online Ensemble Learning for Drifting and Noisy Data Streams

- 24. Drift types and actions to take

  | Drift type identified | Action |
  | --- | --- |
  | Feature Drift | ● Investigate feature generation process ● Retrain using new data |
  | Label Drift | ● Investigate label generation process ● Retrain using new data |
  | Prediction Drift | ● Investigate model training process ● Assess business impact of change in predictions |
  | Concept Drift | ● Investigate additional feature engineering ● Consider alternative approach/solution ● Retrain/tune using new data |

- 25. What to Monitor?

- 26. What should I monitor? • Basic summary statistics of features and target • Distributions of features and target • Model performance metrics • Business metrics

- 27. Monitoring tests on data: Numeric Features ▪ Summary statistics: median / mean, minimum, maximum, percentage of missing values ▪ Statistical tests: ▪ Mean: two-sample Kolmogorov-Smirnov (KS) test with Bonferroni correction, Mann-Whitney (MW) test ▪ Variance: Levene test
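
The summary statistics and the Mann-Whitney test listed on this slide can be sketched with pandas and SciPy. This is a minimal illustration, not the demo notebook's code: the `summarize` helper, the synthetic `reference` and `incoming` samples, and the 5% missing-value injection are all assumptions made for the example.

```python
import numpy as np
import pandas as pd
from scipy import stats


def summarize(series: pd.Series) -> dict:
    """Summary statistics from the slide for one numeric feature (hypothetical helper)."""
    return {
        "mean": series.mean(),
        "median": series.median(),
        "min": series.min(),
        "max": series.max(),
        "pct_missing": series.isna().mean() * 100,
    }


rng = np.random.default_rng(0)
# Hypothetical reference (training-time) and incoming (production) samples.
reference = pd.Series(rng.normal(loc=50, scale=5, size=1000))
incoming = pd.Series(rng.normal(loc=55, scale=5, size=1000))
incoming.iloc[:50] = np.nan  # simulate 5% missing values from an upstream error

ref_stats = summarize(reference)
new_stats = summarize(incoming)

# Two-sided Mann-Whitney U test on the non-missing values.
mw_stat, mw_p = stats.mannwhitneyu(
    reference.dropna(), incoming.dropna(), alternative="two-sided"
)
print(ref_stats, new_stats, mw_p)
```

Comparing `ref_stats` with `new_stats` gives the quick sanity check; the Mann-Whitney p-value adds a formal test of whether the two samples differ in location.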

- 28. Numeric Feature Test: Kolmogorov-Smirnov (KS) test with Bonferroni correction. Comparison of two continuous distributions ▪ Null hypothesis (H0): distributions x and y come from the same population ▪ If the KS statistic has a p-value lower than α, reject H0 ▪ Bonferroni correction: adjusts the α level to reduce false positives; $\alpha_{\text{new}} = \alpha_{\text{original}} / n$, where n = total number of feature comparisons
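
A rough sketch of the procedure on this slide, using `scipy.stats.ks_2samp` and dividing α by the number of features compared. The feature names, the synthetic data, and the choice of α = 0.05 are assumptions for illustration only.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)
n_rows = 2000

# Hypothetical reference and incoming data for three numeric features.
reference = {
    "age": rng.normal(40, 10, n_rows),
    "income": rng.normal(60_000, 15_000, n_rows),
    "tenure": rng.normal(5, 2, n_rows),
}
incoming = {
    "age": rng.normal(40, 10, n_rows),             # same distribution
    "income": rng.normal(70_000, 15_000, n_rows),  # mean shifted upwards
    "tenure": rng.normal(5, 2, n_rows),            # same distribution
}

alpha = 0.05
alpha_new = alpha / len(reference)  # Bonferroni: alpha_original / n

drifted = {}
for name in reference:
    result = stats.ks_2samp(reference[name], incoming[name])
    # Reject H0 (same population) only below the corrected threshold.
    drifted[name] = result.pvalue < alpha_new

print(drifted)
```

Without the correction, testing many features at α = 0.05 would flag roughly one in twenty unchanged features by chance alone; dividing by n trades some sensitivity for fewer false alarms.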

- 29. Numeric Feature Test: Levene test. Comparison of variances between two continuous distributions ▪ Null hypothesis (H0): $\sigma_1^2 = \sigma_2^2 = \dots = \sigma_n^2$ ▪ If the Levene statistic has a p-value lower than α, reject H0
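
The Levene test is available as `scipy.stats.levene`. The sketch below assumes two synthetic samples with equal means and different spreads, and an illustrative α of 0.05.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(7)
# Hypothetical samples: same mean, but the incoming data is twice as spread out.
reference = rng.normal(loc=0.0, scale=1.0, size=1500)
incoming = rng.normal(loc=0.0, scale=2.0, size=1500)

alpha = 0.05
# center="median" is SciPy's default and is robust to skewed data.
stat, p_value = stats.levene(reference, incoming, center="median")
variance_drift = p_value < alpha  # reject H0 of equal variances

print(stat, p_value, variance_drift)
```

A test on location alone can miss this kind of change, since both samples are centred on zero, which is presumably why the slide lists a variance test separately.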

- 30. Monitoring tests on data ▪ Numeric Features: summary statistics (median / mean, minimum, maximum, percentage of missing values); statistical tests for the mean (two-sample Kolmogorov-Smirnov (KS) test with Bonferroni correction, Mann-Whitney (MW) test) and for the variance (Levene test) ▪ Categorical Features: summary statistics (mode, number of unique levels, percentage of missing values); statistical test (one-way chi-squared test)

- 31. Categorical Feature Test: One-way chi-squared test. Comparison of two categorical distributions ▪ Null hypothesis (H0): expected distribution = observed distribution ▪ If the chi-squared statistic has a p-value lower than α, reject H0
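
One way to run this test is `scipy.stats.chisquare`, taking the expected counts from the reference data's category proportions scaled to the size of the incoming sample. The `channel`-style categories and counts below are invented for the example.

```python
import pandas as pd
from scipy import stats

# Hypothetical categorical feature in the reference and incoming data.
reference = pd.Series(["web"] * 600 + ["mobile"] * 300 + ["store"] * 100)
incoming = pd.Series(["web"] * 200 + ["mobile"] * 250 + ["store"] * 50)

levels = sorted(reference.unique())
ref_props = reference.value_counts(normalize=True).reindex(levels)
observed = incoming.value_counts().reindex(levels, fill_value=0)

# Expected counts: reference proportions scaled to the incoming sample size,
# so observed and expected totals match as chisquare requires.
expected = ref_props * observed.sum()

stat, p_value = stats.chisquare(f_obs=observed.to_numpy(), f_exp=expected.to_numpy())
print(stat, p_value)
```

This sketch only handles levels that already exist in the reference data. A brand-new level in production has an expected count of zero, so it is better caught by the "number of unique levels" summary statistic than by this test.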

- 32. Monitoring tests on models • Relationship between target and features: Pearson coefficient (numeric target), contingency tables (categorical target) • Model performance: regression models (MSE, error distribution plots, etc.), classification models (ROC, confusion matrix, F1-score, etc.) • Performance on data slices • Time taken to train
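
The two target-feature relationship checks on this slide can be sketched as follows. The synthetic data, the `plan` and `churned` column names, and the idea of comparing a reference window against an incoming window are assumptions for illustration.

```python
import numpy as np
import pandas as pd
from scipy import stats

rng = np.random.default_rng(1)
n = 1000

# Numeric target: Pearson correlation between one feature and the target,
# computed separately for a reference window and an incoming window.
x_ref = rng.normal(size=n)
y_ref = 2.0 * x_ref + rng.normal(scale=0.5, size=n)  # strong linear relationship
x_new = rng.normal(size=n)
y_new = rng.normal(size=n)                           # relationship has vanished

r_ref, _ = stats.pearsonr(x_ref, y_ref)
r_new, _ = stats.pearsonr(x_new, y_new)

# Categorical target: contingency table of a categorical feature vs the label.
df = pd.DataFrame({
    "plan": ["basic", "basic", "premium", "premium", "basic", "premium"],
    "churned": ["yes", "no", "no", "no", "yes", "yes"],
})
table = pd.crosstab(df["plan"], df["churned"])

print(r_ref, r_new)
print(table)
```

A large gap between `r_ref` and `r_new` suggests that the relationship the model learned no longer holds, which is one possible signal of concept drift.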

- 33. How to Monitor?

- 34. Demo: Measuring models in production • Logging and Versioning: MLflow (model), Delta (data) • Statistical Tests: SciPy, statsmodels • Visualizations: seaborn

- 35. MLflow: an open-source platform for the ML lifecycle that helps with operationalizing ML ▪ Tracking: record and query experiments (code, metrics, parameters, artifacts, models) ▪ Projects: packaging format for reproducible runs on any compute platform ▪ Models: general model format that standardizes deployment options ▪ Model Registry: centralized and collaborative model lifecycle management

- 37. Demo Notebook: http://bit.ly/dais_2021_drifting_away

- 38. Conclusion • Model measurement and monitoring are crucial when operationalizing ML models • There is no one-size-fits-all approach: domain- and problem-specific considerations apply • Reproducibility: enable rollbacks and maintain a record of historic performance

- 39. Literature resources • Paleyes et al. 2021. Challenges in Deploying ML • Klaise et al. 2020. Monitoring and explainability of models in production • Rabanser et al. 2019. Failing Loudly: An Empirical Study of Methods for Detecting Dataset Shift • Martin Fowler. Continuous Delivery for Machine Learning

- 40. Emerging open-source monitoring packages • EvidentlyAI • Data Drift Detector • Alibi Detect • scikit-multiflow