DSP_Module5_Rev2.pdf: ICE 3251 Digital Signal Processing, MIT

- 1. ICE 3251 DIGITAL SIGNAL PROCESSING Slides by Dr. Chinmay Rajhans Course by Dr. Anjan Gudigar (A) and Dr. Chinmay Rajhans (B) Module 5: DSP Applications Second Year B. Tech. (Electronics and Instrumentation Engineering) MIT, Manipal, Udupi, Karnataka, India (MAHE) Jan-May 2024 2023-2024

- 2. DSP Syllabus • Discrete Time Signals and Systems: Standard Discrete time signals, representation, classification, mathematical operations on discrete time signals, response of LTI discrete time systems in time domain, classification of discrete time systems. Linear convolution, cross correlation and autocorrelation. (10 hrs) • Discrete Fourier Transform (DFT): DFT, properties of the DFT, use of DFT in linear filtering, filtering of long data sequences, DFT as linear transformation, Inverse DFT, FFT Algorithms, Radix 2 DIT-FFT and DIF-FFT. (10 hrs) • IIR filters: Frequency response of analog and digital filters, characteristics of Butterworth, Chebyshev and elliptic filters, classical filter design, design of digital filters by impulse invariance, bilinear transformation and matched Z transform. (12 hrs)

- 3. DSP Syllabus (Contd) • FIR Filters: Linear phase FIR Filters, characteristics, frequency response, design using windows, frequency sampling design. (06 hrs) • Implementation of Discrete Time Systems: Structures for FIR systems – Direct form, cascade form, Structures for IIR systems – Direct form (DF I and II), cascade and parallel form structures, lattice ladder structures. (06 hrs) • Applications of digital signal processing: Speech processing- speech coding, recognition, speech synthesis, biomedical signal processing, Image processing applications. (04 hrs)

- 4. Books 1. Proakis John G, Manolakis Dimitris G., Digital Signal Processing, PHI, (4e), 2003. 2. Rabiner L.R and Gold Bernard, Theory and Applications of Digital Signal Processing, PHI, 2002. 3. Sanjit Mitra K, Digital Signal Processing: A Computer Based Approach, TMH, (4e), 2013. 4. A. Nagoor Kani, Digital Signal Processing, Tata McGraw Hill Education, (2e), 2017. 5. Johnson Johny R, Introduction to digital signal processing, Prentice Hall Of India, 2003.

- 5. COURSE OUTCOMES: CO1: Analyze signals and LTI systems in discrete time domain and Z domain. CO2: Evaluate Discrete Fourier Transform (DFT) for discrete time signals. CO3: Analyze digital IIR filters. CO4: Implement digital FIR filters. CO5: Apply the principles of digital signal processing to real world problems.

- 6. DSP Module 5 Syllabus • Applications of digital signal processing: Speech processing- speech coding, recognition, speech synthesis, biomedical signal processing, Image processing applications.

- 8. Speech Processing • The speech signal is a slowly time-varying signal. • The speech signal can be broadly classified into voiced and unvoiced signals. • Voiced signals are approximately periodic. • Unvoiced signals are random in nature. • Over a short segment of 15 to 20 ms, a voiced signal has a fundamental frequency that characterizes the sound being spoken. • The frequency components of the sounds in a speech signal lie mostly within 4 kHz.
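
The fundamental frequency of a voiced segment can be estimated from the lag at which the segment's autocorrelation peaks. The sketch below is a minimal illustration and not part of the slides: the function name `estimate_pitch_hz`, the 60 to 400 Hz search range, and the synthetic 200 Hz frame are all assumptions chosen for the example.

```python
import math

def estimate_pitch_hz(frame, fs, fmin=60.0, fmax=400.0):
    """Estimate the pitch of one frame from its autocorrelation peak."""
    lag_min = int(fs / fmax)          # shortest period searched
    lag_max = int(fs / fmin)          # longest period searched
    best_lag, best_val = 0, 0.0
    for lag in range(lag_min, lag_max + 1):
        # Unnormalized autocorrelation at this lag
        val = sum(frame[n] * frame[n - lag] for n in range(lag, len(frame)))
        if val > best_val:
            best_lag, best_val = lag, val
    return fs / best_lag if best_lag else 0.0

fs = 8000                                            # assumed sampling rate
frame = [math.sin(2 * math.pi * 200 * n / fs)        # 20 ms synthetic voiced frame
         for n in range(160)]
pitch = estimate_pitch_hz(frame, fs)
print(round(pitch, 1))
```

For an unvoiced (noise-like) frame the autocorrelation has no dominant peak in the search range, which is one simple cue for separating the two classes.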

- 9. Broad Classification of Speech Processing • Speech analysis: In general, the process of extracting the features of speech, then coding or directly digitizing the speech, and then reducing the bit rate is called speech analysis. It is used in speech recognition, speaker verification and speaker identification. • Speech synthesis: In general, the process of decoding a speech signal represented in the form of codes is called speech synthesis. It is used in the conversion of text to speech.

- 10. Speech Coding and Decoding • Speech coding is the digital representation of speech at the minimum bit rate that does not degrade voice quality. • Speech decoding is the conversion of digital speech data back to analog speech.

- 11. Speech Coding • The older method for quality transmission and reception of digital speech over telephone lines uses a bit rate of 64 kbps (kilobits per second). • This method is Pulse Code Modulation (PCM): the speech signal is sampled at 8 kHz, each sample is quantized to 13 bits and then compressed to 8 bits using the μ-law or A-law standard, giving a transmission rate of 64 kbps (8000 samples per second x 8 bits per sample = 64000 bits per second). • A number of digital speech coding techniques have been developed to represent speech at lower bit rates, down to about 1000 bits per second, to use transmission channels effectively and to reduce the memory needed for storage and retrieval of speech.
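
The PCM bit-rate arithmetic and the companding step above can be sketched as follows. This is a simplified illustration that uses the continuous μ-law curve with μ = 255; the segmented 8-bit encoding actually used in telephony differs in detail. The helper names and the uniform quantizer are assumptions made for the example.

```python
import math

MU = 255  # mu-law parameter commonly used for 8-bit telephony companding

def mu_law_compress(x, mu=MU):
    """Map a sample in [-1, 1] to [-1, 1] on a logarithmic (mu-law) curve."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)

def mu_law_expand(y, mu=MU):
    """Inverse of mu_law_compress."""
    return math.copysign(((1 + mu) ** abs(y) - 1) / mu, y)

def quantize_8bit(y):
    """Uniformly quantize a value in [-1, 1] to an integer code 0..255 (assumed layout)."""
    return min(255, int((y + 1.0) / 2.0 * 256))

sample_rate_hz = 8000
bits_per_sample = 8
bit_rate = sample_rate_hz * bits_per_sample   # bits per second

quiet_sample = 0.01
code = quantize_8bit(mu_law_compress(quiet_sample))
print(bit_rate, code)
```

The logarithmic curve spends more of the 256 codes on small amplitudes, which is why 8 companded bits can stand in for a wider linear word.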

- 12. Speech Coding • Speech coding techniques can be broadly classified into waveform coding techniques and parametric coding techniques. • Some of the popular waveform coding techniques are Adaptive Pulse Code Modulation (APCM), Differential Pulse Code Modulation (DPCM) and Adaptive Differential Pulse Code Modulation (ADPCM). • Some of the parametric methods of speech coding are Linear Predictive Coding (LPC), Mel-Frequency Cepstrum Coefficients (MFCC), Code Excited Linear Predictive Coding (CELP) and Vector Sum Excited Linear Prediction (VSELP).

- 13. Adaptive Differential Pulse Code Modulation (ADPCM) Encoder

- 14. Adaptive Differential Pulse Code Modulation (ADPCM) Decoder
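
The idea behind an ADPCM encoder and decoder pair can be sketched in a few lines: both sides predict the next sample, only the quantized prediction error is transmitted, and the quantizer step size adapts to the signal. The code below is a deliberately simplified sketch, not a standardized ADPCM codec; the first-order predictor (previous reconstructed sample), the 3-bit code range, and the `grow`/`shrink` adaptation factors are all assumptions.

```python
def _adapt(step, code, levels, grow, shrink):
    """Enlarge the step after a near-saturated code, otherwise shrink it."""
    step *= grow if abs(code) >= levels - 1 else shrink
    return max(step, 1e-4)

def adpcm_encode(samples, step0=0.05, grow=1.5, shrink=0.9, levels=4):
    """Return signed integer codes in [-levels, levels - 1] (3 bits for levels=4)."""
    codes, pred, step = [], 0.0, step0
    for x in samples:
        err = x - pred                                   # prediction error
        code = max(-levels, min(levels - 1, round(err / step)))
        codes.append(code)
        pred += code * step                              # mirror the decoder
        step = _adapt(step, code, levels, grow, shrink)
    return codes

def adpcm_decode(codes, step0=0.05, grow=1.5, shrink=0.9, levels=4):
    """Rebuild the waveform by accumulating the dequantized errors."""
    out, pred, step = [], 0.0, step0
    for code in codes:
        pred += code * step
        out.append(pred)
        step = _adapt(step, code, levels, grow, shrink)
    return out

codes = adpcm_encode([0.5] * 200)      # a step input, for illustration
decoded = adpcm_decode(codes)
print(codes[:6], round(decoded[-1], 3))
```

Because the encoder updates its predictor from the same reconstructed value the decoder will compute, the two stay in step without any side information.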

- 15. Mel-Frequency Cepstrum Coefficients (MFCC)

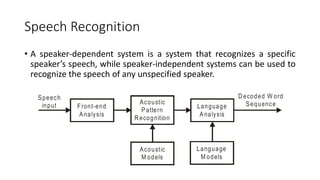
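
MFCC computation warps the frequency axis onto the mel scale before the cepstrum is taken. A commonly used analytic form of that warping is m = 2595 log10(1 + f / 700); the sketch below assumes this form (the slides do not state which variant is intended), and the helper names are hypothetical.

```python
import math

def hz_to_mel(f_hz):
    """Convert frequency in Hz to mels (assumed 2595/700 variant of the formula)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_filter_centers(n_filters, f_min_hz, f_max_hz):
    """Center frequencies (Hz) of filters spaced uniformly on the mel axis."""
    m_lo, m_hi = hz_to_mel(f_min_hz), hz_to_mel(f_max_hz)
    return [mel_to_hz(m_lo + (m_hi - m_lo) * (i + 1) / (n_filters + 1))
            for i in range(n_filters)]

centers = mel_filter_centers(10, 0.0, 4000.0)   # 4 kHz speech band
print([round(c) for c in centers])
```

The centers crowd together at low frequencies and spread out at high frequencies, mirroring the resolution of human hearing.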

- 16. Speech Recognition • A speaker-dependent system is a system that recognizes a specific speaker’s speech, while speaker-independent systems can be used to recognize the speech of any unspecified speaker.

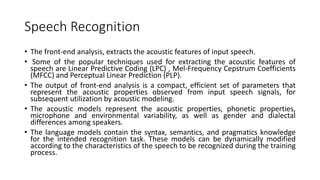

- 17. Speech Recognition • The front-end analysis extracts the acoustic features of the input speech. • Some of the popular techniques used for extracting the acoustic features of speech are Linear Predictive Coding (LPC), Mel-Frequency Cepstrum Coefficients (MFCC) and Perceptual Linear Prediction (PLP). • The output of front-end analysis is a compact, efficient set of parameters that represent the acoustic properties observed in the input speech signal, for subsequent use by acoustic modeling. • The acoustic models represent the acoustic properties, phonetic properties, microphone and environmental variability, as well as gender and dialectal differences among speakers. • The language models contain the syntax, semantics, and pragmatics knowledge for the intended recognition task. These models can be dynamically modified during the training process according to the characteristics of the speech to be recognized.

- 18. Speech Recognition • A speech recognition system has to be trained with known speech before it is used for recognition. • Acoustic pattern recognition measures the similarity between an input speech and a reference model (obtained during training) and determines the best match for the input speech. • Some popular methods of acoustic pattern matching are Dynamic Time Warping (DTW), Hidden Markov Modeling (HMM), discrete HMM (DHMM), Continuous-Density HMM (CDHMM) and Vector Quantization (VQ). • Language analysis is important in speech recognition for Large Vocabulary Continuous Speech Recognition (LVCSR) tasks. The speech decoding process needs to invoke knowledge of pronunciation, lexicon, syntax, and pragmatics in order to produce a satisfactory output text sequence.
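
Of the pattern matching methods listed above, Dynamic Time Warping is the simplest to show in code: it aligns two feature sequences of different lengths and returns the accumulated distance along the best alignment. The sketch below is a minimal version that assumes scalar features and an absolute-difference local cost; real systems would compare feature vectors such as MFCC frames.

```python
def dtw_distance(a, b):
    """Accumulated cost of the best monotonic alignment between sequences a and b."""
    inf = float("inf")
    n, m = len(a), len(b)
    # d[i][j] = cost of aligning the first i items of a with the first j items of b
    d = [[inf] * (m + 1) for _ in range(n + 1)]
    d[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            d[i][j] = cost + min(d[i - 1][j],      # a advances, b repeats
                                 d[i][j - 1],      # b advances, a repeats
                                 d[i - 1][j - 1])  # both advance
    return d[n][m]

reference = [1, 2, 3]          # template obtained during training (illustrative)
slow_utterance = [1, 2, 2, 3]  # same pattern spoken more slowly
print(dtw_distance(reference, slow_utterance))
```

A recognizer built this way would compute the distance from the input to every stored template and pick the template with the smallest value.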

- 19. Speech Synthesis

- 20. Speech Synthesis • The process of transforming text into speech contains two phases. • The first phase consists of text analysis and phonetic analysis. The second phase is the generation of the speech signal, which can be divided into two sub-phases: the search for speech segments in a database (or the creation of these segments) and the implementation of the prosodic features. • Text analysis includes the tasks of text normalization and linguistic analysis. In text normalization, numbers and symbols are converted to words and abbreviations are replaced by their corresponding whole words or phrases, so that the whole text consists of words as a human would utter them.
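
Text normalization as described above can be sketched with a small lookup table and a digit expander. This is a toy illustration: the abbreviation table, the digit-by-digit reading of numbers, and the function name `normalize` are assumptions, and a practical system would need far richer rules.

```python
import re

# Hypothetical abbreviation table for the example
ABBREVIATIONS = {"Dr.": "Doctor", "kHz": "kilohertz", "etc.": "et cetera"}
DIGITS = ["zero", "one", "two", "three", "four",
          "five", "six", "seven", "eight", "nine"]

def normalize(text):
    """Expand known abbreviations, then spell out every digit as a word."""
    for abbr, full in ABBREVIATIONS.items():
        text = text.replace(abbr, full)
    return re.sub(r"\d+",
                  lambda m: " ".join(DIGITS[int(c)] for c in m.group()),
                  text)

print(normalize("Dr. Rao samples at 8 kHz"))
```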

- 21. Speech Synthesis • Linguistic analysis aims at understanding the content of the text and the exact meaning of each uttered word, and provides prosodic information, such as the position of pauses and the distinction between interrogative clauses and statements, for subsequent processing. • Phonetic analysis assigns a phonetic transcription to each word; this process is called grapheme-to-phoneme conversion. • A grapheme is the smallest unit of a written word, and a phoneme is the smallest unit of speech.

- 22. Speech Synthesis • Prosody refers to the rhythm of speech, stress patterns, pitch, duration, intonation, etc., and it plays a very important role in the understandability of speech. In prosodic analysis, prosody features are added to synthetic speech so that it resembles natural speech. Moreover, hierarchical rules have been developed to control the timing and fundamental frequency, which makes the flow of speech in synthesis systems sound more natural. • The speech synthesis block finally generates the speech signal. This can be done by selecting a speech unit for every phoneme from a database, using an appropriate search process. The resulting short units of speech are joined together to produce the final speech signal.

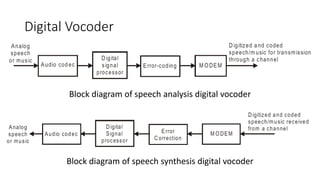

- 23. Digital Vocoder • Block diagram of speech analysis digital vocoder • Block diagram of speech synthesis digital vocoder

- 24. Dual Tone Multi Frequency (DTMF) in Telephone Dialing
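
In DTMF dialing each key is signalled by the sum of one low-group and one high-group sinusoid, and a receiver can identify the key by measuring signal power at the eight candidate frequencies. The sketch below generates a tone and detects it with the Goertzel recursion. The standard DTMF frequency grid is used; the 8 kHz sampling rate, the 50 ms tone duration, and the function names are assumptions for the example.

```python
import math

LOW_HZ = [697, 770, 852, 941]          # row frequencies
HIGH_HZ = [1209, 1336, 1477, 1633]     # column frequencies
KEYS = ["123A", "456B", "789C", "*0#D"]

def dtmf_tone(key, fs=8000, duration_s=0.05):
    """Return samples of the two-tone signal for one key."""
    for r, row in enumerate(KEYS):
        if key in row:
            f1, f2 = LOW_HZ[r], HIGH_HZ[row.index(key)]
            break
    else:
        raise ValueError(f"not a DTMF key: {key!r}")
    return [0.5 * math.sin(2 * math.pi * f1 * k / fs)
            + 0.5 * math.sin(2 * math.pi * f2 * k / fs)
            for k in range(int(fs * duration_s))]

def goertzel_power(x, f_hz, fs):
    """Signal power at a single frequency via the Goertzel recursion."""
    coeff = 2.0 * math.cos(2.0 * math.pi * f_hz / fs)
    s1 = s2 = 0.0
    for v in x:
        s1, s2 = v + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2

def detect_key(x, fs=8000):
    """Pick the strongest row and column frequency and look up the key."""
    r = max(range(4), key=lambda i: goertzel_power(x, LOW_HZ[i], fs))
    c = max(range(4), key=lambda i: goertzel_power(x, HIGH_HZ[i], fs))
    return KEYS[r][c]

print(detect_key(dtmf_tone("5")))
```

Goertzel is a natural fit here because only eight frequencies matter, so a full FFT would compute many bins that are never used.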

- 25. Biomedical Signal Processing: Biomedical signal classification • Bioelectric signals: Signals generated by nerve cells and muscle cells. • Biomagnetic signals: The brain, heart and lungs produce extremely weak magnetic fields, which carry information additional to that obtained from bioelectric signals. • Bioimpedance signals: The tissue impedance reveals information about tissue composition, blood volume and distribution, and more. It is usually obtained as the ratio of the voltage measured at the desired spot to the current injected using electrodes. • Bioacoustic signals: Sound or acoustic signals are created by the flow of blood through the heart, its valves, or vessels, and by the flow of air through the upper and lower airways and lungs. Sound signals are also produced by the digestive tract, joints and the contraction of muscles. These signals can be recorded using microphones. • Biomechanical signals: Motion and displacement signals, pressure, tension and flow signals. • Biochemical signals: Chemical measurements from living tissue or samples analyzed in a laboratory. • Biooptical signals: Blood oxygenation obtained by measuring transmitted and backscattered light from a tissue, estimation of heart output by dye dilution.

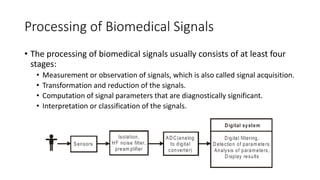

- 26. Processing of Biomedical Signals • The processing of biomedical signals usually consists of at least four stages: • Measurement or observation of signals, which is also called signal acquisition. • Transformation and reduction of the signals. • Computation of signal parameters that are diagnostically significant. • Interpretation or classification of the signals.
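
The four stages above can be illustrated end to end on a toy heartbeat signal. Everything in this sketch is an assumption made for the example: the signal is synthetic (unit spikes plus slow baseline drift), the baseline is removed with a trailing moving average, the diagnostic parameter is the mean heart rate, and the labels use the commonly quoted 60 and 100 beats-per-minute resting limits.

```python
import math

def acquire(fs=250, seconds=10, beat_period_s=0.8):
    """Stage 1 (synthetic stand-in for acquisition): spikes plus baseline drift."""
    period = round(beat_period_s * fs)
    return [(1.0 if n % period == period // 2 else 0.0)
            + 0.1 * math.sin(2 * math.pi * 0.3 * n / fs)
            for n in range(fs * seconds)]

def remove_baseline(x, window=50):
    """Stage 2: subtract a trailing moving average to suppress slow drift."""
    out = []
    for n in range(len(x)):
        seg = x[max(0, n - window + 1): n + 1]
        out.append(x[n] - sum(seg) / len(seg))
    return out

def heart_rate_bpm(y, fs, threshold=0.5):
    """Stage 3: find spikes and convert the mean beat interval to beats per minute."""
    peaks = [n for n, v in enumerate(y) if v > threshold]
    intervals = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    return 60.0 / (sum(intervals) / len(intervals))

def classify(bpm):
    """Stage 4: illustrative labels only, not a clinical rule."""
    if bpm < 60:
        return "bradycardia"
    if bpm > 100:
        return "tachycardia"
    return "normal"

fs = 250
bpm = heart_rate_bpm(remove_baseline(acquire(fs=fs)), fs)
label = classify(bpm)
print(round(bpm, 1), label)
```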

- 27. Biomedical Application Domains • Information gathering: The measurement of phenomena to understand the system. • Diagnosis: Detection of malfunction, pathology or abnormality. • Monitoring: To obtain continuous or periodic information about the system. • Therapy and control: Modify the behaviour of the system and ensure the result. • Evaluation: Proof of performance, quality control, effect of treatment.

- 28. Thank You Time for Questions Dr. Chinmay Rajhans You can email queries / questions / feedback at [email protected] with subject: DSP Module 5 Queries