Engineering Intelligent NLP Applications Using Deep Learning – Part 2

- 1. Engineering Intelligent NLP Applications Using Deep Learning – Part 2 Saurabh Kaushik

- 2. Agenda • Part 1: • Why NLP? • What is NLP? • What is Word & Sentence Modelling in NLP? • What is Word Representation in NLP? • What is Language Processing in NLP? • Part 2: • Why DL for NLP? • What is DL? • What is DL for NLP? • How do RNNs work for NLP? • How do CNNs work for NLP?

- 3. WHY DL FOR NLP?

- 4. Why DL for NLP? • Words as “One-Hot” Vectors: • Most traditional, rule-based natural language processing procedures represent words as “one-hot” encoded vectors. • Two different words have no interaction between them: any two distinct one-hot vectors are orthogonal. • “One-hot” encoding produces enormously long vectors for a large vocabulary. • Lack of Lexical Semantics: • Much of the value in NLP comes from understanding a word in relation to its neighbors and their syntactic relationships. • Traditional models largely focus on syntactic-level representations instead of semantic-level representations. • Problems with Bag of Words: • Bag-of-words models, including TF-IDF models, cannot distinguish certain contexts. • Sentiment analysis can be easy for longer texts. • However, for a dataset of single-sentence movie reviews (Pang and Lee, 2005), accuracy did not rise above 80% for more than 7 years.
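To make the “no interaction” point concrete, here is a minimal Python sketch with a three-word toy vocabulary (the words are illustrative assumptions, not from the slide). The dot product of any two distinct one-hot vectors is 0, so the encoding carries no notion of similarity, and the vector length always equals the vocabulary size.

```python
# Minimal sketch: one-hot vectors over a hypothetical three-word vocabulary.
vocab = ["hotel", "motel", "pizza"]

def one_hot(word, vocab):
    """Return a vector with a 1 at the word's index and 0 everywhere else."""
    vec = [0] * len(vocab)
    vec[vocab.index(word)] = 1
    return vec

def dot(u, v):
    """Plain dot product, used here as a similarity score."""
    return sum(a * b for a, b in zip(u, v))

hotel = one_hot("hotel", vocab)
motel = one_hot("motel", vocab)

print(hotel)              # [1, 0, 0]
print(dot(hotel, motel))  # 0: "hotel" and "motel" look completely unrelated
print(dot(hotel, hotel))  # 1
```

With a vocabulary of a million words, each vector would have a million entries, and every pair of distinct words would still score 0.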

- 5. What is the Rule-Based Approach? Task: find the part-of-speech tag of each word. • if wi.form == ‘John’: wi.pos = ‘noun’ (Good) • if wi.form == ‘majors’: wi.pos = ‘noun’ (Really?) • if wi.form == ‘majors’ and wi-1.form == ‘two’: wi.pos = ‘noun’ (Too specific) • if wi.form == ‘studies’ and wi-1.pos == ‘num’: wi.pos = ‘noun’ (Keep doing this...) Img: Jinho D. Choi – Machine Learning in NLP PPT

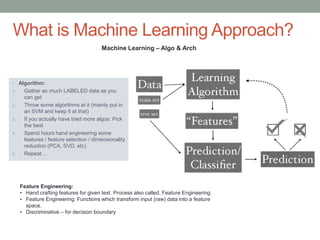
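The rules on this slide can be sketched as a tiny Python tagger. This is an illustration under assumptions: the hypothetical `tag` function, the extra `num` rule for number words, and the example sentences are ours, added only so that the `wi-1.pos == 'num'` rule has something to fire on.

```python
def tag(words):
    """Apply hand-written rules left to right; words no rule covers stay None."""
    tags = [None] * len(words)
    for i, form in enumerate(words):
        prev_form = words[i - 1] if i > 0 else None
        prev_pos = tags[i - 1] if i > 0 else None
        if form == "John":
            tags[i] = "noun"
        elif form.isdigit() or form in {"two", "three"}:
            tags[i] = "num"   # hypothetical extra rule, not on the slide
        elif form == "majors" and prev_form == "two":
            tags[i] = "noun"  # context rule: previous word form
        elif form == "studies" and prev_pos == "num":
            tags[i] = "noun"  # context rule: previous word's tag
    return tags

print(tag(["John", "studies", "two", "majors"]))  # ['noun', None, 'num', 'noun']
print(tag(["three", "studies"]))                  # ['num', 'noun']
```

Every new sentence pattern needs another rule, which is exactly the brittleness the slide's annotations point at.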

- 6. What is the Machine Learning Approach? Machine Learning – Algorithm & Architecture: 1. Gather as much LABELED data as you can get. 2. Throw some algorithms at it (often just an SVM, and leave it at that). 3. If you have actually tried more algorithms, pick the best. 4. Spend hours hand-engineering features, doing feature selection, or reducing dimensionality (PCA, SVD, etc.). 5. Repeat… • Feature Engineering: • Hand-crafting features for a given text; this process is called feature engineering. • Features are functions that transform the input (raw) data into a feature space. • Discriminative models then learn a decision boundary in that feature space.

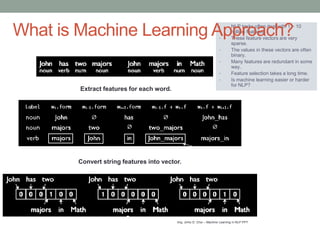

- 7. What is the Machine Learning Approach? Extract features for each word, then convert the string features into a vector. • NLP tasks often deal with 1 to 10 million features. • These feature vectors are very sparse. • The values in these vectors are often binary. • Many features are redundant in some way. • Feature selection takes a long time. • Is machine learning easier or harder for NLP? Img: Jinho D. Choi – Machine Learning in NLP PPT
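The “extract features, then vectorize” step can be sketched as follows, assuming three hypothetical feature templates (current form, previous form, 3-letter suffix). Each distinct feature string gets a column index, and each word is stored sparsely as the list of its active (value 1) columns. This is why such vectors are binary and very sparse.

```python
def extract_features(words, i):
    """Return string features for the word at position i (templates are illustrative)."""
    prev = words[i - 1] if i > 0 else "<s>"
    return [
        "form=" + words[i].lower(),
        "prev=" + prev.lower(),
        "suffix3=" + words[i][-3:].lower(),
    ]

class FeatureIndex:
    """Maps each distinct feature string to a column index, growing as needed."""

    def __init__(self):
        self.index = {}

    def vectorize(self, features, grow=True):
        """Return the sorted column indices of the active (value 1) features."""
        cols = []
        for f in features:
            if f not in self.index and grow:
                self.index[f] = len(self.index)
            if f in self.index:
                cols.append(self.index[f])
        return sorted(cols)

words = ["John", "studies", "two", "majors"]
fi = FeatureIndex()
rows = [fi.vectorize(extract_features(words, i)) for i in range(len(words))]
print(rows)           # [[0, 1, 2], [3, 4, 5], [6, 7, 8], [9, 10, 11]]
print(len(fi.index))  # 12 columns in total
```

With a real corpus the same index quickly reaches millions of columns, while each row still has only a handful of active entries.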

- 8. Why DL vs ML? • Machine Learning is about: • Features: feature engineering is an explicit and mostly manual process. It is painful, over-specified and often incomplete, and it takes a long time to design and validate. • Representation: ML uses a specific word-representation framework tied to the problem and its algorithm. • Learning: it is mostly supervised learning. • Deep Learning is about: • Features: features are identified and learned automatically; learned features are easy to adapt and fast to learn. • Representation: DL provides a very flexible, universal, (almost) learnable framework for representing words and visual and linguistic information. • Learning: DL learns from both unsupervised data (raw text, image, audio content) and supervised data (sentiment-labeled, POS-tagged). (Figure labels: NER, POS, WordNet.)

- 9. How Is Classical NLP Different from Deep Learning for NLP?

- 10. What are the Other Major Reasons for Exploring DL for NLP? • Learning Representations • Handcrafted features are time-consuming to build, incomplete, and over-specified. • They need to be redone for each specific domain's data. • Need for Distributional Similarity & Distributed Representations • Current NLP systems are incredibly fragile because of their atomic symbol representation. • Unsupervised Feature and Weight Learning • Most NLP & ML techniques require labelled data (supervised learning). • Learning Multiple Levels of Representation • Successive model layers learn deeper intermediate representations. • Language is composed of words and phrases, so ML models need compositionality. • Recursion: the same operator (word feature) is applied repeatedly to different components (the words in a sentence). • Why Now? • New methods of unsupervised pre-training • More efficient parameter estimation • Better understanding of parameter regularization

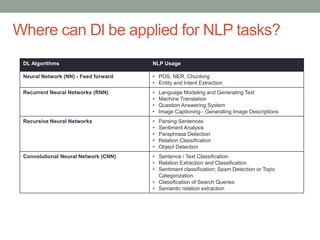

- 11. Where can DL be applied to NLP tasks?

| DL Algorithm | NLP Usage |
|---|---|
| Neural Network (NN), feed-forward | POS tagging, NER, chunking; entity and intent extraction |
| Recurrent Neural Networks (RNN) | Language modeling and text generation; machine translation; question answering systems; image captioning (generating image descriptions) |
| Recursive Neural Networks | Parsing sentences; sentiment analysis; paraphrase detection; relation classification; object detection |
| Convolutional Neural Network (CNN) | Sentence / text classification; relation extraction and classification; sentiment classification, spam detection, or topic categorization; classification of search queries; semantic relation extraction |

- 12. WHAT IS DL?

- 13. What is a Neural Network? • In a human neuron: • A neuron is a many-inputs / one-output unit. • Its output can be excited or not excited. • Incoming signals from other neurons determine whether the neuron shall excite (“fire”). • The output is subject to attenuation in the synapses, the junctions between neurons. • In a computer neuron: 1. Take the inputs. 2. Calculate the (weighted) summation of the inputs. 3. Compare it with the threshold set during the learning stage. • Artificial neural networks are designed to solve problems by mimicking the structure and function of our nervous system. • Neural networks are built from simulated neurons, joined together in a variety of ways to form a network. • A neural network resembles the human brain in two ways: • it acquires knowledge through learning; • this knowledge is stored in interconnection strengths, called synaptic weights.

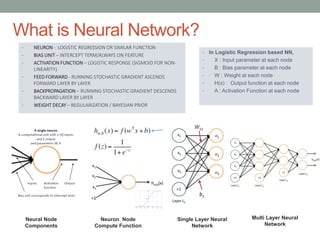
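The three steps of a computer neuron can be sketched as a threshold unit. Here the weights and the threshold are hand-picked assumptions (they implement logical AND) rather than values set during learning, and the summation is weighted by the synaptic weights.

```python
def threshold_neuron(inputs, weights, threshold):
    """Take the inputs, sum them with their weights, and compare with the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0  # 1 = "fire", 0 = "not excited"

weights = [1.0, 1.0]  # hypothetical synaptic weights
threshold = 1.5       # hypothetical threshold: fires only when both inputs are on

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, threshold_neuron(x, weights, threshold))
```

Learning, in this picture, means adjusting `weights` and `threshold` from data instead of choosing them by hand.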

- 14. What is a Neural Network? • In a logistic-regression-based NN: • X: input at each node • B: bias parameter at each node • W: weight at each node • A: activation function at each node • H(x): output function at each node, i.e. H(x) = A(W·X + B) • Neuron: logistic regression or a similar function • Bias unit: intercept term / always-on feature • Activation function: logistic response (sigmoid, for non-linearity) • Feed-forward: computing the activations forward, layer by layer • Backpropagation: propagating the error gradients backward, layer by layer, so that stochastic gradient descent can update the weights • Weight decay: regularization / Bayesian prior (Figure panels: Single Layer Neural Network, Multi Layer Neural Network, Neuron Node Compute Function, Neural Node Components.)

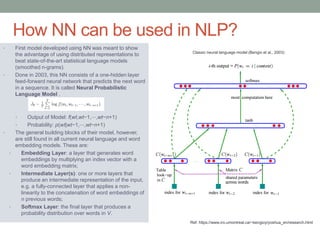
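A single logistic neuron illustrates most of these terms at once. This is a minimal sketch, assuming a toy dataset (logical OR), a learning rate of 0.5 and a weight-decay coefficient of 1e-3, all chosen for illustration. `predict` is the feed-forward pass; `train` runs stochastic gradient descent on the cross-entropy loss. For one sigmoid unit, backpropagation reduces to the single gradient `err * x`, and the extra `weight_decay * w` term is the L2 (Gaussian-prior) penalty.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Feed-forward pass: weighted sum plus bias, then the sigmoid activation."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train(data, lr=0.5, weight_decay=1e-3, epochs=2000, seed=0):
    """Stochastic gradient descent on cross-entropy loss with L2 weight decay."""
    rng = random.Random(seed)
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        x, y = rng.choice(data)       # one random example per step ("stochastic")
        err = predict(w, b, x) - y    # dLoss/dz for a sigmoid + cross-entropy unit
        w = [wi - lr * (err * xi + weight_decay * wi) for wi, xi in zip(w, x)]
        b -= lr * err                 # the bias (always-on feature) is not decayed
    return w, b

data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]  # logical OR
w, b = train(data)
print([round(predict(w, b, x)) for x, _ in data])
```

In a multi-layer network, the same `err` signal is multiplied back through each layer's weights and activation derivatives; that chain-rule bookkeeping is what backpropagation adds.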

- 15. How can NN be used in NLP? Classic neural language model (Bengio et al., 2003) • The first neural language model was meant to show the advantage of using distributed representations to beat state-of-the-art statistical language models (smoothed n-grams). • Proposed in 2003, it is a feed-forward neural network with one hidden layer that predicts the next word in a sequence. It is called the Neural Probabilistic Language Model. • Output of the model: $f(w_t, w_{t-1}, \dots, w_{t-n+1})$ • Probability: $p(w_t \mid w_{t-1}, \dots, w_{t-n+1})$ • The general building blocks of this model are still found in all current neural language and word embedding models. These are: • Embedding layer: a layer that generates word embeddings by multiplying an index (one-hot) vector with a word embedding matrix; • Intermediate layer(s): one or more layers that produce an intermediate representation of the input, e.g. a fully-connected layer that applies a non-linearity to the concatenation of the word embeddings of the n−1 previous words; • Softmax layer: the final layer, which produces a probability distribution over the words in the vocabulary V. Ref: https://www.iro.umontreal.ca/~bengioy/yoshua_en/research.html
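The three building blocks can be sketched as a forward pass in plain Python. This is a simplified illustration, not Bengio et al.'s exact model. The vocabulary, the sizes (embedding size 4, trigram context, 8 hidden units) and the random weights are assumptions, and the optional direct input-to-output connections of the original model are omitted. Looking up row `C[index]` is equivalent to multiplying a one-hot index vector by the embedding matrix.

```python
import math
import random

rng = random.Random(0)

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def softmax(z):
    top = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

vocab = ["<s>", "the", "cat", "sat", "on", "mat"]  # hypothetical vocabulary V
V, m, n, hidden = len(vocab), 4, 3, 8              # n-gram order n: n-1 = 2 context words

C = rand_matrix(V, m)                 # embedding matrix: one row per word
H = rand_matrix(hidden, (n - 1) * m)  # intermediate (hidden) layer weights
d = [0.0] * hidden                    # hidden bias
U = rand_matrix(V, hidden)            # output weights
b = [0.0] * V                         # output bias

def next_word_distribution(context):
    """p(w_t | w_{t-1}, ..., w_{t-n+1}) for every w_t in the vocabulary."""
    # Embedding layer: look up each context word's row of C and concatenate.
    x = [value for word in context for value in C[vocab.index(word)]]
    # Intermediate layer: fully connected + tanh non-linearity.
    h = [math.tanh(a + bias) for a, bias in zip(matvec(H, x), d)]
    # Softmax layer: one score per vocabulary word, normalized to probabilities.
    return softmax([s + bias for s, bias in zip(matvec(U, h), b)])

probs = next_word_distribution(["the", "cat"])
print(len(probs), round(sum(probs), 6))
```

Training would adjust `C`, `H`, `d`, `U` and `b` jointly by maximizing the log-probability of observed next words, so the embedding matrix `C` is learned as a by-product.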

- 16. How to get Syntactic and Semantic Relationships using DL? • CBOW (Continuous Bag of Words): • The input to the model could be $w_{i-2}, w_{i-1}, w_{i+1}, w_{i+2}$, the words preceding and following the current word. The output of the neural network is $w_i$. Hence you can think of the task as "predicting the word given its context". • Note that the number of context words used depends on the window size setting. • Skip-gram: • The input to the model is $w_i$, and the output could be $w_{i-1}, w_{i-2}, w_{i+1}, w_{i+2}$. So the task here is "predicting the context given a word". Also, the context is not limited to the immediate neighbors: training instances can be created by skipping a constant number of words in the context, for example $w_{i-3}, w_{i-4}, w_{i+3}, w_{i+4}$, hence the name skip-gram. • Note that the window size determines how far forward and backward to look for context words to predict. • Examples: • From Jono's example, the sentence "Hi fred how was the pizza?" becomes: • Contiguous 3-grams: {"Hi fred how", "fred how was", "how was the", ...} • Skip-gram 1-skip 3-grams: {"Hi fred how", "Hi fred was", "fred how was", "fred how the", ...} • Notice that "Hi fred was" skips over "how". These illustrate the general meaning of CBOW and skip-gram; in this case, the skip-grams are 1-skip n-grams. (Figure panels: Syntactic Relation, Semantic Relation.)
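The two training setups, and the skip-gram example, can be sketched in Python. These are simplified assumptions for illustration. `cbow_pairs` and `skipgram_pairs` only build (input, output) training pairs and do not train embeddings; word2vec implementations add details such as sampled window sizes and negative sampling that are omitted here. `k_skip_n_grams` follows the usual definition in which up to k words in total may be skipped.

```python
from itertools import combinations

def cbow_pairs(tokens, window):
    """(context words, target word) pairs: predict the word given its context."""
    pairs = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
        pairs.append((context, target))
    return pairs

def skipgram_pairs(tokens, window):
    """(input word, context word) pairs: predict the context given a word."""
    pairs = []
    for i, word in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((word, tokens[j]))
    return pairs

def k_skip_n_grams(tokens, n, k):
    """All n-grams that skip at most k words in total."""
    grams = set()
    for start in range(len(tokens)):
        # The remaining n-1 words must come from the next n-1+k positions.
        rest = range(start + 1, min(len(tokens), start + n + k))
        for idx in combinations(rest, n - 1):
            grams.add(" ".join(tokens[i] for i in (start,) + idx))
    return grams

tokens = "Hi fred how was the pizza".split()
print(cbow_pairs(tokens, 2)[2])      # (['Hi', 'fred', 'was', 'the'], 'how')
print(skipgram_pairs(tokens, 1)[:3])  # [('Hi', 'fred'), ('fred', 'Hi'), ('fred', 'how')]
print(sorted(k_skip_n_grams(tokens, 3, 1)))
```

With `k=0`, `k_skip_n_grams` returns the ordinary contiguous 3-grams; with `k=1` it also yields gapped ones such as "Hi fred was" and "fred how the".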

- 17. HOW ARE RNNS USED FOR NLP?

- 18. What is a Recurrent Neural Network (RNN)? • A recurrent neural network (RNN) is a neural network model proposed in the 1980s for modelling time series. • The structure of the network is similar to a feed-forward neural network, with the distinction that it has a recurrent hidden state whose activation at each time step depends on that of the previous time step (a cycle). • The time recurrence is introduced by relating the hidden-layer activity $h_t$ to its past hidden-layer activity $h_{t-1}$, e.g. $h_t = \sigma(W x_t + U h_{t-1} + b)$. • This dependence is nonlinear because of the logistic function $\sigma$.
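The recurrence can be sketched as a forward pass in plain Python, assuming the logistic form $h_t = \sigma(W x_t + U h_{t-1} + b)$ with hypothetical two-dimensional weights. The same `W`, `U` and `b` are reused at every time step; only the hidden state changes.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rnn_forward(inputs, W, U, b, h0):
    """Return hidden states h_1..h_T, with h_t = sigmoid(W x_t + U h_{t-1} + b)."""
    h = h0
    states = []
    for x in inputs:
        # The comprehension reads the previous h before it is replaced.
        h = [
            sigmoid(
                sum(w * xi for w, xi in zip(W[k], x))     # input contribution
                + sum(u * hj for u, hj in zip(U[k], h))   # recurrent contribution
                + b[k]
            )
            for k in range(len(b))
        ]
        states.append(h)
    return states

W = [[0.5, -0.3], [0.1, 0.8]]   # input-to-hidden weights (hypothetical values)
U = [[0.2, 0.0], [-0.4, 0.3]]   # hidden-to-hidden (recurrent) weights
b = [0.0, 0.1]                  # hidden bias
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

states = rnn_forward(inputs, W, U, b, h0=[0.0, 0.0])
for t, h in enumerate(states, 1):
    print(t, [round(v, 3) for v in h])
```

Because each state feeds the next, changing the first input also changes the last hidden state; this is how the network carries context along the sequence.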

- 19. What is a Recursive Neural Network? • A recursive neural network can be viewed as a generalization of a recurrent neural network: the network unfolded over a finite input is structured as a (usually binary) tree instead of the "flat" chain of a recurrent network. • Recursive neural networks are exceptionally useful for learning structured information. • Recursive neural networks are both: • architecturally complex • computationally expensive

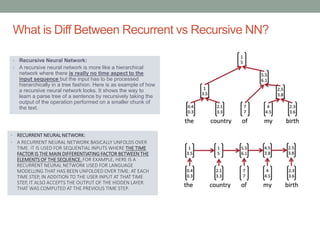
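A recursive network applies one shared composition function at every internal node of a tree. The sketch below assumes the common form $p = \tanh(W[c_1; c_2] + b)$ and a hand-supplied binary parse tree. The word vectors and weights are made-up values, and learning the tree structure itself (as in the parsing use case) is not shown.

```python
import math

def compose(left, right, W, b):
    """Parent vector p = tanh(W [left; right] + b), shared by every tree node."""
    child = left + right  # concatenation of the two child vectors
    return [math.tanh(sum(w * c for w, c in zip(row, child)) + bk) for row, bk in zip(W, b)]

def encode(tree, embeddings, W, b):
    """Recursively encode a binary tree given as nested 2-tuples of words."""
    if isinstance(tree, str):
        return embeddings[tree]  # leaf: the word vector itself
    left, right = tree
    return compose(encode(left, embeddings, W, b), encode(right, embeddings, W, b), W, b)

# Hypothetical 2-d word vectors and composition weights.
embeddings = {
    "the": [0.1, 0.3], "movie": [0.4, -0.2],
    "was": [0.0, 0.2], "horrible": [-0.7, -0.5],
}
W = [[0.5, -0.1, 0.3, 0.2], [-0.2, 0.4, 0.1, 0.6]]
b = [0.0, 0.0]

tree = (("the", "movie"), ("was", "horrible"))
print([round(v, 3) for v in encode(tree, embeddings, W, b)])
```

Every phrase ("the movie", "was horrible") gets a vector of the same size as a word, so the same `compose` can keep combining them up to the sentence level.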

- 20. What is the Difference Between Recurrent and Recursive NNs? • Recursive neural network: • A recursive neural network is more like a hierarchical network: there is no time aspect to the input sequence, but the input has to be processed hierarchically, in a tree fashion. The figure shows an example of a recursive neural network that learns the parse tree of a sentence by recursively taking the output of the operation performed on a smaller chunk of the text. • Recurrent neural network: • A recurrent neural network unfolds over time. It is used for sequential inputs where time is the main differentiating factor between the elements of the sequence. For example, the figure shows a recurrent neural network for language modelling unfolded over time. At each time step, in addition to the input at that time step, it also accepts the output of the hidden layer computed at the previous time step.

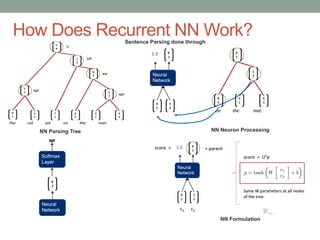

- 21. How Does a Recurrent NN Work? (Figure panels: NN formulation, NN neuron processing, NN parsing tree, sentence parsing.)

- 22. How does a Recurrent NN Work? • Character-Level Modeling Through an RNN: • Objective: train an RNN to predict the next character in a given word. 1. Word to be predicted: “hello” 2. Character vocabulary = [h, e, l, o] • Training the model: 1. “e” should be likely given the context “h”, 2. “l” should be likely given the context “he”, 3. “l” should also be likely given the context “hel”, and finally 4. “o” should be likely given the context “hell”. (Figure panel: Word Level Modeling Through RNN.)
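The training targets on this slide can be sketched as follows, assuming one-hot character encoding over the four-character vocabulary. Each character of “hello” is paired with the character that follows it. `sequence_loss` is a hypothetical helper for the cross-entropy an RNN would minimize, given its predicted next-character probabilities.

```python
import math

vocab = ["h", "e", "l", "o"]  # character vocabulary from the slide

def one_hot(ch):
    vec = [0] * len(vocab)
    vec[vocab.index(ch)] = 1
    return vec

word = "hello"
inputs = [one_hot(c) for c in word[:-1]]        # h, e, l, l
targets = [vocab.index(c) for c in word[1:]]    # e, l, l, o as vocabulary indices
contexts = [(word[:i + 1], word[i + 1]) for i in range(len(word) - 1)]

def sequence_loss(prob_rows, targets):
    """Cross-entropy: minus the summed log-probability of each correct next character."""
    return -sum(math.log(row[t]) for row, t in zip(prob_rows, targets))

print(contexts)  # [('h', 'e'), ('he', 'l'), ('hel', 'l'), ('hell', 'o')]
print(targets)   # [1, 2, 2, 3]
print(round(sequence_loss([[0.25] * 4] * 4, targets), 3))
```

An untrained model that predicts uniformly over the 4 characters scores $4 \ln 4 \approx 5.545$; training lowers this by making each correct next character more likely in its context.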

- 23. What are the Different Topologies for Recurrent NNs? (Figure panels: a common neural network, e.g. a feed-forward network; prediction of future states based on a single observation; machine translation; sentiment classification; simultaneous interpretation.)

- 24. HOW ARE CNNS USED FOR NLP?

- 25. What is a CNN? (Biologically inspired) • Mimics the neural processing of the biological brain in order to analyze given data. • Essentially, CNNs are neural networks that use convolution in place of general matrix multiplication in at least one of their layers. • Major features of a CNN: • Local receptive fields • Shared weights • Spatial or temporal sub-sampling • Consists of three major parts: • Convolution • Pooling • Fully connected NN

- 26. How Does a CNN Work? (Figure: Input Layer → Convolution Layer → Pooling Layer → Fully Connected Layer) • Convolution layer: its purpose is to represent the data from different views. To do this, it applies a kernel/filter to the input layer. Its hyperparameters include: • Stride size, which decides how far the filter moves over the input layer at each step. • Padding: convolution with zero padding is called wide convolution, and convolution without it is called narrow convolution. • Pooling layer: its main purpose is to turn the output matrix of the convolution layer into a fixed-dimension output for the next layer's classification task. Pooling layers subsample their input to simplify the information in the output of the convolutional layer (max pooling, which is non-linear, or average pooling). • Fully connected layer: its main purpose is to provide the classification layer, using a fully connected neural network.

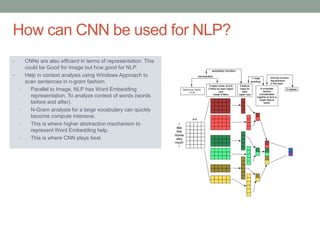
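Stride, padding and pooling can be sketched in one dimension. For brevity each input position is a single number here; in NLP each position would be a word vector and the filter would span whole vectors. The sequence and the all-ones filter are illustrative assumptions. As in most deep learning libraries, the sketch computes cross-correlation (the filter is not flipped).

```python
def conv1d(seq, kernel, stride=1, padding=0):
    """1-D convolution over a list of numbers, with zero padding on both ends."""
    padded = [0.0] * padding + list(seq) + [0.0] * padding
    k = len(kernel)
    return [
        sum(w * x for w, x in zip(kernel, padded[i:i + k]))
        for i in range(0, len(padded) - k + 1, stride)
    ]

def output_length(n, k, stride=1, padding=0):
    """floor((n + 2p - k) / s) + 1 outputs for input length n and filter size k."""
    return (n + 2 * padding - k) // stride + 1

def max_pool(values):
    """Max pooling over the whole feature map: one number per filter."""
    return max(values)

seq = [1.0, 3.0, 2.0, 5.0, 4.0]
kernel = [1.0, 1.0, 1.0]

narrow = conv1d(seq, kernel)                          # no padding
wide = conv1d(seq, kernel, padding=len(kernel) - 1)   # zero padding of k-1 on each side
strided = conv1d(seq, kernel, stride=2)               # filter jumps 2 positions

print(narrow)    # [6.0, 10.0, 11.0]
print(wide)      # [1.0, 4.0, 6.0, 10.0, 11.0, 9.0, 4.0]
print(strided)   # [6.0, 11.0]
print(max_pool(narrow), max_pool(wide))
```

Narrow convolution shrinks the sequence, and wide convolution (padding $k-1$) grows it to $n + k - 1$. Max pooling over a whole feature map returns one number per filter whatever the sentence length, which is how pooling yields a fixed-size input for the classifier.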

- 27. How can CNN be used for NLP? • CNNs are efficient in terms of representation. This works well for images, but how well does it work for NLP? • CNNs help in context analysis by using a window approach that scans sentences in an n-gram fashion. • Parallel to pixels in images, NLP has word embedding representations, used to analyze the context of words (the words before and after). • N-gram analysis over a large vocabulary can quickly become compute-intensive. • This is where a higher-level abstraction for representing word embeddings helps. • This is where CNNs play best.

- 28. How can CNN be used for a Sentiment Analysis Task? (Figure: the padded input "PAD The movie was horrible PAD" flows through an input layer of word vectors; a convolution layer with a single filter (window size n = 3, weight matrix, bias); a pooling layer that captures the most important activation; and a softmax classification layer whose output is Negative / Positive.)
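The pipeline in this figure can be sketched end to end in plain Python. All numbers below (2-dimensional word vectors, filter weights, bias, and class weights) are hypothetical and are not the values from the slide; the tanh non-linearity after the convolution is also an assumption. The filter scores every 3-word window, max pooling keeps the strongest activation, and a softmax turns it into Negative / Positive probabilities.

```python
import math

tokens = ["PAD", "The", "movie", "was", "horrible", "PAD"]
# Hypothetical 2-d word vectors (not taken from the slide).
vectors = {
    "PAD": [0.0, 0.0], "The": [0.1, 0.2], "movie": [0.3, -0.1],
    "was": [0.0, 0.1], "horrible": [-0.9, -0.6],
}
window = 3
filter_weights = [0.2, 0.1, -0.3, 0.4, -0.8, -0.5]  # one weight per value in a 3-word window
filter_bias = 0.1

def feature_map(tokens):
    """Slide one filter over every 3-word window: tanh(w . [x_i; x_i+1; x_i+2] + b)."""
    out = []
    for i in range(len(tokens) - window + 1):
        x = [v for tok in tokens[i:i + window] for v in vectors[tok]]
        out.append(math.tanh(sum(w * xi for w, xi in zip(filter_weights, x)) + filter_bias))
    return out

def softmax(z):
    top = max(z)
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

c = feature_map(tokens)   # one activation per window
pooled = max(c)           # max pooling keeps the most important activation
# Classification layer: one weight and bias per class (Negative, Positive), hypothetical.
class_w, class_b = [1.5, -1.5], [0.0, 0.0]
probs = softmax([w * pooled + bias for w, bias in zip(class_w, class_b)])
print([round(v, 3) for v in c], round(pooled, 3), [round(p, 3) for p in probs])
```

With these made-up values the window "movie was horrible" gives the strongest activation, so the pooled feature pushes the classifier toward Negative. In a trained model the vectors, filter and class weights would all be learned.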

- 29. What are the Further, Deeper Aspects of DL for NLP? • Seq2Seq NNs for NLP • Encoder & decoder • Memory • LSTM & RNN for NLP • Attention for NLP
