Explainability for Natural Language Processing

- 1. Explainability for Natural Language Processing 1 IBM Research – Almaden 2 Pennsylvania State University Tutorial@AACL-IJCNLP 2020 2 Shipi Dhanorkar 1 Lucian Popa 1 Yunyao Li 1 Kun Qian 1 Christine T. Wolf 1 Anbang Xu 1

- 2. Outline of this Tutorial • PART I - Introduction • PART II - Current State of XAI Research for NLP • PART III – Explainability and Case Study • PART IV - Open Challenges & Concluding Remarks 2

- 3. 3 PART I - Introduction Yunyao Li

- 4. 4 AI vs. NLP source: https://becominghuman.ai/alternative-nlp-method-9f94165802ed

- 5. AI is Becoming Increasingly Widely Adopted

- 6. 6 AI Life Cycle Business Understanding Data Acquisition & Preparation Model Development Model Validation Deployment Monitor & Optimize

- 7. AN ENGINEERING PERSPECTIVE Why Explainability?

- 8. Why Explainability: Model Development • Workflow: Feature Engineering (e.g. POS, LM), Model Selection/Refinement, Model Training, Model Evaluation, Error Analysis • Error Analysis, e.g. “$100 is due at signing” is not classified as <Obligation> • Root Cause Identification, e.g. <Currency> is not considered in the model • Model Improvement, e.g. include <Currency> as a feature or introduce entity-aware attention • Carried out by AI engineers / data scientists Source: ModelLens: An Interactive System to Support the Model Improvement Practices of Data Science Teams. CSCW’2019

- 9. Why Explainability: Monitor & Optimize • Workflow: User Feedback (implicit/explicit), Feedback Analysis, Model Improvement • User Feedback, e.g. clauses related to trademark should not be classified as <IP> • Feedback Analysis, e.g. trademark-related clauses are considered part of the <IP> category by the out-of-box model • Model Improvement, e.g. create a customized model to distinguish trademark-related clauses from other IP-related clauses • Carried out by AI engineers Source: Role of AI in Enterprise Application https://wp.sigmod.org/?p=2577

- 10. A PRACTICAL PERSPECTIVE Why Explainability?

- 12. Needs for Trust in AI, Needs for Explainability. What does it take? - Being able to say with certainty how AI reaches decisions - Being mindful of how it is brought into the workplace.

- 13. Why Explainability: Business Understanding. When Explainability Is Not Needed • No significant consequences for unacceptable results. E.g., ads, search, movie recommendations • Sufficiently well-studied and validated in real applications that we trust the system’s decision, even if it is not perfect. E.g., postal code sorting, airborne collision avoidance systems Source: Men Also Like Shopping: Reducing Gender Bias Amplification using Corpus-level Constraints. Jieyu Zhao, Tianlu Wang, Mark Yatskar, Vicente Ordonez, Kai-Wei Chang. EMNLP’2017

- 14. Regulations Credit: Gade, Geyik, Kenthapadi, Mithal, Taly. Explainable AI in Industry, WWW’2020 • General Data Protection Regulation (GDPR): Article 22 empowers individuals with the right to demand an explanation of how an automated system made a decision that affects them. • Algorithmic Accountability Act 2019: Requires companies to provide an assessment of the risks posed by the automated decision system to privacy or security, and of the risks that contribute to inaccurate, unfair, biased, or discriminatory decisions impacting consumers. • California Consumer Privacy Act: Requires companies to rethink their approach to capturing, storing, and sharing personal data to align with the new requirements by January 1, 2020. • Washington Bill 1655: Establishes guidelines for the use of automated decision systems to protect consumers, improve transparency, and create more market predictability. • Massachusetts Bill H.2701: Establishes a commission on automated decision-making, transparency, fairness, and individual rights. • Illinois House Bill 3415: States that predictive data analytics determining creditworthiness or hiring decisions may not include information that correlates with the applicant's race or zip code.

- 16. Why Explainability: Data • Datasets may contain significant bias • Models can further amplify existing bias • Example (agent role in cooking images): training data is 33% man / 67% woman; model prediction is 16% man (↓17%) / 84% woman (↑17%) Source: Men Also Like Shopping: Reducing Gender Bias Amplification using Corpus-level Constraints. Jieyu Zhao, Tianlu Wang, Mark Yatskar, Vicente Ordonez, Kai-Wei Chang. EMNLP’2017
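
The amplification figures on this slide are plain percentage-point differences between the training distribution and the model's predictions. A minimal sketch of that arithmetic follows; the function name `amplification` is illustrative and not taken from the cited paper.

```python
# Shares of "man" vs. "woman" as the agent in cooking images, as quoted on the slide.
training = {"man": 0.33, "woman": 0.67}
predicted = {"man": 0.16, "woman": 0.84}

def amplification(train_share, pred_share):
    """Change in share, in percentage points, from training data to predictions."""
    return {k: round((pred_share[k] - train_share[k]) * 100) for k in train_share}

delta = amplification(training, predicted)
print(delta)
```

A positive value means the model over-predicts that group relative to the data it was trained on.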

- 17. 17 Why Explainability: Data Business Understanding Data Acquisition & Preparation Model Development Model Validation Deployment Monitor & Optimize • Explicitly capture measures to drive the creation of better, more inclusive algorithms. Source: https://datanutrition.org More: Data Statements for Natural Language Processing: Toward Mitigating System Bias and Enabling Better Science. Emily M. Bender, Batya Friedman TACL’2018

- 18. Why Explainability: Model Validation. A core element of model risk management (MRM) • Verify models are performing as intended • Ensure models are used as intended. Example: Defective Model Source: https://dealbook.nytimes.com/2012/08/02/knight-capital-says-trading-mishap-cost-it-440-million/ https://www.mckinsey.com/business-functions/risk/our-insights/the-evolution-of-model-risk-management

- 19. Why Explainability: Model Validation. A core element of model risk management (MRM) • Verify models are performing as intended • Ensure models are used as intended. Example: Defective Model Source: https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G

- 20. Why Explainability: Model Validation. A core element of model risk management (MRM) • Verify models are performing as intended • Ensure models are used as intended. Example: Incorrect Use of Model Source: https://www.theguardian.com/business/2013/sep/19/jp-morgan-920m-fine-london-whale https://financetrainingcourse.com/education/2014/04/london-whale-casestudy-timeline/ https://www.reuters.com/article/us-jpmorgan-iksil/london-whale-took-big-bets-below-the-surface-idUSBRE84A12620120511

- 21. 22 Why Explainability: Model Validation Business Understanding Data Acquisition & Preparation Model Development Model Validation Deployment Monitor & Optimize A core element of model risk management (MRM) • Verify models are performing as intended • Ensure models are used as intended Source: https://www.digitaltrends.com/cars/tesla-autopilot-in-hot-seat-again-driver-misuse/ Incorrect Use of Model

- 22. Why Explainability: Model Deployment • Comply with regulatory and legal requirements • Foster trust with key stakeholders Source: https://algoritmeregister.amsterdam.nl/en/ai-register/

- 24. 26 Why Explainability: Monitor & Optimize Business Understanding Data Acquisition & Preparation Model Development Model Validation Deployment Monitor & Optimize • Carry out regular checks over real data to make sure that the systems are working and used as intended. • Establish KPIs and a quality assurance program to measure the continued effectiveness Source: https://www.huffpost.com/entry/microsoft-tay-racist-tweets_n_56f3e678e4b04c4c37615502

- 25. Why Explainability: Monitor & Optimize • Carry out regular checks over real data to make sure that the systems are working and used as intended. • Establish KPIs and a quality assurance program to measure the continued effectiveness Source: https://www.technologyreview.com/2020/10/08/1009845/a-gpt-3-bot-posted-comments-on-reddit-for-a-week-and-no-one-noticed/

- 27. 29 Explainable AI Credit: Gade, Geyik, Kenthapadi, Mithal, Taly. Explainable AI in Industry, WWW’2020

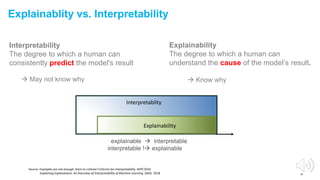

- 28. Explainability vs. Interpretability • Interpretability: the degree to which a human can consistently predict the model's result (may not know why) • Explainability: the degree to which a human can understand the cause of the model’s result (knows why) • explainable ⇒ interpretable, but interpretable ⇏ explainable Source: Examples are not enough, learn to criticize! Criticism for interpretability. NIPS’2016; Explaining Explanations: An Overview of Interpretability of Machine Learning. DSAA 2018

- 29. Example: Explainability for Computer Vision Source: “Why Should I Trust You?” Explaining the Predictions of Any Classifier. KDD’2016

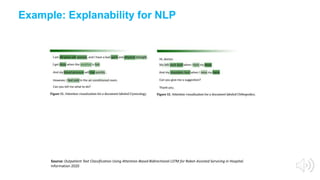

- 30. Example: Explainability for NLP Source: Outpatient Text Classification Using Attention-Based Bidirectional LSTM for Robot-Assisted Servicing in Hospital. Information 2020

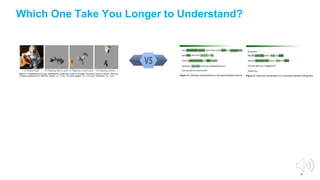

- 31. Which One Takes You Longer to Understand?

- 32. Which One Takes You Longer to Understand? Graph comprehension - encode the visual array and identify the important visual features - identify the quantitative facts or relations that those features represent - relate those quantitative relations to the graphic variables depicted Source: Why a diagram is (sometimes) worth ten thousand words. Jill H. Larkin, Herbert A. Simon. Cognitive Science. 1987; Graphs as Aids to Knowledge Construction: Signaling Techniques for Guiding the Process of Graph Comprehension. Journal of Educational Psychology. November 1999

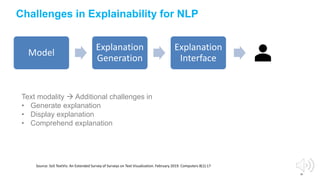

- 33. 35 Challenges in Explainability for NLP Model Explanation Generation Explanation Interface Text modality Additional challenges in • Generate explanation • Display explanation • Comprehend explanation Source: SoS TextVis: An Extended Survey of Surveys on Text Visualization. February 2019. Computers 8(1):17

- 34. 36 Business Understanding Data Acquisition & Preparation Model Development Model Validation Deployment Monitor & Optimize Business Users Product Managers Data Scientists Domain Experts Business Users Data Providers AI Engineers Data Scientists Product managers AI engineers Data scientists Product managers Model validators Business Users Designers Software Engineers Product Managers AI Engineers Business Users AI Operations Product Managers Model Validators Stakeholders Whether and how explanations should be offered to stakeholders with differing levels of expertise and interests.

- 35. PART II – Current State of Explainable AI for NLP

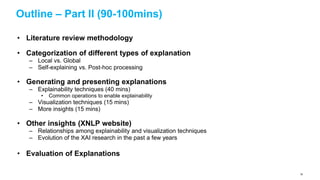

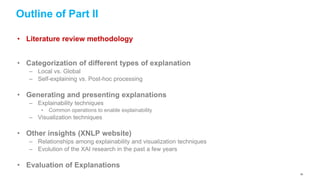

- 36. Outline – Part II (90-100 mins) • Literature review methodology • Categorization of different types of explanation – Local vs. Global – Self-explaining vs. Post-hoc processing • Generating and presenting explanations – Explainability techniques (40 mins) • Common operations to enable explainability – Visualization techniques (15 mins) – More insights (15 mins) • Other insights (XNLP website) – Relationships among explainability and visualization techniques – Evolution of XAI research in the past few years • Evaluation of Explanations

- 38. Literature Review Methodology • The purpose is NOT to provide an exhaustive list of papers • Major NLP conferences: ACL, EMNLP, NAACL, COLING – And a few representative general AI conferences (AAAI, IJCAI, etc.) • Years: 2013 – 2019 • What makes a candidate paper: – contains XAI keywords (lemmatized) in its title (e.g., explainable, interpretable, transparent, etc.) • Reviewing process – A couple of NLP researchers first go through all the candidate papers to make sure they are truly about XAI for NLP – Every paper is then thoroughly reviewed by at least 2 NLP researchers, covering: category of the explanation; explainability & visualization techniques; evaluation methodology – Additional reviewers are consulted in the case of disagreement

- 39. Statistics of Literature Review • 107 candidate papers – 52 of them are included 41

- 41. Task 1 - how to differentiate different explanations • Different taxonomies exist [Arya et al., 2019] [Arrieta et al., 2020]

- 42. Task 1 - how to differentiate different explanations • Two fundamental aspects that apply to any XAI problem – Is the explanation for an individual instance or for an AI model? Local explanation: individual instance. Global explanation: internal mechanism of a model – Is the explanation obtained directly from the prediction or does it require post-processing? Self-explaining: directly interpretable. Post-hoc: a second step is needed to get the explanation

- 43. Local Explanation • We understand only the reasons for a specific decision made by an AI model • Only the single prediction/decision is explained (Guidotti et al. 2018) • Example: machine translation, where the input-output alignment matrix changes based on instances [NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE. Bahdanau et al., ICLR 2015]

- 44. Global explanation • We understand the whole logic of a model • We are able to follow the entire reasoning leading to all different possible outcomes Img source: https://medium.com/@fenjiro/data-mining-for-banking-loan-approval-use-case-e7c2bc3ece3 Decision tree built for credit card application decision making 46

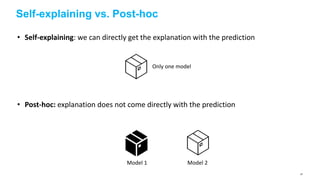

- 45. Self-explaining vs. Post-hoc • Self-explaining: we can directly get the explanation with the prediction • Post-hoc: explanation does not come directly with the prediction Only one model Model 1 Model 2 47

- 46. Task 1 - how to differentiate different explanations • Two fundamental, orthogonal aspects that apply to any XAI problem – Is the explanation for an individual instance or for an AI model? Local explanation: individual instance. Global explanation: internal mechanism of a model – Is the explanation obtained directly from the prediction or does it require post-processing? Self-explaining: directly interpretable. Post-hoc: a second step is needed to get the explanation

- 47. Categorization of Explanations Least favorable Most favorable 49

- 49. From model prediction to user understanding XAI model Explained predictions End user Are explanations directly sent from XAI models to users? 51

- 50. Two Aspects: Generation & Presentation • 1. Explanation Generation (AI engineers): XAI model → mathematical justifications • 2. Explanation Presentation (UX engineers): visualizing justifications → end user

- 51. What’s next: Explainability techniques • 1. Explanation Generation (AI engineers): XAI model → mathematical justifications • 2. Explanation Presentation (UX engineers): visualizing justifications → end user

- 52. What is Explainability • The techniques used to generate raw explanations – Ideally, created by AI engineers • Representative techniques: – Feature importance – Surrogate model – Example-driven – Provenance-based – Declarative induction • Common operations to enable explainability – First-derivative saliency – Layer-wise Relevance Propagation – Attention – LSTM gating signal – Explainability-aware architecture design 54

- 53. Explainability - Feature Importance Definition: The main idea of Feature Importance is to derive explanation by investigating the importance scores of different features used to output the final prediction. Can be built on different types of features, usually used in parallel [Ghaeini et al., 2018]: • Manual features from feature engineering [Voskarides et al., 2015] • Lexical features including words/tokens and N-grams [Godin et al., 2018] [Mullenbach et al., 2018] • Latent features learned by neural nets [Xie et al., 2017] [Bahdanau et al., 2015] [Li et al., 2015]

- 54. Feature Importance – Machine Translation [Bahdanau et al., 2015] [NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE. Bahdanau et al., ICLR 2015] A well-known example of feature importance is the Attention Mechanism first used in machine translation • Traditional encoder-decoder architecture for machine translation: only the last hidden state from the encoder is used Image courtesy: https://smerity.com/articles/2016/google_nmt_arch.html

- 55. Feature Importance – Machine Translation as An Example [NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE. Bahdanau et al., ICLR 2015] (Local & Self-explaining; input-output alignment) • The attention mechanism uses the latent features learned by the encoder – a weighted combination of the latent features • Weights become the importance scores of these latent features (which also correspond to the lexical features) for the decoded word/token • Similar approach in [Mullenbach et al., 2018] Image courtesy: https://smerity.com/articles/2016/google_nmt_arch.html
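
To make "weights become the importance scores" concrete, here is a minimal sketch of attention as a softmax-weighted combination of encoder states. It uses simple dot-product scoring rather than the additive scoring of Bahdanau et al., and the tokens, vectors, and function names are hypothetical values chosen for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, encoder_states):
    """Dot-product attention: returns (weights, context vector)."""
    scores = [sum(d * h for d, h in zip(decoder_state, hs)) for hs in encoder_states]
    weights = softmax(scores)
    dim = len(decoder_state)
    context = [sum(w * hs[i] for w, hs in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context

# Hypothetical source tokens and their encoder hidden states (latent features).
source_tokens = ["the", "cat", "sat"]
encoder_states = [[0.1, 0.0], [0.9, 0.4], [0.2, 0.8]]
decoder_state = [1.0, 0.2]  # hypothetical decoder state for one output token

weights, context = attend(decoder_state, encoder_states)
ranked = sorted(zip(source_tokens, weights), key=lambda pair: -pair[1])
print(ranked)
```

The row of `weights` for one decoded token is one row of the input-output alignment matrix, which is why the explanation is local and comes for free with the prediction.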

- 56. Feature Importance – token-level explanations [Thorne et al] Another example that uses attention to enable feature importance • NLP Task: Natural Language Inference (determining whether a “hypothesis” is true (entailment), false (contradiction), or undetermined (neutral) given a “premise”) • Premise: Children smiling and waving at a camera • Hypothesis: The kids are frowning • Architecture: GloVe embeddings → LSTM encodings of premise ($h_p$) and hypothesis ($h_h$) → attention • Multiple instance learning is used to get appropriate thresholds for the attention matrix

- 57. Explainability – Surrogate Model Model predictions are explained by learning a second, usually more explainable model, as a proxy. Definition A black-box model or a hard-to-explain model Input data Predictions without explanations or hard to explain. An interpretable model that approximates the black-box model Interpretable predictions [Liu et al. 2018] [Ribeiro et al. KDD] [Alvarez-melis and Jaakkola] [Sydorova et al, 2019] 59

- 58. Surrogate Model – LIME [Ribeiro et al. KDD] LIME: Local Interpretable Model-agnostic Explanations (Local & Post-hoc) • The blue/pink areas show the decision boundary of a complex black-box model 𝒇, which cannot be well approximated by a linear function • Instance to be explained • Sampled instances, weighted by their proximity to the target instance • An interpretable classifier with local fidelity

- 59. Surrogate Model – LIME [Ribeiro et al. KDD] Local & Post-hoc High flexibility – free to choose any interpretable surrogate models 61
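
The following is a simplified sketch in the spirit of LIME, not the reference implementation: it masks tokens at random, weights each perturbed sample by its proximity to the original instance, and fits a weighted linear surrogate whose coefficients act as token importances. The `black_box` scorer, the kernel, and all parameter values are assumptions made for illustration, and the instance is assumed to have no repeated tokens.

```python
import math
import random

def black_box(tokens):
    """Hypothetical opaque sentiment scorer standing in for the complex model f."""
    score = 0.0
    if "great" in tokens:
        score += 2.0
    if "boring" in tokens:
        score -= 2.0
    if "not" in tokens and "great" in tokens:
        score -= 3.0  # interaction that a single global linear model cannot capture
    return 1.0 / (1.0 + math.exp(-score))

def solve(a, b):
    """Solve a.x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def explain(tokens, predict, num_samples=500, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate over token presence/absence."""
    rng = random.Random(seed)
    n = len(tokens)
    rows, targets, weights = [], [], []
    for _ in range(num_samples):
        mask = [rng.randint(0, 1) for _ in range(n)]
        kept = [t for t, keep in zip(tokens, mask) if keep]
        distance = 1.0 - sum(mask) / n          # fraction of tokens removed
        rows.append(mask + [1])                 # last column is the intercept
        targets.append(predict(kept))
        weights.append(math.exp(-(distance ** 2) / kernel_width ** 2))
    d = n + 1
    # Weighted normal equations with a tiny ridge term for numerical stability.
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) + (1e-6 if i == j else 0.0)
             for j in range(d)] for i in range(d)]
    xtwy = [sum(w * r[i] * y for w, r, y in zip(weights, rows, targets)) for i in range(d)]
    coef = solve(xtwx, xtwy)
    return dict(zip(tokens, coef[:n]))

importance = explain(["the", "film", "was", "great"], black_box)
print(importance)
```

Because the surrogate only has to be faithful near one instance, any interpretable model could replace the linear fit here, which is the flexibility this slide refers to.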

- 60. Surrogate Model - Explaining Network Embedding [Liu et al. 2018] Global & Post-hoc A learned network embedding Induce a taxonomy from the network embedding 62

- 61. Surrogate Model - Explaining Network Embedding [Liu et al. 2018] Taxonomy induced from the 20NewsGroups network. Bold text shows the attributes of each cluster, followed by keywords from the documents belonging to that cluster.

- 62. Explainability – Example-driven • Similar to the idea of nearest neighbors [Dudani, 1976] • Have been applied to solve problems including – Text classification [Croce et al., 2019] – Question Answering [Abujabal et al., 2017] Such approaches explain the prediction of an input instance by identifying and presenting other instances, usually from available labeled data, that are semantically similar to the input instance. Definition 64

- 63. Explainability – Text Classification [Croce et al., 2019] “What is the capital of Germany?” refers to a Location. WHY? “What is the capital of California?” which also refers to a Location in the training data Because 65
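
A minimal sketch of the example-driven idea is to justify a prediction by retrieving the most similar labeled training instance. The code below swaps in plain bag-of-words cosine similarity, which is not the kernel-based method of Croce et al.; apart from the "capital of California" question quoted on the slide, the training examples are hypothetical.

```python
import math
from collections import Counter

def bow(text):
    """Lowercased bag-of-words vector with the question mark removed."""
    return Counter(text.lower().replace("?", "").split())

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Small labeled set; only the first entry comes from the slide.
training = [
    ("What is the capital of California?", "Location"),
    ("Who wrote Hamlet?", "Person"),
    ("How many legs does a spider have?", "Number"),
]

def explain_by_example(question, labeled):
    """Return the (text, label) pair most similar to the question."""
    q = bow(question)
    return max(labeled, key=lambda ex: cosine(q, bow(ex[0])))

neighbor, label = explain_by_example("What is the capital of Germany?", training)
print(f"Predicted {label} because it resembles: {neighbor}")
```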

- 64. Explainability – Text Classification [Croce et al., 2019] Layer-wise Relevance Propagation in Kernel-based Deep Architecture 66

- 65. Explainability – Text Classification [Croce et al., 2019] Need to understand the work Thoroughly 67

- 66. Explainability – Provenance-based Definition: Explanations are provided by illustrating (some of) the prediction derivation process • An intuitive and effective explainability technique when the final prediction is the result of a series of reasoning steps. • Several question answering papers adopt this approach. – [Abujabal et al., 2017] – Quint system for KBQA (knowledge-base question answering) – [Zhou et al., 2018] – Multi-relation KBQA – [Amini et al., 2019] – MathQA, will be discussed in the declarative induction part (as a representative example of program generation)

- 67. Provenance-Based: Quint System A. Abujabal et al. QUINT: Interpretable Question Answering over Knowledge Bases. EMNLP System Demonstration, 2017. • When QUINT answers a question, it shows the complete derivation sequence: how it understood the question: o Entity linking: entity mentions in text ⟼ actual KB entities o Relation linking: relations in text ⟼ KB predicates the SPARQL query used to retrieve the answer: “Eisleben” “Where was Martin Luther raised?” place_of_birth Martin_Luther “ … a German professor of theology, composer, priest, monk and …” desc Martin_Luther_King_Jr. desc Learned from query templates based on structurally similar instances (“Where was Obama educated?”) Eisleben Derivation provides insights towards reformulating the question (in case of errors) 69

- 68. Provenance-Based: Multi-Relation Question Answering M. Zhou et al. An Interpretable Reasoning Network for Multi- Relation Question Answering. COLING, 2018. IRN (Interpretable Reasoning Network): • Breaks down the KBQA problem into multi-hop reasoning steps. • Intermediate entities and relations are predicted in each step: Reasoning path: John_Hays_Hammond ⟶ e ⟶ a Child Profession Facilitates correction by human (e.g., disambiguation) • multiple answers in this example (3 different children) 70
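
As a rough illustration of why a reasoning path works as an explanation, the sketch below follows a fixed relation sequence over a toy knowledge base and returns every full derivation. In IRN the relations and intermediate entities are predicted by a neural network at each hop; here the relation sequence is given, and the entities `Child_A`, `Child_B`, and their professions are placeholders rather than real facts.

```python
# Hypothetical toy KB: (subject, relation) -> list of objects.
kb = {
    ("John_Hays_Hammond", "Child"): ["Child_A", "Child_B"],
    ("Child_A", "Profession"): ["Inventor"],
    ("Child_B", "Profession"): ["Diplomat"],
}

def multi_hop(start, relations):
    """Follow the relations hop by hop, keeping the full path as provenance."""
    paths = [[start]]
    for rel in relations:
        paths = [p + [rel, obj] for p in paths for obj in kb.get((p[-1], rel), [])]
    return paths

paths = multi_hop("John_Hays_Hammond", ["Child", "Profession"])
for p in paths:
    print(" -> ".join(p))
```

Each printed path exposes the intermediate entity, so a human can spot and correct a wrong hop (for example, a mis-disambiguated child).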

- 69. Explainability – Declarative Induction Definition: The main idea of Declarative Induction is to construct human-readable representations such as trees, programs, and rules, which can provide local or global explanations. Explanations are produced in a declarative specification language, as humans often would do. • Trees: [Jiang et al., ACL 2019] - express alternative ways of reasoning in multi-hop reading comprehension • Rules, examples of global explainability (although rules can also be used for local explanations): [A. Ebaid et al., ICDE Demo, 2019] - Bayesian rule lists to explain entity matching decisions; [Pezeshkpour et al, NAACL 2019] - rules for interpretability of link prediction; [Sen et al, EMNLP 2020] - linguistic expressions as sentence classification model; [Qian et al, CIKM 2017] - rules as entity matching model • (Specialized) Programs, examples of local explainability (per instance): [A. Amini et al., NAACL, 2019] – MathQA, math programs for explaining math word problems

- 70. Declarative Induction: Reasoning Trees for Multi-Hop Reading Comprehension Task: Explore and connect relevant information from multiple sentences/documents to answer a question. Y. Jiang et al. Explore, Propose, and Assemble: An Interpretable Model for Multi-Hop Reading Comprehension. ACL, 2019. Question subject: “Sulphur Spring” Question body: located in administrative territorial entity Reasoning trees play dual role: • Accumulate the information needed to produce the answer • Each root-to-leaf path represents a possible answer and its derivation • Final answer is aggregated across paths • Explain the answer via the tree 72

- 71. Declarative Induction: (Specialized) Program Generation Task: Understanding and solving math word problems A. Amini et al. MathQA: Towards Interpretable Math Word Problem Solving with Operation-Based Formalisms. NAACL-HLT, 2019. • Seq-2-Program translation produces a human- interpretable representation, for each math problem • Does not yet handle problems that need complicated or long chains of mathematical reasoning, or more powerful languages (logic, factorization, etc). • Also fits under provenance-based explainability: • provides answer, and how it was derived. Domain-specific program based on math operations • Representative example of a system that provides: • Local (“input/output”) explainability • But no global explanations or insights into how the model operates (still “black-box”) 73

- 72. Declarative Induction: Post-Hoc Explanation for Entity Resolution Black-box model ExplainER • Local explanations (e.g., how different features contribute to a single match prediction – based on LIME) • Global explanations Approximate the model with an interpretable representation (Bayesian Rule List -- BRL) • Representative examples Small, diverse set of <input, output> tuples to illustrate where the model is correct or wrong • Differential analysis Compare with other ER model (disagreements, compare performance) A. Ebaid et al. ExplainER: Entity Resolution Explanations. ICDE Demo, 2019. M. T. Ribeiro et al. Why should I trust you: Explaining predictions of any classifier. In SIGKDD, pp. 1135—1144, 2016. B. Letham et al. Interpretable classifiers using rules and Bayesian analysis. Annals of Applied Stat., 9(3), pp. 1350-1371, 2015. Can also be seen as a tree (of probabilistic IF-THEN rules) Entity resolution (aka ER, entity disambiguation, record linking or matching) 74

- 73. Declarative Induction: Rules for Link Prediction Task: Link prediction in a knowledge graph, with focus on robustness and explainability P. Pezeshkpour et al. Investigating Robustness and Interpretability of Link Prediction via Adversarial Modifications. NAACL 2019. Uses adversarial modifications (e.g., removing facts): 1. Identify the KG nodes/facts most likely to influence a link 2. Aggregate the individual explanations into an extracted set of rules operating at the level of entire KG: • Each rule represents a frequent pattern for predicting the target link Works on top of existing, black-box models for link prediction: DistMult: B. Yang et al. Embedding entities and relations for learning and inference in knowledge bases. ICLR 2015 ConvE: T. Dettmers et al. Convolutional 2d knowledge graph embeddings. AAAI 2018 Global explainability able to pinpoint common mistakes in the underlying model (wrong inference in ConvE) 75

- 74. • Previous approach for rule induction: – The result is a set of logical rules (Horn rules of length 2) that are built on existing predicates from the KG (e.g., Yago). • Other approaches also learn logical rules, but they are based on different vocabularies: – Next: o Logical rules built on linguistic predicates for Sentence Classification – [SystemER – see later under Explainability-Aware Design] o Logical rules built on similarity features for Entity Resolution 76

- 75. Declarative Induction: Linguistic Rules for Sentence Classification Notices will be transmitted electronically, by registered or certified mail, or courier Syntactic and Semantic NLP Operators (Explainable) Deep Learning ∃ verb ∈ 𝑆: verb.theme ∈ ThemeDict and verb.voice = passive and verb.tense = future ThemeDict communication notice Dictionaries Rules (predicates based on syntactic/semantic parsing) “Communication” Sen et al, Learning Explainable Linguistic Expressions with Neural Inductive Logic Programming for Sentence Classification. EMNLP 2020. • Representative example of system that uses a neural model to learn another model (the rules) • The rules are used for classification but also act as global explanation • Joint learning of both rules and domain-specific dictionaries • Explainable rules generalize better and are more conducive for interaction with the expert (see later – Visualization) Label … “Terms and Termination” … Task: Sentence classification in a domain (e.g., legal/contracts domain) 77
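
The rule on this slide can be read as an executable predicate over parsed verbs. The sketch below hand-writes the parse for the example sentence as a list of dictionaries; that representation and the function name are assumptions for illustration, not the data model used by Sen et al.

```python
# Dictionary learned jointly with the rule, as listed on the slide.
theme_dict = {"communication", "notice"}

def is_communication(verbs):
    """Exists verb in S: theme in ThemeDict, voice = passive, tense = future."""
    return any(
        v["theme"] in theme_dict and v["voice"] == "passive" and v["tense"] == "future"
        for v in verbs
    )

# Hypothetical parse of "Notices will be transmitted electronically, ...".
notice_sentence = [
    {"lemma": "transmit", "theme": "notice", "voice": "passive", "tense": "future"},
]
# Hypothetical parse of an unrelated sentence, e.g. "The tenant pays rent monthly."
other_sentence = [
    {"lemma": "pay", "theme": "rent", "voice": "active", "tense": "present"},
]

print(is_communication(notice_sentence), is_communication(other_sentence))
```

Because the classifier is the rule itself, reading the predicate gives a global explanation of every "Communication" label it assigns.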

- 76. Common Operations to enable explainability • A few commonly used operations that enable different explainability – First-derivative saliency – Layer-wise relevance propagation – Input perturbations – Attention – LSTM gating signal – Explainability-aware architecture design 78

- 77. Operations – First-derivative saliency • Mainly used for enabling Feature Importance – [Li et al., 2016 NAACL], [Aubakirova and Bansal, 2016] • Inspired by the NN visualization method proposed for computer vision – Originally proposed in (Simonyan et al. 2014) – How much each input unit contributes to the final decision of the classifier. Input embedding layer Output of neural network Gradients of the output wrt the input 79
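
A minimal sketch of first-derivative saliency scores each token by the magnitude of the gradient of the classifier output with respect to that token's embedding. To stay dependency-free, the gradient is approximated with central finite differences instead of backpropagation, and the classifier, weights, and embeddings are hypothetical.

```python
import math

W = [1.0, -0.5]  # hypothetical classifier weights

def classifier(embeddings):
    """Hypothetical differentiable scorer over a list of token embeddings."""
    s = sum(sum(w * x for w, x in zip(W, e)) ** 2 for e in embeddings)
    return 1.0 / (1.0 + math.exp(-s))

def saliency(embeddings, f, eps=1e-5):
    """Per-token sum of |d f / d e_ik|, estimated by central differences."""
    scores = []
    for i, e in enumerate(embeddings):
        total = 0.0
        for k in range(len(e)):
            plus = [row[:] for row in embeddings]
            minus = [row[:] for row in embeddings]
            plus[i][k] += eps
            minus[i][k] -= eps
            total += abs((f(plus) - f(minus)) / (2 * eps))
        scores.append(total)
    return scores

tokens = ["the", "film", "great"]
embeddings = [[0.1, 0.2], [0.2, 0.0], [0.9, -0.6]]  # hypothetical input embeddings
scores = saliency(embeddings, classifier)
top_token = tokens[scores.index(max(scores))]
print(list(zip(tokens, scores)), top_token)
```

With a real network the same quantity would come from one backward pass, and the per-token scores are what a saliency heatmap displays.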

- 78. Operations – First-derivative saliency Sentiment Analysis Naturally, it can be used to generate explanations presented by saliency-based visualization techniques [Li et al., 2016 NAACL] 80

- 79. Operations – Layer-wise relevance propagation • For a good overview, refer to [Montavon et al., 2019] • An operation that is specific to neural networks – Input can be images, videos, or text • Main idea is very similar to first-derivative saliency – Decomposing the prediction of a deep neural network computed over a sample, down to relevance scores for the single input dimensions of the sample [Montavon et al., 2019]

- 80. Operations – Layer-wise relevance propagation • Key difference to first-derivative saliency: – Assigns to each dimension (or feature) $x_d$ a relevance score $R_d^{(1)}$ such that $f(x) \approx \sum_d R_d^{(1)}$ [Croce et al] – $R_d^{(1)} > 0$: the feature is in favor of the prediction – $R_d^{(1)} < 0$: the feature is against the prediction • Propagate the relevance scores using purposely designed local propagation rules [Montavon et al., 2019] – Basic Rule (LRP-0) – Epsilon Rule (LRP-ϵ) – Gamma Rule (LRP-γ)
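
The conservation property, that the input relevance scores sum approximately to the network output, can be checked on a tiny example. The sketch below applies the epsilon rule with a very small stabilizer to a two-layer ReLU network without biases; the weights and input are hypothetical and the helper names are illustrative.

```python
def forward(x, w1, w2):
    """Two-layer ReLU network without biases; returns (hidden activations, output)."""
    hidden = [max(0.0, sum(x[i] * w1[i][j] for i in range(len(x)))) for j in range(len(w1[0]))]
    out = sum(hidden[j] * w2[j] for j in range(len(hidden)))
    return hidden, out

def lrp_layer(acts, weights, relevance_out, eps=1e-9):
    """Epsilon rule: R_i = sum_k a_i * w_ik / (z_k + eps * sign(z_k)) * R_k."""
    n_in, n_out = len(acts), len(relevance_out)
    z = [sum(acts[i] * weights[i][k] for i in range(n_in)) for k in range(n_out)]
    relevance_in = []
    for i in range(n_in):
        r = 0.0
        for k in range(n_out):
            denom = z[k] + (eps if z[k] >= 0 else -eps)
            r += acts[i] * weights[i][k] / denom * relevance_out[k]
        relevance_in.append(r)
    return relevance_in

# Hypothetical input and weights.
x = [1.0, 2.0, -1.0]
w1 = [[1.0, -1.0], [0.5, 0.5], [0.5, 1.0]]   # input -> hidden
w2 = [2.0, 1.0]                              # hidden -> scalar output

hidden, out = forward(x, w1, w2)
# Start with all relevance on the output, then propagate back layer by layer.
hidden_relevance = lrp_layer(hidden, [[w] for w in w2], [out])
relevance = lrp_layer(x, w1, hidden_relevance)
print(out, relevance)
```

In this example the third input receives negative relevance, meaning it argues against the prediction, while the scores still add up to the output.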

- 81. Operations – Input perturbation • Usually used to enable local explanation for a particular instance – Often combined with surrogate models • $X$: an input instance • $f$: a classifier, usually not interpretable • $f(X)$: prediction made by the classifier • $X_1, X_2, \ldots, X_m$: perturbed instances • $f(X_1), f(X_2), \ldots, f(X_m)$: predictions made by the original classifier • $g$: a surrogate classifier, usually interpretable • $g(X)$: explanation to humans 83
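
The pipeline on this slide (perturb the input, query the classifier, fit an interpretable surrogate) can be sketched as follows. Everything here is an assumption for illustration: `black_box` is a made-up stand-in for the opaque classifier, perturbations are exhaustive token deletions, and the surrogate weight of a token is the mean prediction with the token kept minus the mean with it dropped. On a full keep/drop design this difference equals the least-squares coefficient of a linear surrogate over the keep/drop indicators. LIME itself samples perturbations and weights them by proximity, which this sketch does not do.

```python
from itertools import product


def black_box(tokens):
    """Stand-in for an opaque classifier f: returns a positive-sentiment score."""
    s = 0.5
    if "great" in tokens:
        s += 0.4
    if "boring" in tokens:
        s -= 0.3
    return s


def explain(tokens, f):
    """Surrogate weight per token: mean f with the token kept minus mean with it dropped."""
    masks = list(product([0, 1], repeat=len(tokens)))  # every keep/drop pattern
    preds = [f([t for t, keep in zip(tokens, m) if keep]) for m in masks]
    weights = {}
    for j, tok in enumerate(tokens):
        kept = [p for m, p in zip(masks, preds) if m[j] == 1]
        dropped = [p for m, p in zip(masks, preds) if m[j] == 0]
        weights[tok] = sum(kept) / len(kept) - sum(dropped) / len(dropped)
    return weights


weights = explain(["a", "great", "but", "boring", "film"], black_box)
for tok, wt in weights.items():
    print(f"{tok:>7}: {wt:+.2f}")
```

Exhaustive masking grows as 2^n in the number of tokens, so it is only practical for very short inputs; sampling is the usual alternative.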

- 82. Operations – Input perturbation • Different ways to generate perturbations – Sampling method (e.g., [Ribeiro et al. KDD] - LIME) – Perturbing intermediate vector representation (e.g., [Alvarez-Melis and Jaakkola, 2017]) • Sampling method – Directly use examples within labeled data that are semantically similar • Perturbing intermediate vector representation – Specific to neural networks – Introduce minor perturbations to the vector representation of the input instance 84

- 83. Input perturbation – Sampling method Find examples that are both semantically “close” and “far away” from the instance in question. - Need to define a proximity metric - Does not generate new instances [Ribeiro et al. KDD] 85

- 84. Perturbing intermediate vector representation [Alvarez-Melis and Jaakkola, 2017] • Explain predictions of any black-box structured input – structured output model • Around a specific input-output pair • An explanation consists of groups of input-output tokens that are causally related • The dependencies (input-output) are inferred by querying the black-box model with perturbed inputs 86

- 85. Perturbing intermediate vector representation [Alvarez-Melis and Jaakkola, 2017] 87

- 86. Perturbing intermediate vector representation [Alvarez-Melis and Jaakkola, 2017] Typical autoencoder Variational autoencoder (generative model) Image source: https://towardsdatascience.com/understanding-variational-autoencoders-vaes-f70510919f73 88

- 87. Operations – Attention [Neural Machine Translation by Jointly Learning to Align and Translate. Bahdanau et al., ICLR 2015] Image courtesy: https://smerity.com/articles/2016/google_nmt_arch.html • The attention mechanism uses the latent features learned by the encoder – a weighted combination of the latent features • The weights become the importance scores of these latent features (which also correspond to the lexical features) for the decoded word/token • Naturally, it can be used to enable feature importance [Ghaeini et al., 2018] 89
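
How attention weights double as importance scores can be sketched in a few lines. Note the simplification: this uses dot-product scoring for brevity, whereas Bahdanau et al. use an additive (learned) scoring function; the encoder states and decoder query below are invented numbers.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, encoder_states):
    """Return (weights, context) for dot-product attention over the encoder states."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(wt * state[d] for wt, state in zip(weights, encoder_states))
        for d in range(dim)
    ]
    return weights, context


source = ["le", "chat", "noir"]
states = [[0.1, 0.0], [1.0, 0.2], [0.0, 1.0]]  # illustrative encoder outputs
query = [2.0, 0.0]                             # illustrative decoder state
weights, context = attend(query, states)

for tok, wt in zip(source, weights):
    print(f"{tok:>5}: {wt:.3f}")
```

The weights are non-negative and sum to one, so they can be shown directly as a row of an input-output alignment heatmap.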

- 88. Operations – LSTM gating signals • LSTM gating signals determine the flow of information – How LSTM reads the word sequences and how the information from different parts is captured and combined • Gating signals are computed as the partial derivative of the score of the final decision wrt each gating signal. [Ghaeini et al., 2018] Image source: https://blog.goodaudience.com/basic-understanding-of-lstm-539f3b013f1e 90

- 89. Operations – LSTM gating signals 91

- 90. Explainability-Aware: Rule Induction Architecture Works on top of existing, black-box models for link prediction, via a decoder architecture (i.e., from embeddings back to graph) P. Pezeshkpour et al. Investigating Robustness and Interpretability of Link Prediction via Adversarial Modifications. NAACL 2019. Knowledge graph facts: wasBornIn (s, o) Optimization problem in Rd: find the most influential vector zs’r’ whose removal would lead to removal of wasBornIn (s, o) Decode back the vector zs’r’ to actual KG: isLocatedIn (s’, o) Rules of length 2 are then extracted by identifying frequent patterns isAffiliatedTo (a, c) ∧ isLocatedIn (c, b) → wasBornIn (a,b) 92

- 91. Explainability-Aware Design: Rule-Based Active Learning corporation Zip City address Trader Joe’s 95118 San Jose 5353 Almaden Express Way company Zip City address Trader Joe’s 95110 San Jose 635 Coleman Ave Rule Learning Algorithm R.corporation = S.company and R.city = S.city R.corporation = S.company and R.city = S.city and R.zip = S.zip corporation Zip City address KFC 90036 LA 5925 W 3rd St. Not a match company Zip City address KFC 90004 LA 340 N Western Ave Qian et al, SystemER: A human-in-the-loop System for Explainable Entity Resolution. VLDB’17 Demo (also full paper in CIKM’17) Iteratively learns an ER model via active learning from few labels. • Rules: explainable representation for intermediate states and final model • Rules: used as mechanism to generate and explain the examples brought back for labeling Large datasets to match Example selection Domain expert labeling 93

- 92. U: D1 x D2 Matches (R1) + + + + + + + + + + + + + + + + + + + + + - - - - - SystemER: Example Selection (Likely False Positives) To refine R1 so that it becomes high-precision, need to find examples from the matches of R1 that are likely to be false positives Negative examples will enhance precision Candidate rule R1 94

- 93. U: D1 x D2 Matches (R1) To refine R1 so that it becomes high-precision, need to find examples from the matches of R1 that are likely to be false positives + + + + + + + + + + + + + + + + + + + + + Negative examples will enhance precision - - - - - SystemER: Example Selection (Likely False Negatives) + + Rule-Minus1 + + Rule-Minus2 + Rule-Minus3 Rule-Minus heuristic: explore beyond the current R1 to find what it misses (likely false negatives) R1: i.firstName = t.first AND i.city = t.city AND i.lastName = t.last i.firstName = t.first AND i.city = t.city AND i.lastName = t.last Rule-Minus1 i.firstName = t.first AND i.city = t.city AND i.lastName = t.last Rule-Minus3 i.firstName = t.first AND i.city = t.city AND i.lastName = t.last Rule-Minus2 Candidate rule R1 New positive examples will enhance recall At each step, examples are explained/visualized based on the predicates they satisfy or not satisfy 95
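
The Rule-Minus heuristic can be sketched as relaxing R1 one predicate at a time. The slide text does not preserve which predicate each Rule-Minus variant drops, so this sketch assumes each variant removes exactly one predicate; the record pairs and helper names are made up for illustration.

```python
# A rule is a conjunction of equality predicates (field in i, field in t).
R1 = [("firstName", "first"), ("city", "city"), ("lastName", "last")]


def matches(rule, i, t):
    """True if the pair (i, t) satisfies every predicate of the rule."""
    return all(i[a] == t[b] for a, b in rule)


def rule_minus(rule):
    """All relaxations of `rule` obtained by dropping exactly one predicate."""
    return [rule[:k] + rule[k + 1:] for k in range(len(rule))]


def likely_false_negatives(rule, pairs):
    """Pairs rejected by `rule` but accepted by at least one Rule-Minus relaxation."""
    relaxed = rule_minus(rule)
    return [
        (i, t) for i, t in pairs
        if not matches(rule, i, t) and any(matches(r, i, t) for r in relaxed)
    ]


pairs = [
    ({"firstName": "Ann", "city": "San Jose", "lastName": "Lee"},
     {"first": "Ann", "city": "San Jose", "last": "Lee"}),   # matches R1
    ({"firstName": "Ann", "city": "San Jose", "lastName": "Lee"},
     {"first": "Ann", "city": "SJ", "last": "Lee"}),         # only the city differs
    ({"firstName": "Bob", "city": "LA", "lastName": "Kim"},
     {"first": "Ann", "city": "San Jose", "last": "Lee"}),   # nothing agrees
]
candidates = likely_false_negatives(R1, pairs)
print(len(candidates))
```

Each candidate can be shown to the domain expert together with the predicates it satisfies and the one it fails, which is what makes the selected examples explainable.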

- 94. Part II – (b) Visualization Techniques 96

- 95. What’s next XAI model Mathematical justifications End user Visualizing justifications 1. Explanation Generation 2. Explanation Presentation UX engineersAI engineers Visualization Techniques 97

- 96. Visualization Techniques • Presenting raw explanations (mathematical justifications) to target user • Ideally, done by UX/UI engineers • Main visualization techniques: – Saliency – Raw declarative representations – Natural language explanation – Raw examples 98

- 97. Visualization – Saliency • Definition: saliency has been primarily used to visualize the importance scores of different types of elements in XAI learning systems • One of the most widely used visualization techniques • Not just in NLP, but also widely used in computer vision • Saliency is popular because it presents visually perceptive explanations • Can be easily understood by different types of users • A computer vision example 99

- 98. Visualization – Saliency Example Input-output alignment [Bahdanau et al., 2015] 100

- 99. Visualization – Saliency Example [Schwarzenberg et al., 2019] [Wallace et al., 2018] [Harbecke et al, 2018] [Garneau et al., 2018] 101

- 100. Visualization – Declarative Representations • Trees: [Jiang et al., ACL 2019] – Different paths in the tree explain the possible reasoning paths that lead to the answer • Rules: [Pezeshkpour et al, NAACL 2019] – Logic rules to explain link prediction (e.g., isConnected is often predicted because of transitivity rule, while hasChild is often predicted because of spouse rule) [Sen et al, EMNLP 2020] – Linguistic rules for sentence classification: • More than just visualization/explanation • Advanced HCI system for human-machine co- creation of the final model, built on top of rules (Next) isConnected(a,c) ∧ isConnected (c,b) ⟹ isConnected(a,b) isMarriedTo (a,c) ∧ hasChild (c,b) ⟹ hasChild(a,b) ∃ verb ∈ 𝑆: verb.theme ∈ ThemeDict and verb.voice = passive and verb.tense = future Machine-generated rules Human feedback 102

- 101. Linguistic Rules: Visualization and Customization by the Expert Passive-Voice Possibility-ModalClass Passive-Voice VerbBase-MatchesDictionary-SectionTitleClues VerbBase-MatchesDictionary-SectionTitleClues Theme-MatchesDictionary-NonAgentSectionTitleClues Theme-MatchesDictionary-NonAgentSectionTitleClues Manner-MatchesDictionary-MannerContextClues Manner-MatchesDictionary-MannerContextClues VerbBase-MatchesDictionary-SectionTitleClues NounPhrase-MatchesDictionary-SectionTitleClues NounPhrase-MatchesDictionary-SectionTitleClues Label being assigned Various ways of selecting/ranking rules Rule-specific performance metrics Y. Yang, E. Kandogan, Y. Li, W. S. Lasecki, P. Sen. HEIDL: Learning Linguistic Expressions with Deep Learning and Human-in-the-Loop. ACL, System Demonstration, 2019. Sentence classification rules learned by [Sen et al, Learning Explainable Linguistic Expressions with Neural Inductive Logic Programming for Sentence Classification. EMNLP 2020] 103

- 102. Visualization of examples per rule Passive-Voice Present-Tense Negative-Polarity Possibility-ModalClass Imperative-Mood Imperative-Mood VerbBase-MatchesDictionary-SectionTitleClues Manner-MatchesDictionary-MannerContextClues Manner-MatchesDictionary-MannerContextClues VerbBase-MatchesDictionary-VerbBases VerbBase-MatchesDictionary-VerbBases Linguistic Rules: Visualization and Customization by the Expert Y. Yang, E. Kandogan, Y. Li, W. S. Lasecki, P. Sen. HEIDL: Learning Linguistic Expressions with Deep Learning and Human-in-the-Loop. ACL, System Demonstration, 2019. 104

- 103. Playground mode allows to add/drop predicates Future-Tense Passive-Voice Future-Tense Passive-Voice NounPhrase-MatchesDictionary-SectionTitleClues NounPhrase-MatchesDictionary-SectionTitleClues Linguistic Rules: Visualization and Customization by the Expert Human-machine co-created models generalize better to unseen data • humans instill their expertise by extrapolating from what has been learned by automated algorithms Y. Yang, E. Kandogan, Y. Li, W. S. Lasecki, P. Sen. HEIDL: Learning Linguistic Expressions with Deep Learning and Human-in-the-Loop. ACL, System Demonstration, 2019. 105

- 104. Visualization – Raw Examples • Definition: explaining by presenting one (or some) instance(s) (usually from the training data) that is semantically similar to the instance that needs to be explained • Use existing knowledge to explain a new instance • Example (word sense disambiguation): “Jaguar is a large spotted predator of tropical America similar to the leopard.” [Panchenko et al., 2016] 106
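
Explaining by raw examples amounts to retrieving the most similar labeled instances. A minimal sketch, assuming instances are already embedded as vectors (the two-dimensional vectors and sentences below are invented) and that cosine similarity is the proximity measure:

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def explain_by_example(query_vec, training_set, k=1):
    """Return the k training instances most similar to the query, with their labels."""
    ranked = sorted(
        training_set, key=lambda ex: cosine(query_vec, ex["vec"]), reverse=True
    )
    return ranked[:k]


training_set = [
    {"text": "The jaguar stalked its prey.", "label": "animal", "vec": [0.9, 0.1]},
    {"text": "The leopard climbed a tree.", "label": "animal", "vec": [0.8, 0.3]},
    {"text": "The Jaguar accelerates quickly.", "label": "car", "vec": [0.1, 0.9]},
]

# Hypothetical embedding of the instance to be explained
nearest = explain_by_example([1.0, 0.2], training_set, k=1)
print(nearest[0]["text"], "->", nearest[0]["label"])
```

Raising `k` returns several supporting instances instead of one.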

- 105. Visualization – Raw Examples [Croce et al, 2018] Classification Sentences taken from the labeled data Explain with one instance (basic model) Explain with > 1 instance (multiplicative model) Explain with both positive and negative instance (contrastive model) 107

- 106. Visualization – Natural language explanation • Definition: the explanation is verbalized in human-comprehensible language • Can be generated by using sophisticated deep learning approaches – [Rajani et al., 2019] • Can be generated by simple template-based approaches – [Abujabal et al., 2017 - QUINT] – [Croce et al, 2018] – Many declarative representations can be naturally extended to natural language explanation 108

- 107. Visualization – Natural language explanation [Rajani et al., 2019] A language model trained with human generated explanations for commonsense reasoning Open-ended human explanations Explanation generated by CAGE 109

- 108. Visualization – Natural language explanation [Croce et al, 2018] Classification Sentences taken from the labeled data Explain with one instance (basic model) Explain with > 1 instance (multiplicative model) Explain with both positive and negative instance (contrastive model) 110

- 109. Visualization – Natural language explanation [Croce et al, 2018] Classification Template-based explanatory model 111

- 110. Outline of Part II • Literature review methodology • Categorization of different types of explanation – Local vs. Global – Self-explaining vs. Post-hoc processing • Generating and presenting explanations – Explainability techniques • Common operations to enable explainability – Visualization techniques • Other insights (XNLP website) – Relationships among explainability and visualization techniques – Evolution of the XAI research in the past few years • Evaluation of Explanations 112

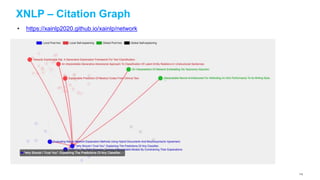

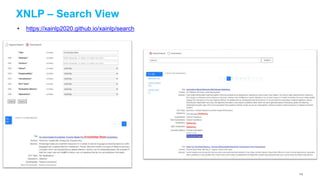

- 111. XNLP • We built an interactive website for exploring the domain • It’s self-contained • It provides different ways for exploring the domain – Cluster View (group papers based on explainability and visualization) – Tree view (categorize papers in a tree like structure) – Citation graphs (show the evolution of the field and also show influential works) – List view (list the set of papers in a table) – Search view (support keyword search and facet search) • Link to the website: https://xainlp2020.github.io/xainlp/home 113

- 112. XNLP - Tree View • https://xainlp2020.github.io/xainlp/clickabletree Local post-hoc (11) Local self-explaining (35) Global self-explaining (3) Global self-explaining (3) 114

- 113. XNLP – List View • https://xainlp2020.github.io/xainlp/table 115

- 114. XNLP – Cluster View • https://xainlp2020.github.io/xainlp/viz 116

- 115. XNLP – Citation Graph • https://xainlp2020.github.io/xainlp/network 117

- 117. XNLP – Search View • https://xainlp2020.github.io/xainlp/search 119

- 118. XNLP More advanced ways to explore the field can be found in our website https://xainlp2020.github.io/xainlp 120

- 119. Outline of Part II • Literature review methodology • Categorization of different types of explanation – Local vs. Global – Self-explaining vs. Post-hoc processing • Generating and presenting explanations – Explainability techniques • Common operations to enable explainability – Visualization techniques • Other insights (XNLP website) – Relationships among explainability and visualization techniques – Evolution of the XAI research in the past few years • Evaluation of Explanations 121

- 120. How to evaluate the generated explanations • An important aspect of XAI for NLP – Not just evaluating the accuracy of the predictions • Unsurprisingly, little agreement on how explanations should be evaluated – 32 out of 52 lack formal evaluations • Only a couple of evaluation metrics observed – Human ground truth data – Human evaluation 122

- 121. Evaluation – Comparison to ground truth • Idea: compare generated explanations to ground truth explanations • Metrics used – Precision/Recall/F1 (Carton et al., 2018) – BLEU (Ling et al., 2017; Rajani et al., 2019b) • Benefit – A quantitative way to measure explainability • Pitfalls – Quality of the ground truth data – Alternative valid explanations may exist • Some attempts to avoid these issues – Having multiple annotators (with inter-annotator agreement) – Evaluating at different granularities (e.g., token-wise vs. phrase-wise) 123
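
Token-wise precision, recall, and F1 against a ground-truth explanation can be computed as below. This is a generic sketch, not the exact protocol of the cited papers; the token indices are made-up data.

```python
def rationale_prf(predicted, gold):
    """Token-level precision, recall, F1 between predicted and gold rationale tokens."""
    predicted, gold = set(predicted), set(gold)
    overlap = len(predicted & gold)
    precision = overlap / len(predicted) if predicted else 0.0
    recall = overlap / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return precision, recall, f1


# Token positions highlighted by the model vs. by an annotator (made-up data)
p, r, f1 = rationale_prf(predicted=[2, 3, 7], gold=[3, 7, 8, 9])
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")
```

Evaluating phrase-wise instead of token-wise only changes what the sets contain (spans instead of positions).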

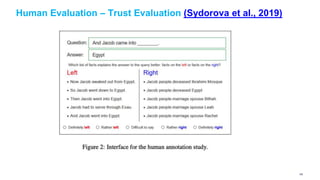

- 122. Evaluation – Human Evaluation • Idea: ask humans to evaluate the effectiveness of the generated explanations. • Benefit – Avoiding the assumption that there is only one good explanation that could serve as ground truth • Important aspects – It is important to have multiple annotators (with inter-annotator agreement, avoid subjectivity) • Observations from our literature review – Single-human evaluation (Mullenbach et al., 2018) – Multiple-human evaluation (Sydorova et al., 2019) – Rating the explanations of a single approach (Dong et al., 2019) – Comparing explanations of multiple techniques (Sydorova et al., 2019) • Very few well-established human evaluation strategies. 124
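
Inter-annotator agreement is commonly reported with a chance-corrected statistic. The slide does not name one, so Cohen's kappa for two annotators is used here as an assumption; the ratings are made-up data.

```python
from collections import Counter


def cohen_kappa(a, b):
    """Cohen's kappa for two annotators' labels over the same items."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Agreement expected by chance from each annotator's label distribution
    expected = sum(count_a[l] * count_b[l] for l in set(a) | set(b)) / (n * n)
    if expected == 1.0:  # both annotators always use the same single label
        return 1.0
    return (observed - expected) / (1 - expected)


# Two annotators rating six generated explanations (made-up data)
ann1 = ["good", "good", "bad", "good", "bad", "bad"]
ann2 = ["good", "bad", "bad", "good", "bad", "good"]
kappa = cohen_kappa(ann1, ann2)
print(f"kappa = {kappa:.3f}")
```

A kappa near 0 means agreement is no better than chance, which signals that the rating task is too subjective as posed.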

- 123. Human Evaluation – Trust Evaluation (Sydorova et al., 2019) • Borrowed the trust evaluation idea used in CV (Selvaraju et al. 2016) • A comparison approach – given two models, find out which one produces more intuitive explanations Model A Model B examples Examples that the two models predict the same labels Explanation 1 Explanation 2 Blind evaluation 125

- 124. Human Evaluation – Trust Evaluation (Sydorova et al., 2019) 126

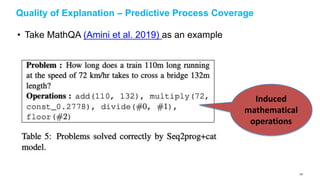

- 125. Quality of Explanation – Predictive Process Coverage • The predictive process runs from input to final prediction • Not all XAI approaches cover the whole process • Ideally, the explanation covers the whole process or most of the process • However, many of the approaches cover only a small part of the process • The end user has to fill in the gaps 127

- 126. Quality of Explanation – Predictive Process Coverage • Take MathQA (Amini et al. 2019) as an example Induced mathematical operations 128

- 127. Quality of Explanation – Predictive Process Coverage • Take MathQA (Amini et al. 2019) as an example 129

- 128. Fidelity • Often an issue with surrogate models • A surrogate model is flexible since it is model-agnostic • Local fidelity vs. global fidelity • Logic fidelity • A surrogate model may use a completely different reasoning mechanism • Is it really wise to use a linear model to explain a deep learning model? 130
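
One simple way to quantify fidelity is the fraction of instances on which the surrogate reproduces the black-box prediction. The sketch below uses two invented models over 2-d points to show how a linear surrogate can have perfect local fidelity and poor global fidelity; it is an illustration, not a metric defined in the tutorial.

```python
def fidelity(black_box, surrogate, instances):
    """Fraction of instances on which the surrogate predicts the black box's label."""
    agree = sum(black_box(x) == surrogate(x) for x in instances)
    return agree / len(instances)


def black_box(x):
    """Hypothetical non-linear model: positive when both coordinates share a sign."""
    return int(x[0] * x[1] > 0)


def surrogate(x):
    """Hypothetical linear surrogate fitted around the point (1, 1)."""
    return int(x[0] + x[1] > 0)


local = [(1.0, 1.2), (0.9, 1.1), (1.1, 0.8)]  # neighbourhood of (1, 1)
everywhere = local + [(-1.0, -1.0), (-2.0, -0.5), (2.0, -1.0)]

print(fidelity(black_box, surrogate, local))       # local fidelity
print(fidelity(black_box, surrogate, everywhere))  # global fidelity
```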

- 129. PART III – Explainability & Case Study

- 131. 133 Human-centered perspective on XAI Human-AI interaction guidelines (Amershi et al., 2019) Explanations in social sciences (Miller, 2019) Leveraging human reasoning processes to craft explanations (Wang et al., 2019) Explainability scenarios (Wolf, 2019) Broader ecosystem of actors (Liao et al., 2020) Explanations for human-AI collaboration during onboarding (Cai et al, 2019) Amershi, S., Weld, D., Vorvoreanu, M., Fourney, A., Nushi, B., Collisson, P., ... & Teevan, J. (2019, May). Guidelines for human-AI interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1-13). Miller, T. (2019). Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence, 267, 1-38. Wang, D., Yang, Q., Abdul, A., & Lim, B. Y. (2019, May). Designing theory-driven user-centric explainable AI. In Proceedings of the 2019 CHI conference on human factors in computing systems (pp. 1-15). Wolf, C. T. (2019, March). Explainability scenarios: towards scenario-based XAI design. In Proceedings of the 24th International Conference on Intelligent User Interfaces (pp. 252-257). Liao, Q. V., Gruen, D., & Miller, S. (2020, April). Questioning the AI: Informing Design Practices for Explainable AI User Experiences. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-15). Cai, C. J., Winter, S., Steiner, D., Wilcox, L., & Terry, M. (2019). " Hello AI": Uncovering the Onboarding Needs of Medical Practitioners for Human-AI Collaborative Decision-Making. Proceedings of the ACM on Human-computer Interaction, 3(CSCW), 1-24.

- 132. 134 Human-Centered Approach to Explainability User Study + Case Study

- 133. 135 Explainability in Practice RQ1: What is the nature of explainability concerns in industrial AI projects? RQ2: Over the course of a model’s pipeline, when do explanation needs arise?

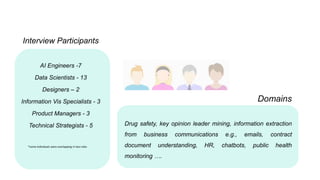

- 134. Domains Drug safety, key opinion leader mining, information extraction from business communications e.g., emails, contract document understanding, HR, chatbots, public health monitoring …. Interview Participants AI Engineers -7 Data Scientists - 13 Designers – 2 Information Vis Specialists - 3 Product Managers - 3 Technical Strategists - 5 *some individuals were overlapping in two roles

- 135. 138 Our approach Who touches the AI model? What are their informational needs? When do explanations get warranted?

- 136. 139 Business Understanding Data Acquisition & Preparation Model Development Model Validation Deployment Monitor & Optimize • Business Users: clients of the AI model • AI Engineers: train/fine-tune the model • AI Operations: gather important metrics on AI models • Data Providers: gatekeepers of public, private, and third-party data • Designers: develop the user experience of the AI model • Data Scientists: develop new models, design new algorithms • Domain Experts: subject matter experts in the business domain who help label data • Model Validators: debug, check whether the model continues to operate as intended, detect model drift • Product Managers: interface between business users and data scientists/AI engineers • Software Engineers: support AI model deployment

- 137. 140 Business Understanding Data Acquisition & Preparation Model Development Model Validation Deployment Monitor & Optimize Business Users Product Managers Data Scientists Domain Experts Business Users Data Providers AI Engineers Data Scientists Product managers AI engineers Data scientists Product managers Model validators Business Users Designers Software Engineers Product Managers AI Engineers Business Users AI Operations Product Managers Model Validators

- 138. 141 Explanations during model development Understanding AI models inner workings “Explanations can be useful to NLP researchers explore and come up with hypothesis about why their models are working well… If you know the layer and the head then you have all the information you need to remove its influence... by looking, you could say, oh this head at this layer is only causing adverse effects, kill it and retrain or you could tweak it perhaps in such a way to minimize bias (I-19, Data scientist) “Low-level details like hyper-parameters would be discussed for debugging or brainstorming. (I-12, Data Scientist)

- 139. 142 Explanations during model validation Details about data over which model is built “Domain experts want to know more about the public medical dataset that the model is trained on to gauge if it can adapt well to their proprietary data (I-4, Data Scientist) Model design at a high level “Initially we presented everything in the typical AI way (i.e., showing the diagram of the model). We even put equations but realized this will not work... After a few weeks, we started to only show examples and describing how the model works at a high level with these examples (I-4)

- 140. 143 Explanations during model validation “How do we know the model is safe to use? ... Users will ask questions about regulatory or compliance-related factors: Does the model identify this particular part of the GDPR law? (I-16, Product Manager) Ethical Considerations

- 141. 144 Explanations during model in-production Expectation mismatch “Data quality issues might arise resulting from expectation mismatch, but the list of recommendations must at least be as good as the manual process ... If they [the clients] come back with data quality issues ... we need to [provide] an explanation of what happened (I-5, Technical Strategist) “Model mistakes versus decision disagreements” (I-28, Technical Strategist) Explanation in service of business actionability “Is the feature it is pointing to something I can change in my business? If not, how does knowing that help me? (I-22, AI Engineer)

- 142. 145 AI Lifecycle Touchpoints Initial Model building Model validation during proof-of-concept Model in-production Audience (Whom does the AI model interface with) Model developers Data Scientists Product Managers Domain experts Business owners Business IT Operations Model developers Data Scientists | Technical Strategists Product managers | Design teams Business owners/users Business IT Operations Explainability Motivations (Information needs) - Peeking inside models to understand their inner workings - Improving model design (e.g., how should the model be retrained, re-tuned) - Selecting the right model - Characteristics of data (proprietary, public, training data) - Understanding model design - Ensuring ethical model development - Expectation mismatch - Augmenting business workflow and business actionability

- 143. Explainability in HEIDL HEIDL (Human-in-the-loop linguistic Expressions wIth Deep Learning) Explainability takes on two dimensions here: • models are fully explainable • users are involved in model co-creation with immediate feedback HEIDL video

- 144. 147 Design Implications of AI Explainability Balancing external stakeholders needs with their AI knowledge “We have to balance the information you are sharing about the AI underpinnings, it can overwhelm the user (I-21, UX Designer) Group collaboration persona describing distinct types of members “Loss of control making the support and maintenance of explainable models hard (I-8, Data Scientist) Simplicity versus complexity tradeoff “The design space for (explaining) models to end users is in a way more constrained ... you have to assume that you have to put in a very, very very shallow learning curve (I-27 HCI Researcher) Privacy “Explanatory features can reveal identities (e.g., easily inferring employee, department, etc.) (I- 24, HCI researcher)

- 148. 151 Future Work - User-Centered Design of AI Explainability Business Understanding Data & Preparation Model Development Model Validation Deployment Monitor & Optimize Image source: https://www.slideshare.net/agaszostek/history-and-future-of-human-computer-interaction-hci-and-interaction-design Business Users Product Managers AI Engineers Data Scientists End-Users

- 151. PART IV – Open Challenges & Concluding Remarks

- 152. Explainability for NLP is an Emerging Field • AI has become an important part of our daily lives • Explainability is becoming increasingly important • Explainability for NLP is still at an early stage

- 153. Challenge 1: Standardized Terminology Interpretability explainability transparency Directly interpretable Self-explaining Understandability Comprehensibility Black-box-ness White-box Gray-box …… … 156

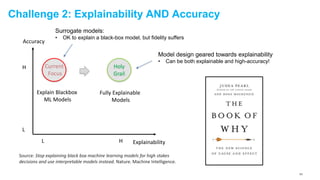

- 154. Challenge 2: Explainability AND Accuracy [Figure: Explainability (L to H) vs. Accuracy (L to H); labels: Current Focus, Holy Grail, Explain Blackbox ML Models, Fully Explainable Models] Source: Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence. • Surrogate models: OK to explain a black-box model, but fidelity suffers • Model design geared towards explainability: can be both explainable and high-accuracy! 157

- 155. Challenge 3: Stakeholder-Oriented Explainability Business Understanding Data & Preparation Model Development Model Validation Deployment Monitor & Optimize Business Users Product Managers AI Engineers Data Scientists End-Users 158

- 156. Challenge 3: Stakeholder-Oriented Explainability Statement of Work Company A Company B … Time is of essence of this Agreement. …. label = Risky Contains($sentence, $time) = False AI engineer Statement of Work Company A Company B … Time is of essence of this Agreement. …. … Risky. Need to define time frame to make this clause meaningful. Potential risk: If no time specified, courts will apply a "reasonable" time to perform. What is a "reasonable time" is a question of fact - so it will be up to a jury. legal professional Different stakeholders have different expectations and skills 159

- 157. Challenge 4: Evaluation Methodology & Metrics • Need more well-established evaluation methodologies • Need more fine-grained evaluation metrics – Coverage of the prediction – Fidelity • Take into account the stakeholders as well! 160

- 158. Path Forward • Explainability research for NLP is an interdisciplinary topic – Computational Linguistics – Machine learning – Human computer interaction • NLP community and HCI community can work closer in the future – Explainability research is essentially user-oriented – but most of the papers we reviewed did not have any form of user study – Get the low-hanging fruit from the HCI community This tutorial is on a hot area in NLP and takes an innovative approach by weaving in not only core technical research but also user-oriented research. I believe this tutorial has the potential to draw a reasonably sized crowd. – anonymous tutorial proposal reviewer “Furthermore, I really appreciate the range of perspectives (social sciences, HCI, and NLP) represented among the presenters of this tutorial.” – anonymous tutorial proposal reviewer NLP people appreciate the idea of bringing NLP and HCI people together 161

- 159. Thank You • Attend our other presentation at AACL 2020: “A Survey of the State of Explainable AI for Natural Language Processing” Session 9A 23:30-23:40 UTC+8 December 5, 2020 • We are an NLP research team at IBM Research – Almaden – We work on NLP, Database, Machine Learning, Explainable AI, HCI, etc. – Interested in collaborations? Just visit our website! http://ibm.biz/scalableknowledgeintelligence • Thank you very much for attending our tutorial! 162

![Task 1 - how to differentiate different explanations

• Different taxonomies exist

[Arya et al., 2019]

[Arrieta et al., 2020] 43](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-41-320.jpg)

![Local Explanation

• We understand only the reasons for a specific decision made by an AI model

• Only the single prediction/decision is explained

(Guidotti et al. 2018)

Machine translation

Input-output alignment matrix changes based on instances

[NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE. Bahdanau et al., ICLR 2015]

45](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-43-320.jpg)

![Explainability - Feature Importance

The main idea of Feature Importance is to derive explanation by investigating

the importance scores of different features used to output the final prediction.

Can be built on different types of features

Manual features from feature engineering

[Voskarides et al., 2015]

Lexical features including words/tokens and N-gram

[Godin et al., 2018]

[Mullenbach et al., 2018]

Latent features learned by neural nets

[Xie et al., 2017]

[Bahdanau et al., 2015]

[Li et al., 2015]

Definition

Usually used

in parallel

[Ghaeini et al., 2018]

55](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-53-320.jpg)

![Feature Importance – Machine Translation [Bahdanau et al., 2015]

[NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE. Bahdanau et al., ICLR 2015]

A well-known example of feature importance is the Attention Mechanism first used in machine translation

Image courtesy: https://smerity.com/articles/2016/google_nmt_arch.html

Traditional encoder-decoder architecture for machine translation

Only the last hidden state

from encoder is used

56](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-54-320.jpg)

![Feature Importance – Machine Translation as An Example

[NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE. Bahdanau et al., ICLR 2015]

Image courtesy: https://smerity.com/articles/2016/google_nmt_arch.html

Attention mechanism uses the latent features learned by the encoder

• a weighted combination of the latent features

Weights become the importance scores of these latent features (which also correspond to the lexical features) for the decoded word/token.

Similar approach in [Mullenbach et al., 2018]

Local & Self-explaining

Input-output alignment

57](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-55-320.jpg)
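
As a rough illustration of how attention weights can double as importance scores, the sketch below computes a softmax-weighted combination of encoder states. It assumes dot-product scoring for brevity (Bahdanau et al. use a small learned scoring network instead); the token list, vector sizes, and the function name `attention_importance` are hypothetical.

```python
import numpy as np

def softmax(scores):
    """Numerically stable softmax over a 1-D array."""
    exp = np.exp(scores - np.max(scores))
    return exp / exp.sum()

def attention_importance(encoder_states, decoder_state):
    """Return (weights, context) for one decoding step.

    encoder_states: (n_source_tokens, dim) latent features from the encoder.
    decoder_state:  (dim,) current decoder state.
    Dot-product scoring is an assumption made for this sketch.
    """
    scores = encoder_states @ decoder_state   # one score per source token
    weights = softmax(scores)                 # importance scores, sum to 1
    context = weights @ encoder_states        # weighted combination of latent features
    return weights, context

rng = np.random.default_rng(0)
source_tokens = ["the", "cat", "sat"]         # hypothetical source sentence
encoder_states = rng.normal(size=(len(source_tokens), 4))
decoder_state = rng.normal(size=4)

weights, context = attention_importance(encoder_states, decoder_state)
for token, weight in zip(source_tokens, weights):
    print(f"{token}: {weight:.3f}")
```

Each printed weight can be read as the contribution of one source token to the word being decoded, which is what the input-output alignment matrix visualizes across all decoding steps.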

![Feature Importance – token-level explanations [Thorne et al]

Another example that uses attention to enable feature importance

NLP Task: Natural Language Inference (determining whether a “hypothesis” is true (entailment), false (contradiction), or underdetermined (neutral) given a “premise”)

Premise: Children Smiling and Waving at a camera

Hypothesis: The kids are frowning

Figure components: GloVe, LSTM, $h_p$, $h_h$, Attention; tokens shown: The, kids, are, frowning

MULTIPLE INSTANCE LEARNING to get appropriate thresholds for the attention matrix

58](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-56-320.jpg)

![Explainability – Surrogate Model

Model predictions are explained by learning a second,

usually more explainable model, as a proxy.

Definition

A black-box model

or a hard-to-explain model

Input data Predictions without explanations or

hard to explain.

An interpretable model

that approximates the black-box model

Interpretable

predictions

[Liu et al. 2018]

[Ribeiro et al. KDD]

[Alvarez-Melis and Jaakkola]

[Sydorova et al, 2019]

59](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-57-320.jpg)

![Surrogate Model – LIME [Ribeiro et al. KDD]

The blue/pink areas show the decision function of a complex black-box model 𝒇, which cannot be well approximated by a linear function

Instance to be explained

Sampled instances,

weighted by the

proximity to the

target instance

An Interpretable classifier

with local fidelity

Local & Post-hoc

LIME: Local Interpretable Model-agnostic Explanations

60](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-58-320.jpg)
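
The idea on this slide can be sketched in a few lines: sample points around the instance, weight them by proximity, and fit a weighted linear model to the black-box outputs. This is a simplified continuous-feature analogue, not the LIME library itself (for text, LIME perturbs by removing words); `black_box`, `explain_locally`, the sampling scale, and the kernel width are all assumptions chosen for illustration.

```python
import numpy as np

def black_box(x):
    """Hypothetical nonlinear model: probability of the positive class."""
    return 1.0 / (1.0 + np.exp(-(x[:, 0] ** 2 - x[:, 1])))

def explain_locally(predict, instance, n_samples=500, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance`.

    Returns (intercept, coefficients); the coefficients are the local
    feature importances of the surrogate.
    """
    rng = np.random.default_rng(seed)
    # Sample instances in the neighborhood of the one being explained.
    samples = instance + rng.normal(scale=0.5, size=(n_samples, instance.size))
    targets = predict(samples)
    # Exponential kernel: closer samples get larger weights.
    distances = np.linalg.norm(samples - instance, axis=1)
    sample_weights = np.exp(-(distances ** 2) / kernel_width ** 2)
    # Weighted least squares via row scaling by sqrt(weight).
    design = np.hstack([np.ones((n_samples, 1)), samples - instance])
    root = np.sqrt(sample_weights)
    coef, *_ = np.linalg.lstsq(design * root[:, None], targets * root, rcond=None)
    return coef[0], coef[1:]

instance = np.array([2.0, 1.0])
intercept, coefficients = explain_locally(black_box, instance)
print("local intercept:", round(float(intercept), 3))
print("local feature weights:", np.round(coefficients, 3))
```

Near this instance the surrogate assigns a positive weight to the first feature and a negative weight to the second, matching the local slope of the black-box function even though no single linear model fits it globally.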

![Surrogate Model – LIME [Ribeiro et al. KDD]

Local & Post-hoc

High flexibility – free to choose any interpretable surrogate model

61](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-59-320.jpg)

![Surrogate Model - Explaining Network Embedding [Liu et al. 2018]

Global & Post-hoc

A learned

network embedding

Induce a taxonomy

from the network embedding

62](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-60-320.jpg)

![Surrogate Model - Explaining Network Embedding [Liu et al. 2018]

Induced from 20NewsGroups Network

Bold text shows the attributes of the cluster, followed by keywords from documents that belong to the cluster.

63](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-61-320.jpg)

![Explainability – Example-driven

• Similar to the idea of nearest neighbors [Dudani, 1976]

• Has been applied to solve problems including

– Text classification [Croce et al., 2019]

– Question Answering [Abujabal et al., 2017]

Such approaches explain the prediction of an input instance by identifying and presenting other instances,

usually from available labeled data, that are semantically similar to the input instance.

Definition

64](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-62-320.jpg)
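
A minimal sketch of the example-driven idea, assuming bag-of-words cosine similarity as a stand-in for semantic similarity (the cited systems use richer representations). The tiny labeled set and the helper names are hypothetical.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Lowercased token counts with the question mark stripped."""
    return Counter(text.lower().replace("?", "").split())

def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def explain_by_example(query, labeled_examples):
    """Return the labeled (text, label) pair most similar to the query."""
    query_vector = bag_of_words(query)
    return max(labeled_examples,
               key=lambda example: cosine(query_vector, bag_of_words(example[0])))

# Hypothetical labeled data standing in for a training set.
training_data = [
    ("What is the capital of California?", "Location"),
    ("Who wrote Hamlet?", "Person"),
    ("When did the war end?", "Date"),
]

neighbor_text, neighbor_label = explain_by_example(
    "What is the capital of Germany?", training_data)
print(f"Predicted {neighbor_label} because of the similar example: {neighbor_text}")
```

The retrieved neighbor serves as the explanation: the user sees a labeled instance that resembles the input and carries the same label.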

![Explainability – Text Classification [Croce et al., 2019]

“What is the capital of Germany?” refers to a Location. WHY?

Because “What is the capital of California?”, which also refers to a Location, is in the training data

65](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-63-320.jpg)

![Explainability – Text Classification [Croce et al., 2019]

Layer-wise Relevance Propagation in Kernel-based Deep Architecture

66](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-64-320.jpg)

![Explainability – Text Classification [Croce et al., 2019]

Need to understand the work

Thoroughly

67](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-65-320.jpg)

![Explainability – Provenance-based

• An intuitive and effective explainability technique when the final prediction is the result of

a series of reasoning steps.

• Several question answering papers adopt this approach.

– [Abujabal et al., 2017] – Quint system for KBQA (knowledge-base question answering)

– [Zhou et al., 2018] – Multi-relation KBQA

– [Amini et al., 2019] – MathQA, will be discussed in the declarative induction part (as a representative

example of program generation)

Definition

Explanations are provided by illustrating (some of) the prediction derivation process

68](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-66-320.jpg)

![Explainability – Declarative Induction

The main idea of Declarative Induction is to construct human-readable representations

such as trees, programs, and rules, which can provide local or global explanations.

Explanations are produced in a declarative specification language, as humans often would do.

Trees

[Jiang et al., ACL 2019] - express alternative ways of reasoning in multi-hop reading comprehension

Rules:

[A. Ebaid et al., ICDE Demo, 2019] - Bayesian rule lists to explain entity matching decisions

[Pezeshkpour et al, NAACL 2019] - rules for interpretability of link prediction

[Sen et al, EMNLP 2020] - linguistic expressions as sentence classification model

[Qian et al, CIKM 2017] - rules as entity matching model

Definition

Examples of global explainability

(although rules can also be used

for local explanations)

(Specialized) Programs:

[A. Amini et al., NAACL, 2019] – MathQA, math programs for explaining math word problems

Examples of local

explainability (per instance)

71](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-69-320.jpg)

![• Previous approach for rule induction:

– The result is a set of logical rules (Horn rules of length 2) that are built on existing predicates from the KG

(e.g., Yago).

• Other approaches also learn logical rules, but they are based on different vocabularies:

– Next:

o Logical rules built on linguistic predicates for Sentence Classification

– [SystemER – see later under Explainability-Aware Design]

o Logical rules built on similarity features for Entity Resolution

76](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-74-320.jpg)
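
To make the notion of a logical rule over knowledge-graph predicates concrete, here is a small sketch that applies one rule of the form body_first(x, z) ∧ body_second(z, y) ⇒ head(x, y) and keeps the supporting triples as the explanation. It assumes "length 2" refers to a two-atom rule body; the triples, predicate names, and the rule itself are hypothetical and not taken from Yago.

```python
def apply_horn_rule(triples, body_first, body_second, head):
    """Derive head(x, y) wherever body_first(x, z) and body_second(z, y) hold.

    Returns a list of (derived_triple, supporting_triples) pairs so that
    every inferred fact comes with the facts that justify it.
    """
    derived = []
    for (x, first_predicate, z) in triples:
        if first_predicate != body_first:
            continue
        for (z_other, second_predicate, y) in triples:
            if second_predicate == body_second and z_other == z:
                support = [(x, first_predicate, z), (z_other, second_predicate, y)]
                derived.append(((x, head, y), support))
    return derived

# Hypothetical knowledge-graph fragment.
knowledge_graph = [
    ("alice", "bornIn", "paris"),
    ("paris", "locatedIn", "france"),
    ("bob", "bornIn", "rome"),
]

# Hypothetical rule: bornIn(x, z) AND locatedIn(z, y) => nationality(x, y)
explanations = apply_horn_rule(knowledge_graph, "bornIn", "locatedIn", "nationality")
for fact, support in explanations:
    print(fact, "because", support)
```

Because the rule is written over named predicates, both the rule and the supporting facts are directly readable, which is the appeal of declarative explanations.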

![Operations – First-derivative saliency

• Mainly used for enabling Feature Importance

– [Li et al., 2016 NAACL], [Aubakirova and Bansal, 2016]

• Inspired by the NN visualization method proposed for computer vision

– Originally proposed in (Simonyan et al. 2014)

– How much each input unit contributes to the final decision of the classifier.

Input embedding layer

Output of neural network

Gradients of the output wrt the input

79](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-77-320.jpg)
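
The sketch below shows first-derivative saliency on a toy model, assuming a hand-written network (a tanh layer summed over tokens, followed by a sigmoid) so that the gradient of the output with respect to the input embeddings can be written out explicitly. The model, the token list, and the choice to aggregate absolute gradients per token are assumptions for illustration; a real system would obtain the gradients from an autodiff framework.

```python
import numpy as np

def forward(embeddings, W, v):
    """Toy classifier: sigmoid(v . sum_i tanh(embeddings_i @ W))."""
    hidden = np.tanh(embeddings @ W)            # (n_tokens, hidden_dim)
    logit = hidden.sum(axis=0) @ v
    return 1.0 / (1.0 + np.exp(-logit))

def input_gradients(embeddings, W, v):
    """Analytic gradient of the output w.r.t. every input embedding value."""
    hidden = np.tanh(embeddings @ W)
    prob = forward(embeddings, W, v)
    d_logit = prob * (1.0 - prob)               # derivative of the sigmoid
    d_hidden = d_logit * v * (1.0 - hidden ** 2)  # back through tanh
    return d_hidden @ W.T                       # (n_tokens, embed_dim)

def saliency(embeddings, W, v):
    """Per-token saliency: summed absolute first derivatives."""
    return np.abs(input_gradients(embeddings, W, v)).sum(axis=1)

rng = np.random.default_rng(0)
tokens = ["not", "a", "good", "movie"]          # hypothetical input sentence
embeddings = rng.normal(size=(len(tokens), 5))
W = rng.normal(size=(5, 3))
v = rng.normal(size=3)

grads = input_gradients(embeddings, W, v)
scores = saliency(embeddings, W, v)
for token, score in zip(tokens, scores):
    print(f"{token}: {score:.4f}")
```

Tokens with larger scores are the ones whose embeddings most affect the output under a small change, which is what saliency heat maps display.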

![Operations – First-derivative saliency

Sentiment Analysis

Naturally, it can be used to generate explanations presented by saliency-based visualization techniques

[Li et al., 2016 NAACL]

80](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-78-320.jpg)

![Operations – Layer-wise relevance propagation

• For a good overview, refer to [Montavon et al., 2019]

• An operation that is specific to neural networks

– Input can be images, videos, or text

• Main idea is very similar to first-derivative saliency

– Decomposing the prediction of a deep neural network computed over a sample, down to relevance scores for the single input dimensions of the sample.

81](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-79-320.jpg)

![Operations – Layer-wise relevance propagation

• Key difference to first-derivative saliency:

– Assigns to each dimension (or feature) $x_d$ a relevance score $R_d^{(1)}$ such that $f(x) \approx \sum_d R_d^{(1)}$

– $R_d^{(1)} > 0$: the feature is in favor of the prediction

– $R_d^{(1)} < 0$: the feature is against the prediction

[Croce et al]

• Propagate the relevance scores using purposely designed local propagation rules

– Basic Rule (LRP-0)

– Epsilon Rule (LRP-ϵ)

– Gamma Rule (LRP-γ)

[Montavon et al., 2019]

82](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-80-320.jpg)
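
A minimal sketch of relevance propagation with the epsilon rule on a tiny bias-free ReLU network, written so that the conservation property (input relevances summing to approximately f(x)) can be checked directly. The network weights, the input, and the function name `lrp_epsilon` are assumptions for illustration; with ε close to zero the rule behaves like LRP-0.

```python
import numpy as np

def lrp_epsilon(activations, weights, relevance_out, epsilon=1e-9):
    """Redistribute relevance from a layer's outputs to its inputs.

    activations:   (n_in,) inputs to the linear layer.
    weights:       (n_in, n_out) layer weights (no bias in this sketch).
    relevance_out: (n_out,) relevance assigned to the layer's outputs.
    """
    z = activations @ weights                        # pre-activations
    z = z + epsilon * np.where(z >= 0, 1.0, -1.0)    # stabilizer, avoids division by zero
    s = relevance_out / z
    return activations * (weights @ s)               # share proportional to contribution

# Hypothetical two-layer network: x -> ReLU(x @ W1) -> hidden @ W2
x = np.array([1.0, -2.0, 0.5])
W1 = np.array([[0.5, -1.0],
               [-0.3, 0.8],
               [1.0, 0.2]])
W2 = np.array([[2.0],
               [-1.0]])

hidden = np.maximum(0.0, x @ W1)
output = hidden @ W2                                 # f(x), shape (1,)

# Start with all relevance on the output and propagate backwards.
relevance_hidden = lrp_epsilon(hidden, W2, output)
relevance_input = lrp_epsilon(x, W1, relevance_hidden)

print("f(x) =", output[0])
print("input relevances:", relevance_input)
print("sum of relevances:", relevance_input.sum())
```

Positive entries mark input dimensions that support the prediction and negative entries mark those that oppose it; in this example all three inputs end up with positive relevance.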

![Operations – Input perturbation

• Different ways to generate perturbations

– Sampling method (e.g., [Ribeiro et al. KDD] - LIME )

– Perturbing intermediate vector representation (e.g., [Alvarez-Melis and Jaakkola, 2017])

• Sampling method

– Directly use examples within labeled data that are semantically similar

• Perturbing intermediate vector representation

– Specific to neural networks

– Introduce minor perturbations to the vector representation of input instance

84](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-82-320.jpg)
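
The second option, perturbing the vector representation, can be sketched as follows: add small random noise to one dimension at a time and record how much the model output moves. This is a deliberately simplified stand-in and not the procedure of Alvarez-Melis and Jaakkola; the toy `model`, its weights, and the noise scale are hypothetical.

```python
import numpy as np

def model(vector, weights):
    """Hypothetical model operating on an intermediate vector representation."""
    return np.tanh(weights @ vector)

def perturbation_sensitivity(vector, weights, scale=0.01, n_samples=200, seed=0):
    """Mean absolute output change when each dimension is perturbed in turn."""
    rng = np.random.default_rng(seed)
    baseline = model(vector, weights)
    sensitivity = np.zeros(vector.size)
    for dim in range(vector.size):
        changes = []
        for delta in rng.normal(scale=scale, size=n_samples):
            perturbed = vector.copy()
            perturbed[dim] += delta              # minor perturbation of one dimension
            changes.append(abs(model(perturbed, weights) - baseline))
        sensitivity[dim] = np.mean(changes)
    return sensitivity

weights = np.array([3.0, 0.1, 0.0])              # third dimension is ignored by the model
vector = np.array([0.2, -0.1, 0.4])

sensitivity = perturbation_sensitivity(vector, weights)
for dim, value in enumerate(sensitivity):
    print(f"dimension {dim}: {value:.6f}")
```

Dimensions whose perturbation moves the output the most are the ones the model relies on; the dimension with zero weight shows no sensitivity at all.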

![Input perturbation – Sampling method

Find examples that are both semantically “close” and “far away”

from the instance in question.

- Need to define a proximity metric

- Does not generate new instances

[Ribeiro et al. KDD]

85](https://image.slidesharecdn.com/aacl2020-xai-tutorial-final-201205000527/85/Explainability-for-Natural-Language-Processing-83-320.jpg)

![Perturbing intermediate Vector Representation