Exploiting GPU's for Columnar DataFrrames by Kiran Lonikar

- 1. Exploiting GPUs for Columnar DataFrames Kiran Lonikar

- 2. About Myself: Kiran Lonikar ● Presently working as Staff Engineer with Informatica, Bangalore ○ Keep track of technology trends ○ Work on futuristic products/features ● Passionate about new technologies, gadgets and healthy food ● Education: ○Indian Institute of Technology, Bombay (1992) ○Indian Institute of Science Bangalore (1994)

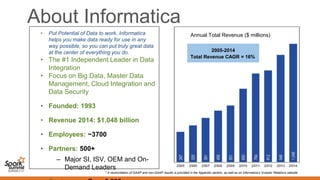

- 3. About Informatica • Put Potential of Data to work. Informatica helps you make data ready for use in any way possible, so you can put truly great data at the center of everything you do. • The #1 Independent Leader in Data Integration • Focus on Big Data, Master Data Management, Cloud Integration and Data Security • Founded: 1993 • Revenue 2014: $1.048 billion • Employees: ~3700 • Partners: 500+ – Major SI, ISV, OEM and On- Demand Leaders Annual Total Revenue ($ millions) 2005-2014 Total Revenue CAGR = 16% * A reconciliation of GAAP and non-GAAP results is provided in the Appendix section, as well as on Informatica’s Investor Relations website

- 4. Agenda ● Introducing GPUs ● Existing Applications in Big Data ● CPU the new bottleneck ● Project Tungsten ● Proposal: Extending Tungsten ○GPU for parallel execution across rows ○Code generation changes (minor refactoring) ○Batched Execution, Columnar layout (major refactoring to DataFrame) ● Results, Demo ● Future work, competing products

- 5. GPUs are Omnipresent Jetson TK1 192 cores GPU, 5”X5”, 20WGPU Servers upto 5760 cores g2: $0.65/hour: 1536 cores Nexus 9: 192 cores

- 6. Hardware Architecture: Latency Vs Throughput T i m e t1 t2 ... t32 ins 1 ins 2 ins 3 ins 4 Thread block Warp 1 t1 t2 ... t32 ins 1 ins 2 ins 3 ins 4 Warp 2 ... SIMT: Single Instruction Multiple Thread

- 7. GPU Programming Model CPU RAM CPU Processing GPU RAM GPU Processing PCIe Bus GPU Processing GPU Processing GPU Processing GPU Processing GPU Processing Shared CPU+GPU RAM CPU ProcessingHeterogeneous System Architecture based SoC GPU Processing GPU Processing GPU Processing GPU Processing ● CUDA C/C++ (NVidia GPUs) ● OpenCL C/C++ (All GPUs) ● JavaCL/ScalaCL, Aparapi, Rootbeer ● JDK 1.9 Lambdas GPU 1 GPU 2GPU RAM

- 8. GPUs in the world of Big Data LHC CERN’s ROOT: 30 PB per day, GPU based ML packages Analytic DBs 12 GPUs: 60,000 cores on a node gpudb, sqream, mapd Deep Learning Image Classification Speech Recognition NLP Genomics, DNA SparkCL: ● Aparapi based APIs to develop spark closures. ● Aparapi converts Java code to OpenCL and run on GPUs.

- 9. Natural progression into computing dimension ++ 2008 onwards 2012 onwards 2015 onwards

- 10. Spark SQL Architecture null bit set (1 bit/col) values (8 bytes/col) variable length data (length, data) null bit set (1 bit/col) values (8 bytes/col) variable length data (length, data) null bit set (1 bit/col) values (8 bytes/col) variable length data (length, data) row 1 row 2 row 3 Tungsten Row: Instead of Array of Java Objects nulltypeId row 1 row 2 row 3 null null null nulltypeId row 1 row 2 row 3 null null null nulltypeId row 1 row 2 row 3 null null null nulltypeId row 1 row 2 row 3 null null null column 1 column 2 column 3 column 4 Columnar Cache

- 11. 10Gbps ethernet, Infiniband SSD, Striped HDD arrays • Higher IO throughput: From Project Tungsten blog and Renold Xin’s talk slide 21 – Hardware advances in last 5 years: 10x improvements – Software advances: • Spark Optimizer: Prune input data to avoid unnecessary disk IO • Improved file formats: Binary, compressed, columnar (Parquet, ORC) • Less memory pressure: – Hardware: High memory bandwidths – Software: Taking over memory allocation ⇒ More data available to process. CPU the new bottleneck. CPU the new bottleneck

- 12. Project Tungsten • Taking Over memory management and bypass GC – Avoid large Java object overhead and GC overhead – Replace Java Objects allocation with sun.misc.Unsafe based explicit allocation and freeing – Replace general purpose data structures like java.util.HashMap with explicit binary map • Cache Aware computation – Change internal data structures to make them cache friendly • Co-locate key and value reference in one record for sorting • Code Generation – Expressions of columns for selecting and filtering executed through generated Java code ⇒ Avoids expensive expression tree evaluation for each row

- 13. Proposal: Execution on GPUs Goal: Change execution within a partition from serial row by row to batched/vectorized parallel execution • Change code generation to generate OpenCL code • Change executor code (Project, TungstenProject in basicOperators.scala) to execute OpenCL code through JavaCL • **Columnar layout of input data for GPU execution – BatchRow/CacheBatch: References to required columnar arrays instead of creating and processing InternalRow objects – UnsafeColumn/ByteBuffer: Columnar structure to be used for GPU execution a0 b0 c0 a1 b1 c1 a0 a1 a2 row wise columnar b0 b1 b2 a0 b0 c0 a1 b1 c1 a2 b2 c2 A B C c0 c1 c2 a2 b2 c2

- 14. Code Generation Changes // Existing Gnerated Java code class SpecificUnsafeProjection extends UnsafeProjection { private UnsafeRow row = new UnsafeRow(); // buffer for 2 cols, null bits private byte[] buffer11 = new byte[24]; private int cursor12 = 24; // size of buffer for 2 cols // initialization code, constructor etc. public UnsafeRow apply(InternalRow i) { double primitive3 = -1.0; int fixedOffset = Platform.BYTE_ARRAY_OFFSET; row.pointTo(buffer11, fixedOffset, 2, cursor12); if(nullChecks == false) { primitive3 = 2*i.getInt(0) + 4*i.getDouble(1); row.setDouble(1, primitive3); } else row.setNull(1); return row; } } // New OpenCL sample code: Columnar __kernel void computeExpression( const int* a, const char *aNulls, const int* b, const char *bNulls, int* output, char *outNulls, int dataSize) { int i = get_global_id(0); if(i < dataSize) { if(nullChecks == false) output[i] = 2*a[i] + 4*b[i]; else outNulls[i] = 1; } } // Scala code to drive the OpenCL code 1. rowIterator ⇒ ByteBuffers with a, b, aNulls, bNulls : 20x time of 2,3,4 2. Transfer ByteBuffers 3. Execute computeExpression 4. read output, outNulls into ByteBuffers ⇒ Cache

- 15. Row wise execution row wise CPU RAM Input Data row wise GPU RAM // Only A and C needed to compute D // B not needed typedef struct {float a, float b, float c} row; __kernel void expr(row *r, float *d, int n) { int id = get_global_id(0); if(id < n) d[id] = 3*r[id].a + 2*r[id].c; } a0 b0 c0 a1 b1 c1 a2 b2 c2 A B C a0 b0 c0 a1 b1 c1 a2 b2 c2 a0 b0 c0 a1 b1 c1 a2 b2 c2 t1 r0 row wise SMP Cache a0 b0 c0 a1 b1 c1 a2 b2 c2 t2 r1 t3 r2 Streaming Multiprocessor

- 16. Columnar execution Columnar CPU RAM Input Data Columnar GPU RAM // Only A and C needed to compute D // Only A and C transferred __kernel void expr(float *a, float *c, float *d, int n) { int id = get_global_id(0); if(id < n) d[id] = 3*a[id] + 2*c[id]; } a0 b0 c0 a1 b1 c1 a2 b2 c2 A B C a0 b0 c0a1 b1 c1a2 b2 c2 a0 c0a1 c1a2 c2 t1 a0 Columnar SMP Cache a0 c0a1 c1a2 c2 t2 a1 t3 a2 c0 c1 c2 Streaming Multiprocessor

- 17. JVM Considerations • Row wise representation: Array of Java objects – Java objects not same as C structs: Members not contiguous – Serialization needed before transfer to GPU RAM • Columnar representation: Arrays of individual members – Already serialized – Save Host-GPU and GPU RAM-SMP Cache data transfer – Avoid copying from input rows to projected InternalRow objects

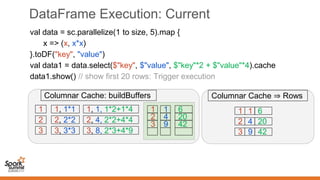

- 18. DataFrame Execution: Current val data = sc.parallelize(1 to size, 5).map { x => (x, x*x) }.toDF("key", "value") val data1 = data.select($"key", $"value", $"key"*2 + $"value"*4).cache data1.show() // show first 20 rows: Trigger execution 1, 1*1 1, 1, 1*2+1*4 Columnar Cache: buildBuffers 1 2 3 1 4 9 6 20 42 2, 2*2 2, 4, 2*2+4*4 3, 3*3 3, 8, 2*3+4*9 Columnar Cache ⇒ Rows 1 1 6 2 4 20 3 9 42 1 2 3

- 19. DataFrame Execution: Proposed val data = sc.parallelize(1 to size, 5).map { x => (x, x*x) }.toDF("key", "value") val data1 = data.select($"key", $"value", $"key"*2 + $"value"*4).cache data1.show() // show first 20 rows: Trigger execution Columnar Cache: buildBuffers 1 2 3 1 4 9 6 20 42 Columnar Cache ⇒ Rows 1 1 6 2 4 20 3 9 42 1 2 3 1 2 3 1 4 9 1 2 3 1 4 9 6 20 42 GPU

- 20. Proposal: Batched Execution In Memory RDDs ⇒ DFs Byte code Modification through Javassist to build BatchRow+UnsafeColumn Columnar Cache Input: Parquet, ORC, Relational DBs Pipelined operations: Filter, Join, Union, Sort, Group by, ... In Memory RDDs ⇒ DFs Byte code Modification through Javassist to consume BatchRow+UnsafeColumn Output: Parquet, ORC, Relational DBs Pipelined operations: Filter, Join, Union, Sort, Group by, ...

- 21. Results GPU CPU

- 22. Roadmap for future changes • Spark – Multi-GPU – Sorting: GPU based TimSort – Aggregations (groupBy) – Union – Join • Other projects capable of competing with Spark – Impala (C++, easier to adapt than Scala/JVM for GPU) – CERN Root (C++ REPL, multi-node) – Flink – Thrust (CUDA C++, single node, single GPU) – Boost Compute (OpenCL, C++, single node, single GPU) – VexCL (C++, OpenCL, CUDA, multi-GPU, multi node)

- 23. Q&A Contact Info ○ Twitter @KiranLonikar ○ https://www.linkedin.com/in/kiranlonikar ○ [email protected]

Editor's Notes

- #2: Hi, I am Kiran Lonikar, and I am going to talk on exploiting GPUs for columnar dataframes.

- #3: Let me first introduce myself. I work for Informatica in Bangalore, India. I spend quite some time in exploring new technologies and how they can be made relevant to my organization’s products. I have been exploring GPUs and Spark for quite some time now. And thought Spark can benefit from GPUs. Hence the talk!

- #4: And obviously spark

- #5: The talk is structured as follows. We will begin with a short introduction to GPUs, during which we will also look at some examples of GPU usage in the big data world. Then we will look at how CPU has become the new bottleneck in spark computations, and how project tungsten started a new direction on tackling it. Then we will look at how GPUs can extend the gains and what changes are needed to be able to achieve those. We will then take a closer look at the code generation changes, and importance of batched execution and columnar layout through a simple animation. Results of some simpler changes will then be presented. To achieve more serious results, some major refactoring of spark code is needed. A roadmap for future changes will be discussed. A set of products competing with spark will be discussed in the context of how much easier it will be for them to exploit GPUs for distributed computing.

- #6: To begin with, let me ask how many of you have heard about GPUs. How many of you know GPUs being used for non-graphical or general purpose computing and specifically big data applications? Finally, how many of you have actually played with GPUs for general purpose computing? All laptops, desktops, mobiles and tablets today come equipped with GPUs. Jetson TK1: Personal supercomputer. Linux on Tegra K1 SoC of quad-core ARM, 192 core Kepler GPU. Price $192. Size 5” x 5”. Nexus 9: Tablet with above Tegra K1 SoC Several Linux workstations and servers: Server class GPUs: several thousands of cores. Tesla K40 2880 cores, TITAN X 3072 cores, TITAN Z 5760 cores, AMD R9-295X2 5632 cores (and 12GB GPU RAM)! Much less Energy Consumption and price/TFlop: Less operational costs due to low power consumption and cooling requirements. AWS GPU machines/instances G2(Tesla K10): 1536 CUDA cores, 0.65 c/hour → $475/month. Compare to c3 and i2 instances: num_of_nodes*$1200+/month (You may need only 1 or 2 G2 instances against 10s of c3s or i2s)

- #7: CPUs are designed to be low latency so that serial code executes fast. GPUs are designed for multiple higher latency cores. So serial code executes slower, but many parallel cores give much higher throughput. CPUs for sequential parts where latency matters – CPUs can be 10+X faster than GPUs for sequential code GPUs for parallel parts where throughput wins – GPUs can be 10+X faster than CPUs for parallel code SIMD Vs SIMT: http://yosefk.com/blog/simd-simt-smt-parallelism-in-nvidia-gpus.html http://courses.cs.washington.edu/courses/cse471/13sp/lectures/GPUsStudents.pdf https://groups.google.com/forum/#!topic/comp.parallel/nQtFcL7N2rA

- #9: http://on-demand.gputechconf.com/gtc/2015/presentation/S5818-Keynote-Andrew-Ng.pdf LHC CERN’s ROOT software C++ REPL like scala based on LLVM. Used to process about 30PB data per day. Uses Parquet/ORC like columnar data files (its own root format) Toolkit for Multi-variate Analysis (TMVA). Some algorithms rewritten to use GPUs. https://www.inf.ed.ac.uk/publications/thesis/online/IM111037.pdf Deep learning community already uses GPUs: Papers by Hinton, Andrew Ng, Yann Lecun on how GPUs are used in their setups/work Popular libraries: Caffe, Theano, Torch, BIDMach, Kaldi Slide 21 of http://on-demand.gputechconf.com/gtc/2015/webinar/deep-learning-course/intro-to-deep-learning.pdf to show how GPUs enabled replacing a 1000 node data center with a few GPU cards SQL DB startups: Columnar analytic databases capable of aggregating 2TB per second. Use upto 12 GPUs == 60,000 cores on a single node http://www.gpudb.com/ http://www.mapd.com/ http://sqream.com/ YARN JIRA for managing GPU resources: https://issues.apache.org/jira/browse/YARN-4122

- #10: Hadoop, which started the big data revolution was largely based on CPUs and storage disks co-located. Spark used RAM backed by disks, thus extending the memory dimension. GPU is the natural extension to take advantage of available computing power. Extend the gains to execute some processing on GPUs...

- #11: Moving on, lets take a look at the Spark SQL architecture and and its components. The dataflow computation expressed in the form of spark SQL or DataFrames is first converted to a Logical Plan, then its optimized, and a number of physical plans are generated. A cost based optimizer then selects the best physical plan. The physical plan itself is expressed as a sequence of operations on different RDDs which is executed when an action on a parent dataframe is invoked. The main components of relevance to us are rows and columnar cache.

- #12: With this introduction, lets step back a little and look at what the bottleneck of today’s big data computing is. In software advances, the improved file formats like Parquet and ORC have become commonplace, and enable faster bulk reading of only the needed data from the disk. All of this means much more data being pumped at CPU for processing but the improvements in hardware and software have outpaced the ones in CPUs.

- #13: Now lets see what project tungsten does to solve it

- #14: The CPU bottleneck can be further eased with the help of GPUs. Coming to the main theme of this talk, let us see what is needed to perform some of spark computations on GPUs. It turns out that the most important change is moving to a columnar layout of input data. This picture illustrates the difference between row wise and columnar layouts.

- #16: Row wise and columnar formats further explained

- #17: Row wise and columnar formats further explained

- #18: If the data is contiguous, the row wise or columnar layout makes little difference. But when dealing with Java objects, and even a row as an array of Java objects, this is not the case. Serialization is needed before the data is transferred to GPU RAM which itself is CPU consuming.

- #19: In this and the next slide, we will see take a closer look at the proposal. Lets see how the execution happens today.

- #20: Lets see how we would like it to happen in a batched manner and on GPU

![Code Generation Changes

// Existing Gnerated Java code

class SpecificUnsafeProjection extends

UnsafeProjection {

private UnsafeRow row = new UnsafeRow();

// buffer for 2 cols, null bits

private byte[] buffer11 = new byte[24];

private int cursor12 = 24; // size of buffer for 2 cols

// initialization code, constructor etc.

public UnsafeRow apply(InternalRow i) {

double primitive3 = -1.0;

int fixedOffset = Platform.BYTE_ARRAY_OFFSET;

row.pointTo(buffer11, fixedOffset, 2, cursor12);

if(nullChecks == false) {

primitive3 = 2*i.getInt(0) + 4*i.getDouble(1);

row.setDouble(1, primitive3);

}

else

row.setNull(1);

return row;

}

}

// New OpenCL sample code: Columnar

__kernel void computeExpression(

const int* a, const char *aNulls,

const int* b, const char *bNulls,

int* output, char *outNulls,

int dataSize)

{

int i = get_global_id(0);

if(i < dataSize) {

if(nullChecks == false)

output[i] = 2*a[i] + 4*b[i];

else

outNulls[i] = 1;

}

}

// Scala code to drive the OpenCL code

1. rowIterator ⇒ ByteBuffers with a, b, aNulls,

bNulls : 20x time of 2,3,4

2. Transfer ByteBuffers

3. Execute computeExpression

4. read output, outNulls into ByteBuffers ⇒ Cache](https://image.slidesharecdn.com/02kiranlonikar-151102130944-lva1-app6892/85/Exploiting-GPU-s-for-Columnar-DataFrrames-by-Kiran-Lonikar-14-320.jpg)

![Row wise execution

row wise CPU RAM

Input Data

row wise GPU RAM

// Only A and C needed to compute D

// B not needed

typedef struct {float a, float b,

float c} row;

__kernel void expr(row *r, float *d,

int n) {

int id = get_global_id(0);

if(id < n)

d[id] = 3*r[id].a + 2*r[id].c;

}

a0 b0 c0

a1 b1 c1

a2 b2 c2

A B C

a0 b0 c0 a1 b1 c1 a2 b2 c2

a0 b0 c0 a1 b1 c1 a2 b2 c2

t1

r0

row wise SMP Cache

a0 b0 c0 a1 b1 c1 a2 b2 c2

t2

r1

t3

r2

Streaming

Multiprocessor](https://image.slidesharecdn.com/02kiranlonikar-151102130944-lva1-app6892/85/Exploiting-GPU-s-for-Columnar-DataFrrames-by-Kiran-Lonikar-15-320.jpg)

![Columnar execution

Columnar CPU RAM

Input Data

Columnar GPU RAM

// Only A and C needed to compute

D

// Only A and C transferred

__kernel void expr(float *a, float

*c, float *d, int n) {

int id = get_global_id(0);

if(id < n)

d[id] = 3*a[id] + 2*c[id];

}

a0 b0 c0

a1 b1 c1

a2 b2 c2

A B C

a0 b0 c0a1 b1 c1a2 b2 c2

a0 c0a1 c1a2 c2

t1

a0

Columnar SMP Cache

a0 c0a1 c1a2 c2

t2

a1

t3

a2

c0 c1 c2

Streaming

Multiprocessor](https://image.slidesharecdn.com/02kiranlonikar-151102130944-lva1-app6892/85/Exploiting-GPU-s-for-Columnar-DataFrrames-by-Kiran-Lonikar-16-320.jpg)