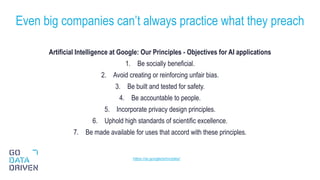

This document discusses fairness in artificial intelligence and machine learning. It begins by noting that AI can encode and amplify human biases, leading to unfair outcomes at scale. It then reviews different ways to measure fairness, such as demographic parity and equality of opportunity. Using the example of predicting income from census data, it shows that the initial model is unfair, assigning low probabilities to certain groups. It goes on to explore potential sources of bias in such systems and methods for enforcing fairness, such as adversarial training, in which a classifier and an adversarial model are trained iteratively. The document emphasizes that fairness is complex: there are many approaches and no single solution, and avoiding unfair outcomes requires active work.
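To make the two fairness measures named above concrete, here is a minimal sketch of how they might be computed for a binary classifier and a binary sensitive attribute. The function names and the toy labels, predictions, and group assignments are illustrative assumptions, not taken from the document or its census example. Demographic parity is read here as comparing positive-prediction rates across groups, and equality of opportunity as comparing true-positive rates.

```python
def demographic_parity_gap(y_pred, group):
    """Positive-prediction rate of group 1 minus that of group 0."""
    rate = {}
    for g in (0, 1):
        preds = [p for p, s in zip(y_pred, group) if s == g]
        rate[g] = sum(preds) / len(preds)
    return rate[1] - rate[0]


def equal_opportunity_gap(y_true, y_pred, group):
    """True-positive rate of group 1 minus that of group 0."""
    tpr = {}
    for g in (0, 1):
        # Keep only predictions for members of group g whose true label is positive.
        preds = [p for t, p, s in zip(y_true, y_pred, group) if s == g and t == 1]
        tpr[g] = sum(preds) / len(preds)
    return tpr[1] - tpr[0]


# Hypothetical toy data (assumption): 1 = high income, group is a binary attribute.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = [0, 0, 0, 0, 1, 1, 1, 1]

dp_gap = demographic_parity_gap(y_pred, group)
eo_gap = equal_opportunity_gap(y_true, y_pred, group)
print(f"demographic parity gap: {dp_gap:+.2f}")
print(f"equal opportunity gap:  {eo_gap:+.2f}")
```

A gap of zero on either measure means the two groups are treated identically under that criterion. The two measures can disagree on the same model, which is one reason no single metric settles whether a model is fair.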