The document discusses fairness-aware learning in artificial intelligence, focusing on batch and sequential ensemble learning, and the discrimination that can occur within algorithms. It highlights examples of algorithmic bias and the need for fairness in AI systems, presenting approaches for mitigating bias at various stages of AI decision-making. The presentation emphasizes the importance of understanding and addressing biases in data to ensure equitable outcomes across different demographic groups.

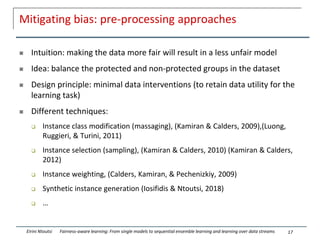

![Limitations of related work

Existing works evaluate predictive performance in terms of the overall classification error rate (ER), e.g., [Calders et al’09, Calmon et al’17, Fish et al’16, Hardt et al’16, Krasanakis et al’18, Zafar et al’17]

Under class imbalance, ER is misleading: a classifier that always predicts the majority class still achieves a low ER

Most datasets, however, are imbalanced

Moreover, Equalised Odds (Eq.Odds) “is oblivious” to the class imbalance problem

Eirini Ntoutsi Fairness-aware learning: From single models to sequential ensemble learning and learning over data streams](https://image.slidesharecdn.com/recsgrouptampere-210220154820/85/Fairness-aware-learning-From-single-models-to-sequential-ensemble-learning-and-learning-over-data-streams-31-320.jpg)
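
The point about ER under class imbalance can be illustrated with a minimal sketch. It compares the overall error rate with the balanced error rate (the mean of the per-class error rates) for a classifier that always predicts the majority class. The toy labels and the helper names `error_rate` and `balanced_error_rate` are illustrative assumptions, not taken from the talk.

```python
def error_rate(y_true, y_pred):
    """Fraction of misclassified instances (overall ER)."""
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return wrong / len(y_true)


def balanced_error_rate(y_true, y_pred):
    """Mean of the per-class error rates, so each class counts equally."""
    per_class = []
    for c in sorted(set(y_true)):
        preds_for_c = [p for t, p in zip(y_true, y_pred) if t == c]
        per_class.append(sum(1 for p in preds_for_c if p != c) / len(preds_for_c))
    return sum(per_class) / len(per_class)


# Hypothetical toy data: 95 negatives, 5 positives (illustration only).
y_true = [0] * 95 + [1] * 5
# A trivial classifier that always predicts the majority class.
y_pred = [0] * 100

er = error_rate(y_true, y_pred)
ber = balanced_error_rate(y_true, y_pred)
print(f"ER = {er:.2f}, balanced ER = {ber:.2f}")
```

On this toy data the overall ER looks excellent even though every positive instance is misclassified, whereas the balanced error rate exposes the failure on the minority class.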

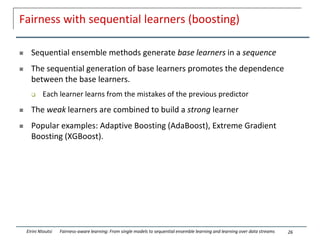

![Experimental evaluation

Datasets of varying imbalance

Baselines

AdaBoost [Sch99]: vanilla AdaBoost

SMOTEBoost [CLHB03]: AdaBoost with SMOTE for imbalanced data.

Krasanakis et al. [KXPK18]: Boosting method that minimizes Equalised Odds violations by approximating the underlying distribution of hidden correct labels.

Zafar et al. [ZVGRG17]: Trains a logistic regression model under convex-concave constraints to minimize Equalised Odds violations.

AdaFair NoCumul: Variation of AdaFair that computes the fairness weights based on individual weak learners.

](https://image.slidesharecdn.com/recsgrouptampere-210220154820/85/Fairness-aware-learning-From-single-models-to-sequential-ensemble-learning-and-learning-over-data-streams-37-320.jpg)
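
Several of the baselines above are described in terms of Equalised Odds. As a minimal sketch, assume the measure is the sum of the absolute differences in false positive rate (FPR) and false negative rate (FNR) between the protected and the non-protected group. This is one common formulation; the exact definition used in the talk is not shown in this excerpt. The function names and toy data are hypothetical.

```python
def rates(y_true, y_pred):
    """Return (FPR, FNR) for binary labels, with 1 as the positive class.

    Assumes the group contains at least one positive and one negative.
    """
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    neg = sum(1 for t in y_true if t == 0)
    pos = sum(1 for t in y_true if t == 1)
    return fp / neg, fn / pos


def equalized_odds_gap(y_true, y_pred, protected):
    """|FPR_prot - FPR_nonprot| + |FNR_prot - FNR_nonprot| (assumed form)."""
    by_group = {}
    for flag in (True, False):
        yt = [t for t, s in zip(y_true, protected) if s == flag]
        yp = [p for p, s in zip(y_pred, protected) if s == flag]
        by_group[flag] = rates(yt, yp)
    (fpr_p, fnr_p), (fpr_n, fnr_n) = by_group[True], by_group[False]
    return abs(fpr_p - fpr_n) + abs(fnr_p - fnr_n)


# Hypothetical toy data: first four instances protected, last four not.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [0, 1, 0, 0, 1, 1, 1, 0]
protected = [True] * 4 + [False] * 4

gap = equalized_odds_gap(y_true, y_pred, protected)
print(f"Equalised Odds gap = {gap:.2f}")
```

A gap of 0 means both groups have identical FPR and FNR. Because both rates are normalised within each class, the gap alone says nothing about how well the minority class is predicted overall.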

![Wrapping-up, ongoing work and future directions

Moving from single-protected-attribute fairness-aware learning to multi-fairness

Existing legal studies define multi-fairness as compound, intersectional and overlapping [Makkonen 2002].

Moving from fully-supervised learning to unsupervised and reinforcement learning

Moving from myopic solutions (which maximize short-term/immediate performance) to non-myopic ones (which consider long-term performance) [Zhang et al., 2020]

Actionable approaches (counterfactual generation)

](https://image.slidesharecdn.com/recsgrouptampere-210220154820/85/Fairness-aware-learning-From-single-models-to-sequential-ensemble-learning-and-learning-over-data-streams-49-320.jpg)