Flink's SQL Engine: Let's Open the Engine Room!

- 1. Flink's SQL Engine: Let's Open the Engine Room! Timo Walther, Principal Software Engineer 2024-03-19

- 2. About me
  - Open Source
    - Long-term committer since 2014 (before ASF)
    - Member of the project management committee (PMC)
    - Top 5 contributor (commits), top 1 contributor (additions)
    - Among core architects of Flink SQL
  - Career
    - Early Software Engineer @ DataArtisans (acquired by Alibaba)
    - SDK Team, SQL Team Lead @ Ververica
    - Co-Founder @ Immerok (acquired by Confluent)
    - Principal Software Engineer @ Confluent

- 3. What is Apache Flink®?

- 4. Building Blocks for Stream Processing
  - Streams: Pipeline, Distribute, Join, Enrich, Control, Replay
  - State: Store, Buffer, Cache, Model, Grow, Expire
  - Time: Synchronize, Progress, Wait, Timeout, Fast-forward, Replay
  - Snapshots: Backup, Version, Fork, A/B test, Time-travel, Restore

- 5. Flink SQL

- 6. Flink SQL in a Nutshell
  - Properties
    - Abstract the building blocks for stream processing
    - Operator topology is determined by planner and optimizer
    - Business logic is declared in ANSI SQL
    - Internally, the engine works on binary data
    - Conceptually a table, but a changelog under the hood!
  - Example queries
    - `SELECT 'Hello World';`
    - `SELECT * FROM (VALUES (1), (2), (3));`
    - `SELECT * FROM MyTable;`
    - `SELECT * FROM Orders o JOIN Payments p ON o.id = p.order;`

- 7. How do I Work with Streams in Flink SQL?
  - You don’t. You work with dynamic tables!
  - A concept similar to materialized views
  - `CREATE TABLE Transactions (name STRING, amount INT) WITH (…)`
  - `CREATE TABLE Revenue (name STRING, total INT) WITH (…)`
  - `INSERT INTO Revenue SELECT name, SUM(amount) FROM Transactions GROUP BY name`
  - Transactions (name, amount): (Alice, 56), (Bob, 10), (Alice, 89)
  - Revenue (name, total): (Alice, 145), (Bob, 10)
  - So, is Flink SQL a database? No, bring your own data!

- 8. Stream-Table Duality - Example
  - An applied changelog becomes a real (materialized) table.
  - `CREATE TABLE Transactions (name STRING, amount INT) WITH (…)`
  - `CREATE TABLE Revenue (name STRING, total INT) WITH (…)`
  - `INSERT INTO Revenue SELECT name, SUM(amount) FROM Transactions GROUP BY name`
  - Transactions (name, amount): (Alice, 56), (Bob, 10), (Alice, 89)
  - Changelog of Transactions, in arrival order: `+I[Alice, 56]`, `+I[Bob, 10]`, `+I[Alice, 89]`
  - Changelog of Revenue, in arrival order: `+I[Alice, 56]`, `+I[Bob, 10]`, `-U[Alice, 56]`, `+U[Alice, 145]`
  - Materialization of Revenue (name, total): Alice 56, later updated to 145; Bob 10
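The materialization step on this slide can be sketched in a few lines of Python. This is a minimal illustration and not Flink code: the function name `materialize` and the tuple layout are assumptions made for the example, and `-D` (delete) is included only for completeness although it does not appear on the slide.

```python
from typing import Dict, List, Tuple

# A changelog entry is (kind, name, total); kinds follow the slide's notation.
Change = Tuple[str, str, int]


def materialize(changelog: List[Change]) -> Dict[str, int]:
    """Apply a retract-style changelog to an initially empty table keyed by name."""
    table: Dict[str, int] = {}
    for kind, name, total in changelog:
        if kind in ("+I", "+U"):
            # Insert or update-after: the row becomes the current value.
            table[name] = total
        elif kind in ("-U", "-D"):
            # Update-before or delete: retract the row if it is still current.
            if table.get(name) == total:
                del table[name]
        else:
            raise ValueError(f"unknown change kind: {kind}")
    return table


# The Revenue changelog from the slide, in arrival order.
revenue_changelog = [
    ("+I", "Alice", 56),
    ("+I", "Bob", 10),
    ("-U", "Alice", 56),
    ("+U", "Alice", 145),
]
print(materialize(revenue_changelog))
```

After all four messages are applied, the table holds Alice with 145 and Bob with 10, which matches the Revenue table on the slide.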

- 9. Stream-Table Duality - Example
  - An applied changelog becomes a real (materialized) table.
  - `CREATE TABLE Transactions (name STRING, amount INT) WITH (…)`
  - `CREATE TABLE Revenue (PRIMARY KEY(name) …) WITH (…)`
  - `INSERT INTO Revenue SELECT name, SUM(amount) FROM Transactions GROUP BY name`
  - Transactions (name, amount): (Alice, 56), (Bob, 10), (Alice, 89)
  - Changelog of Transactions, in arrival order: `+I[Alice, 56]`, `+I[Bob, 10]`, `+I[Alice, 89]`
  - Changelog of Revenue, in arrival order: `+I[Alice, 56]`, `+I[Bob, 10]`, `-U[Alice, 56]`, `+U[Alice, 145]`
  - Materialization of Revenue (name, total): Alice 56, later updated to 145; Bob 10
  - Save ~50% of traffic if the downstream system supports upserting (the `-U` message can then be omitted)!
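To make the traffic saving concrete, the following sketch emits the changelog of the `GROUP BY name` aggregation in both modes and compares the message counts. It is an illustration under assumptions: `aggregate_changelog` and its `upsert` flag are hypothetical names, not a Flink API.

```python
from typing import Dict, Iterable, List, Tuple

Change = Tuple[str, str, int]


def aggregate_changelog(rows: Iterable[Tuple[str, int]], upsert: bool) -> List[Change]:
    """Emit the changelog of SELECT name, SUM(amount) ... GROUP BY name."""
    totals: Dict[str, int] = {}
    out: List[Change] = []
    for name, amount in rows:
        if name not in totals:
            totals[name] = amount
            out.append(("+I", name, amount))
        else:
            old = totals[name]
            totals[name] = old + amount
            if not upsert:
                # Retract mode: the old row is withdrawn explicitly.
                out.append(("-U", name, old))
            # In both modes the new row follows; with a primary key it overwrites.
            out.append(("+U", name, totals[name]))
    return out


transactions = [("Alice", 56), ("Bob", 10), ("Alice", 89)]
retract = aggregate_changelog(transactions, upsert=False)
upsert = aggregate_changelog(transactions, upsert=True)
print(len(retract), len(upsert))
```

Every update costs two messages in retract mode and one in upsert mode, so the saving approaches half of the traffic only when updates dominate over first-time inserts.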

- 10. Let's Open the Engine Room!

- 11. SQL Declaration

- 12. SQL Declaration – Variant 1 – Basic
  - Example tables
    - `CREATE TABLE Transaction (ts TIMESTAMP(3), tid BIGINT, amount INT);`
    - `CREATE TABLE Payment (ts TIMESTAMP(3), tid BIGINT, type STRING);`
    - `CREATE TABLE Matched (tid BIGINT, amount INT, type STRING);`
  - Join two tables based on key within time and store in target table
    - `INSERT INTO Matched SELECT T.tid, T.amount, P.type FROM Transaction T JOIN Payment P ON T.tid = P.tid WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;`

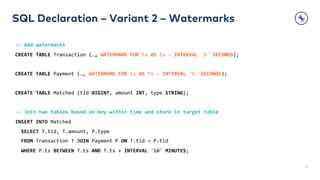

- 13. SQL Declaration – Variant 2 – Watermarks
  - Add watermarks
    - `CREATE TABLE Transaction (…, WATERMARK FOR ts AS ts - INTERVAL '5' SECONDS);`
    - `CREATE TABLE Payment (…, WATERMARK FOR ts AS ts - INTERVAL '5' SECONDS);`
    - `CREATE TABLE Matched (tid BIGINT, amount INT, type STRING);`
  - Join two tables based on key within time and store in target table
    - `INSERT INTO Matched SELECT T.tid, T.amount, P.type FROM Transaction T JOIN Payment P ON T.tid = P.tid WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;`
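What a declaration like `WATERMARK FOR ts AS ts - INTERVAL '5' SECONDS` means for a sequence of rows can be sketched as follows. This is a simplified model and not Flink's implementation: it advances the watermark on every row (a real job typically emits watermarks periodically), and the name `assign_watermarks` is hypothetical.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Optional, Tuple


def assign_watermarks(
    timestamps: Iterable[datetime],
    delay: timedelta = timedelta(seconds=5),
) -> List[Tuple[datetime, datetime, bool]]:
    """Return (timestamp, watermark after the row, row was late) per row."""
    current: Optional[datetime] = None
    result = []
    for ts in timestamps:
        # A row is late if the watermark already passed its timestamp.
        is_late = current is not None and ts <= current
        candidate = ts - delay
        # Watermarks never move backwards.
        if current is None or candidate > current:
            current = candidate
        result.append((ts, current, is_late))
    return result


base = datetime(2024, 3, 19, 12, 0, 0)
events = [base + timedelta(seconds=s) for s in (0, 7, 3, 1)]
for ts, watermark, is_late in assign_watermarks(events):
    print(ts.time(), watermark.time(), "late" if is_late else "on time")
```

The row at 12:00:03 arrives out of order but is still accepted because it lies within the 5 second delay, while the row at 12:00:01 arrives after the watermark has reached 12:00:02 and counts as late.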

- 14. SQL Declaration – Variant 3 – Updating
  - Transactions can be aborted -> Result is updating
    - `CREATE TABLE Transaction (…, PRIMARY KEY (tid) NOT ENFORCED) WITH ('changelog.mode' = 'upsert');`
    - `CREATE TABLE Payment (ts TIMESTAMP(3), tid BIGINT, type STRING);`
    - `CREATE TABLE Matched (…, PRIMARY KEY (tid) NOT ENFORCED) WITH ('changelog.mode' = 'upsert');`
  - Join two tables based on key within time and store in target table
    - `INSERT INTO Matched SELECT T.tid, T.amount, P.type FROM Transaction T JOIN Payment P ON T.tid = P.tid WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;`

- 15. Every Keyword can trigger an Avalanche
  - CREATE TABLE
    - Defines the connector (e.g., Kafka) and the changelog mode (i.e. append/retract/upsert)
    - → For the engine: What needs to be processed? What should be produced to the target table?
  - WATERMARK FOR
    - Defines a completeness marker (i.e. "I have seen everything up to time t.")
    - → For the engine: Can intermediate results be discarded? Can I trigger result computation?
  - PRIMARY KEY
    - Defines a uniqueness constraint (i.e. "Event with key k will occur one time or will be updated.")
    - → For the engine: How do I guarantee the constraint for the target table?
  - SELECT, JOIN, WHERE
    - → For the engine: What needs to be done? How much freedom do I have? How much can I optimize?

- 16. SQL Planning

- 17. Parsing & Validation: {SQL String, Catalogs, Modules, Session Config} → Calcite Logical Tree
  - Break the SQL text into a tree
  - Look up catalogs, databases, tables, views, functions, and their types
  - Resolve all identifiers for columns and fields, e.g. `SELECT pi, pi.pi FROM pi.pi.pi;`
  - Validate input/output columns and arguments/return types
  - Main drivers: FlinkSqlParserImpl, SqlValidatorImpl, SqlToRelConverter
  - Output: SqlNode then RelNode

- 18. Rule-based Logical Rewrites → Calcite Logical Tree
  - Pipeline so far: Parsing & Validation ({SQL String, Catalogs, Modules, Session Config} → Calcite Logical Tree)
  - Rewrite subqueries to joins (e.g. EXISTS or IN)
  - Apply query decorrelation
  - Simplify expressions
  - Constant folding (e.g. functions with literal args)
  - Initial filter push down
  - Main drivers: FlinkStreamProgram, FlinkStreamRuleSets
  - Output: RelNode

- 19. Cost-based Logical Optimization → Flink Logical Tree
  - Pipeline so far: Parsing & Validation → Rule-based Logical Rewrites
  - Projection/filter push down (e.g. all the way into the source)
  - Push aggregate through join
  - Reduce aggregate functions (e.g. AVG -> SUM/COUNT)
  - Remove unnecessary sort, aggregate, union, etc.
  - …
  - Main drivers: FlinkStreamProgram, FlinkStreamRuleSets
  - Output: FlinkLogicalRel (RelNode)
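One way to see why reducing AVG to SUM/COUNT is useful: a sum and a count can each be updated, retracted, and merged incrementally, whereas an average by itself cannot. The sketch below is an assumption-laden illustration, not Flink's accumulator; the class name `AvgAccumulator` and its methods are invented for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AvgAccumulator:
    """AVG expressed as a SUM and a COUNT that are maintained separately."""

    total: int = 0
    count: int = 0

    def accumulate(self, value: int) -> None:
        self.total += value
        self.count += 1

    def retract(self, value: int) -> None:
        # Needed when an upstream operator withdraws a row (-U or -D).
        self.total -= value
        self.count -= 1

    def merge(self, other: "AvgAccumulator") -> None:
        # Partial results from different subtasks can be combined.
        self.total += other.total
        self.count += other.count

    def value(self) -> Optional[float]:
        return self.total / self.count if self.count else None


a = AvgAccumulator()
for amount in (56, 89):
    a.accumulate(amount)
b = AvgAccumulator()
b.accumulate(10)
a.merge(b)      # total=155, count=3
a.retract(56)   # total=99, count=2
print(a.value())
```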

- 20. Flink Rule-based Logical Rewrites → Flink Logical Tree
  - Pipeline so far: Parsing & Validation → Rule-based Logical Rewrites → Cost-based Logical Optimization
  - Watermark push down, more projection push down
  - Transpose calc past rank to reduce rank input fields
  - Transform over window to top-n node
  - …
  - Sync timestamp columns with watermarks
  - Main drivers: FlinkStreamProgram, FlinkStreamRuleSets
  - Output: FlinkLogicalRel (RelNode)

- 21. Flink Physical Optimization and Rewrites → Flink Physical Tree
  - Pipeline so far: Parsing & Validation → Rule-based Logical Rewrites → Cost-based Logical Optimization → Flink Rule-based Logical Rewrites
  - Convert to matching physical node
  - Add changelog normalize node
  - Push watermarks past these special operators
  - …
  - Changelog mode inference (i.e. is update before necessary?)
  - Main drivers: FlinkStreamProgram, FlinkStreamRuleSets
  - Output: FlinkPhysicalRel (RelNode)

- 22. Physical Tree → Stream Execution Nodes
  - AsyncCalc, Calc, ChangelogNormalize, Correlate, Deduplicate, DropUpdateBefore, Exchange, Expand, GlobalGroupAggregate, GlobalWindowAggregate, GroupAggregate, GroupTableAggregate, GroupWindowAggregate, IncrementalGroupAggregate, IntervalJoin, Join, Limit, LocalGroupAggregate, LocalWindowAggregate, LookupJoin, Match, OverAggregate, Rank, Sink, Sort, SortLimit, TableSourceScan, TemporalJoin, TemporalSort, Union, Values, WatermarkAssigner, WindowAggregate, WindowAggregateBase, WindowDeduplicate, WindowJoin, WindowRank, WindowTableFunction
  - Recipes for DAG subparts (i.e. StreamExecNodes are templates for stream transformations)
  - JSON serializable and stability guarantees (i.e. CompiledPlan)
  - → End of the SQL stack: next are JobGraph (incl. concrete runtime classes) and ExecutionGraph (incl. cluster information)

- 23. Examples

- 24. Example 1 – No watermarks, no updates
  - `INSERT INTO Matched SELECT T.tid, T.amount, P.type FROM Transaction T JOIN Payment P ON T.tid = P.tid WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;`
  - Logical Tree
    - `LogicalSink(table=[Matched], fields=[tid, amount, type])`
      - `LogicalProject(tid=[$1], amount=[$2], type=[$5])`
        - `LogicalFilter(condition=[AND(>=($3, $0), <=($3, +($0, 600000)))])`
          - `LogicalJoin(condition=[=($1, $4)], joinType=[inner])`
            - `LogicalTableScan(table=[Transaction])`
            - `LogicalTableScan(table=[Payment])`
  - Physical Tree
    - `Sink(table=[Matched], fields=[tid, amount, type], changelogMode=[NONE])`
      - `Calc(select=[tid, amount, type], changelogMode=[I])`
        - `Join(joinType=[InnerJoin], where=[AND(=(tid, tid0), >=(ts0, ts), <=(ts0, +(ts, 600000)))], …, changelogMode=[I])`
          - `Exchange(distribution=[hash[tid]], changelogMode=[I])`
            - `TableSourceScan(table=[Transaction], fields=[ts, tid, amount], changelogMode=[I])`
          - `Exchange(distribution=[hash[tid]], changelogMode=[I])`
            - `TableSourceScan(table=[Payment], fields=[ts, tid, type], changelogMode=[I])`

- 25. Example 1 – No watermarks, no updates
  - `INSERT INTO Matched SELECT T.tid, T.amount, P.type FROM Transaction T JOIN Payment P ON T.tid = P.tid WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;`
  - [Diagram: three parallel instances of the pipeline Kafka Reader (×2) → Join → Calc → Kafka Writer, plus a detail view of one instance. State labels in the detail view: offset (one per Kafka Reader), left side, right side, txn id, txn. Record labels along the flow: `+I[<T>]` and `+I[<P>]` into the Join, `+I[<T>, <P>]` out of the Join, `+I[tid, amount, type]` out of Calc, `Message<k, v>` at the Kafka Writer.]
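The "left side" and "right side" state in this diagram can be pictured with a small sketch of a regular streaming join. It is a simplified model under assumptions, not the Flink operator: rows are plain tuples, timestamps are milliseconds, and `RegularJoinSketch` is a hypothetical name. The point it illustrates is that, without watermarks, the time predicate only filters matches and nothing is ever removed from state.

```python
from typing import Dict, List, Tuple

Transaction = Tuple[int, int, int]   # (ts in ms, tid, amount)
Payment = Tuple[int, int, str]       # (ts in ms, tid, type)

TEN_MINUTES_MS = 10 * 60 * 1000


def within_ten_minutes(t: Transaction, p: Payment) -> bool:
    """P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES."""
    return t[0] <= p[0] <= t[0] + TEN_MINUTES_MS


class RegularJoinSketch:
    """Inner equi-join on tid that keeps every row of both inputs in state."""

    def __init__(self) -> None:
        self.left: Dict[int, List[Transaction]] = {}
        self.right: Dict[int, List[Payment]] = {}

    def on_transaction(self, t: Transaction) -> List[Tuple[Transaction, Payment]]:
        self.left.setdefault(t[1], []).append(t)
        return [(t, p) for p in self.right.get(t[1], []) if within_ten_minutes(t, p)]

    def on_payment(self, p: Payment) -> List[Tuple[Transaction, Payment]]:
        self.right.setdefault(p[1], []).append(p)
        return [(t, p) for t in self.left.get(p[1], []) if within_ten_minutes(t, p)]

    def state_size(self) -> int:
        rows_left = sum(len(rows) for rows in self.left.values())
        rows_right = sum(len(rows) for rows in self.right.values())
        return rows_left + rows_right


join = RegularJoinSketch()
join.on_transaction((0, 1, 100))
matches = join.on_payment((60_000, 1, "card"))
join.on_payment((700_000, 1, "cash"))   # outside the 10 minute range, no match
print(matches, join.state_size())
```

All three rows stay in state even though the last payment can never match, which is the cost that the watermark variant in the next example avoids.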

- 26. Example 2 – With watermarks, no updates
  - `INSERT INTO Matched SELECT T.tid, T.amount, P.type FROM Transaction T JOIN Payment P ON T.tid = P.tid WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;`
  - Logical Tree
    - `LogicalSink(table=[Matched], fields=[tid, amount, type])`
      - `LogicalProject(tid=[$1], amount=[$2], type=[$5])`
        - `LogicalFilter(condition=[AND(>=($3, $0), <=($3, +($0, 600000)))])`
          - `LogicalJoin(condition=[=($1, $4)], joinType=[inner])`
            - `LogicalWatermarkAssigner(rowtime=[ts], watermark=[-($0, 2000)])`
              - `LogicalTableScan(table=[Transaction])`
            - `LogicalWatermarkAssigner(rowtime=[ts], watermark=[-($0, 2000)])`
              - `LogicalTableScan(table=[Payment])`
  - Physical Tree
    - `Sink(table=[Matched], fields=[tid, amount, type], changelogMode=[NONE])`
      - `Calc(select=[tid, amount, type], changelogMode=[I])`
        - `IntervalJoin(joinType=[InnerJoin], windowBounds=[leftLowerBound=…, leftTimeIndex=…, …], …, changelogMode=[I])`
          - `Exchange(distribution=[hash[tid]], changelogMode=[I])`
            - `TableSourceScan(table=[Transaction, watermark=[-(ts, 2000), …]], fields=[ts, tid, amount], changelogMode=[I])`
          - `Exchange(distribution=[hash[tid]], changelogMode=[I])`
            - `TableSourceScan(table=[Payment, watermark=[-(ts, 2000), …]], fields=[ts, tid, type], changelogMode=[I])`

- 27. Example 2 – With watermarks, no updates
  - `INSERT INTO Matched SELECT T.tid, T.amount, P.type FROM Transaction T JOIN Payment P ON T.tid = P.tid WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;`
  - [Diagram: three parallel instances of the pipeline Kafka Reader (×2) → Join → Calc → Kafka Writer, plus a detail view of one instance: Kafka Reader (×2) → Interval Join → Calc → Kafka Writer. State labels in the detail view: offset (one per Kafka Reader), left side, right side, timers, txn id, txn. Record labels along the flow: `+I[<T>]` and `+I[<P>]` into the Interval Join, `+I[<T>, <P>]` out of it, `+I[tid, amount, type]` out of Calc, `Message<k, v>` at the Kafka Writer. Watermark labels: `W[12:00]`, `W[11:55]`, `W[11:55]`.]
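How watermarks let an interval join discard state can be sketched as follows. This is a deliberately simplified model and not Flink's IntervalJoin operator: it combines the two input watermarks by taking the minimum, cleans up on every watermark instead of using timers, ignores late rows, and uses the hypothetical name `IntervalJoinSketch`.

```python
from typing import Callable, Dict, List, Tuple

TEN_MINUTES_MS = 10 * 60 * 1000


class IntervalJoinSketch:
    """Inner join on tid where P.ts BETWEEN T.ts AND T.ts + 10 minutes."""

    def __init__(self) -> None:
        self.left: Dict[int, List[Tuple[int, int]]] = {}    # tid -> [(ts, amount)]
        self.right: Dict[int, List[Tuple[int, str]]] = {}   # tid -> [(ts, type)]
        self.watermarks = {"left": float("-inf"), "right": float("-inf")}

    def on_transaction(self, ts: int, tid: int, amount: int) -> List[Tuple[int, int, str]]:
        self.left.setdefault(tid, []).append((ts, amount))
        return [(tid, amount, ptype) for pts, ptype in self.right.get(tid, [])
                if ts <= pts <= ts + TEN_MINUTES_MS]

    def on_payment(self, ts: int, tid: int, ptype: str) -> List[Tuple[int, int, str]]:
        self.right.setdefault(tid, []).append((ts, ptype))
        return [(tid, amount, ptype) for tts, amount in self.left.get(tid, [])
                if tts <= ts <= tts + TEN_MINUTES_MS]

    def on_watermark(self, side: str, watermark: float) -> float:
        self.watermarks[side] = max(self.watermarks[side], watermark)
        combined = min(self.watermarks.values())
        # A transaction cannot match anymore once time passed ts + 10 minutes.
        self.left = self._keep(self.left, lambda ts: ts + TEN_MINUTES_MS > combined)
        # A payment cannot match anymore once time passed its own ts.
        self.right = self._keep(self.right, lambda ts: ts > combined)
        return combined

    @staticmethod
    def _keep(state: Dict[int, list], still_needed: Callable[[int], bool]) -> Dict[int, list]:
        kept = {}
        for key, rows in state.items():
            remaining = [row for row in rows if still_needed(row[0])]
            if remaining:
                kept[key] = remaining
        return kept

    def state_size(self) -> int:
        return (sum(len(rows) for rows in self.left.values())
                + sum(len(rows) for rows in self.right.values()))


join = IntervalJoinSketch()
join.on_transaction(0, 1, 100)
matched = join.on_payment(60_000, 1, "card")
size_before = join.state_size()
join.on_watermark("left", 700_000)    # right input has no watermark yet, nothing is dropped
join.on_watermark("right", 650_000)   # combined watermark is now 650000
size_after = join.state_size()
print(matched, size_before, size_after)
```

The state only shrinks once both inputs have reported progress, which mirrors the diagram where the slower watermark `W[11:55]` is the one that travels downstream.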

- 28. Example 3 – With updates, no watermarks
  - `INSERT INTO Matched SELECT T.tid, T.amount, P.type FROM Transaction T JOIN Payment P ON T.tid = P.tid WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;`
  - Logical Tree
    - `LogicalSink(table=[Matched], fields=[tid, amount, type])`
      - `LogicalProject(tid=[$1], amount=[$2], type=[$5])`
        - `LogicalFilter(condition=[AND(>=($3, $0), <=($3, +($0, 600000)))])`
          - `LogicalJoin(condition=[=($1, $4)], joinType=[inner])`
            - `LogicalTableScan(table=[Transaction])`
            - `LogicalTableScan(table=[Payment])`
  - Physical Tree
    - `Sink(table=[Matched], fields=[tid, amount, type], changelogMode=[NONE])`
      - `Calc(select=[tid, amount, type], changelogMode=[I,UA,D])`
        - `Join(joinType=[InnerJoin], where=[…], …, leftInputSpec=[JoinKeyContainsUniqueKey], changelogMode=[I,UA,D])`
          - `Exchange(distribution=[hash[tid]], changelogMode=[I,UA,D])`
            - `ChangelogNormalize(key=[tid], changelogMode=[I,UA,D])`
              - `Exchange(distribution=[hash[tid]], changelogMode=[I,UA,D])`
                - `TableSourceScan(table=[Transaction], fields=[ts, tid, amount], changelogMode=[I,UA,D])`
          - `Exchange(distribution=[hash[tid]], changelogMode=[I])`
            - `TableSourceScan(table=[Payment], fields=[ts, tid, type], changelogMode=[I])`

- 29. Example 3 – With updates, no watermarks
  - `INSERT INTO Matched SELECT T.tid, T.amount, P.type FROM Transaction T JOIN Payment P ON T.tid = P.tid WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;`
  - [Diagram: three parallel instances of the pipeline Kafka Reader (×2) → Join → Calc → Kafka Writer, plus a detail view of one instance that adds a Changelog Normalize step. State labels in the detail view: offset (one per Kafka Reader), last seen (Changelog Normalize), left side, right side, txn id, txn. Record labels for the insert flow: `+I[<T>]`, `+I[<P>]`, `+I[<T>, <P>]`, `+I[tid, amount, type]`, `Message<k, v>`. Record labels for the update flow: `+U[<T>]`, `+I[<P>]`, `+U[<T>,<P>]`, `+U[tid, amount, type]`, `Message<k, v>`.]
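The "last seen" state of the Changelog Normalize step can be illustrated with a short sketch. It is a simplified model built on assumptions, not the Flink operator: input events are tagged `"upsert"` or `"delete"`, rows are tuples, and the `emit_update_before` flag stands in for the result of changelog mode inference ("is update before necessary?").

```python
from typing import Dict, Hashable, List, Optional, Tuple

Event = Tuple[str, Hashable, Optional[tuple]]    # ("upsert" | "delete", key, row)
Change = Tuple[str, Hashable, tuple]             # ("+I" | "-U" | "+U" | "-D", key, row)


def normalize(events: List[Event], emit_update_before: bool) -> List[Change]:
    """Turn an upsert stream into a changelog using the last seen row per key."""
    last_seen: Dict[Hashable, tuple] = {}
    out: List[Change] = []
    for kind, key, row in events:
        previous = last_seen.get(key)
        if kind == "delete":
            if previous is not None:
                # The delete may carry only the key, so emit the stored row.
                del last_seen[key]
                out.append(("-D", key, previous))
        elif previous is None:
            last_seen[key] = row
            out.append(("+I", key, row))
        elif previous != row:
            if emit_update_before:
                out.append(("-U", key, previous))
            last_seen[key] = row
            out.append(("+U", key, row))
        # An upsert that repeats the last seen row produces no output.
    return out


events = [
    ("upsert", 1, (100,)),
    ("upsert", 1, (100,)),   # duplicate, swallowed
    ("upsert", 1, (120,)),
    ("delete", 1, None),
]
without_before = normalize(events, emit_update_before=False)
with_before = normalize(events, emit_update_before=True)
print(without_before)
print(with_before)
```

With `emit_update_before=False` the output corresponds to the `[I,UA,D]` changelog mode shown in the physical tree of the previous slide.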

- 30. Flink SQL on Confluent Cloud

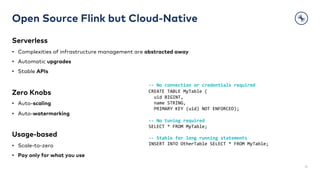

- 31. Open Source Flink but Cloud-Native
  - Serverless
    - Complexities of infrastructure management are abstracted away
    - Automatic upgrades
    - Stable APIs
  - Zero Knobs
    - Auto-scaling
    - Auto-watermarking
  - Usage-based
    - Scale-to-zero
    - Pay only for what you use
  - No connection or credentials required: `CREATE TABLE MyTable (uid BIGINT, name STRING, PRIMARY KEY (uid) NOT ENFORCED);`
  - No tuning required: `SELECT * FROM MyTable;`
  - Stable for long running statements: `INSERT INTO OtherTable SELECT * FROM MyTable;`

- 32. Open Source Flink but Cloud-Native & Complete
  - One Unified Platform
    - Kafka and Flink fully integrated
    - Automatic inference or manual creation of topics
    - Metadata management via Schema Registry: bidirectional for Avro, JSON, Protobuf
    - Consistent semantics across storage and processing: changelogs with append-only, upsert, retract
  - Confluent Cloud should feel like a database. But for streaming!
  - Confluent Cloud CLI, Confluent Cloud SQL Workspace

- 33. Thank you! Feel free to follow: @twalthr
