Getting Data into Splunk

- 1. Copyright © 2016 Splunk Inc. Getting Data In by Example Nimish Doshi Principal Systems Engineer

- 2. Assumption: Splunk Enterprise is installed. This presentation assumes that you have installed Splunk somewhere, have access to Splunk Enterprise, and have access to the data that is being sent.

- 3. Agenda: Files and Directories, Network Inputs, Scripted Inputs, HTTP Event Collector, Advanced Input Topics

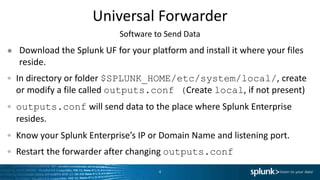

- 4. Universal Forwarder: Software to Send Data
  - Download the Splunk Universal Forwarder (UF) for your platform and install it where your files reside.
  - In the directory $SPLUNK_HOME/etc/system/local/, create or modify a file called outputs.conf (create the local directory if it is not present).
  - outputs.conf sends data to the place where Splunk Enterprise resides.
  - Know your Splunk Enterprise's IP address or domain name and its listening port.
  - Restart the forwarder after changing outputs.conf.

- 5. Example outputs.conf: the file that controls where data is sent

      # Default place where data is sent. It's just a name used in the next stanza.
      [tcpout]
      defaultGroup=my_indexers

      # Name of the server and its listening port (separate multiple servers with commas)
      [tcpout:my_indexers]
      server=mysplunk_indexer1:9997

      # Any optional properties for the indexer. Leave blank for now.
      [tcpout-server://mysplunk_indexer1:9997]

- 6. Set Up the Indexer to Receive Data: in Splunk Web, go to Settings -> Forwarding and Receiving -> Receive Data -> Add New.

- 7. inputs.conf: place this file in the same directory as outputs.conf
  - This file controls what data is monitored or collected by the forwarder.
  - Opt in: only data that is monitored will be sent to Splunk indexers.
  - If you make changes to the file manually, restart the forwarder.

- 8. Sample inputs.conf to monitor files. Always put in a sourcetype (and, optionally, an index)!

      # files starting with webfiles
      [monitor:///opt/logs/webfiles*]
      sourcetype=access_combined

      # files ending with .log in apache
      [monitor:///apache/*.log]
      sourcetype=access_combined

      # files loga.log or logb.log
      [monitor:///var/.../log(a|b).log]
      sourcetype=syslog
      index=IT

- 9. Sample inputs.conf to blacklist and whitelist files. Always put in a sourcetype!

      # blacklist blocks: ignore files ending with .txt or .gz
      [monitor:///mnt/logs]
      blacklist = \.(?:txt|gz)$
      sourcetype=my_app

      # whitelist allows: only files stdout.log and web.log in apache
      [monitor:///apache]
      whitelist = stdout\.log$|web\.log$
      sourcetype=access_combined

      # For better performance, prefer the ... or * wildcards followed by a regex over whitelist and blacklist
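To see which files a blacklist or whitelist would let through, the two patterns can be tried against a few file names before they go into inputs.conf. The sketch below is only an approximation: it uses Python's `re` module rather than Splunk's own regex engine, it writes the patterns with the dots escaped so that `.` matches a literal dot, and the helper name `is_monitored` and the sample paths are made up for illustration.

```python
import re

# Patterns in the style of the inputs.conf example, with literal dots escaped.
BLACKLIST = re.compile(r"\.(?:txt|gz)$")
WHITELIST = re.compile(r"stdout\.log$|web\.log$")


def is_monitored(path, whitelist=None, blacklist=None):
    """Return True if the path passes the whitelist (if any) and is not blacklisted."""
    if whitelist is not None and not whitelist.search(path):
        return False
    if blacklist is not None and blacklist.search(path):
        return False
    return True


if __name__ == "__main__":
    # Hypothetical file names under the two monitored directories.
    for path in ["/mnt/logs/app.log", "/mnt/logs/notes.txt", "/mnt/logs/old.gz"]:
        print(path, is_monitored(path, blacklist=BLACKLIST))
    for path in ["/apache/stdout.log", "/apache/web.log", "/apache/error.log"]:
        print(path, is_monitored(path, whitelist=WHITELIST))
```

A check like this is cheap to run before restarting a forwarder, since a pattern that is too broad silently drops files.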

- 10. Sample inputs.conf for Windows Events: inputs.conf on a Windows machine

      # Windows platform-specific input processor.
      # disabled=false and disabled=0 are the same instruction: enable the input
      [WinEventLog://Application]
      disabled = 0

      [WinEventLog://Security]
      disabled = 0

      [WinEventLog://System]
      disabled = 0

- 11. Line Breaking: the default line breaker for events is a newline (\r|\n)
  - How do you tell Splunk to break multi-line events correctly?
  - Create or modify a props.conf file on the machine indexing the data, in either the app's local directory or $SPLUNK_HOME/etc/system/local.
  - Contents of props.conf (use either of the two settings):

        [name of your sourcetype]
        BREAK_ONLY_BEFORE = regular expression matching the line that starts a new event
        LINE_BREAKER = (capturing-group regular expression matching the boundary between events)

  - LINE_BREAKER performs better than BREAK_ONLY_BEFORE.

- 12. Line Breaking Example: example props.conf and channel event data

      [channel_entry]
      BREAK_ONLY_BEFORE = ^channel

  Sample events:

      channel=documentaryfilm
      <video> <id> 120206922 </id> <video>
      channel=Indiefilm
      <video> <id> 120206923 </id> <video>
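The effect of `BREAK_ONLY_BEFORE = ^channel` on the sample data can be imitated in a few lines of Python. This is a rough model of the idea (start a new event at every line that matches the pattern), not Splunk's actual line-merging code; the function name `break_only_before` is invented for the sketch.

```python
import re

# The four sample lines from the slide, as they would arrive in one file.
SAMPLE = (
    "channel=documentaryfilm\n"
    "<video> <id> 120206922 </id> <video>\n"
    "channel=Indiefilm\n"
    "<video> <id> 120206923 </id> <video>\n"
)


def break_only_before(text, pattern):
    """Group lines into events, starting a new event at each line matching pattern."""
    regex = re.compile(pattern)
    events = []
    for line in text.splitlines():
        if regex.search(line) or not events:
            events.append([line])      # this line opens a new event
        else:
            events[-1].append(line)    # this line continues the current event
    return ["\n".join(lines) for lines in events]


EVENTS = break_only_before(SAMPLE, r"^channel")

if __name__ == "__main__":
    for number, event in enumerate(EVENTS, start=1):
        print("event", number)
        print(event)
```

With the sample data, the four lines are grouped into two events, each beginning with its `channel=` line.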

- 13. Timestamp Recognition: normally, Splunk recognizes timestamps automatically, but…
  - How do you tell Splunk to recognize custom timestamps?
  - Create or modify a props.conf file on the machine indexing the data, in either the app's local directory or $SPLUNK_HOME/etc/system/local.
  - Contents of props.conf:

        [name of your sourcetype]
        TIME_PREFIX = regular expression indicating where to find the timestamp
        TIME_FORMAT = *NIX strptime-style format (see the online docs for the format)
        MAX_TIMESTAMP_LOOKAHEAD = number of characters to look ahead for the timestamp

- 14. Timestamp Example: example props.conf and some event data

      [journal]
      TIME_PREFIX = Valid_Until=
      TIME_FORMAT = %b %d %H:%M:%S %Z%z %Y

  Sample event:

      …Valid_Until=Thu Dec 31 17:59:59 GMT-06:00 2020
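The prefix-then-format idea can be tried outside Splunk with Python's `datetime.strptime`, which uses similar directives. Treat this as a sketch under assumptions: Splunk's timestamp processor is not Python's, Python needs an explicit `%a` for the leading weekday ("Thu"), and parsing an offset written as `-06:00` with `%z` requires Python 3.7 or later. The function name `extract_timestamp` is hypothetical, and the leading `...` stands for the elided start of the event.

```python
import re
from datetime import datetime, timedelta

EVENT = "...Valid_Until=Thu Dec 31 17:59:59 GMT-06:00 2020"

TIME_PREFIX = r"Valid_Until="
# Adapted for Python: %a consumes the weekday that precedes the month.
PY_TIME_FORMAT = "%a %b %d %H:%M:%S %Z%z %Y"


def extract_timestamp(event, prefix=TIME_PREFIX, fmt=PY_TIME_FORMAT):
    """Find the prefix, then parse everything after it with the given format."""
    match = re.search(prefix, event)
    if match is None:
        return None
    return datetime.strptime(event[match.end():].strip(), fmt)


TIMESTAMP = extract_timestamp(EVENT)

if __name__ == "__main__":
    print(TIMESTAMP.isoformat())
```

The prefix narrows where parsing starts, which is the same job TIME_PREFIX does in props.conf.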

- 15. Agenda: Files and Directories, Network Inputs, Scripted Inputs, HTTP Event Collector, Advanced Input Topics

- 16. Network Inputs: a Splunk indexer or forwarder can listen for TCP or UDP data
  - Configure your inputs.conf to listen on a port for TCP or UDP data.
  - You can use Splunk Web itself to configure this on an indexer machine.
  - Restart the indexer or forwarder if you modified inputs.conf directly.

- 17. Example UDP inputs.conf: connectionless and prone to data loss

      [udp://514]
      sourcetype=cisco_asa

      [udp://515]
      sourcetype=pan_threat

- 18. Assign UDP sourcetype dynamically: all data goes through the same port

  inputs.conf:

      [udp://514]

  props.conf:

      [source::udp:514]
      TRANSFORMS-ASA=assign_ASA
      TRANSFORMS-PAN=assign_PAN

- 19. Use transforms.conf to assign the sourcetype: transforms.conf is in the same place as props.conf

      [assign_ASA]
      REGEX = ASA
      FORMAT = sourcetype::cisco_ASA
      DEST_KEY = MetaData:Sourcetype

      [assign_PAN]
      REGEX = pan\d+
      FORMAT = sourcetype::pan_threat
      DEST_KEY = MetaData:Sourcetype
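The routing logic of the two transforms can be pictured as a list of (regex, sourcetype) pairs applied to each raw event. The sketch below is a simplified model, not Splunk's transform pipeline: the second pattern is written as `pan\d+` on the assumption that a digit class was intended, the fallback value `unknown` is a placeholder, and the sample events are invented.

```python
import re

# (regex, sourcetype) pairs mirroring the assign_ASA and assign_PAN stanzas.
TRANSFORMS = [
    (re.compile(r"ASA"), "cisco_ASA"),
    (re.compile(r"pan\d+"), "pan_threat"),  # digit class is an assumption
]


def assign_sourcetype(raw_event, default="unknown"):
    """Apply every transform in order; a later match overrides an earlier one."""
    sourcetype = default
    for regex, candidate in TRANSFORMS:
        if regex.search(raw_event):
            sourcetype = candidate
    return sourcetype


if __name__ == "__main__":
    # Hypothetical events arriving on the shared UDP port.
    samples = [
        "Oct 11 05:18:14 fw1 %ASA-6-302013: Built outbound TCP connection",
        "Oct 11 05:18:15 pan01 THREAT url allowed",
        "Oct 11 05:18:16 switch7 link up",
    ]
    for event in samples:
        print(assign_sourcetype(event), "<-", event)
```

Patterns as short as `ASA` can match unrelated text, so in practice a more specific regex is usually worth the effort.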

- 20. Example TCP inputs.conf: connection oriented

      [tcp://remote_server:9998]
      sourcetype=cisco_asa

      # any server can connect to send data
      [tcp://9999]
      sourcetype=pan_threat

- 21. Best Practice to Collect from the Network: use an intermediary to collect the data to files, then forward the data from those files.

- 22. Agenda: Files and Directories, Network Inputs, Scripted Inputs, HTTP Event Collector, Advanced Input Topics

- 23. Using Scripted Input
  - Splunk's inputs.conf file can monitor the output of any script.
  - The script can be written in any language executable by the OS's shell.
  - Wrap the executable language in a top-level script, if necessary.
  - Each script is executed on an interval given in seconds.
  - The output of the script is captured by Splunk's indexer.
  - The output should be considered an event to be indexed.

- 24. Example inputs.conf

      [script:///opt/splunk/etc/apps/scripts/bin/top.sh]
      # run every 5 seconds
      interval = 5
      # set sourcetype to top
      sourcetype = top
      source = top_output
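A scripted input only has to print its events to standard output each time it runs. As a minimal sketch of such a script (not the `top.sh` from the slide), the following Python program prints one timestamped key=value line describing disk usage; the field names and the choice of disk usage as the metric are assumptions made for illustration.

```python
import shutil
import time


def format_event(epoch_seconds, path, usage):
    """Build one event line: a UTC timestamp followed by key=value pairs."""
    stamp = time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime(epoch_seconds))
    total, used, free = usage
    return "{} path={} total_bytes={} used_bytes={} free_bytes={}".format(
        stamp, path, total, used, free
    )


if __name__ == "__main__":
    # One run of the script emits one event; the interval setting decides how often it runs.
    print(format_event(time.time(), "/", shutil.disk_usage("/")))
```

Leading each line with a timestamp and using key=value pairs keeps the output easy to parse as events, and a small wrapper script can launch the program if the interpreter is not on the default path.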

- 25. inputs.conf for calling a Java program

      # weather.bat and weather.sh are wrappers. The first entry is for Windows; the second is for Unix.
      [script://.\bin\weather.bat]
      interval = 120
      sourcetype = weather_entry
      source = weather_xml
      disabled = false

      [script://./bin/weather.sh]
      interval = 120
      sourcetype = weather_entry
      source = weather_xml
      disabled = false

- 26. Example inputs.conf for a listener

      # Unix/Linux, called from a Splunk app
      [script://./bin/JMSReceive.sh]
      # -1 means start once and only once
      interval=-1
      sourcetype=JMS_event
      source=JMS_entry
      host=virtual_linux
      disabled=false

- 27. Example inputs.conf for Windows Perf

      # Collect CPU processor usage metrics on a Windows machine.
      # Splunk provides the perfmon input.
      [perfmon://Processor]
      object = Processor
      instances = _Total
      counters = % Processor Time;% User Time
      index = Perf
      interval = 30
      disabled = 0

- 28. Agenda: Files and Directories, Network Inputs, Scripted Inputs, HTTP Event Collector, Advanced Input Topics

- 30. HEC Set Up: set up via Splunk Web to get a token used by developers
  - Get a unique token for your developers to send events via HTTP/S.
  - Give the token to developers. Enable the token.
  - Developers log events via HTTP using a language that supports HTTP/S.
  - Data can go directly to an indexer machine or to an intermediary forwarder.
  - Logic should be built into the code to handle exceptions and retries.

- 31. Set up HEC Token: go to Settings -> Data Inputs -> HTTP Event Collector and click on it.

- 32. Enable HEC via Global Settings. Do this once.

- 33. Add a new HEC Token: name your token.

- 34. Configure HEC Token: give it a default index and sourcetype.

- 37. Test your token: curl can be used to send HTTP data

      > curl -k https://localhost:8088/services/collector/event -H "Authorization: Splunk 65123E77-86B1-4136-B955-E8CDD6A7D3B1" -d '{"event": "my log entry via HEC"}'
      {"text":"Success","code":0}

  - Notice the token in the Authorization: Splunk header.
  - The event can be sent as JSON or raw data, and the call returns success.
  - http://dev.splunk.com/view/event-collector/SP-CAAAE6P
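The same request can be made from application code, together with the retry logic that the HEC set-up slide asks for. The following is a minimal sketch using only Python's standard library, reusing the URL and sample token from the curl example; the function names, the retry count, and the back-off delay are assumptions. Unlike `curl -k`, `urllib` verifies certificates by default, so a self-signed test instance would need a custom opener.

```python
import json
import time
import urllib.request

HEC_URL = "https://localhost:8088/services/collector/event"
HEC_TOKEN = "65123E77-86B1-4136-B955-E8CDD6A7D3B1"  # sample token from the slide


def build_request(event, url=HEC_URL, token=HEC_TOKEN):
    """Wrap the event in the JSON envelope and attach the Splunk token header."""
    body = json.dumps({"event": event}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": "Splunk " + token},
        method="POST",
    )


def send_with_retries(event, opener=urllib.request.urlopen, retries=3, delay=1.0):
    """Send one event; retry on network or HTTP errors. Returns the reply or None."""
    for attempt in range(1, retries + 1):
        try:
            with opener(build_request(event), timeout=5) as response:
                return json.loads(response.read().decode("utf-8"))
        except OSError:  # URLError and HTTPError are subclasses of OSError
            if attempt < retries:
                time.sleep(delay * attempt)  # simple linear back-off
    return None


if __name__ == "__main__":
    print(send_with_retries("my log entry via HEC"))
```

Passing the opener as a parameter keeps the retry logic testable without a running Splunk instance. A caller that gets `None` back has to decide whether to buffer the event or drop it.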

- 38. Search Splunk for Sample HEC Sent Data

- 39. Sample Java code to send via HEC
  - Run with -Djava.util.logging.config.file=/path/to/jdklogging.properties

  jdklogging.properties:

      %user_logger_name%.level = INFO
      %user_logger_name%.handlers = com.splunk.logging.HttpEventCollectorLoggingHandler
      # Configure the com.splunk.logging.HttpEventCollectorLoggingHandler
      com.splunk.logging.HttpEventCollectorLoggingHandler.url = "https://mydomain:8088"
      com.splunk.logging.HttpEventCollectorLoggingHandler.level = INFO
      com.splunk.logging.HttpEventCollectorLoggingHandler.token = "65123E77-86B1-4136-B955-E8CDD6A7D3B1"
      com.splunk.logging.HttpEventCollectorLoggingHandler.disableCertificateValidation=true

  Java code:

      import java.util.logging.*;
      import com.splunk.logging.*;
      logger.info("This is a test event for Logback test");

- 40. Agenda: Files and Directories, Network Inputs, Scripted Inputs, HTTP Event Collector, Advanced Input Topics

- 41. Modular Input: wrap your scripted input into a modular input
  - A modular input allows you to package your input as a reusable framework.
  - A modular input provides validation of input and REST API access for administration, such as permission granting.
  - Modular inputs can be configured by your users via Splunk Web on an indexer or as new custom entries in inputs.conf.
  - See the Splunk docs for more details: http://docs.splunk.com/Documentation/Splunk/latest/AdvancedDev/ModInputsIntro

- 42. Sample Modular Input: GitHub input via Splunk Web

- 43. Splunk Stream: a Splunk-supported app that listens to a network TAP or SPAN for data
  - Captures wire data without the need for a forwarder on every endpoint.
    - The network must allow promiscuous read or provide certificates to decrypt.
    - Splunk Stream can still be placed on a machine to capture its network output.
  - Ingestion of payload can be controlled and filtered.
  - Works with a variety of protocols out of the box.
  - Ingests wire data into human-readable JSON format automatically.
  - See the Splunk docs for more details, and download from Splunkbase.

- 44. Configured Stream: sample protocol configuration

- 45. Thank You
