Greyhound - Powerful Pure Functional Kafka Library

- 1. Greyhound - A Powerful Pure Functional Kafka library. Natan Silnitsky, Backend Infra Developer, Wix.com. twitter @NSilnitsky, linkedin/natansilnitsky, github.com/natansil

- 2. Greyhound: a Scala/Java high-level SDK for Apache Kafka. Powered by ZIO. * features...

- 3. But first… a few Kafka terms

- 5. A few Kafka terms [diagram: a Kafka Producer, and a Kafka Broker holding several topics, each split into partitions]

- 6. A few Kafka terms [diagram: the Kafka Producer and one partition shown as an append-only log with offsets 0 to 5]

- 7. A few Kafka terms [diagram: Kafka Consumers and one partition shown with offsets 0 to 20]
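The terms above can be modelled in a few lines. This is only an in-memory sketch, written against the Scala standard library; `PartitionLogSketch`, `Partition`, `Topic`, and the `hashCode`-based partition choice are illustrative assumptions, not Kafka's or Greyhound's API (Kafka's own partitioner hashes the serialized key differently).

```scala
import scala.collection.mutable.ArrayBuffer

object PartitionLogSketch {
  // One partition: an append-only log. A record's offset is its index in the log.
  final class Partition[A] {
    private val log = ArrayBuffer.empty[A]

    // Append a record and return the offset it was stored at.
    def append(record: A): Long = {
      log += record
      (log.size - 1).toLong
    }

    // Read up to `max` records starting at `offset`, as a consumer poll would.
    def read(offset: Long, max: Int): Vector[A] =
      log.slice(offset.toInt, offset.toInt + max).toVector
  }

  // A topic is a fixed set of partitions; the record key selects the partition,
  // so records with the same key keep their relative order.
  final class Topic[A](partitionCount: Int) {
    val partitions: Vector[Partition[A]] =
      Vector.fill(partitionCount)(new Partition[A])

    // Hypothetical stand-in for a real partitioner.
    def partitionFor(key: String): Int =
      Math.floorMod(key.hashCode, partitionCount)

    // Returns (partition, offset) of the stored record.
    def produce(key: String, value: A): (Int, Long) = {
      val p = partitionFor(key)
      (p, partitions(p).append(value))
    }
  }
}
```

A consumer in this model is just a stored offset per partition: it reads from that offset, processes the records, and advances (commits) the offset.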

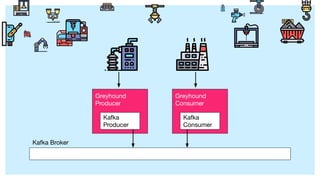

- 8. Greyhound wraps Kafka [diagram: Service A, Service B, Kafka Producer, Kafka Consumer, Kafka Broker]

- 9. Greyhound wraps Kafka: abstract it so that it is easy to change for everyone; simplify the APIs, with additional features [diagram: Service A, Service B, Kafka Producer, Kafka Consumer, Kafka Broker]

- 10. Greyhound wraps Kafka: multiple APIs for Java, Scala and Wix devs [diagram: Scala Future, ZIO and Java APIs, ZIO Core, Kafka Consumer, Kafka Producer, Kafka Broker, Service A, Service B] * all logic

- 11. Greyhound wraps Kafka [diagram: Scala Future, ZIO and Java APIs, ZIO Core, Wix Interop; labels: OSS, Private; Kafka Consumer, Kafka Producer, Kafka Broker, Service A, Service B]

- 12. Greyhound wraps Kafka. What do we want it to do? - Boilerplate [diagram: Service A, Service B, Kafka Producer, Kafka Consumer, Kafka Broker]

- 13. val consumer: KafkaConsumer[String, SomeMessage] = createConsumer() def pollProcessAndCommit(): Unit = { val consumerRecords = consumer.poll(1000).asScala consumerRecords.foreach(record => { println(s"Record value: ${record.value.messageValue}") }) consumer.commitAsync() pollProcessAndCommit() } pollProcessAndCommit() Kafka Consumer API * Broker location, serde

- 16. val handler: RecordHandler[Console, Nothing, String, SomeMessage] = RecordHandler { record => zio.console.putStrLn(record.value.messageValue) } GreyhoundConsumersBuilder .withConsumer(RecordConsumer( topic = "some-group", group = "group-2", handle = handler)) Greyhound Consumer API * No commit, wix broker location

- 17. Greyhound wraps Kafka. What do we want it to do? ✔ Simple Consumer API + Composable Record Handler (functional composition)

- 18. Composable record handler [diagram: Kafka Broker with the Site Published Topic, partitions with offsets 0 to 5; Greyhound Consumer containing a Kafka Consumer; Commit]

- 19. trait RecordHandler[-R, +E, K, V] { def handle(record: ConsumerRecord[K, V]): ZIO[R, E, Any] def contramap: RecordHandler def contramapM: RecordHandler def mapError: RecordHandler def withErrorHandler: RecordHandler def ignore: RecordHandler def provide: RecordHandler def andThen: RecordHandler def withDeserializers: RecordHandler } Composable Handler @NSilnitsky

- 20. trait RecordHandler[-R, +E, K, V] { def handle(record: ConsumerRecord[K, V]): ZIO[R, E, Any] def contramap: RecordHandler def contramapM: RecordHandler def mapError: RecordHandler def withErrorHandler: RecordHandler def ignore: RecordHandler def provide: RecordHandler def andThen: RecordHandler def withDeserializers: RecordHandler } Composable Handler @NSilnitsky * change type

- 21. def contramapM[R, E, K, V](f: ConsumerRecord[K2, V2] => ZIO[R, E, ConsumerRecord[K, V]]) : RecordHandler[R, E, K2, V2] = new RecordHandler[R, E, K2, V2] { override def handle(record: ConsumerRecord[K2, V2]): ZIO[R, E, Any] = f(record).flatMap(self.handle) } def withDeserializers(keyDeserializer: Deserializer[K], valueDeserializer: Deserializer[V]) : RecordHandler[R, Either[SerializationError, E], Chunk[Byte], Chunk[Byte]] = mapError(Right(_)).contramapM { record => record.bimapM( key => keyDeserializer.deserialize(record.topic, record.headers, key), value => valueDeserializer.deserialize(record.topic, record.headers, value) ).mapError(e => Left(SerializationError(e))) } Composable Handler @NSilnitsky

- 23. RecordHandler( (r: ConsumerRecord[String, Duration]) => putStrLn(s"duration: ${r.value.toMillis}")) .withDeserializers(StringSerde, DurationSerde) => RecordHandler[Console, scala.Either[SerializationError, scala.RuntimeException], Chunk[Byte], Chunk[Byte]] Composable Handler @NSilnitsky
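The composition idea on slides 19 to 23 can be tried out without ZIO. The following is a deliberately simplified sketch: `Handler`, `contramapE`, and `withDeserializer` are hypothetical names chosen for illustration, `Either` stands in for the ZIO effect, and a plain `String` stands in for `SerializationError`. It is not Greyhound's `RecordHandler`.

```scala
object HandlerSketch {
  // Simplified stand-in for a record handler: it consumes a value of type V
  // and either fails with an E or succeeds. No ZIO, no record metadata.
  final class Handler[E, V](val handle: V => Either[E, Unit]) {

    // Adapt the input type with a function that may itself fail
    // (the role contramapM plays on the slides).
    def contramapE[V2](f: V2 => Either[E, V]): Handler[E, V2] =
      new Handler[E, V2](v2 => f(v2).flatMap(handle))

    // Change the error type.
    def mapError[E2](f: E => E2): Handler[E2, V] =
      new Handler[E2, V](v => handle(v).left.map(f))

    // Lift a typed handler into one that accepts raw bytes.
    // Deserialization failures end up in Left, handler failures in Right.
    def withDeserializer(
        deserialize: Array[Byte] => Either[String, V]
    ): Handler[Either[String, E], Array[Byte]] =
      mapError[Either[String, E]](e => Right(e))
        .contramapE[Array[Byte]](bytes => deserialize(bytes).left.map(Left(_)))
  }
}
```

As on slide 23, the user writes a handler against a typed value, and composition widens both the input type (to bytes) and the error type (to an `Either` that also covers deserialization failures).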

- 24. Drill down [diagram: Kafka Broker with the Site Published Topic, partitions with offsets 0 to 5; Greyhound Consumer containing a Kafka Consumer]

- 25. [diagram: as on slide 24, with an Event loop inside the Greyhound Consumer]

- 26. [diagram: as on slide 24, with an Event loop, a Message Dispatcher and Workers inside the Greyhound Consumer]

- 27. Drill down [diagram: as on slide 26]

- 28. object EventLoop { def make[R](consumer: Consumer /*...*/): RManaged[Env, EventLoop[...]] = { val start = for { running <- Ref.make(true) fiber <- pollLoop(running, consumer/*...*/).forkDaemon } yield (fiber, running /*...*/) start.toManaged { case (fiber, running /*...*/) => for { _ <- running.set(false) // ... } yield () } } EventLoop Polling

- 29. object EventLoop { def make[R](consumer: Consumer /*...*/): RManaged[Env, EventLoop[...]] = { val start = for { running <- Ref.make(true) fiber <- pollLoop(running, consumer/*...*/).forkDaemon } yield (fiber, running /*...*/) start.toManaged { case (fiber, running /*...*/) => for { _ <- running.set(false) // ... } yield () } } EventLoop Polling @NSilnitsky * dispatcher.shutdown

- 30. object EventLoop { def make[R](consumer: Consumer /*...*/): RManaged[Env, EventLoop[...]] = { val start = for { running <- Ref.make(true) fiber <- pollLoop(running, consumer/*...*/).forkDaemon } yield (fiber, running /*...*/) start.toManaged { case (fiber, running /*...*/) => for { _ <- running.set(false) // ... } yield () } } EventLoop Polling * mem leak@NSilnitsky

- 32. def pollLoop[R1](running: Ref[Boolean], consumer: Consumer // ... ): URIO[R1 with GreyhoundMetrics, Unit] = running.get.flatMap { case true => for { //... _ <- pollAndHandle(consumer /*...*/) //... result <- pollLoop(running, consumer /*...*/) } yield result case false => ZIO.unit } TailRec in ZIO @NSilnitsky

- 33. def pollLoop[R1](running: Ref[Boolean], consumer: Consumer // ... ): URIO[R1 with GreyhoundMetrics, Unit] = running.get.flatMap { case true => // ... pollAndHandle(consumer /*...*/) // ... .flatMap(_ => pollLoop(running, consumer /*...*/) .map(result => result) ) case false => ZIO.unit } TailRec in ZIO https://github.com/oleg-py/better-monadic-for @NSilnitsky

- 34. object EventLoop { def make[R](consumer: Consumer /*...*/): RManaged[Env, EventLoop[...]] = { val start = for { running <- Ref.make(true) fiber <- pollOnce(running, consumer/*, ...*/) .doWhile(_ == true).forkDaemon } yield (fiber, running /*...*/) start.toManaged { case (fiber, running /*...*/) => for { _ <- running.set(false) // ... } yield () } TailRec in ZIO @NSilnitsky

- 35. object EventLoop { type Handler[-R] = RecordHandler[R, Nothing, Chunk[Byte], Chunk[Byte]] def make[R](handler: Handler[R] /*...*/): RManaged[Env, EventLoop[...]] = { val start = for { // ... handle = handler.andThen(offsets.update).handle(_) dispatcher <- Dispatcher.make(handle, /**/) // ... } yield (fiber, running /*...*/) } def pollOnce(/*...*/) = { // poll and handle... _ <- offsets.commit Commit Offsets @NSilnitsky * old -> pass down

- 36. Greyhound wraps Kafka. What do we want it to do? ✔ Simple Consumer API ✔ Composable Record Handler + Parallel Consumption!

- 37. val consumer: KafkaConsumer[String, SomeMessage] = createConsumer() def pollProcessAndCommit(): Unit = { val consumerRecords = consumer.poll(1000).asScala consumerRecords.foreach(record => { println(s"Record value: ${record.value.messageValue}") }) consumer.commitAsync() pollProcessAndCommit() } pollProcessAndCommit() Kafka Consumer API

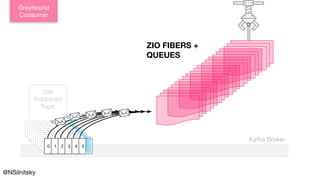

- 38. ZIO fibers + queues [diagram: Kafka Broker with the Site Published Topic, partitions with offsets 0 to 5; Greyhound Consumer]

- 39. (Thread-safe) parallel consumption [diagram: Kafka Broker with the Site Published Topic; Greyhound Consumer containing a Kafka Consumer, an Event loop, a Message Dispatcher and Workers]

- 40. object Dispatcher { def make[R](handle: Record => URIO[R, Any]): UIO[Dispatcher[R]] = for { // ... workers <- Ref.make(Map.empty[TopicPartition, Worker]) } yield new Dispatcher[R] { override def submit(record: Record): URIO[..., SubmitResult] = for { // ... worker <- workerFor(TopicPartition(record)) submitted <- worker.submit(record) } yield // … } } Parallel Consumption @NSilnitsky

- 41. object Dispatcher { def make[R](handle: Record => URIO[R, Any]): UIO[Dispatcher[R]] = for { // ... workers <- Ref.make(Map.empty[TopicPartition, Worker]) } yield new Dispatcher[R] { override def submit(record: Record): URIO[..., SubmitResult] = for { // ... worker <- workerFor(TopicPartition(record)) submitted <- worker.submit(record) } yield // … } } Parallel Consumption * lazily @NSilnitsky

- 42. object Worker { def make[R](handle: Record => URIO[R, Any], capacity: Int,/*...*/): URIO[...,Worker] = for { queue <- Queue.dropping[Record](capacity) _ <- // simplified queue.take.flatMap { record => handle(record).as(true) }.doWhile(_ == true).forkDaemon } yield new Worker { override def submit(record: Record): UIO[Boolean] = queue.offer(record) // ... } } Parallel Consumption @NSilnitsky

- 43. object Worker { def make[R](handle: Record => URIO[R, Any], capacity: Int,/*...*/): URIO[...,Worker] = for { queue <- Queue.dropping[Record](capacity) _ <- // simplified queue.take.flatMap { record => handle(record).as(true) }.doWhile(_ == true).forkDaemon } yield new Worker { override def submit(record: Record): UIO[Boolean] = queue.offer(record) // ... } } Parallel Consumption * semaphore @NSilnitsky

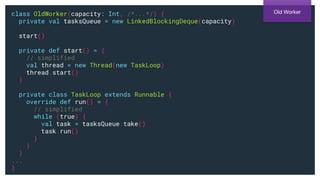

- 45. class OldWorker(capacity: Int, /*...*/) { private val tasksQueue = new LinkedBlockingDeque(capacity) start() private def start() = { // simplified val thread = new Thread(new TaskLoop) thread.start() } private class TaskLoop extends Runnable { override def run() = { // simplified while (true) { val task = tasksQueue.take() task.run() } } } ... } Old Worker

- 46. class OldWorker(capacity: Int, /*...*/) { private val tasksQueue = new LinkedBlockingDeque(capacity) start() private def start() = { // simplified val thread = new Thread(new TaskLoop) thread.start() } private class TaskLoop extends Runnable { override def run() = { // simplified while (true) { val task = tasksQueue.take() task.run() } } } ... } Old Worker * resource, context, maxPar
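The dispatcher and worker idea on slides 40 to 46 (one worker per partition, created lazily, records of one partition handled in order) can be sketched with plain JVM threads. This is an assumption-laden illustration: `DispatcherSketch`, `Record`, and `Dispatcher` are made-up names, a single-thread executor plays the role of the worker, and its internal queue is unbounded, unlike the bounded `Queue.dropping` on the slides.

```scala
import java.util.concurrent.{ExecutorService, Executors, TimeUnit}
import scala.collection.mutable

object DispatcherSketch {
  // Hypothetical record type: just enough to route and to check ordering.
  final case class Record(partition: Int, offset: Long, value: String)

  final class Dispatcher(handle: Record => Unit) {
    // One single-threaded worker per partition, created lazily on first use.
    private val workers = mutable.Map.empty[Int, ExecutorService]

    // Route the record to its partition's worker. Records of one partition
    // are handled sequentially; different partitions run in parallel.
    def submit(record: Record): Unit = {
      val worker = synchronized {
        workers.getOrElseUpdate(record.partition, Executors.newSingleThreadExecutor())
      }
      worker.execute(() => handle(record))
    }

    // Stop accepting work and wait for already submitted records to finish.
    def shutdown(): Unit = synchronized {
      workers.values.foreach(_.shutdown())
      workers.values.foreach(_.awaitTermination(10, TimeUnit.SECONDS))
    }
  }
}
```

Compared with this thread-per-partition version, the ZIO version on the slides replaces each thread with a fiber and the executor's queue with a ZIO `Queue`, which is what makes a large number of partitions cheap.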

- 47. Greyhound wraps Kafka. What do we want it to do? ✔ Simple Consumer API ✔ Composable Record Handler ✔ Parallel Consumption + Retries! ...what about error handling?

- 48. val retryConfig = RetryConfig.nonBlocking( 1.second, 10.minutes) GreyhoundConsumersBuilder .withConsumer(GreyhoundConsumer( topic = "some-group", group = "group-2", handle = handler, retryConfig = retryConfig)) Non-blocking Retries

- 49. Failed processing [diagram: Kafka Broker with renew-sub-topic, partitions with offsets 0 to 5; Greyhound Consumer containing a Kafka Consumer]

- 50. Retry! Inspired by Uber [diagram: Kafka Broker with renew-sub-topic, renew-sub-topic-retry-0 and renew-sub-topic-retry-1; Greyhound Consumer containing a Kafka Consumer and a retry producer]

- 51. Retry! Inspired by Uber [diagram: as on slide 50]
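One way to read the retry-topic diagram is as a routing decision: a record that fails on the original topic is produced to the first retry topic, and a record that fails on retry topic N goes to retry topic N+1 until the backoffs run out. The sketch below encodes only that decision. The `-retry-N` suffix comes from the slides; `RetryRoutingSketch`, `onFailure`, `RetryLater`, and `GiveUp` are hypothetical names, and how Greyhound itself names topics or applies the delay is not shown here.

```scala
import scala.concurrent.duration._

object RetryRoutingSketch {
  sealed trait Decision
  // Produce the failed record to `topic`; it should be handled after `backoff`.
  final case class RetryLater(topic: String, backoff: FiniteDuration) extends Decision
  // All configured retries have been used up.
  case object GiveUp extends Decision

  private val RetryTopic = """(.+)-retry-(\d+)""".r

  // Given the topic a failed record was consumed from, decide where it goes next.
  def onFailure(topic: String, backoffs: Vector[FiniteDuration]): Decision = {
    val (original, nextAttempt) = topic match {
      case RetryTopic(base, attempt) => (base, attempt.toInt + 1)
      case _                         => (topic, 0)
    }
    if (nextAttempt < backoffs.size)
      RetryLater(s"$original-retry-$nextAttempt", backoffs(nextAttempt))
    else GiveUp
  }
}
```

Because the failed record moves to a different topic, the consumer can commit and continue with the next record on the original partition, which is what makes this policy non-blocking.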

- 52. Blocking policy handler: retries the same message on failure [diagram: Kafka Broker with source-control-update-topic, partitions with offsets 0 to 5; build-log-service with a Greyhound Consumer containing a Kafka Consumer]

- 53. val retryConfig = RetryConfig.finiteBlocking( 1.second, 1.minutes) GreyhoundConsumersBuilder .withConsumer(GreyhoundConsumer( topic = "some-group", group = "group-2", handle = handler, retryConfig = retryConfig)) Blocking Retries * exponential

- 54. Blocking policy handler: retries the same message on failure * lag [diagram: as on slide 52]

- 56. handle() .retry( Schedule.doWhile(_ => shouldBlock(blockingStateRef)) && Schedule.fromDurations(blockingBackoffs) ) First Approach

- 57. handle() .retry( Schedule.doWhile(_ => shouldBlock(blockingStateRef)) && Schedule.fromDurations(blockingBackoffs) ) First Approach Doesn’t allow delay interruptions

- 58. foreachWhile(blockingBackoffs) { interval => handleAndBlockWithPolling(interval, blockingStateRef) } Current Solution

- 59. foreachWhile(blockingBackoffs) { interval => handleAndBlockWithPolling(interval, blockingStateRef) } Current Solution Checks blockingState between short sleeps Allows user request to unblock

- 60. foreachWhile(blockingBackoffs) { interval => handleAndBlockWithPolling(interval, blockingStateRef) } Current Solution def foreachWhile[R, E, A](as: Iterable[A])(f: A => ZIO[R, E, Boolean]): ZIO[R, E, Unit] = ZIO.effectTotal(as.iterator).flatMap { i => def loop: ZIO[R, E, Unit] = if (i.hasNext) f(i.next).flatMap(result => if(result) loop else ZIO.unit) else ZIO.unit loop }

- 61. foreachWhile(blockingBackoffs) { interval => handleAndBlockWithPolling(interval, blockingStateRef) } Current Solution def foreachWhile[R, E, A](as: Iterable[A])(f: A => ZIO[R, E, Boolean]): ZIO[R, E, Unit] = ZIO.effectTotal(as.iterator).flatMap { i => def loop: ZIO[R, E, Unit] = if (i.hasNext) f(i.next).flatMap(result => if(result) loop else ZIO.unit) else ZIO.unit loop } Stream.fromIterable(blockingBackoffs).foreachWhile(...)
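The "short sleeps with a state check in between" idea from slides 58 to 61 can be shown without ZIO. This is a blocking, thread-based sketch under stated assumptions: an `AtomicBoolean` stands in for `blockingStateRef`, `sleepWhileBlocking` and `retryBlocking` are hypothetical helpers, and the 10 ms slice length is arbitrary. Only `foreachWhile` mirrors a name from the slides, here in a non-effectful form.

```scala
import java.util.concurrent.atomic.AtomicBoolean

object BlockingRetrySketch {
  // Run `f` over `as` in order, stopping as soon as it returns false.
  def foreachWhile[A](as: Iterable[A])(f: A => Boolean): Unit = {
    val it = as.iterator
    var keepGoing = true
    while (keepGoing && it.hasNext) keepGoing = f(it.next())
  }

  // Sleep for up to `totalMillis` in short slices, returning early
  // as soon as `shouldBlock` is switched off.
  def sleepWhileBlocking(totalMillis: Long, sliceMillis: Long, shouldBlock: AtomicBoolean): Unit = {
    var remaining = totalMillis
    while (remaining > 0 && shouldBlock.get()) {
      val step = math.min(sliceMillis, remaining)
      Thread.sleep(step)
      remaining -= step
    }
  }

  // Try `handle` once, then once more after each backoff, for as long as
  // blocking is still requested. Returns true if the handler succeeded.
  def retryBlocking(backoffsMillis: Seq[Long], shouldBlock: AtomicBoolean)(handle: () => Boolean): Boolean = {
    var succeeded = handle()
    foreachWhile(backoffsMillis) { interval =>
      if (succeeded || !shouldBlock.get()) false
      else {
        sleepWhileBlocking(interval, 10L, shouldBlock)
        if (shouldBlock.get()) succeeded = handle()
        !succeeded && shouldBlock.get()
      }
    }
    succeeded
  }
}
```

Switching `shouldBlock` to `false` from another thread ends a long backoff within one slice, which is the property the slides say a single scheduled delay does not give.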

- 62. Greyhound wraps Kafka. What do we want it to do? ✔ Simple Consumer API ✔ Composable Record Handler ✔ Parallel Consumption ✔ Retries + Resilient Producer. And when Kafka brokers are unavailable...

- 63. Use case: guarantee completion + retry on error [diagram: Job Scheduler, Producer, Kafka Broker, Consumer, Wix Payments Service; subscription renewal]

- 64. Use case: guarantee completion + retry on error [diagram: Job Scheduler, Producer, Kafka Broker, Consumer, Wix Payments Service]

- 66. Fails to produce: save message to disk [diagram: Job Scheduler, Producer, Kafka Broker, Wix Payments Service]

- 67. Fails to produce: save message to disk, retry on failure [diagram: Job Scheduler, Producer, Kafka Broker, Wix Payments Service]
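The save-then-retry behaviour on slides 66 and 67 might look roughly like this. It is a sketch, not Greyhound's resilient producer: `ResilientProducerSketch`, `Message`, and `retryPending` are hypothetical, an in-memory queue stands in for the on-disk store, the class is not thread-safe, and buffering new messages behind pending ones (to keep ordering) is a design choice made for this example only.

```scala
import scala.collection.mutable
import scala.util.Try

object ResilientProducerSketch {
  final case class Message(topic: String, payload: String)

  // `send` is whatever actually talks to the broker; it may fail.
  final class ResilientProducer(send: Message => Try[Unit]) {
    // Stand-in for the on-disk store from the slides.
    private val pending = mutable.Queue.empty[Message]

    // Try to send right away; on failure (or if older messages are still
    // waiting) keep the message for a later retry.
    def produce(message: Message): Unit =
      if (pending.nonEmpty || send(message).isFailure) pending.enqueue(message)

    // Re-send buffered messages in order, stopping at the first failure.
    // Returns how many messages were sent.
    def retryPending(): Int = {
      var sent = 0
      while (pending.nonEmpty && send(pending.head).isSuccess) {
        pending.dequeue()
        sent += 1
      }
      sent
    }

    def pendingCount: Int = pending.size
  }
}
```

In a real service `retryPending` would run on a schedule, and the store would need to survive a process restart for the "guarantee completion" use case to hold.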

- 68. Greyhound wraps Kafka. What do we want it to do? ✔ Simple Consumer API ✔ Composable Record Handler ✔ Parallel Consumption ✔ Retries ✔ Resilient Producer + Context Propagation. Super cool for us

- 69. Context propagation: user request metadata (language, user type, geo, ...) [diagram: Browser, sign up, Site-Members Service]

- 71. Context propagation [diagram: Browser, Site-Members Service, Producer, Kafka Broker, Consumer, Contacts Service]

- 72. context = contextFrom(record.headers, token) handler.handle(record).provideSomeLayer[UserEnv](Context.layerFrom(context)) Context Propagation

- 73. context = contextFrom(record.headers, token) handler.handle(record, controller).provideSomeLayer[UserEnv](Context.layerFrom(context)) RecordHandler((r: ConsumerRecord[String, Contact]) => for { context <- Context.retrieve _ <- ContactsDB.write(r.value, context) } yield () => RecordHandler[ContactsDB with Context, Throwable, Chunk[Byte], Chunk[Byte]] Context Propagation

- 74. Greyhound wraps Kafka: more features. ✔ Simple Consumer API ✔ Composable Record Handler ✔ Parallel Consumption ✔ Retries ✔ Resilient Producer ✔ Context Propagation ✔ Pause/resume consumption ✔ Metrics reporting

- 75. Greyhound wraps Kafka. ✔ Simple Consumer API ✔ Composable Record Handler ✔ Parallel Consumption ✔ Retries ✔ Resilient Producer ✔ Context Propagation ✔ Pause/resume consumption ✔ Metrics reporting. Future plans: + Batch Consumer + Exactly Once Processing + In-Memory KV Stores. Will be much simpler with ZIO

- 77. Greyhound use cases at Wix: Pub/Sub, CDC, offline scheduled jobs, DB (Elastic Search) replication, action retries, materialized views

- 78. Rewriting Greyhound in ZIO resulted in: much less boilerplate, code that's easier to understand, fun

- 79. Sometimes you can't do exactly what you want with high-level ZIO operators... but ZIO offers lower-level abstractions too, like Promise and clock.sleep.

- 80. A Scala/Java high-level SDK for Apache Kafka. 0.1 is out! github.com/wix/greyhound

- 81. Thank You. twitter @NSilnitsky, linkedin/natansilnitsky, github.com/natansil
