Hierarchical Reinforcement Learning

- 1. Hierarchical Reinforcement Learning. David Jardim & Luís Nunes, ISCTE-IUL, 2009/2010

- 3. Outline 1/2: Planning Process; The Problem and Motivation; Reinforcement Learning; Markov Decision Process; Q-Learning; Hierarchical Reinforcement Learning; Why HRL?; Approaches

- 4. Outline 2/2: Semi-Markov Decision Process; Options; Until Now; Next Step - Simbad; Limitations of HRL; Future Work on HRL; Questions; References

- 5. Planning Process

- 6. The Problem and Motivation: LEGO Mindstorms robot with sensors, actuators and noise. The purpose is to collect “bricks” and assemble them according to a plan. Decompose the global problem into sub-problems. Try to solve the problem by implementing well-known RL and HRL techniques. (Image: LEGO_Mindstorms_NXT_mini.jpg @ http://lambcutlet.org/images/)

- 7. Reinforcement Learning: A computational approach to learning. An agent tries to maximize the reward it receives when an action is taken. It interacts with a complex, uncertain environment and learns how to map situations to actions. @ R. S. Sutton, Reinforcement Learning: An Introduction (MIT Press, 1998).

- 8. Markov Decision Process: A finite MDP is defined by a finite set of states S and a finite set of actions A. @ http://en.wikipedia.org/wiki/Markov_decision_process
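The two quantities that complete the definition of a finite MDP are the transition probabilities and the expected immediate rewards. Assuming the notation of Sutton's book cited on the previous slide (an assumption about the form used here), they are usually written as:

```latex
\begin{align}
\mathcal{P}^{a}_{ss'} &= \Pr\{\, s_{t+1} = s' \mid s_t = s,\ a_t = a \,\} \\
\mathcal{R}^{a}_{ss'} &= E\{\, r_{t+1} \mid s_t = s,\ a_t = a,\ s_{t+1} = s' \,\}
\end{align}
```

Here $s, s' \in S$, $a \in A$, and $r_{t+1}$ is the reward received after the transition.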

- 9. Q-Learning [Watkins, C.J.C.H. ’89]: An agent with a state set S and an action set A performs an action a in order to change its state. A reward is provided by the environment. The goal of the agent is to maximize its total reward. @ http://en.wikipedia.org/wiki/Q-learning
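The loop described on this slide can be sketched as tabular Q-learning. This is a minimal sketch, not the authors' implementation: the five-state corridor, the `corridor_step` function, and all hyperparameter values are assumptions chosen only for illustration.

```python
import random
from collections import defaultdict


def epsilon_greedy(q, state, actions, epsilon, rng):
    """Pick a random action with probability epsilon, else a greedy one."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    best = max(q[(state, a)] for a in actions)
    # Break ties randomly so early episodes still explore.
    return rng.choice([a for a in actions if q[(state, a)] == best])


def q_learning(step, start, actions, episodes=200, alpha=0.1,
               gamma=0.9, epsilon=0.1, seed=0, max_steps=1000):
    """Tabular Q-learning; `step(state, action)` returns (next_state, reward, done)."""
    rng = random.Random(seed)
    q = defaultdict(float)  # Q(s, a), initialised to 0
    for _ in range(episodes):
        state = start
        for _ in range(max_steps):
            action = epsilon_greedy(q, state, actions, epsilon, rng)
            next_state, reward, done = step(state, action)
            # One-step target: r + gamma * max_a' Q(s', a'), or just r at the goal.
            target = reward
            if not done:
                target += gamma * max(q[(next_state, a)] for a in actions)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
            if done:
                break
    return q


def corridor_step(state, action):
    """Hypothetical toy environment: states 0..4, reward 1 on reaching state 4."""
    next_state = min(max(state + action, 0), 4)
    done = next_state == 4
    return next_state, (1.0 if done else 0.0), done


q = q_learning(corridor_step, start=0, actions=[-1, +1])
```

After training, moving right should be valued higher than moving left in every non-terminal state of the corridor.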

- 10. Why HRL? Improve performance. Plain RL cannot be applied to problems with large state/action spaces (curse of dimensionality). Sub-goals and abstract actions can be reused across different tasks (state abstraction). Multiple levels of temporal abstraction. Obtain state abstraction.

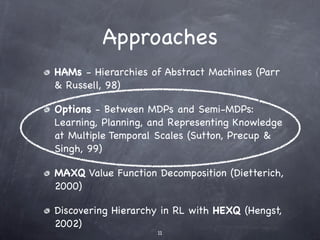

- 11. Approaches: HAMs - Hierarchies of Abstract Machines (Parr & Russell, 98); Options - Between MDPs and Semi-MDPs: Learning, Planning, and Representing Knowledge at Multiple Temporal Scales (Sutton, Precup & Singh, 99); MAXQ Value Function Decomposition (Dietterich, 2000); Discovering Hierarchy in RL with HEXQ (Hengst, 2002)

- 13. Semi-Markov Decision Process: An SMDP consists of a set of states S, a set of actions A, and, for each state-action pair, an expected cumulative discounted reward and a well-defined joint distribution of the next state and transit time.
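Because an SMDP action can last several time steps, the one-step Q-learning update has to discount by the action's duration. A common form, following the SMDP Q-learning rule discussed by Sutton, Precup & Singh (1999), is sketched below; the symbols $k$, $r$ and $\alpha$ are not defined on the slide and are assumptions of this sketch.

```latex
Q(s, o) \leftarrow Q(s, o) + \alpha \left[ r + \gamma^{k} \max_{o'} Q(s', o') - Q(s, o) \right]
```

Here $o$ is the temporally extended action started in state $s$, $k$ is the number of steps it took before terminating in $s'$, and $r$ is the cumulative discounted reward collected over those $k$ steps.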

- 14. Options [Sutton, Precup & Singh ’99]: An Option is defined by a policy π: S × A → [0,1], a termination condition β: S⁺ → [0,1], and an initiation set I ⊆ S. It is hierarchical and is used to reach sub-goals.
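The three components of an Option map naturally onto a small data structure. The following is a minimal sketch under stated assumptions, not the authors' code: the `Option` class, `run_option`, the corridor environment, and the `go_right` option are all hypothetical names introduced for illustration.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet


@dataclass(frozen=True)
class Option:
    initiation_set: FrozenSet[int]             # I, the states where the option may start
    policy: Callable[[int], Dict[int, float]]  # pi(s, .), as action -> probability
    termination: Callable[[int], float]        # beta(s), probability of stopping in s

    def can_start(self, state: int) -> bool:
        return state in self.initiation_set


def run_option(option, state, step, rng, gamma=0.9, max_steps=100):
    """Follow the option's policy until beta says stop.

    Returns (final_state, cumulative_discounted_reward, duration_in_steps).
    """
    if not option.can_start(state):
        raise ValueError(f"option cannot be initiated in state {state}")
    total, discount, k = 0.0, 1.0, 0
    while k < max_steps:
        probs = option.policy(state)
        action = rng.choices(list(probs), weights=list(probs.values()))[0]
        state, reward = step(state, action)
        total += discount * reward
        discount *= gamma
        k += 1
        if rng.random() < option.termination(state):
            break
    return state, total, k


def corridor_step(state, action):
    """Hypothetical environment: states 0..4, reward 1 on reaching state 4."""
    next_state = min(max(state + action, 0), 4)
    return next_state, (1.0 if next_state == 4 else 0.0)


# An option that walks right until it reaches the sub-goal state 4.
go_right = Option(
    initiation_set=frozenset({0, 1, 2, 3}),
    policy=lambda s: {+1: 1.0},
    termination=lambda s: 1.0 if s == 4 else 0.0,
)

result = run_option(go_right, 0, corridor_step, random.Random(0))
```

The returned duration and cumulative discounted reward are the quantities an SMDP-style learner needs in order to treat the whole option as a single, temporally extended action.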

- 15. Until Now (figures: options O1 and O2)

- 16. Until Now (two plots of steps versus episodes: @ Sutton, Precup & Singh ’99 and @ My Simulation)

- 17. Next Step - Simbad: Java 3D robot simulator. 3D visualization and sensing. Range sensors: sonars and IR. Contact sensors: bumpers. @ http://simbad.sourceforge.net/ It will allow us to simulate and learn first, and then transfer the learning to our LEGO Mindstorms robot.

- 18. Limitations of HRL: The effectiveness of these ideas on large and complex continuous control tasks has yet to be demonstrated. Sub-goals are assigned manually. Some of the existing algorithms only work well for the problem they were designed to solve.

- 19. Future Work on HRL: Automated discovery of state abstraction. Find the best automated way to discover sub-goals to associate with Options. Obtain a long-lived learning agent that faces a continued series of tasks and keeps evolving.

- 20. Questions?

- 22. References
  - R. S. Sutton. Reinforcement Learning: An Introduction. MIT Press, 1998.
  - R. Parr and S. Russell. Reinforcement learning with hierarchies of machines. In Advances in Neural Information Processing Systems: Proceedings of the 1997 Conference, Cambridge, MA, 1998. MIT Press.
  - R. S. Sutton, D. Precup, and S. Singh. Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning. Artificial Intelligence, 112:181–211, 1999.
  - T. G. Dietterich. Hierarchical reinforcement learning with the MAXQ value function decomposition. Journal of Artificial Intelligence Research, 13:227–303, 2000.
  - B. Hengst. Discovering hierarchy in reinforcement learning with HEXQ. In Machine Learning: Proceedings of the Nineteenth International Conference on Machine Learning, 2002.

Editor's Notes

- #2: Good afternoon. I am here to talk to you about my dissertation project, which falls within the area of hierarchical reinforcement learning.

- #4: During this presentation I will cover the problem and its motivation, and define reinforcement learning and its mathematical framework. I will talk briefly about Q-Learning and then go deeper into Hierarchical Reinforcement Learning.

- #5: I will show some of the work developed so far and define the next steps.

- #7: The aim is to simulate a robot whose goal is to fetch bricks and arrange them according to a plan. I will try to divide the problem into several tasks, e.g. how to find the brick, how to push the brick... In a later phase, the same will be done with a real robot in a real setting. The question is how far the known reinforcement learning and hierarchical reinforcement learning techniques can take us towards solving the problem, starting from a simplification and adding complexity progressively.

- #8: In computing, reinforcement learning is an approach to learning in which an agent, upon executing an action, receives a reward and changes its state. Over time, that reward makes it possible to map states to actions and build a policy. That policy allows the agent to solve the problem it was given.

- #9: If a reinforcement learning task satisfies the Markov property and has finite sets of states and actions, then we can say that the task is a finite Markov Decision Process. For any state and action, the probability of each possible next state is defined by the 1st equation. The 2nd equation gives the expected immediate reward after transitioning to the next state with the probability defined above.

- #10: It was a very important discovery for the field. It can be seen as an agent that chooses an action according to a policy, then executes that action, receives a reward and transitions to a new state, updating the quality of the executed action in the corresponding state.

- #11: Reinforcement learning has some limitations: depending on the complexity of the problem, learning can prove impracticable. The more complex the problem, the larger the sets of states and actions. Hierarchical reinforcement learning can be used to speed up the learning process, reduce the amount of resources required (memory), and reuse what has been learned in different tasks (state abstraction). In this way it becomes possible to solve some problems that were otherwise intractable.

- #12: HAMs - A hierarchy of finite state machines, organized in increasing order of complexity, where the top-level machines are composed of the underlying machines, down to the machines that execute the primitive actions. MAXQ - Treats the problem as a set of simultaneous Q-Learning problems. It can decompose the policy so as to reuse parts that repeat. It implements several types of state abstraction. HEXQ - Tries to decompose an MDP by dividing the state space into nested sub-MDP regions and then tries to solve the problem for each of the regions.

- #13: Semi-Markov Decision Processes are considered a special kind of MDP, appropriate for modelling discrete-event systems in continuous time. The main difference is that in this case actions can take variable amounts of time, so that they can model temporally extended actions.

- #14: This was the approach chosen as the basis for the work to be developed because, compared with the other approaches, it proved the most flexible in terms of the problems to which it can be applied. Policy: the set of primitive actions that define the composite action. Termination condition: the probability equals 1 when, for example, the agent changes rooms. Initiation set: the set of states where the Option can be started (a room). The Option is created through Q-Learning.

- #15: To obtain a solid base of knowledge about Options, I tried to replicate the study carried out by the authors, and this was my implementation. The 1st image shows a representation of an Option, which is nothing more than a composite action. The 2nd image shows the states where the agent decided whether it was more advantageous to execute an Option or a primitive action.

- #16: The results obtained were very close to the simulation carried out by the authors, as you can see by comparing the two plots. The foundation that will be used to run tests and try to solve the problem is now in place, and it may even be possible to improve on these results.

- #17: The next step is to use a robot simulation platform with a range of features and try to solve the proposed builder-robot problem using the knowledge acquired while implementing Options. Once that goal is reached, I will start working on transferring what was learned in simulation to the real robot and having it solve the learned problem.

- #18: Of course, hierarchical reinforcement learning is not perfect: for now it can only be applied to small or medium-sized problems, and the sub-goals are assigned manually by the programmer. In addition, most of the algorithms developed only work properly for the problems for which they were originally designed.

- #19: There is a lot of future work in this area and the possibilities are immense. According to the research carried out, the biggest challenge is the automatic discovery of hierarchical structure, such as discovering sub-goals so that the agent divides the problem into several simpler problems, and making the agent able, through the learning process, to respond to new challenges and evolve accordingly.

- #20: Thank you. I am now happy to answer any questions.
