How to program DL & AI applications

- 1. 1 How to program DL & AI applications Master in Innovation and Research in Informatics UPC Barcelona Tech, Novembre 2018 Jordi TORRES.AI

- 2. Jordi TORRES.AI Lecture slides based on the book 2 https://torres.ai/DeepLearning Paperback https://www.amazon.com/First-contact-Deep- Learning-introduction/dp/1983211559 Kindle Edition https://www.amazon.com/First-contact-Deep- Learning-introduction/dp/1983211559/ eBook PDF http://www.lulu.com/shop/jordi-torres/first- contact-with-deep-learning-practical- introduction-with-keras/ebook/product- 23738015.html eBook NOOK versión https://www.barnesandnoble.com/w/first-contact- with-deep-learning-jordi- torres/1129202334?ean=2940161787595

- 3. Jordi TORRES.AI AI & DL overview

- 4. Jordi TORRES.AI Artificial Intelligence is changing our life

- 5. Jordi TORRES.AI Quantum leaps in the quality of a wide range of everyday technologies thanks to Artificial Intelligence

- 6. Jordi TORRES.AI Credits: https://www.yahoo.com/tech/battle-of-the-voice-assistants-siri-cortana-211625975.html We are increasingly interacting with “our” computers by just talking to them JORDI TORRES Speech Recognition

- 7. 7 Google Translate now renders spoken sentences in one language into spoken sentences in another, for 32 pairs of languages and offers text translation for 100+ languages. Natural Language Processing 7

- 8. 8 Google Translate now renders spoken sentences in one language into spoken sentences in another, for 32 pairs of languages and offers text translation for 100+ languages. Natural Language Processing 8

- 9. 9 Computer Vision Now our computers can recognize images and generate descriptions for photos in seconds. 9

- 10. Jordi TORRES.AI All these three areas are crucial to unleashing improvements in robotics, drones, self-driving cars, etc. Source: http://edition.cnn.com/2013/05/16/tech/innovation/robot-bartender-mit-google-makr-shakr/ All these three areas are crucial to unleashing improvements in robotics, drones, self-driving cars, etc. JORDI TORRES

- 11. Jordi TORRES.AI Robot bartender creates crowd-sourced cocktails

- 12. Jordi TORRES.AI Source: http://axisphilly.org/article/military-drones-philadelphia-base-control/ All these three areas are crucial to unleashing improvements in robotics, drones, self-driving cars, etc. JORDI TORRES

- 13. Jordi TORRES.AI Source: http://fortune.com/2016/04/23/china-self-driving-cars/ All these three areas are crucial to unleashing improvements in robotics, drones, self-driving cars, etc. JORDI TORRES

- 14. Jordi TORRES.AI image source: http://acuvate.com/blog/22-experts-predict-artificial-intelligence-impact-enterprise-workplace AI is at the heart of today’s technological innovation.

- 15. 15 Many of these breakthroughs have been made possible by a family of AI known as Neural Networks

- 16. 16 Neural networks, also known as a Deep Learning, enables a computer to learn from observational data Although the greatest impacts of deep learning may be obtained when it is integrated into the whole toolbox of other AI techniques

- 17. Jordi TORRES.AI Artificial Intelligence and Neural Networks, are not new concepts!

- 19. Jordi TORRES.AI Neuron x1 x2 xn yj 1950: McCulloch and Pitt's defined an architecture that was loosely inspired by biological neurons

- 22. Jordi TORRES.AI x1 x2 xn w1j w2j wnj yj bj 1 1 + #$% zj = ∑' (')*'+ bj yj = +(-j )

- 23. Jordi TORRES.AI x1 x2 xn w1j w2j wnj yj bj 1 1 + #$% zj = ∑' (')*'+ bj yj = +(-j ) MANY EXAMPLES (X,Y) PAIRS TRAINING stage MANY EXAMPLES (X,Y) PAIRS MANY EXAMPLES (X,Y) PAIRS MANY EXAMPLES (X,Y) PAIRS

- 24. Jordi TORRES.AI x1 x2 xn w1j w2j wnj yj bj 1 1 + #$% zj = ∑' (')*'+ bj yj = +(-j ) MANY EXAMPLES (X,Y) PAIRS MANY EXAMPLES (X,Y) PAIRS MANY EXAMPLES (X,Y) PAIRS MANY EXAMPLES (X,Y) PAIRS MANY EXAMPLES (X,Y) PAIRS TRAINING stage

- 25. Jordi TORRES.AI x1 x2 xn w1j w2j wnj yj bj 1 1 + #$% zj = ∑' (')*'+ bj yj = +(-j ) MANY EXAMPLES (X,Y) PAIRS Adaptar pesos MANY EXAMPLES (X,Y) PAIRS MANY EXAMPLES (X,Y) PAIRS MANY EXAMPLES (X,Y) PAIRS MANY EXAMPLES (X,Y) PAIRS TRAINING stage

- 26. Jordi TORRES.AI x1 x2 xn w1j w2j wnj yj bj 1 1 + #$% zj = ∑' (')*'+ bj yj = +(-j ) Predicción Y Nuevo Dato

- 27. Jordi TORRES.AI x1 x2 xn w1j w2j wnj yj bj 1 1 + #$% zj = ∑' (')*'+ bj yj = +(-j ) ABANDONED à AI WINTER 1

- 28. Jordi TORRES.AI 1980: LAYERED NEURAL NETWORKS People discovered how to effectively train a neural network with many neurons . . . . . . . . . . . . . . . . . .

- 29. Jordi TORRES.AI 1980: LAYERED NEURAL NETWORKS People discovered how to effectively train a neural network with many neurons FORWARD PROPAGATION . . . . . . . . . . . . . . . . . . LOSS BARCKWARD PROPAGATION

- 30. Jordi TORRES.AI face edges combination of edges high-level representation

- 31. Jordi TORRES.AI FORWARD PROPAGATION . . . . . . . . . . . . . . . . . . BARCKWARD PROPAGATION LOSS Support Vector Machines ABANDONED à AI WINTER 2

- 32. Jordi TORRES.AI 2009: Hinton and his team in Toronto trained deep neural networks with many layers of hidden units Capa entrada . . . . . . . . . . . . . . . . . . Capa salida Capas ocultas . . . . . . . . . . . .

- 33. Jordi TORRES.AI So why did Deep Learning only take off few years ago?

- 34. 34 Source:http://www.economist.com/node/15579717 One of the key drivers: The data deluge 34

- 35. 35 Source:http://www.economist.com/node/15579717 One of the key drivers: The data deluge Thanks to the advent of Big Data AI models can be “trained” by exposing them to large data sets that were previously unavailable. 35

- 39. 39source: cs231n.stanford.edu/slides/2017/cs231n_2017_lecture15.pdf Training DL neural nets has an insatiable demand for Computing 39

- 40. Jordi TORRES.AI Thanks to advances in Computer Architecture, nowadays we can solve problems that would have been intractable some years ago. 40

- 41. Credits:http://www.ithistory.org/sites/default/files/hardware/facom230-50.jpg FACOM 230 – Fujitsu Instructions per second: few Mips * (M = 1.000.000) Processors : 1 1982

- 42. 2012 MARENOSTRUM III - IBM Instructions per second: 1.000.000.000 MFlops Processors : 6046 (48448 cores)

- 43. 2012 MARENOSTRUM III - IBM Instructions per second: 1.000.000.000 MFlops Processors : 6046 (48448 cores) only 1.000.000.000 times faster

- 44. CPU improvements! Until then, the increase in computational power every decade of “my” computer, was mainly thanks to CPU

- 45. CPU improvements! Since then, the increase in computational power for Deep Learning has not only been from CPU improvements . . . Until then, the increase in computational power every decade of “my” computer, was mainly thanks to CPU

- 46. 46 SOURCE:https://www.hpcwire.com/2016/11/23/nvidia-sees-bright-future-ai-supercomputing/?eid=330373742&bid=1597894 but also from the realization that GPUs (NVIDIA) were 20 to 50 times more efficient than traditional CPUs.

- 47. Deep Learning requires computer architecture advancements AI specific processors Optimized libraries and kernels Fast tightly coupled network interfaces Dense computer hardware

- 48. COMPUTING POWER is the real enabler!

- 49. What if I do not have this hardware?

- 50. Now we are entering into an era of computation democratization for companies !

- 51. And what is “my/your” computer like now?

- 52. And what is “my/your” computer like now? Source: http://www.google.com/about/datacenters/gallery/images

- 53. Source: http://www.google.com/about/datacenters/gallery/images And what is “my/your” computer like now? The Cloud

- 54. 28.000 m2 Credits: http://datacenterfrontier.com/server-farms-writ-large-super-sizing-the-cloud-campus/ Huge data centers!

- 58. For those (experts) who want to develop their own software, cloud services like Amazon Web Services provide GPU-driven deep-learning computation services

- 59. And Google ...

- 60. And all major cloud platforms... Microsoft Azure IBM Cloud Aliyun Cirrascale NIMBIX Outscale . . . Cogeco Peer 1 Penguin Computing RapidSwitch Rescale SkyScale SoftLayer . . .

- 61. And for “less expert” people, various companies are providing a working scalable implementation of ML/AI algorithms as a Service (AI-as-a-Service) Source: https://twitter.com/smolix/status/804005781381128192Source: http://www.kdnuggets.com/2015/11/machine-learning-apis-data-science.html

- 62. An open-source world for the Deep Learning community

- 63. Many open-source DL software have greased the innovation process

- 64. Github Stars source: Francesc Sastre

- 65. The 3 frameworks with steepest gradient frameworks with more slope TensorFlow Keras Pytorch

- 66. and no less important, an open-publication ethic, whereby many researchers publish their results immediately on a database without awaiting peer-review approval.

- 67. Beyond Big Data: turn computation on Data

- 68. Jordi TORRES.AIJordi TORRES.AI image: https://gogameguru.com/alphago-defeats-lee-sedol-game-1 AlphaGo versus Lee Sedol DeepMind Challenge Match - March 2016

- 69. Jordi TORRES.AI

- 70. Jordi TORRES.AI Image: https://deepmind.com/blog/alphago-zero-learning-scratch And …. AlphaGo à AlphaGo Zero

- 71. Jordi TORRES.AI Densely Connected Networks

- 72. Jordi TORRES.AI Machine Learning Basics ● Machine learning is a field of computer science that gives computers the ability to learn without being explicitly programmed ● Types of Learning ○ Supervised: Learning with a labelled training set (Example: email classification with already labelled emails) ○ Unsupervised: Discover patterns in unlabelled data (Example: cluster similar documents based on text) ○ Reinforcement learning: Learn to act based on feedback/reward (Example: learn to play Chess , reward: win or lose)

- 73. Jordi TORRES.AI Basic Machine Learning terminology ● y: Labels ● x: Features ● Model: defines the relation between features and labels ● w :weights ● b : bias

- 74. Jordi TORRES.AI Deep Learning • Allows models to learn representations of data with multiple levels of abstraction • Discovers intricate structure in large data sets (Patterns) • Dramatically improved the state- of-the-art in speech recognition, visual object recognition, object detection, ... Deep learning. In: Nature 521.7553 (May 2015). Yann LeCun et al.

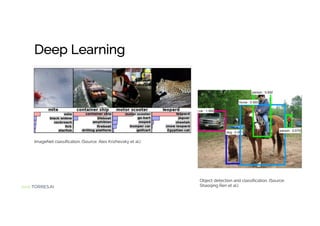

- 75. Jordi TORRES.AI Deep Learning ImageNet classification. (Source: Alex Krizhevsky et al.) Object detection and classification. (Source: Shaoqing Ren et al.)

- 76. Jordi TORRES.AI Deep Learning Image Colorization. (Source: Richard Zhang et al.) Images captioning. (Source: Andrej Karpathy et al.)

- 78. Jordi TORRES.AI Someone who has watched many romantic movies may be interested in Good Will Hunting if we show the artwork containing Matt Damon and Minnie Driver, whereas, a member who has watched many comedies might be drawn to the movie if we use the artwork containing Robin Williams, a well-known comedian. https://medium.com/netflix-techblog/artwork-personalization-c589f074ad76

- 79. Jordi TORRES.AI In another scenario, let’s imagine how the different preferences for cast members might influence the personalization of the artwork for the movie Pulp Fiction. A member who watches many movies featuring Uma Thurman would likely respond positively to the artwork for Pulp Fiction that contains Uma. Meanwhile, a fan of John Travolta may be more interested in watching Pulp Fiction if the artwork features John. https://medium.com/netflix-techblog/artwork-personalization-c589f074ad76

- 80. Jordi TORRES.AI Source: GANimation: Anatomically-aware Facial Animation from a Single Image Albert Pumarola, Antonio Agudo, Aleix M. Martinez, Alberto Sanfeliu, Francesc Moreno-Noguer https://www.albertpumarola.com/research/GANimation/index.html

- 81. Jordi TORRES.AI

- 82. Jordi TORRES.AI Feature engineering ● In Machine learning ○ is a process of putting domain knowledge into the creation of feature extractors (most of the applied features need to be identified by an expert) to reduce the complexity of the data and make patterns more visible to learning algorithms to work. ○ For example, features can be pixel values, shape, textures, position and orientation. The performance of most of the Machine Learning algorithm depends on how accurately the features are identified and extracted. Input Feature extraction Classification Output FACE

- 83. Jordi TORRES.AI Feature engineering ● In Deep Learning ○ Deep learning algorithms try to learn high-level features from data. This is a very distinctive part of Deep Learning and a major step ahead of traditional Machine Learning. Therefore, deep learning reduces the task of developing new feature extractors for every problem. Convolutional NN will try to learn low-level features such as edges and lines in early layers then parts of people’s faces and then high-level representation of a face. Input Classification Output FACE

- 84. Jordi TORRES.AI Feature engineering ● In Deep Learning (cont): ○ Convolutional NN will try to learn low-level features such as edges and lines in early layers then parts of people’s faces and then high-level representation of a face Input Classification Output FACE Low-level features Mid-level features High-level features Increasing level of abstraction

- 85. Jordi TORRES.AI Deep Learning: Training vs Inference Source: Nvidia Research

- 86. Jordi TORRES.AI Perceptron ● Types of regression ○ Logistic regression ○ Linear regression ● Simple artificial neuron: example

- 91. Jordi TORRES.AI Neurons • Inputs: • Outputs from other neurons • Input data • Each input has a different weight • One output • Different activation functions

- 92. Jordi TORRES.AI Multi-Layer Perceptron (or Neural Network)

- 93. Jordi TORRES.AI Neural Networks elements • Set of neurons • Each neuron contains an activation function • Different topologies • The connections are the inputs and outputs of the functions • Each connection has a weight and bias

- 94. Jordi TORRES.AI Multi-Layer Perceptron for classification ● MLPs are often used for classification, and specifically when classes are exclusive. In this case the output layer is a softmax function in which the output of each neuron corresponds to the estimated probability of the corresponding class.

- 95. Jordi TORRES.AI An easy example to start: Softmax • The softmax function has two main steps: • first, the “evidences” for an image belonging to a certain label are computed, • and later the evidences are converted into probabilities for each possible label.

- 96. Jordi TORRES.AI Hello World in Deep Learning ● Case study: “The MNIST database” ○ Dataset of handwritten digits classification ○ 60,000 28x28 grayscale images of the 10 digits, along with a test set of 10,000 images.

- 97. Jordi TORRES.AI The MNIST database ● Features: matrix of 28x28 pixels with values [0, 255] ● Labels: values [0, 9]

- 98. Jordi TORRES.AI The MNIST database ● Features: matrix of 28x28 pixels with values [0, 255]

- 99. Jordi TORRES.AI Evidence of belonging ● Model example of zero

- 100. Jordi TORRES.AI Evidence of belonging

- 101. Jordi TORRES.AI Evidence of belonging

- 102. Jordi TORRES.AI Evidence of belonging ● Models [0-9]

- 103. Jordi TORRES.AI Probability of belonging ● The second step involves computing probabilities. Specifically we turn the sum of evidences into predicted probabilities using this function:

- 104. Jordi TORRES.AI (Activation function) Softmax ● A function that provides probabilities for each possible class in a multi- class classification model. The probabilities add up to exactly 1.0. ○ For example, softmax might determine that the probability of a particular image being a “7” at 0.8, a “9” at 0.17, …

- 105. Jordi TORRES.AI Data • Training set → For training • Validation set → For hyperparameter tuning • Test set → Test model performance

- 108. Jordi TORRES.AI It is time to get your hands dirty!

- 109. Jordi TORRES.AI (Old) Working Environment (optional) http://localhost:8888 (password dl )

- 110. Jordi TORRES.AI Working Environment (suggested)

- 111. Jordi TORRES.AI

- 112. Jordi TORRES.AI

- 113. Jordi TORRES.AI

- 114. Jordi TORRES.AI

- 115. Jordi TORRES.AI

- 116. Jordi TORRES.AI

- 118. Jordi TORRES.AI • was developed and maintained by François Chollet • https://keras.io • Python library on top of TensorFlow, Theano or CNTK.

- 119. Jordi TORRES.AI • Good for beginners • Austerity and simplicity • Keras model is a combination of discrete elements

- 120. Jordi TORRES.AI Steps to create a model with Keras: ● Load Data. ● Define Model. ● Compile Model. ● Fit Model. ● Evaluate Model. ● Use the Model

- 121. Jordi TORRES.AI Load MNIST data

- 122. Jordi TORRES.AI

- 123. Jordi TORRES.AI Keras data: Tensor

- 124. Jordi TORRES.AI Normalizing the data

- 125. Jordi TORRES.AI Model: core data structure ● keras.models.Sequential class is a wrapper for the neural network model:

- 126. Jordi TORRES.AI Layers in Keras ● Models in Keras are defined as a sequence of layers. ● There are ○ fully connected layers, ○ max pool layers, ○ activation layers, ○ etc. ● You can add a layer to the model using the model’s add()function.

- 127. Jordi TORRES.AI Layer shape ● Keras will automatically infer the shape of all layers after the first layer ○ This means you only have to set the input dimensions for the first layer. ○ Example: The first layer sets the input dimension to 28. The second layer takes in the output of the first layer and sets the output to 128. . . . We can see that the output has dimension 10.

- 128. Jordi TORRES.AI The model definition in Keras

- 129. Jordi TORRES.AI The model definition in Keras

- 130. Jordi TORRES.AI Summary( ) method

- 131. Jordi TORRES.AI Compiling the model

- 132. Jordi TORRES.AI Training the model Verbose =1

- 133. Jordi TORRES.AI Evaluate the model

- 135. Jordi TORRES.AI MNIST confusion matrix for this model

- 136. Jordi TORRES.AI Predictions ● Using a trained network to generate predictions on new data

- 138. Jordi TORRES.AI Basics about learning process

- 139. Chapter Course Notes in Slide Format

- 140. Jordi TORRES.AI Neuron A neural network is formed by neurons connected to each other; in turn, each connection of one neural network is associated with a weight that dictates the importance of this relationship in the neuron when multiplied by the input value.

- 141. Jordi TORRES.AI Neuron Each neuron has an activation function that defines the output of the neuron. The activation function is used to introduce non- linearity in the network connections.

- 142. Jordi TORRES.AI Learning process: ● Adjust the biases and weights of the connections between neurons Objective: Learn the values of the network parameters (weights wij and biases bj) An iterative process of two phases: ● Forwardpropagation. ● Backpropagation.

- 143. Jordi TORRES.AI Learning process: Forwardpropagation ● The first phase, forwardpropagation, occurs when the training data crosses the entire neural network in order to calculate their predictions (labels). ○ That is, pass the input data through the network in such a way that all the neurons apply their transformation to the information they receive from the neurons of the previous layer and send it to the neurons of the next layer. ○ When data has crossed all the layers where all its neurons have made their calculations, the final layer will be reached, with a result of label prediction for those input examples.

- 144. Jordi TORRES.AI Learning process: Loss function ● Next, we will use a loss function to estimate the loss (or error) and to measure how good/bad our prediction result was in relation to the correct result. (Remember that we are in a supervised learning environment and we have the label that tells us the expected value). ○ Ideally, we want our cost to be zero, that is, without divergence between estimated and expected value. ○ As the model is being trained, the weights of the interconnections of the neurons will be adjusted automatically until good predictions are obtained.

- 145. Jordi TORRES.AI Learning process Backpropagation ● Starting from the output layer, the calculated loss is propagated backward, to all the neurons in the hidden layer that contribute directly to the output. ○ However, the neurons of the hidden layer receive only a fraction of the total loss signal, based on the relative contribution that each neuron has contributed to the original output (indicated by the weight of the connexion). ○ This process is repeated, layer by layer, until all the neurons in the network have received a loss signal that describes their relative contribution to the total loss.

- 146. Jordi TORRES.AI Learning process: summary

- 147. Jordi TORRES.AI Adjust the weights: Gradient descent ● Once the loss information has been propagated to all the neurons, we proceed to adjust the weights. ● This technique changes the weights and biases in small increments, with the help of the derivative (or gradient) of the loss function, which allows us to see in which direction "to descend" towards the global mínimum. ○ Generally, this is done in batches of data in the successive iterations of the set of all the data that we pass to the network in each iteration.

- 148. Jordi TORRES.AI Learning algorithm overview: 1. Start with some values (often random) for the network weights and biases. 2. Take a set of input examples and perform the forwardpropagation process passing them through the network to get their prediction. 3. Compare these obtained predictions with the expected labels and calculate the loss using the loss fuction. 4. Perform the backpropagation process to propagate this loss, to each and every one of the parameters, that make up the model of the neural network. 5. Use this propagated information to update the parameters of the neural network with the gradient descent. This reduces the total loss and obtains a better model. 6. Continue iterating on steps 2 to 5 until we consider that we have a good model (we will see later when we should stop).

- 149. Jordi TORRES.AI Activation Function: Linear ● The linear activation function is basically the identity function, which in practical terms, means that the signal does not change. Sometimes, we can see this activation function in the input layer of a neural network.

- 150. Jordi TORRES.AI Activation Function: Sigmoid The sigmoid function was introduced previously and allows us to reduce extreme or atypical values without eliminating them. A sigmoid function converts independent variables of almost infinite range into simple probabilities between 0 and 1. Most of the output will be very close to the extremes of 0 or 1, as we have already commented.

- 151. Jordi TORRES.AI Activation Function: Tanh In the same way that the tangent represents a relation between the opposite side and the adjacent side of a right-angled triangle, tanh represents the relation between the hyperbolic sine and the hyperbolic cosine: tanh(x)=sinh (x)/cosh(x). Unlike the sigmoid function, the normalized range of tanh is between -1 to 1, which is the input that suits some neural networks. The advantage of tanh is that you can also deal more easily with negative numbers.

- 152. Jordi TORRES.AI Activation Function: Softmax ● The Softmax activation function was already presented as a way to generalize the logistic regression. ● The softmax activation function returns the probability distribution on mutually exclusive output classes. ● Softmax will often be found in the output layer of a classifier.

- 153. Jordi TORRES.AI Activation Function: ReLU ● The activation function Rectified linear unit (ReLU) is a very interesting transformation that activates a single node if the input is above a certain threshold. ○ While the input has a value below zero, the output will be zero, but when the input rises, the output is a linear relationship with the input variable of the form f (x)=max (0,x) . ○ The ReLUs activation function has proven to be the best option in many different cases.

- 154. Jordi TORRES.AI Backpropagation In summary, we can see backpropagation as a method to alter the weights and biases of the neural network in the right direction. Start first by calculating the loss term and then, the weights+biases are adjusted in reverse order, with an optimization algorithm, taking into account this calculated loss. In Keras the compile() method allows us to specify the components involved in the learning process:

- 155. Jordi TORRES.AI Arguments of compile() method: loss ● A loss function is one of the parameters required to quantify how close a particular neural network is to our ideal, during the learning process. ○ In the Keras manual, we can find all types of loss functions available (there are many). ● The choice of the best function of loss lies in understanding what type of error is or is not acceptable for the particular problema.

- 156. Jordi TORRES.AI Arguments of compile() method: optimizers ● In general, we can see the learning process as a global optimization problem where the parameters (weights and biases) must be adjusted in such a way that the loss function presented above is minimized. ○ In most cases, this optimization cannot be solved analytically, but in general, it can be approached effectively with iterative optimization algorithms or optimizers. ○ Keras currently has different optimizers that can be used: SGD, RMSprop, Adagrad, Adadelta, Adam, Adamax, Nadam.

- 157. Jordi TORRES.AI Example of Optimizer: Gradient descent ● Gradient descent uses the first derivative (gradient) of the loss function to update the parameters. ● The process involves chaining the derivatives of the loss of each hidden layer from the derivatives of the loss of its upper layer, incorporating its activation function in the calculation. ● In each iteration, once all the neurons have the gradient value of the corresponding loss function, the values of the parameters are updated in the opposite direction to that indicated by the gradient (which points in the direction in which it increases).

- 158. Jordi TORRES.AI Example of Optimizer: Gradient descent ● Let's see the process in a visual way assuming only two dimensions. ● Suppose that the blue line represents the values that the loss function takes for each possible parameter and at the indicated starting point the negative gradient is indicated by the arrow:

- 159. Jordi TORRES.AI Example of Optimizer: Gradient descent ● To determine the next value for the parameter (to simplify, consider only weight), the Gradient descent algorithm adds a certain magnitude to the gradient vector at the starting point. ● The magnitude that is taken in each iteration to reach the local mínimum, is determined by a learning rate hyperparameter (which we will present shortly) that we can specify. ● Therefore, conceptually it is as if we followed the direction of the downhill curve until we reached a local minimum:

- 160. Jordi TORRES.AI Example of Optimizer: Gradient descent ● The gradient descent algorithm repeats this process, getting closer and closer to the minimum until the value of the parameter reaches a point beyond which the loss function can not decrease:

- 161. Jordi TORRES.AI Stochastic Gradient Descent (SGD) We have not yet talked about how often the values of the parameters are adjusted. ● Stochastic gradient descent: After each entry example (online learning) ● Batch gradient descent: After each iteration on the whole set of training examples (batch learning) (*) The literature indicates that we usually get better results with online learning, but there are reasons that justify batch learning because many optimization techniques will only work with it. ● For this reason most applications of SGD actually use a minibatch of several samples (mini-batch learning) : The values of the parameters are adjusted after a sample of examples of the training set.

- 162. Jordi TORRES.AI SGD in Keras ● We divide the data into several batches. ● Then we take the first batch, calculate the gradient of its loss and update the parameters; this would follow successively until the last batch. ● Now, in a single pass through all the input data, only a number of steps have been made, equal to the number of batches.

- 163. Jordi TORRES.AI Parameters vs hyperparameters ● Parameter: A variable of a model that the DL system trains on its own. For example, weights are parameters whose values the DL system gradually learns through successive training iterations. ● Hyperparameters: The "knobs" that you tweak during successive runs of training a model. For example, learning rate is a hyperparameter.

- 164. Jordi TORRES.AI Many hyperparameters: ● Epochs ● Batch size ● Learning rate ● Learning rate decay ● Momentum ● . . . ● Number of layers ● Number of neurons per layer ● . . .

- 165. Jordi TORRES.AI Epochs ● An epoch is defined as a single training iteration of all batches in both forward and back propagation. This means 1 epoch is a single forward and backward pass of the entire input data. ● The number of epochs you would use to train your network can be chosen by you. ○ It’s highly likely that more epochs would show higher accuracy of the network, however, it would also take longer for the network to converge. ○ Also you must be aware that if the number of epochs is too high, the network might be overfitted.

- 166. Jordi TORRES.AI Batch size ● The number of examples in a batch. The set of examples used in one single update of a model's weights during training. ● Indicated in the fit() method ● The optimal size will depend on many factors, including the memory capacity of the computer that we use to do the calculations.

- 167. Jordi TORRES.AI Learning rate ● A scalar used to train a model via gradient descent. During each iteration, the gradient descent algorithm multiplies the learning rate by the gradient. ● In simple terms, the rate at which we descend towards the minima of the cost function is the learning rate. We should choose the learning rate very carefully since it should be neither so large that the optimal solution is missed and nor so low that it takes forever for the network to converge.

- 168. Jordi TORRES.AI Learning rate decay ● A concept to adjust the learning rate during training. Allows for flexible learning rate adjustments. In deep learning, the learning rate typically decays the longer the network is trained.

- 169. Jordi TORRES.AI Momentum ● A gradient descent algorithm in which a learning step depends not only on the derivative in the current step, but also on the derivatives of the steps that immediately preceded it. ● Momentum involves computing an exponentially weighted moving average of the gradients over time, analogous to momentum in physics. ● Momentum sometimes prevents learning from getting stuck in local minima.

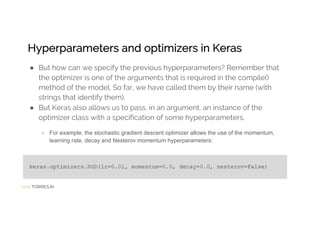

- 170. Jordi TORRES.AI Hyperparameters and optimizers in Keras ● But how can we specify the previous hyperparameters? Remember that the optimizer is one of the arguments that is required in the compile() method of the model. So far, we have called them by their name (with strings that identify them). ● But Keras also allows us to pass, in an argument, an instance of the optimizer class with a specification of some hyperparameters. ○ For example, the stochastic gradient descent optimizer allows the use of the momentum, learning rate, decay and Nesterov momentum hyperparameters:

- 171. Jordi TORRES.AI How to tweak the "knobs" during successive runs of training a model?

- 173. Jordi TORRES.AI It is time to get your hands dirty! JORDI TORRES It is time to get your hands dirty! JORDI TORRES It is time to get your hands dirty!

- 174. Jordi TORRES.AI Working Environment Experience in designing Deep Learning neural networks can be gained using a fun and powerful application called the TensorFlow Playground: http://playground.tensorflow.org

- 175. Jordi TORRES.AI TensorFlow Playground ● an interactive visualization of neural networks, written in TypeScript using d3.js.

- 176. Jordi TORRES.AI TensorFlow Playground ● DATA: We can select the type of data:

- 177. Jordi TORRES.AI TensorFlow Playground ● Live demo of how it works: http://playground.tensorflow.org

- 178. Jordi TORRES.AI TensorFlow Playground: First example ● DATA:

- 179. Jordi TORRES.AI Classification with a single neuron ?

- 180. Jordi TORRES.AI Classification with a single neuron

- 181. Jordi TORRES.AI TensorFlow Playground: Second example ● DATA:

- 182. Jordi TORRES.AI Classification with more than one neuron ?

- 183. Jordi TORRES.AI Classification with more than one neuron

- 184. Jordi TORRES.AI TensorFlow Playground: Third example ● DATA:

- 187. Jordi TORRES.AI TensorFlow Playground: Fourth example ● DATA:

- 188. Jordi TORRES.AI Classification with many layers

- 190. Jordi TORRES.AI Convolutional Neural Networks

- 191. SUPERCOMPUTERS ARCHITECTURE Master in Innovation and Research in Informatics 5.5 Convolutional Neural Networks

- 192. Jordi TORRES.AI Convolutional Neural Networks ● Acronyms: CNNs or ConvNets ● An explicit assumption that the inputs are images. edges edge combination object models Face (*) Francesc Torres, Rector de la Universitat Politècnica de Catalunya · BarcelonaTech http://www.upc.edu/en/the-upc/government-and-representation/the-rector/the-rector (*)

- 193. Jordi TORRES.AI Basic components of a CNN ● The convolution operation ○ Intuitively, we could say that the main purpose of a convolutional layer is to detect features or visual features in images. ○ Convolutional layers can learn spatial hierarchies of patterns by preserving spatial relationships. ● The pooling operation ○ Accompanies the convolutional layers. ○ Simplifies the information collected by the convolutional layer and create a condensed version of the information contained in them.

- 194. Jordi TORRES.AI The convolution operation ● In general, the convolution layers operate on 3D tensors, called feature maps, with two spatial axes of height and width, as well as a channel axis (also called depth). ○ For an RGB color image, the dimension of the depth axis is 3, since the image has three channels: red, green and blue. ○ For a black and white image, such as the MNIST, the depth axis dimension is 1 (grey level).

- 195. Jordi TORRES.AI The convolution operation ● In CNN not all the neurons of a layer are connected with all the neurons of the next layer as in the case of fully connected neural networks; it is done by using only small localized areas of the space of input neurons.

- 196. Jordi TORRES.AI The convolution operation ● Sliding window ● Use the same filter (the same W matrix of weights and the same bias b) for all the neurons in the next layer

- 197. Jordi TORRES.AI ● Many filters (one for each feature that we want to detect) The convolution operation

- 198. Jordi TORRES.AI ● Many filters (one for each feature that we want to detect) The convolution operation

- 199. Jordi TORRES.AI The pooling operation ● Accompanies the convolution layer ● Simplifies the information collected by the convolutional layer and creates a condensed version of the information: ○ max-pooling ○ average-pooling

- 200. Jordi TORRES.AI The pooling operation ● The pooling maintains the spatial relationship

- 201. Jordi TORRES.AI Convolutional+Pooling layers: Summary

- 202. Jordi TORRES.AI Basic elements of a CNN in Keras

- 203. Jordi TORRES.AI A simple CNN model in Keras - I

- 204. Jordi TORRES.AI A simple CNN model in Keras - II (*) Add a densely connected layer and a softmax

- 205. Jordi TORRES.AI A simple CNN model in Keras - III ● Summary:

- 206. Jordi TORRES.AI Training and evaluation of the model ● Test accuracy: 0.9704

- 207. Jordi TORRES.AI Hyperparameters of the convolutional layer ● Size and number of filters ○ The size of the window (window_height × window_width) that keeps information about the spatial relationship of pixels are usually 3×3 or 5×5. ○ The number of filters (output_depth) indicates the number of features and is usually 32 or 64. Conv2D(output_depth, (window_height, window_width))

- 208. Jordi TORRES.AI Hyperparameters of the convolutional layer 5 × 5 3 × 3

- 209. Jordi TORRES.AI ● Padding ○ Sometimes we want to get an output image of the same dimensions as the input. ○ We can add zeros around the input images before sliding the window through it. Hyperparameters of the convolutional layer

- 210. Jordi TORRES.AI Hyperparameters of the convolutional layer In Keras, the padding in the Conv2D layer is configured with the padding argument, which can have two values: "Same" indicates that as many rows and columns of zeros are added as necessary, so that the output has the same dimension as the input. "Valid” indicates not to do padding (it is the default value of this argument in Keras).

- 211. Jordi TORRES.AI Hyperparameters of the convolutional layer ● Stride : Number of steps the sliding window jumps ○ Ex: stride 2

- 212. Jordi TORRES.AI Well-known CNN ● LeNet, born in the nineties when Yann LeCun got the first use of successful convolutional neural networks in the reading of postal codes and digits. The input data to the network is 32 × 32 pixel images, followed by two stages of convolution-pooling, a densely connected layer and a final softmax layer that allows us to recognize the numbers. ● AlexNet by Alex Krizhevsky, who won the ImageNet 2012 competition. ● Also GoogleLeNet, which with its inception module drastically reduces the parameters of the network (15 times less than AlexNet) and it has derived several versions, such as Inception-v4. ● Others, such as the VGGnet, helped to demonstrate that the depth of the network is a critical component for good results. ● Etc.

- 213. Jordi TORRES.AI Well-known CNN: VGG16

- 214. Jordi TORRES.AI Well-known CNN: VGG16

- 215. Jordi TORRES.AI Well-known CNN: VGG16 ● The interesting thing about many of these networks is that we can find them already preloaded in most of the frameworks. ● For example, in Keras, if we wanted to program a VGGnet neural network we could do it by writing the following code:

- 216. Jordi TORRES.AI Well-known CNN: VGG16

- 217. Jordi TORRES.AI Transfer Learning ● What is it? ○ is the reuse of a pre-trained model on a new problem. ○ It is currently very popular in the field of Deep Learning because it enables you to train Deep Neural Networks with comparatively little data. ○ This is very useful since most real-world problems typically do not have millions of labeled data points to train such complex models. other datasets

- 218. Jordi TORRES.AI Transfer learning: Fine tuning Source: keras github

- 219. Jordi TORRES.AI Other Networks ● Recurrent Neural Networks (RNN) Image source: Christopher Olah

![Jordi TORRES.AI

The MNIST database

● Features: matrix of 28x28 pixels with values [0, 255]

● Labels: values [0, 9]](https://image.slidesharecdn.com/deeplearningbook-181123102517/85/How-to-program-DL-AI-applications-97-320.jpg)

![Jordi TORRES.AI

The MNIST database

● Features: matrix of 28x28 pixels with values [0, 255]](https://image.slidesharecdn.com/deeplearningbook-181123102517/85/How-to-program-DL-AI-applications-98-320.jpg)

![Jordi TORRES.AI

Evidence of belonging

● Models [0-9]](https://image.slidesharecdn.com/deeplearningbook-181123102517/85/How-to-program-DL-AI-applications-102-320.jpg)