How to use PostgreSQL in order to handle Prometheus metrics storage

- 1. PGDay.IT 2019 – 17 May 2019 – Bologna. How to use PostgreSQL to handle Prometheus metrics storage. Lucio Grenzi, [email protected]

- 2. Who I am: Lucio Grenzi (dogwolf). Delphi developer since 1999, IT consultant, full-stack web developer, PostgreSQL addict.

- 3. Agenda: intro to Prometheus; intro to TimescaleDB; pg_prometheus; prometheus-postgresql-adapter; Prometheus HA; conclusion and final thoughts.

- 4. Why monitor? Detect errors and bottlenecks; auditing; measure performance; trending, to see changes over time; drive business and technical processes.

- 5. Prometheus

- 6. Prometheus: open source (Apache 2 license); metrics only, not logging; pull-based approach; multidimensional data model; built-in time series database; flexible query language (PromQL); the Alertmanager handles notifications; covers all levels of the stack.

- 7. Prometheus history: inspired by Borgmon, the monitoring tool used at Google; developed at SoundCloud starting in 2012; in May 2016 the Cloud Native Computing Foundation accepted Prometheus as its second incubated project.

- 8. Prometheus community: open source project; IRC: #prometheus; user mailing lists: prometheus-announce, prometheus-users; third-party commercial support.

- 9. Prometheus architecture

- 10. Integrations: many existing integrations are available: Java, JMX, Python, Go, Ruby, .NET, Machine, CloudWatch, EC2, MySQL, PostgreSQL, Haskell, Bash, Node.js, SNMP, Consul, HAProxy, Mesos, Bind, CouchDB, Django, Mtail, Heka, Memcached, RabbitMQ, Redis, RethinkDB, Rsyslog, Meteor.js, Minecraft... Graphite, StatsD, collectd, Scollector, Munin and Nagios integrations ease the transition from existing tools.

- 11. Installation: pre-compiled binaries; compile from source (Makefile); Docker; Ansible, Chef, Puppet, SaltStack.

- 12. Configuration: written in YAML, with the sections `global`, `rule_files`, `scrape_configs`, `alerting`, `remote_write` and `remote_read`.

- 13. Example configuration:

```yaml
# my global config
global:
  scrape_interval: 15s
  evaluation_interval: 30s
  # scrape_timeout is set to the global default (10s).

  external_labels:
    monitor: codelab
    foo: bar

rule_files:
  - "first.rules"
  - "my/*.rules"

remote_write:
  - url: http://remote1/push
    write_relabel_configs:
      - source_labels: [__name__]
        regex: expensive.*
        action: drop
  - url: http://remote2/push
    tls_config:
      cert_file: valid_cert_file
      key_file: valid_key_file

remote_read:
  - url: http://remote1/read
    read_recent: true
  - url: http://remote3/read
    read_recent: false
    required_matchers:
      job: special
    tls_config:
      cert_file: valid_cert_file
      key_file: valid_key_file

scrape_configs:
  - job_name: prometheus

    honor_labels: true
    # scrape_interval is defined by the configured global (15s).
    # scrape_timeout is defined by the global default (10s).

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    file_sd_configs:
      - files:
          - foo/*.slow.json
          - foo/*.slow.yml
          - single/file.yml
        refresh_interval: 10m
      - files:
          - bar/*.yaml

    static_configs:
      - targets: ['localhost:9090', 'localhost:9191']
        labels:
          my: label
          your: label

    relabel_configs:
      - source_labels: [job, __meta_dns_name]
        regex: (.*)some-[regex]
        target_label: job
        replacement: foo-${1}
        # action defaults to 'replace'
      - source_labels: [abc]
        target_label: cde
      - replacement: static
        target_label: abc
```

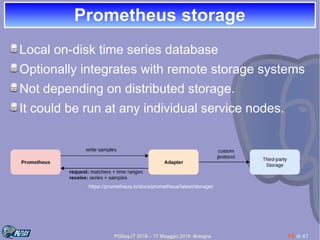

- 14. Prometheus storage: a local on-disk time series database; optional integration with remote storage systems; no dependency on distributed storage, so a server can run on any individual service node. https://prometheus.io/docs/prometheus/latest/storage/

- 15. PromQL: the Prometheus query language. It can multiply, add, aggregate, join, predict and take quantiles across many metrics in the same query. It is not SQL-style; it is designed for time series data. Examples:

```
http_requests_total{job="prometheus"}[5m]
http_requests_total{job="prometheus", code="200"}
```
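To make the selector examples concrete, here is a minimal Python sketch of how an instant-vector selector with equality matchers picks series. The in-memory `SERIES` data and the helper names are hypothetical; range selectors such as `[5m]`, regex matchers (`=~`) and PromQL functions are deliberately left out, so this is an illustration of the matching idea, not of the Prometheus engine.

```python
import re

# Hypothetical in-memory data: (metric name, frozenset of label pairs) -> current value.
SERIES = {
    ("http_requests_total", frozenset({"job": "prometheus", "code": "200"}.items())): 1027.0,
    ("http_requests_total", frozenset({"job": "prometheus", "code": "500"}.items())): 3.0,
    ("http_requests_total", frozenset({"job": "node", "code": "200"}.items())): 88.0,
}

_SELECTOR = re.compile(r"^(\w+)\{(.*)\}$")
_MATCHER = re.compile(r'\s*(\w+)\s*=\s*"([^"]*)"\s*')


def parse_selector(text):
    """Parse name{label="value", ...} into (name, {label: value}).
    Only equality matchers are handled; values must not contain commas or quotes."""
    m = _SELECTOR.match(text.strip())
    if m is None:
        raise ValueError(f"unsupported selector: {text!r}")
    name, body = m.groups()
    matchers = {}
    for part in filter(None, body.split(",")):
        mm = _MATCHER.fullmatch(part)
        if mm is None:
            raise ValueError(f"unsupported matcher: {part!r}")
        matchers[mm.group(1)] = mm.group(2)
    return name, matchers


def select(text):
    """Instant-vector selection: values of every series whose name and labels match."""
    name, matchers = parse_selector(text)
    return sorted(
        value
        for (series_name, labels), value in SERIES.items()
        if series_name == name
        and all(dict(labels).get(k) == v for k, v in matchers.items())
    )


print(select('http_requests_total{job="prometheus"}'))
print(select('http_requests_total{job="prometheus", code="200"}'))
```

Adding a matcher can only narrow the result: the second query keeps just the series whose labels satisfy both conditions.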

- 16. Data model: all data is stored as time series, i.e. streams of timestamped values belonging to the same metric and the same set of labels. Each sample is a float64 value plus a millisecond-precision timestamp.

- 17. Metric names and labels: every time series is uniquely identified by its metric name and its labels, which are key-value pairs. Notation: `<metric name>{<label name>=<label value>, …}`
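The data model and the naming notation can be sketched as two small Python types: a series identity (metric name plus label pairs) and a sample (millisecond timestamp plus float64 value). This is an illustrative model, not Prometheus' internal representation; sorting the label pairs is an assumption made here so that the same label set always yields the same identity regardless of the order in which labels are written.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Series:
    """A time series identity: metric name plus label pairs (illustrative model)."""
    name: str
    labels: tuple  # sorted (label name, label value) pairs, so equal sets compare equal

    @staticmethod
    def of(name, **labels):
        return Series(name, tuple(sorted(labels.items())))

    def notation(self):
        """Render as <metric name>{<label name>="<label value>", ...}."""
        inner = ", ".join(f'{k}="{v}"' for k, v in self.labels)
        return f"{self.name}{{{inner}}}"


@dataclass(frozen=True)
class Sample:
    timestamp_ms: int  # millisecond-precision Unix timestamp
    value: float       # Python floats are IEEE-754 doubles, i.e. float64


# A tiny in-memory store: each series identity maps to its stream of samples.
db: dict = {}


def append(series, timestamp_ms, value):
    db.setdefault(series, []).append(Sample(timestamp_ms, float(value)))


a = Series.of("http_requests_total", job="prometheus", code="200")
b = Series.of("http_requests_total", code="200", job="prometheus")
append(a, 1558080000000, 1027)
append(b, 1558080015000, 1031)
print(a.notation())
print(len(db[a]))  # a and b are the same series, so both samples land in one stream
```

Changing any single label value (for example `code="500"`) would produce a different `Series` and therefore a separate stream.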

- 18. Long-term metric storage: the default retention period is 15 days; with a long retention period the local data grows large.
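A quick way to see why long retention becomes expensive is the capacity-planning rule from the Prometheus storage documentation: needed disk space is roughly retention time in seconds × ingested samples per second × bytes per sample, where the docs quote an average of about 1 to 2 bytes per sample. The sketch below assumes 2 bytes per sample and a hypothetical load of 1,000 targets exposing 500 series each, scraped every 15 seconds; retention itself is set with the `--storage.tsdb.retention.time` flag in recent Prometheus 2.x releases.

```python
def needed_disk_bytes(retention_days, samples_per_second, bytes_per_sample=2.0):
    """Capacity-planning formula from the Prometheus storage docs:
    retention_time_seconds * ingested_samples_per_second * bytes_per_sample."""
    retention_seconds = retention_days * 24 * 60 * 60
    return retention_seconds * samples_per_second * bytes_per_sample


# Hypothetical load: 1,000 targets x 500 series each, scraped every 15 s.
samples_per_second = 1_000 * 500 / 15

for days in (15, 365):
    gib = needed_disk_bytes(days, samples_per_second) / 2**30
    print(f"{days:>3} days -> ~{gib:,.0f} GiB")
```

Disk usage grows linearly with retention, so moving from the 15-day default to a year multiplies the local footprint by about 24, which is the motivation for offloading older data to remote storage.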

- 19. PGDay.IT 2019 – 17 Magggio 2019 -Bologna 19 di 47 TimescaleDB

- 20. TimescaleDB: open source (Apache 2 license); time-oriented features; enables long-term storage for Prometheus. https://www.timescale.com/

- 21. Docker installation guide: https://docs.timescale.com/v1.2/getting-started/installation/docker/installation-docker

- 22. Licensing: https://www.timescale.com/products

- 23. Setting up TimescaleDB

```shell
# Connect to PostgreSQL, using a superuser named 'postgres'
psql -U postgres -h localhost
```

```sql
-- Create a database (unquoted identifiers are folded to lower case, so it is named mydb)
CREATE DATABASE myDB;

-- Connect to the database (psql meta-command)
\c mydb

-- Extend the database with TimescaleDB
CREATE EXTENSION IF NOT EXISTS timescaledb CASCADE;
```

- 24. Hypertable: an abstraction of a single continuous table across all space and time intervals, queried via vanilla SQL. We start by creating a regular SQL table, then convert it into a hypertable partitioned by time:

```sql
CREATE TABLE sensor_data (
  time        TIMESTAMPTZ      NOT NULL,
  location    TEXT             NOT NULL,
  temperature DOUBLE PRECISION NULL,
  humidity    DOUBLE PRECISION NULL,
  light       DOUBLE PRECISION NULL
);

SELECT create_hypertable('sensor_data', 'time');
```
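Partitioning by time means each row is routed to a chunk covering a fixed time range. The Python sketch below shows that routing idea under two stated assumptions: a chunk interval of 7 days (the `chunk_time_interval` default we assume for TimescaleDB 1.x), and chunk boundaries aligned to whole multiples of the interval counted from the Unix epoch. It illustrates the concept only; it is not TimescaleDB's internal code.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
CHUNK_INTERVAL = timedelta(days=7)  # assumed default chunk_time_interval


def chunk_range(ts, interval=CHUNK_INTERVAL):
    """Return the [start, end) time range of the chunk that would hold `ts`,
    assuming chunks are aligned to whole multiples of `interval` since the epoch."""
    n = (ts - EPOCH) // interval  # timedelta // timedelta -> int (floor division)
    start = EPOCH + n * interval
    return start, start + interval


rows = [
    datetime(2019, 5, 17, 10, 0, tzinfo=timezone.utc),
    datetime(2019, 5, 17, 23, 59, tzinfo=timezone.utc),
    datetime(2019, 5, 25, 8, 30, tzinfo=timezone.utc),
]
for ts in rows:
    start, end = chunk_range(ts)
    print(ts.isoformat(), "->", start.date(), "to", end.date())
```

The first two rows share a chunk while the third falls into the next one, which is why time-bounded queries only need to touch the chunks overlapping their range.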

- 25. Migration: use pg_dump to export the schema and data; copy over the database schema; choose which tables will become hypertables; back up the data (CSV); import the data into TimescaleDB.

- 26. Prometheus adapter tutorial: https://docs.timescale.com/v1.3/tutorials/prometheus-adapter

- 27. prometheus-postgresql-adapter: a translation proxy. Incoming data must first be deserialized and then converted into the pg_prometheus format before it is inserted into the database, so pg_prometheus is required. http://github.com/timescale/prometheus-postgresql-adapter/

- 28. pg_prometheus: a PostgreSQL extension that translates from the Prometheus data model into a compact SQL model. https://github.com/timescale/pg_prometheus
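The translation step can be pictured as turning each decoded sample into a single text line in the Prometheus exposition style, `name{label="value",...} value timestamp_ms`. We assume here that this is the input format accepted by pg_prometheus' sample type (its README inserts lines such as `cpu_usage{service="nginx",host="machine1"} 34.6 1494595898000`); the helper names below are hypothetical and the sketch stops before any database call.

```python
import math


def escape_label_value(value):
    # The text exposition format escapes backslash, double quote and line feed.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def to_exposition_line(name, labels, value, timestamp_ms):
    """Render one sample as name{k="v",...} value timestamp_ms (labels sorted by name)."""
    pairs = ",".join(f'{k}="{escape_label_value(v)}"' for k, v in sorted(labels.items()))
    if math.isnan(value):
        text = "NaN"
    elif math.isinf(value):
        text = "+Inf" if value > 0 else "-Inf"
    else:
        text = repr(float(value))
    return f"{name}{{{pairs}}} {text} {timestamp_ms}"


line = to_exposition_line("cpu_usage", {"service": "nginx", "host": "machine1"}, 34.6, 1494595898000)
print(line)
```

In real code the resulting string would be sent as a bound query parameter rather than concatenated into SQL, so quoting inside label values cannot break the statement.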

- 29. pg_prometheus – normalization: data is stored in a "normalized format": labels are stored in a labels table, metric values in a values table.

Values table:

```
  Column   |           Type           | Modifiers
-----------+--------------------------+-----------
 time      | timestamp with time zone |
 value     | double precision         |
 labels_id | integer                  |
```

Labels table:

```
   Column    |  Type   |                          Modifiers
-------------+---------+-------------------------------------------------------------
 id          | integer | not null default nextval('metrics_labels_id_seq'::regclass)
 metric_name | text    | not null
 labels      | jsonb   |
```
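The benefit of this normalization is that each distinct label set is stored once and every sample only carries an integer reference to it. The sketch below reproduces that layout with Python's built-in `sqlite3` so it runs without a server: SQLite stands in for PostgreSQL and a canonical JSON text column stands in for `jsonb`. The `metrics_labels` name follows the sequence name shown above; `metrics_values` and the `insert_sample` helper are assumptions for illustration.

```python
import json
import sqlite3

# In-memory stand-in for the normalized layout (SQLite instead of PostgreSQL).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE metrics_labels (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    metric_name TEXT NOT NULL,
    labels      TEXT NOT NULL,  -- canonical JSON of the label set (jsonb in PostgreSQL)
    UNIQUE (metric_name, labels)
);
CREATE TABLE metrics_values (
    time      TEXT NOT NULL,    -- ISO-8601 timestamp
    value     REAL,
    labels_id INTEGER REFERENCES metrics_labels(id)
);
""")


def insert_sample(name, labels, ts, value):
    """Store the label set once, then append a value row that points at it."""
    key = json.dumps(labels, sort_keys=True)  # canonical form so equal sets deduplicate
    conn.execute(
        "INSERT OR IGNORE INTO metrics_labels (metric_name, labels) VALUES (?, ?)",
        (name, key),
    )
    (labels_id,) = conn.execute(
        "SELECT id FROM metrics_labels WHERE metric_name = ? AND labels = ?",
        (name, key),
    ).fetchone()
    conn.execute(
        "INSERT INTO metrics_values (time, value, labels_id) VALUES (?, ?, ?)",
        (ts, value, labels_id),
    )


insert_sample("cpu_usage", {"host": "machine1", "service": "nginx"}, "2019-05-17T10:00:00Z", 34.6)
insert_sample("cpu_usage", {"service": "nginx", "host": "machine1"}, "2019-05-17T10:00:15Z", 35.1)
insert_sample("cpu_usage", {"host": "machine2", "service": "nginx"}, "2019-05-17T10:00:00Z", 12.0)

# Join the two tables back into one row per sample.
rows = conn.execute("""
    SELECT v.time, l.metric_name, l.labels, v.value
    FROM metrics_values v JOIN metrics_labels l ON l.id = v.labels_id
    ORDER BY v.time, l.labels
""").fetchall()
for row in rows:
    print(row)
```

Three samples produce only two label rows, because the first two samples describe the same series with their labels written in a different order.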

- 30. Installation: precompiled binaries; Docker image.

- 31. Run everything via Docker Compose:

```yaml
services:
  db:
    environment:
      POSTGRES_PASSWORD: postgres
      POSTGRES_USER: postgres
    image: timescale/pg_prometheus:latest
    ports:
      - 5432:5432/tcp
  prometheus:
    image: prom/prometheus:latest
    ports:
      - 9090:9090/tcp
    volumes:
      - ./prometheus.yml:/etc/prometheus/prometheus.yml:ro
  prometheus_postgresql_adapter:
    build:
      context: .
    depends_on:
      - db
      - prometheus
    environment:
      TS_PROM_LOG_LEVEL: debug
      TS_PROM_PG_DB_CONNECT_RETRIES: 10
      TS_PROM_PG_HOST: db
      TS_PROM_PG_PASSWORD: postgres
      TS_PROM_PG_SCHEMA: postgres
      TS_PROM_WEB_TELEMETRY_PATH: /metrics-text
    image: timescale/prometheus-postgresql-adapter:latest
    ports:
      - 9201:9201/tcp
version: '3.0'
```

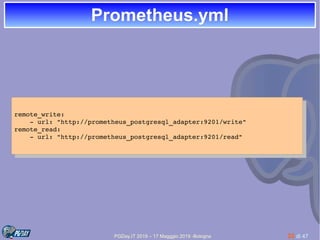

- 32. prometheus.yml:

```yaml
remote_write:
  - url: "http://prometheus_postgresql_adapter:9201/write"
remote_read:
  - url: "http://prometheus_postgresql_adapter:9201/read"
```

- 33. timescaledb-tune: a program for tuning a TimescaleDB database. It parses the existing postgresql.conf, ensures the extension is installed, and provides recommendations.
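The first two steps (parse postgresql.conf, check that the extension is loaded) can be sketched in a few lines of Python. This is not timescaledb-tune's code: it assumes a simplified `name [=] value [# comment]` line syntax, treats "installed" as `timescaledb` appearing in `shared_preload_libraries`, and uses the common PostgreSQL rule of thumb of 25% of RAM for `shared_buffers` as a stand-in recommendation. All function names are hypothetical.

```python
import re

# Simplified postgresql.conf line: name [=] value [# comment]; value may be single-quoted.
_SETTING = re.compile(r"^\s*([A-Za-z_][\w.]*)\s*=?\s*('(?:[^']|'')*'|[^\s#]+)")


def parse_conf(text):
    """Return {setting: value}; commented-out and blank lines do not match."""
    settings = {}
    for line in text.splitlines():
        m = _SETTING.match(line)
        if m:
            name, value = m.group(1).lower(), m.group(2)
            if value.startswith("'"):
                value = value[1:-1].replace("''", "'")
            settings[name] = value  # later lines override earlier ones
    return settings


def timescaledb_preloaded(settings):
    libs = settings.get("shared_preload_libraries", "")
    return "timescaledb" in [lib.strip() for lib in libs.split(",")]


def recommend_shared_buffers_mb(total_ram_mb):
    # Assumption: the common "25% of RAM" PostgreSQL rule of thumb.
    return total_ram_mb // 4


sample = """
#shared_preload_libraries = ''
shared_preload_libraries = 'pg_stat_statements,timescaledb'   # (change requires restart)
shared_buffers = 128MB
max_connections = 100
"""
settings = parse_conf(sample)
print(timescaledb_preloaded(settings), settings["shared_buffers"], recommend_shared_buffers_mb(8192))
```

A real tuner would also write the updated values back and consider CPU count, disk type and PostgreSQL version; the sketch only shows the read-and-check part.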

- 34. Prometheus HA

- 35. Prometheus HA: avoiding data loss; single point of failure; avoiding missed alerts; local storage is limited to a single node.

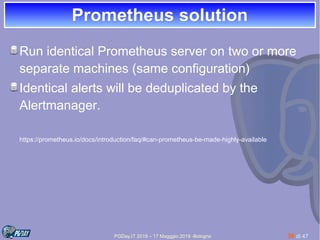

- 36. The Prometheus solution: run identical Prometheus servers (same configuration) on two or more separate machines; identical alerts are deduplicated by the Alertmanager. https://prometheus.io/docs/introduction/faq/#can-prometheus-be-made-highly-available
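Deduplication works because two replicas evaluating the same rule against the same target emit alerts with the same label set. The Python sketch below keys alerts by a hash of their sorted labels and keeps the first one seen. The Alertmanager computes its own fingerprint; SHA-256 is only a stand-in here, and the alert dictionaries are hypothetical.

```python
import hashlib
import json


def fingerprint(labels):
    """Stable identity for an alert: a hash of its sorted label set (illustrative)."""
    canonical = json.dumps(sorted(labels.items()))
    return hashlib.sha256(canonical.encode()).hexdigest()


def deduplicate(alerts):
    """Keep one alert per label set, in first-seen order."""
    seen = {}
    for alert in alerts:
        seen.setdefault(fingerprint(alert["labels"]), alert)
    return list(seen.values())


# Both replicas fire the same rule against the same target, so the label sets match.
from_replica_1 = {"labels": {"alertname": "InstanceDown", "instance": "db:9100"}, "source": "prom-1"}
from_replica_2 = {"labels": {"instance": "db:9100", "alertname": "InstanceDown"}, "source": "prom-2"}
other = {"labels": {"alertname": "HighLatency", "instance": "api:8080"}, "source": "prom-1"}

notifications = deduplicate([from_replica_1, from_replica_2, other])
for n in notifications:
    print(n["labels"]["alertname"], "from", n["source"])
```

The same logic explains a common pitfall: if each replica attaches a distinguishing label to its alerts (for example a hypothetical `replica` external label), the label sets differ and nothing is deduplicated, which is why such labels are usually dropped from alerts with `alert_relabel_configs`.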

- 37. https://mkezz.wordpress.com/2018/09/05/high-availability-prometheus-and-alertmanager/

- 38. But... it can be hard to keep data in sync. Parallel Prometheus instances often do not have identical data: scrape intervals may differ, since each instance has its own clock. Remote storage.
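A small simulation shows why two replicas with the same configuration still store different data. It assumes the replicas start their 5-second scrape loops at different moments and scrape a hypothetical counter that grows by 10 per second; all names and numbers are illustrative.

```python
def scrape_times(start_ms, interval_ms, count):
    """Timestamps at which one Prometheus instance scrapes a target."""
    return [start_ms + i * interval_ms for i in range(count)]


def counter_value(t_ms):
    # Hypothetical target: a counter growing by 10 per second.
    return 10 * t_ms // 1000


# Same config (5 s interval), but each replica starts its scrape loop at its own moment.
replica_1 = {t: counter_value(t) for t in scrape_times(0, 5_000, 4)}
replica_2 = {t: counter_value(t) for t in scrape_times(1_700, 5_000, 4)}

print(sorted(replica_1.items()))
print(sorted(replica_2.items()))
print("shared timestamps:", set(replica_1) & set(replica_2))
```

Neither replica is wrong, yet no sample matches exactly, so merging or comparing their local databases is awkward; writing through a single leader into shared remote storage sidesteps the problem.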

- 39. Prometheus HA + TimescaleDB: https://blog.timescale.com/prometheus-ha-postgresql-8de68d19b6f5/

- 40. Prometheus configuration

Node 1:

```yaml
global:
  scrape_interval: 5s
  evaluation_interval: 10s
scrape_configs:
  - job_name: prometheus
    static_configs:
      - targets: ['localhost:9090']
remote_write:
  - url: "http://localhost:9201/write"
remote_read:
  - url: "http://localhost:9201/read"
    read_recent: true
```

Node 2:

```yaml
global:
  scrape_interval: 5s
  evaluation_interval: 10s
scrape_configs:
  - job_name: prometheus
    static_configs:
      - targets: ['localhost:9091']
remote_write:
  - url: "http://localhost:9202/write"
remote_read:
  - url: "http://localhost:9202/read"
    read_recent: true
```

- 41. Start the Prometheus instances:

```shell
./prometheus --web.listen-address=:9091 --storage.tsdb.path=data1/
./prometheus --web.listen-address=:9092 --storage.tsdb.path=data2/
```

Start the Prometheus adapters:

```shell
./prometheus-postgresql-adapter --web.listen-address=:9201 --leader-election.pg-advisory-lock-id=1 --leader-election.pg-advisory-lock.prometheus-timeout=6s
./prometheus-postgresql-adapter --web.listen-address=:9202 --leader-election.pg-advisory-lock-id=1 --leader-election.pg-advisory-lock.prometheus-timeout=6s
```

- 42. Adapter leader election: allow only one adapter instance to write into the database.

- 43. Concurrency control: a consensus algorithm (Raft), or acquiring a shared mutex (lock) through ZooKeeper or Consul.

- 44. PostgreSQL advisory lock: available since PostgreSQL 8.2; a database lock whose meaning is enforced by the application.
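Leader election over an advisory lock boils down to "whoever holds lock id N writes; everyone else stands by". In PostgreSQL each adapter session would call `pg_try_advisory_lock(1)`, which returns true or false without blocking, and `pg_advisory_unlock(1)` to release. The Python sketch below simulates that with an in-process lock table so it runs without a database. The classes are hypothetical, real session-level advisory locks are reentrant with a counter (simplified away here), and the step-down trigger is only our reading of the `prometheus-timeout` flag name.

```python
import threading


class AdvisoryLocks:
    """In-process stand-in for PostgreSQL session-level advisory locks.
    try_lock mirrors pg_try_advisory_lock(key): non-blocking, returns a bool."""

    def __init__(self):
        self._owners = {}
        self._mutex = threading.Lock()

    def try_lock(self, key, session):
        with self._mutex:
            return self._owners.setdefault(key, session) == session

    def unlock(self, key, session):
        with self._mutex:
            if self._owners.get(key) == session:
                del self._owners[key]
                return True
            return False


class Adapter:
    """Hypothetical adapter replica: only the lock holder writes samples."""

    def __init__(self, name, locks, lock_id=1):
        self.name, self.locks, self.lock_id = name, locks, lock_id
        self.written = []

    def write(self, samples):
        if self.locks.try_lock(self.lock_id, self.name):
            self.written.extend(samples)
            return True
        return False  # in this sketch followers simply drop the batch

    def step_down(self):
        # e.g. when its Prometheus has sent nothing for longer than the configured timeout
        self.locks.unlock(self.lock_id, self.name)


locks = AdvisoryLocks()
a = Adapter("adapter-9201", locks)
b = Adapter("adapter-9202", locks)
print(a.write(["s1"]), b.write(["s1"]))  # a acquires the lock first and becomes leader
a.step_down()
print(b.write(["s2"]), a.write(["s2"]))  # b takes over after a releases the lock
```

Because the lock lives in the same PostgreSQL instance the adapters write to, no extra coordination service such as ZooKeeper or Consul is needed.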

- 45. Conclusion: HA support? Long-term storage support?

- 46. Questions?

