HPC DAY 2017 | Prometheus - energy efficient supercomputing

- 2. ACC Cyfronet AGH-UST
  - established in 1973
  - part of AGH University of Science and Technology in Krakow, Poland
  - member of the PIONIER consortium
  - operator of the Krakow MAN
  - centre of competence in HPC and Grid Computing
  - provides free computing resources for scientific institutions
  - home for supercomputers

- 3. Prometheus

- 4. Liquid Cooling
  - Water: up to 1000x more efficient heat exchange than air
  - Less energy needed to move the coolant
  - Hardware (CPUs, DIMMs) can handle ~80 °C
  - CPU/GPU vendors show TDPs up to 300 W
  - Challenge: cool 100% of the hardware with liquid
    - network switches
    - PSUs

- 5. MTBF
  - The less movement the better
    - fewer pumps
    - fewer fans
    - fewer HDDs
  - Example
    - pump MTBF: 50 000 hrs
    - fan MTBF: 50 000 hrs
    - 1800-node system MTBF: 7 hrs
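
The system-level figure on the MTBF slide is consistent with the usual series-system approximation: if the system counts as failed whenever any one of N independent, identical components fails, its MTBF is roughly the component MTBF divided by N. The sketch below reproduces the order of magnitude quoted on the slide. The count of four moving parts per node is an assumption chosen for illustration, not a number from the slides.

```python
def series_mtbf(component_mtbf_hours: float, component_count: int) -> float:
    """MTBF of a system that fails when any of its identical components fails.

    Assumes independent components with constant failure rates, so the
    individual failure rates (1 / MTBF) simply add up.
    """
    if component_count <= 0:
        raise ValueError("component_count must be positive")
    return component_mtbf_hours / component_count


COMPONENT_MTBF_HOURS = 50_000  # pump or fan MTBF quoted on the slide
NODES = 1_800                  # system size quoted on the slide
MOVING_PARTS_PER_NODE = 4      # ASSUMPTION: hypothetical count, not from the slides

system_mtbf = series_mtbf(COMPONENT_MTBF_HOURS, NODES * MOVING_PARTS_PER_NODE)
print(f"System MTBF: {system_mtbf:.1f} hours")
```

Under these assumptions the result lands close to the 7 hours on the slide, which is the point of the argument: every removed fan or pump lowers the component count and raises the system MTBF proportionally.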

- 6. Prometheus
  - HP Apollo 8000
  - 16 m², 20 racks (4 CDU, 16 compute)
  - 2.4 PFLOPS
  - PUE <1.05, 800 kW peak power
  - 2232 nodes:
    - 2160 CPU nodes: 2x Intel Haswell E5-2680v3, 128 GB RAM, IB FDR 56 Gb/s, Ethernet 1 Gb/s
    - 72 GPU nodes: +2 NVIDIA Tesla K40d
  - 53568 cores, up to 13824 per island
  - 279 TB DDR4 RAM
  - CentOS 7 + SLURM
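
The headline figures on this slide can be cross-checked against each other. The sketch below derives the core count, the per-island core limit and the total memory from the node counts. The 24 cores per node come from the node slide, the 576-node island size from the IB network slide, and reading "279 TB" as binary terabytes (1 TB = 1024 GB) is an assumption.

```python
CPU_NODES = 2160
GPU_NODES = 72               # same node type plus 2 GPUs each
CORES_PER_NODE = 24          # 2 sockets x 12 cores (from the node slide)
RAM_PER_NODE_GB = 128
LARGEST_ISLAND_NODES = 576   # from the IB network slide

total_nodes = CPU_NODES + GPU_NODES
total_cores = total_nodes * CORES_PER_NODE
island_cores = LARGEST_ISLAND_NODES * CORES_PER_NODE

# ASSUMPTION: the slide's "TB" is binary (1024 GB per TB).
total_ram_tb = total_nodes * RAM_PER_NODE_GB / 1024

print(f"nodes: {total_nodes}")
print(f"cores: {total_cores} (up to {island_cores} per island)")
print(f"RAM:   {total_ram_tb:.0f} TB")
```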

- 7. Prometheus storage
  - Diskless compute nodes
  - Separate project for storage
    - DDN SFA12kx hardware
  - Lustre-based
  - 2 file systems:
    - Scratch: 120 GB/s, 5 PB usable space
    - Archive: 60 GB/s, 5 PB usable space
  - HSM-ready
  - NFS for $HOME and software

- 8. Why Apollo 8000?
  - Most energy efficient
  - The only solution with 100% warm water cooling
  - Highest density
  - Lowest TCO

- 9. Even more Apollo
  - Focuses also on the ‘1’ in PUE!
    - Power distribution
    - Fewer fans
    - Detailed monitoring: ‘energy to solution’
  - Dry node maintenance
  - Fewer cables
  - Prefabricated piping
  - Simplified management
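
PUE is the ratio of total facility power to IT equipment power, so the ‘1’ is the IT load itself and everything above 1 is cooling and power-distribution overhead. The sketch below illustrates why, at a PUE this low, trimming the IT load matters as much as trimming the overhead. It assumes the 800 kW peak figure from the Prometheus slide is the IT load, which the slides do not state.

```python
def facility_power_kw(it_power_kw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_power_kw * pue


IT_POWER_KW = 800.0  # ASSUMPTION: treats the quoted peak power as the IT load
PUE = 1.05           # upper bound quoted on the Prometheus slide

total_kw = facility_power_kw(IT_POWER_KW, PUE)
overhead_kw = total_kw - IT_POWER_KW
print(f"Facility: {total_kw:.0f} kW, of which overhead: {overhead_kw:.0f} kW")

# A 5% cut in IT power (for example from fewer fans) is about as large
# as the entire cooling and distribution overhead at this PUE.
it_saving_kw = 0.05 * IT_POWER_KW
print(f"5% IT saving: {it_saving_kw:.0f} kW")
```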

- 10. Deployment timeline
  - Day 0 - Contract signed (20.10.2014)
  - Day 23 - Installation of the primary loop starts
  - Day 35 - First delivery (service island)
  - Day 56 - Apollo piping arrives
  - Day 98 - 1st and 2nd island delivered
  - Day 101 - 3rd island delivered
  - Day 111 - Basic acceptance ends
  - Official launch event on 27.04.2015
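
To place the milestones on a calendar, the day offsets can be added to the contract date. This is a small sketch that assumes the day numbers are calendar days counted from the signing date; the slide does not say whether they are calendar or working days.

```python
from datetime import date, timedelta

CONTRACT_SIGNED = date(2014, 10, 20)  # Day 0 on the slide (20.10.2014)
LAUNCH_EVENT = date(2015, 4, 27)      # official launch (27.04.2015)

MILESTONES = {
    23: "Installation of the primary loop starts",
    35: "First delivery (service island)",
    56: "Apollo piping arrives",
    98: "1st and 2nd island delivered",
    101: "3rd island delivered",
    111: "Basic acceptance ends",
}


def milestone_date(day: int) -> date:
    """Calendar date of a milestone, counting calendar days from Day 0."""
    return CONTRACT_SIGNED + timedelta(days=day)


for day, event in MILESTONES.items():
    print(f"Day {day:>3} ({milestone_date(day).isoformat()}): {event}")

launch_day = (LAUNCH_EVENT - CONTRACT_SIGNED).days
print(f"Day {launch_day} ({LAUNCH_EVENT.isoformat()}): Official launch event")
```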

- 11. Facility preparation
  - Primary loop installation took 5 weeks
  - Secondary (prefabricated) just 1 week
  - Upgrade of the raised floor done "just in case"
  - Additional pipes for leakage/condensation drain
  - Water dam with emergency drain
  - A lot of space needed for the hardware deliveries (over 100 pallets)

- 13. Secondary loop

- 14. Prometheus - node
  - HP XL730f/XL750f Gen9
    - 2x Intel Xeon E5-2680 v3 (Haswell)
    - 24 cores, 2100-3300 MHz
    - 30 MB cache, 128 GB RAM DDR4
    - Mellanox ConnectX-3 IB FDR 56 Gb/s

- 15. Prometheus - rack
  - HP Apollo 8000:
    - 8 cells – 9 trays each – 18 CPU or 9 GPU nodes
    - 8 IB FDR 36p 56 Gb/s switches
    - Dry-disconnect and HEX water cooling
    - HVDC 480 V
  - HP Apollo 8000 CDU:
    - Heat exchanger
    - Vacuum pump
    - Cooling controller
    - IB FDR 36p 56 Gb/s (18+3) dist+core switches

- 16. Prometheus – compute island

- 17. Prometheus – IB network
  - IB core network
    - Service island: service nodes, I/O nodes
    - Compute island: 576 CPU nodes
    - Compute island: 576 CPU nodes
    - Compute island: 576 CPU nodes
    - Compute island: 432 CPU nodes, 72 GPU nodes
  - Over 250 Tb/s aggregate throughput
  - 30 km of cables
  - 217 switches
  - more than 10 000 ports

- 18. Monitoring
  - SLURM node states
  - IB network traffic
  - Monitoring of:
    - CPU frequencies and temperatures
    - Memory usage
    - NFS and Lustre bandwidth/IOPS/MDOPS
    - Power and cooling

- 21. Top500 and Green500
  - 3rd-level submission (Nov 2015)
    - #72, 2068 Mflops/W
    - #1 petascale x86 system in Europe
  - Submission after expansion (Nov 2015)
    - #38, 1.67 PFLOPS Rmax
    - GPUs not used for the run

- 22. Application & software
  - Academic workload
    - Lots of small/medium jobs
    - Few big jobs
  - 330 projects
  - 750 users
  - Main fields:
    - Chemistry
    - Biochemistry (pharmaceuticals)
    - Astrophysics

- 23. thatmpi code
  - Institute of Nuclear Physics PAS in Krakow
  - Study of non-relativistic shock waves hosted by supernova remnants that are believed to generate most of the Galactic cosmic rays
  - Thatmpi: Two-and-a-Half-Dimensional Astroparticle Stanford code with MPI
  - particle-in-cell (PIC) code
    - 2.5-dimensional particle dynamics
    - fully relativistic, with electromagnetism
  - colliding plasma jets with perpendicular B-field
  - large simulations: up to 10k cores/run

- 24. thatmpi: left leptons jet animation
  - A. Dorobisz, M. Kotwica

- 25. thatmpi: joint development
  - low-level:
    - vectorization
    - flow control refactoring
    - register and cache-use optimization
  - high-level:
    - modernization from FORTRAN77 to Fortran2013
    - new particle sorting method
    - portable data dumping with HDF5
    - communication buffering
  - total time reduction: over 20%
  - over 1.2 MWh less energy per run (350 nodes, 60 h)
  - Future: 3D, domain partitioning, code refactoring
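
The energy figure on this slide ties the run-time reduction to node-hours saved. As a rough plausibility check, the sketch below computes the average per-node power that the quoted numbers would imply. It assumes that 60 h is the run time after optimization and that the reduction is exactly 20%; the slide gives only lower bounds ("over 20%", more than 1.2 MWh), so the result is an illustration rather than a measured value.

```python
def implied_node_power_w(energy_saved_kwh: float, nodes: int,
                         optimized_hours: float, time_reduction: float) -> float:
    """Average per-node power (W) implied by an energy saving.

    time_reduction is the fraction of the original run time that was removed.
    """
    if not 0.0 < time_reduction < 1.0:
        raise ValueError("time_reduction must be between 0 and 1")
    original_hours = optimized_hours / (1.0 - time_reduction)
    node_hours_saved = nodes * (original_hours - optimized_hours)
    return 1000.0 * energy_saved_kwh / node_hours_saved


# Figures from the slide; their interpretation is an ASSUMPTION (see above).
power_w = implied_node_power_w(
    energy_saved_kwh=1200.0,  # "1.2 MWh less energy per run"
    nodes=350,
    optimized_hours=60.0,     # ASSUMPTION: run time after optimization
    time_reduction=0.20,      # ASSUMPTION: exactly 20%
)
print(f"Implied average power per node: {power_w:.0f} W")
```

Under these assumptions the implied draw is a little under 230 W per node, a plausible value for a loaded dual-socket server, which suggests the slide's figures are mutually consistent.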

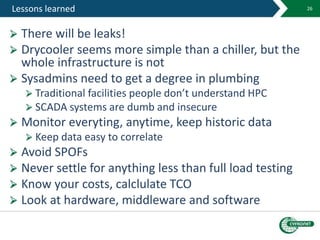

- 26. Lessons learned
  - There will be leaks!
  - A drycooler seems simpler than a chiller, but the whole infrastructure is not
  - Sysadmins need to get a degree in plumbing
  - Traditional facilities people don’t understand HPC
  - SCADA systems are dumb and insecure
  - Monitor everything, anytime, keep historic data
  - Keep data easy to correlate
  - Avoid SPOFs
  - Never settle for anything less than full load testing
  - Know your costs, calculate TCO
  - Look at hardware, middleware and software

- 27. Thank you!