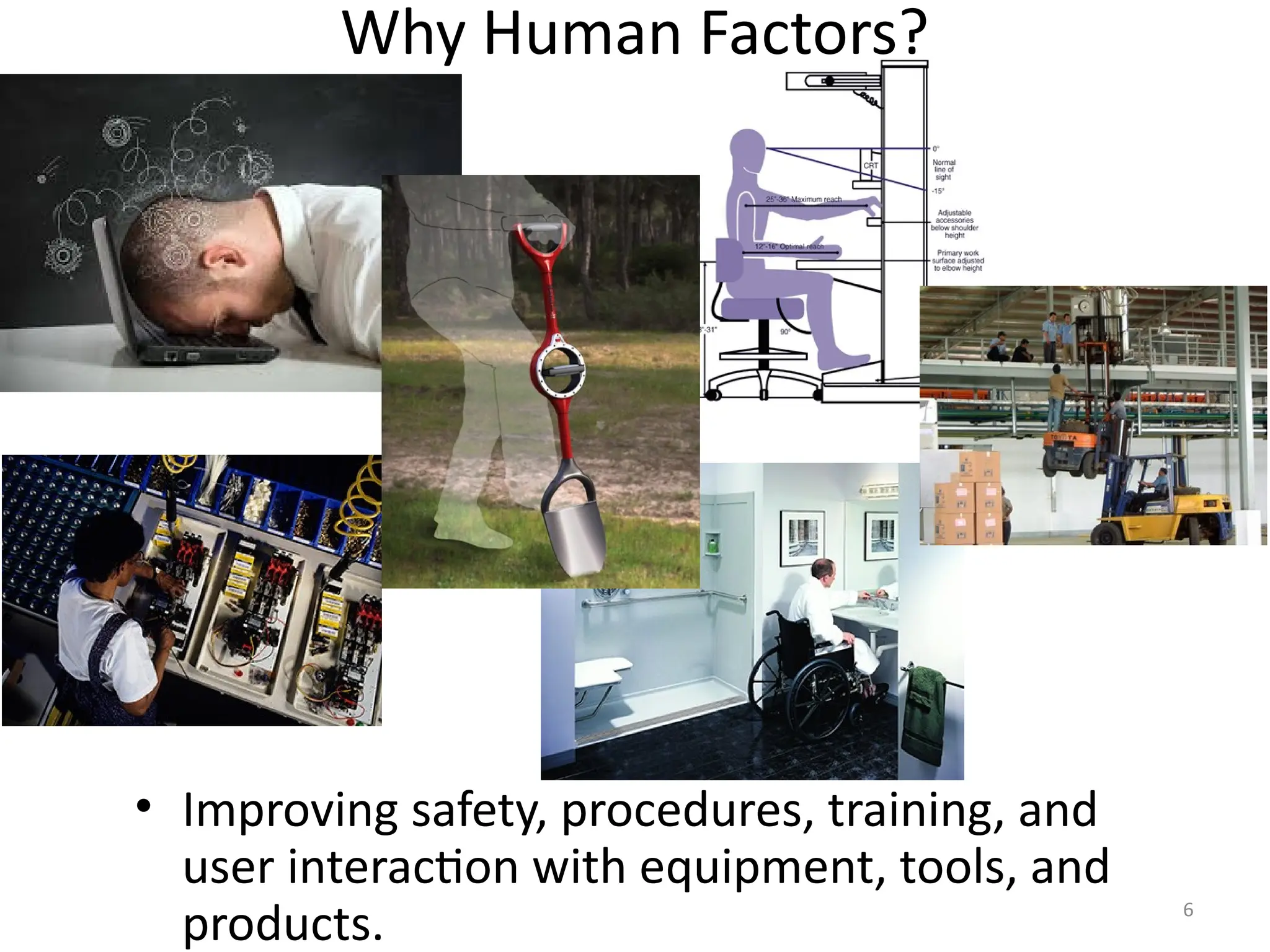

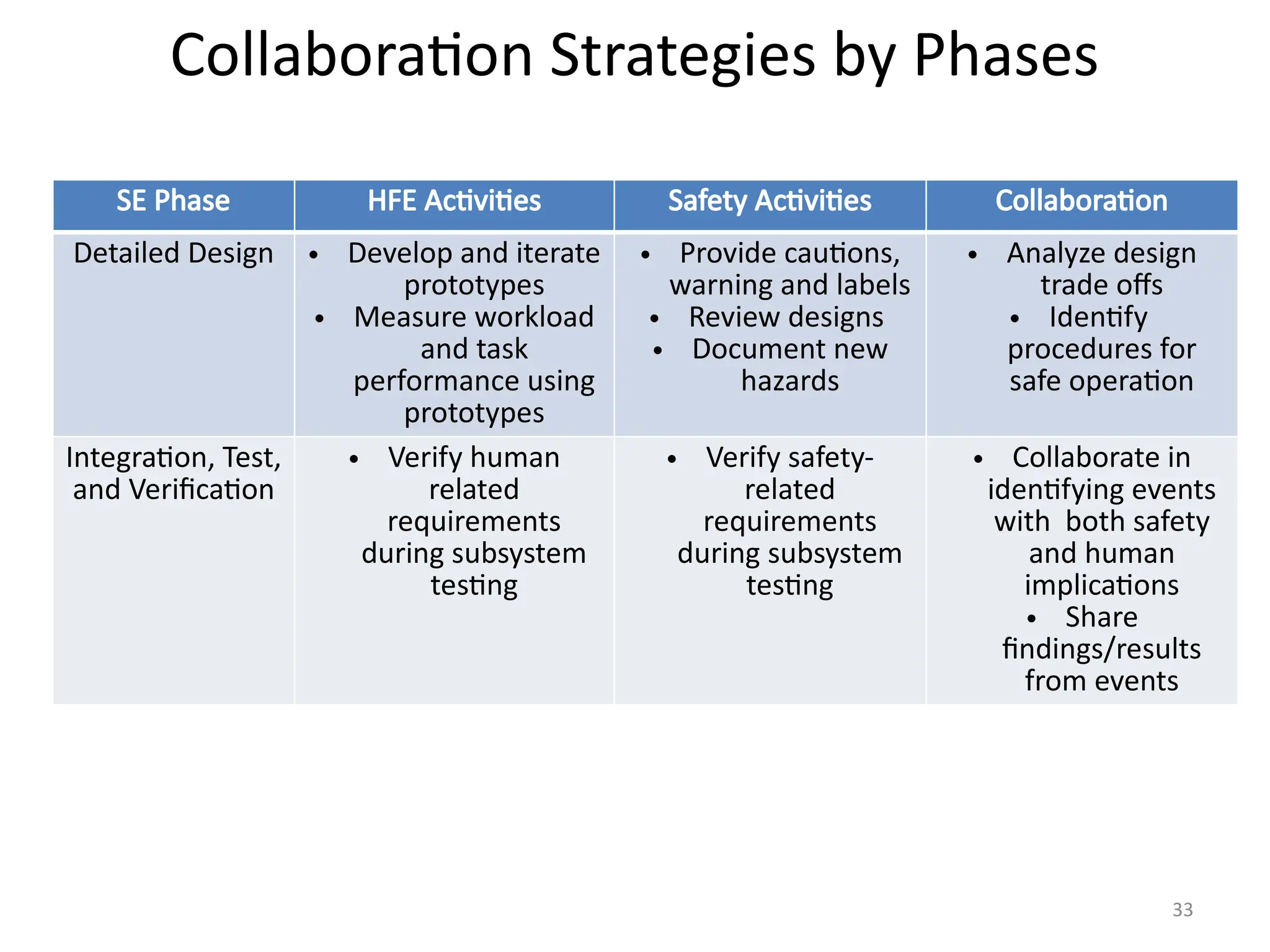

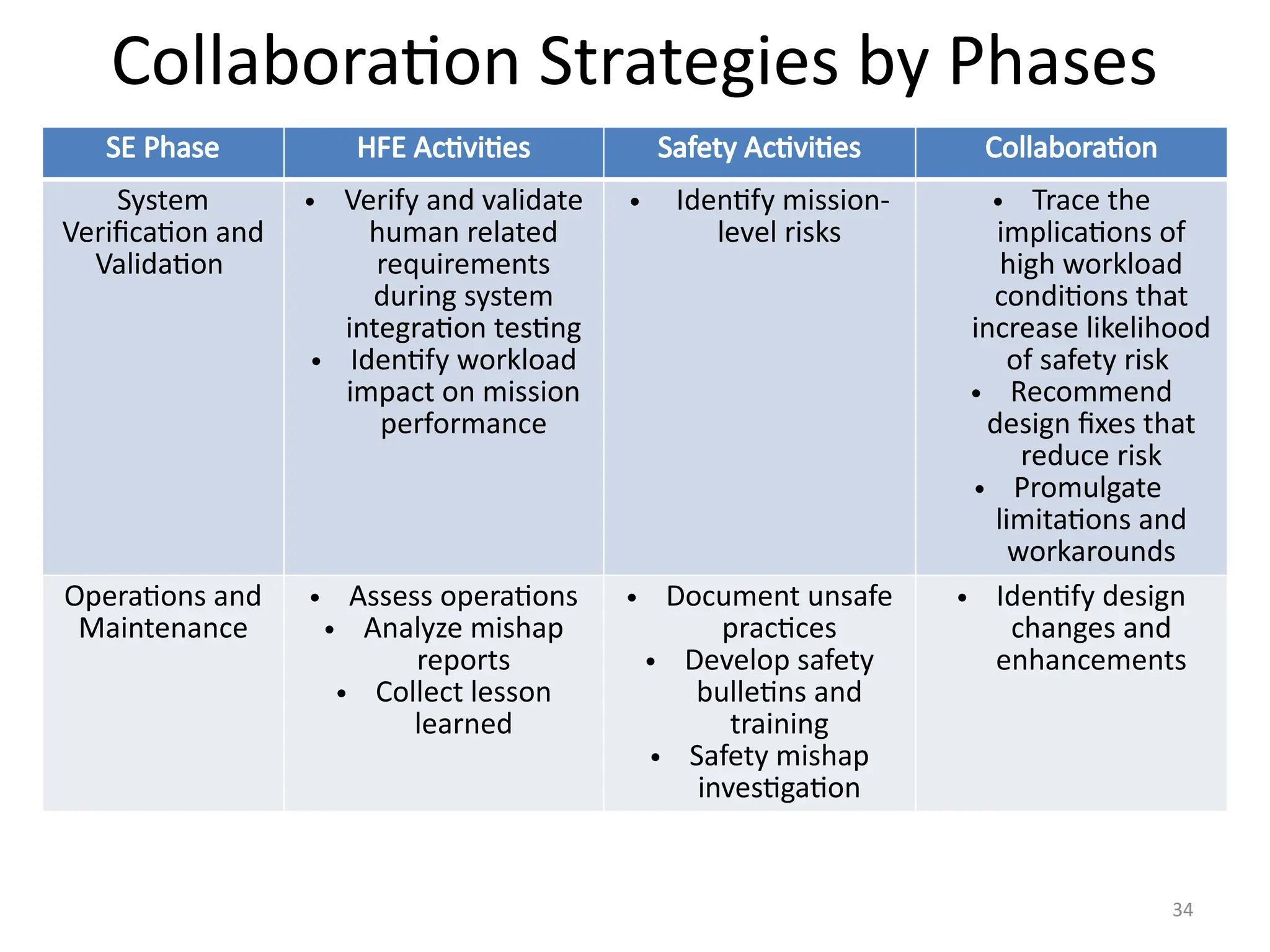

The presentation outlines the distinction between human factors and human systems integration, emphasizing how human factors principles are applied in system design to improve safety and user interaction. It discusses the Swiss cheese model of safety, which illustrates how active failures and latent conditions contribute to errors, and gives examples of physical and cognitive human factors in system design. It also stresses that collaboration between safety and human factors professionals is essential for effective design and risk assessment, so that human capabilities and limitations are taken into account throughout the systems engineering process.