Image Recognition Expert System based on deep learning

- 1. Page | 1 Artificial Intelligence Seminar Report on Image Recognition Expert System based on deep learning. Submitted by: Rege Prathamesh Milind (Roll number 1605012), Department of Mechanical Engineering, K. J. Somaiya College of Engineering, Mumbai 400077. Jan/April 2017.

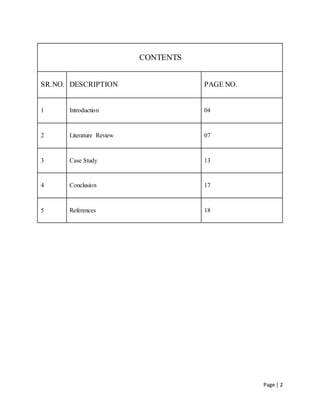

- 2. CONTENTS

  | Sr. No. | Description | Page No. |
  |---|---|---|
  | 1 | Introduction | 04 |
  | 2 | Literature Review | 07 |
  | 3 | Case Study | 13 |
  | 4 | Conclusion | 17 |
  | 5 | References | 18 |

- 3. Abstract

  Image detection systems are gradually being popularized and applied. This report discusses a new expert system, hybridized with deep learning, that applies image detection to road safety. First, we discuss the ability of low-power systems to accurately detect objects in high-resolution images. Second, we discuss knowledge-based systems and their understanding of image processing. Third, we discuss the use of the Fourier transform in deep learning for an image recognition system. Finally, we combine the results of all three studies to detect vehicles jumping signals at traffic signal crossings.

- 4. Introduction

  In AI, expert systems are computer systems that perform decision-making with the capacity of a human expert. They are used to solve complex problems mainly through if-then rules held as knowledge, rather than through conventional procedural programming. Expert systems were among the first truly successful forms of AI software.

  Expert systems were introduced in the 1970s; the earliest came out of the Stanford Heuristic Programming Project led by Edward Feigenbaum. They proliferated in the 1980s. The first expert system used in a design capacity was the Synthesis of Integral Design (SID) software program, which was written in LISP. In the 1990s the idea of the expert system as a standalone system faded. Many vendors (such as SAP, Siebel and Oracle) integrated expert systems into their products so that they go hand in hand with business automation and integration.

  Expert systems are knowledge-based systems. An expert system consists of three subsystems: a user interface, an inference engine, and a knowledge base. The knowledge base contains the rules and the inference engine applies them. There are two modes of inferencing: forward chaining, which starts from known facts and applies rules to derive new facts, and backward chaining, which starts from a goal and works back to the facts that support it.

  The techniques used in the inference engine include:

  1. Truth maintenance.
  2. Hypothetical reasoning.
  3. Fuzzy logic.
  4. Ontology classification.
  5. Convolutional neural networks.

  The advantages of expert systems are:

  1. Rules are intuitive to specify and easy to understand.
  2. Ease of maintenance is the most obvious benefit.
  3. The knowledge base can be updated and extended.

- 5. Advantages of expert systems (continued):

  4. They contain a large amount of information.

  The disadvantages of expert systems are:

  1. The most common is the knowledge acquisition problem (acquiring knowledge from experts is tedious).
  2. They cannot learn from their mistakes and adapt.
  3. Mimicking the knowledge of an expert is difficult.
  4. Performance is a problem for expert systems built with tools such as LISP.

  A prominent application of expert systems is image recognition (with the help of convolutional neural networks, i.e. deep learning). It is most commonly used in the medical field, biology and mechanical systems. Image recognition is a classical problem in machine vision: determining whether image data contains a specific object or feature. It comes in the following varieties:

  1. Object recognition/classification.
  2. Identification.
  3. Detection.

  The benchmark in image recognition is the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), which has been held annually since 2010. The performance of deep learning in this challenge is close to that of humans.

  The specialized tasks in image recognition are:

  1. Content-based image retrieval.
  2. Pose estimation.
  3. Optical character recognition.
  4. 2D code reading.
  5. Facial recognition.
  6. Shape recognition technology.

- 6. Recently, image recognition and detection have become common across many fields of technology: in social networks and cameras to recognize faces; in medicine and microbiology to detect bacteria, germs and small obstructions during surgery; and in phones and mechanical safety systems. With the rise of phones and wireless technologies, the use of deep learning for image recognition has grown. Cameras are now embedded in many wireless phones, safety systems and unmanned aerial vehicles. Image recognition is also growing in automation, with increasing use of robots with compound eyes, and it is used for pattern recognition in gaming and other fields. In this report we expand on the use of image recognition systems in mechanical safety devices.

- 7. Literature Review

  1. Kent Gauen, Rohit Rangan, Anup Mohan, Yung-Hsiang Lu, Wei Liu, Alexander C. Berg. “Low-Power Image Recognition Challenge”. IEEE Rebooting Computing Initiative.

  Statement: Large-scale use of cameras in battery-powered systems has heightened the need for energy-efficient image recognition. The Low-Power Image Recognition Challenge (LPIRC) sets out to establish a benchmark for comparing low-power image recognition solutions.

  In recent years, the growing availability of cameras has led to significant progress in image recognition. However, it also raises the question of energy consumption. Embedded cameras are used for image recognition in many battery-powered systems, where energy efficiency is a critical criterion.

  Before LPIRC there was no widely accepted benchmark for comparing low-power image recognition solutions, and no metric that accounted for both energy efficiency and recognition accuracy. LPIRC began as a competition that considers both criteria. It is an offshoot of ILSVRC and began in 2015.

  The benchmark metrics used in LPIRC are:

  I. Datasets metric: At ILSVRC 2013, New York University proposed the “OverFeat” model, which used deep learning to simultaneously classify, locate and detect objects [1], and specialized datasets were created. An example is PARASEMPRE in semantic processing [2]. LPIRC considers object detection, which combines classification and localization. The existing datasets for object detection include PASCAL VOC, ImageNet, ILSVRC and COCO [3][4][5][6]. LPIRC uses the ILSVRC dataset because it is the largest one; the LPIRC dataset is a subset of ILSVRC.

- 8. II. Evaluation metric: Like ILSVRC, LPIRC uses mAP (mean Average Precision) to measure the accuracy of object detection [5]. Each detection has the format $(b_{ij}, s_{ij})$ for image $I_i$ and object class $C_j$, where $b_{ij}$ is the bounding box and $s_{ij}$ is the score. Bounding boxes are evaluated with intersection over union (IoU). With $x$ the reported bounding box region and $y$ the ground-truth bounding box region:

  $$\mathrm{IoU} = \frac{|x \cap y|}{|x \cup y|} \tag{1}$$

  To accommodate small objects (less than 25×25 pixels), the threshold is loosened by allowing a 5-pixel margin on each side of the bounding box:

  $$\mathrm{thr}(B) = \min\left(0.5,\ \frac{wh}{(w+10)(h+10)}\right) \tag{2}$$

  where $w$ and $h$ are the width and height of the ground-truth box $B$. A detection is a true positive if its IoU with the ground-truth box exceeds the threshold defined in equation (2); otherwise it is a false positive. When there are multiple detections of the same object, only the one with the highest score is considered a true positive. The final score is given by

  $$\text{Total score} = \frac{\mathrm{mAP}}{\text{Total energy consumption}} \tag{3}$$

  In conclusion, over the last two years LPIRC has established itself as a benchmark for low-power image detection, and both mAP and energy efficiency have improved over that period.
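Equations (1) to (3) can be made concrete with a short Python sketch. This is only an illustration under stated assumptions: the `Box` type, the corner-coordinate convention and all function names are hypothetical and do not come from LPIRC or ILSVRC tooling, and the energy unit is left to the caller.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in pixel coordinates (assumed convention: x1 < x2, y1 < y2)."""
    x1: float
    y1: float
    x2: float
    y2: float

    @property
    def width(self) -> float:
        return max(0.0, self.x2 - self.x1)

    @property
    def height(self) -> float:
        return max(0.0, self.y2 - self.y1)

    @property
    def area(self) -> float:
        return self.width * self.height


def iou(reported: Box, truth: Box) -> float:
    """Equation (1): area of the intersection divided by area of the union."""
    overlap = Box(
        max(reported.x1, truth.x1),
        max(reported.y1, truth.y1),
        min(reported.x2, truth.x2),
        min(reported.y2, truth.y2),
    ).area  # width/height clamp to 0 when the boxes do not overlap
    union = reported.area + truth.area - overlap
    return overlap / union if union > 0 else 0.0


def threshold(truth: Box) -> float:
    """Equation (2): 0.5 for large boxes, loosened for small ground-truth boxes."""
    w, h = truth.width, truth.height
    return min(0.5, (w * h) / ((w + 10) * (h + 10)))


def is_true_positive(reported: Box, truth: Box) -> bool:
    """A detection counts when its IoU exceeds the threshold of equation (2)."""
    return iou(reported, truth) > threshold(truth)


def total_score(mean_average_precision: float, energy: float) -> float:
    """Equation (3): accuracy per unit of energy consumed."""
    if energy <= 0:
        raise ValueError("energy must be positive")
    return mean_average_precision / energy


truth = Box(0, 0, 20, 20)       # a small 20x20 object
reported = Box(5, 0, 25, 20)    # the same size, shifted 5 pixels to the right
print(iou(reported, truth), threshold(truth), is_true_positive(reported, truth))
```

For the 20×20 box the threshold drops to 400/900, roughly 0.44, so the shifted detection with an IoU of 0.6 is accepted; a 100×100 box keeps the full 0.5 threshold.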

- 9. 2. Takashi Matsuyama, “Knowledge-Based Aerial Image Understanding Systems and Expert Systems for Image Processing”. IEEE Transactions on Geoscience and Remote Sensing, Vol. GE-25, No. 3, May 1987.

  Statement: AI, in the form of knowledge-based systems, has an extensive role in the automatic interpretation of remotely sensed imagery. The development of space aeronautics and drone technologies has led to extensive development of expert systems for aerial image understanding.

  Automatic interpretation of aerial photographs is now widely preferred and used. The analysis methods used are:

  i. Statistical classification methods of pixel understanding.
  ii. Target detection by template matching.
  iii. Shape and texture analysis by image processing.
  iv. Use of structural and contextual information.
  v. Image understanding of aerial photographs.

  Knowledge representation and reasoning strategy are major topics of research in AI, and many techniques have been developed: semantic networks and frames, logical inference and so on. They are used to solve problems requiring expertise [7]. They are flexible and are used to address the following problems:

  i. Noise in input image data and errors in image recognition.
  ii. Ill-defined problems.
  iii. Limited available information.
  iv. The need for versatile geometric reasoning capabilities.

  A blackboard model for aerial images allows flexible integration of diverse object detection modules. The blackboard is the database where all information is stored. Since all image recognition results are written to the blackboard, every subsystem can see them and use them to detect new objects.

- 10. However, sophisticated control structures are required to realize flexible image understanding [8]. Such control incorporates:

  i. Focus of attention to confine spatial domains.
  ii. Conflict resolution.
  iii. Error correction.

  ACRONYM [9] is used to detect complex 3D objects, which are represented by frames. It then matches the models against image features. Since it is difficult to detect features using bottom-up analysis alone, it also integrates top-down analysis.

  SIGMA is used to represent and reason about hypotheses [10]. In SIGMA three levels of reasoning are identified:

  i. Reasoning about structure and spatial relations between objects.
  ii. Reasoning about transformation of objects.
  iii. Reasoning about image segmentation.

  Geographic information systems and aerial image understanding complement each other. In conclusion, flexibility in integration is realized by solving problems with data mapping, data structuring, accurate correspondence and map-guided photo transformation.
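The blackboard idea described above, a shared store that every subsystem reads from and writes to, can be sketched in a few lines of Python. This is a toy illustration, not the design of any system in the reviewed paper: the `Hypothesis` and `Blackboard` classes, the two detector functions and their fixed outputs are all hypothetical stand-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass(frozen=True)
class Hypothesis:
    kind: str     # e.g. "road" or "house"
    x: int
    y: int
    source: str   # name of the subsystem that posted it


@dataclass
class Blackboard:
    entries: List[Hypothesis] = field(default_factory=list)

    def post(self, hypothesis: Hypothesis) -> bool:
        """Add a hypothesis unless an identical one is already on the board."""
        if hypothesis in self.entries:
            return False
        self.entries.append(hypothesis)
        return True

    def of_kind(self, kind: str) -> List[Hypothesis]:
        return [h for h in self.entries if h.kind == kind]


KnowledgeSource = Callable[[Blackboard], List[Hypothesis]]


def road_detector(board: Blackboard) -> List[Hypothesis]:
    # Stand-in for a bottom-up detector that would analyse the image itself.
    return [Hypothesis("road", 10, 0, "road_detector")]


def house_detector(board: Blackboard) -> List[Hypothesis]:
    # Top-down step: propose a house beside every road already on the board.
    return [
        Hypothesis("house", road.x + 5, road.y, "house_detector")
        for road in board.of_kind("road")
    ]


def run(board: Blackboard, sources: List[KnowledgeSource]) -> Blackboard:
    """Let every source read the board and post until nothing new appears."""
    changed = True
    while changed:
        changed = False
        for source in sources:
            for hypothesis in source(board):
                changed = board.post(hypothesis) or changed
    return board


board = run(Blackboard(), [house_detector, road_detector])
print([(h.kind, h.x, h.y) for h in board.entries])
```

The house detector finds nothing on its first pass and succeeds on the second, once the road hypothesis is on the board. A real system would add the control structures listed above (focus of attention, conflict resolution, error correction), which this sketch omits.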

- 11. 3. Yuki Kamikubo, Minoru Watanabe, Shoji Kawahito, “Image recognition system using an optical Fourier transform on a dynamically reconfigurable vision architecture”.

  Statement: Recently, several varieties of image recognition using the Fourier transform have been proposed. The benefit of the Fourier transform is its position-independent image recognition capability. However, computing the Fourier transform of a high-resolution image is expensive, so the processing time needs to be shortened using dynamic reconfiguration.

  Demand for high-speed image recognition has been increasing with the development of autonomous vehicles, aircraft and robots [11]. The frame rates used for image recognition are limited to 30 fps, whereas the frame rates required are higher than 1000 fps.

  Image recognition is conventionally executed sequentially. Numerous template images are stored in memory in advance, and template matching is executed between external images and the template images. Recognition slows down when many images must be recognized simultaneously. To remove this bottleneck, an optoelectronic device with a holographic memory is introduced. Multiple template images are stored in this large holographic memory. Since the device has a massively parallel optical connection (more than 1 million channels), template information can be read out in a very short period. Yet in position-independent image recognition, which is required in real-world operation, template matching takes a long time. This can be resolved by the Fourier transform [12] [13] [14].

  The Fourier transform is well known to be useful for position-independent image recognition. In this architecture it is performed optically, outside the VLSI chip, and used together with a dynamically reconfigurable vision chip. The image is focused on a PAL-SLM, which is an optical read-in and read-out device. The coherent image passes through a set of lenses, after which the power spectrum of the image is received on a photodiode array. The Fourier transform is executed constantly and automatically, and the power spectrum is position independent.

- 12. The use of photodiode arrays reduces the required time drastically: only 1 ms elapses for the transfer and matching of 100000 templates.

  The Fourier transform is calculated theoretically as follows. The amplitude $\varphi(x, y)$ of the diffraction is

  $$\varphi(x, y) \propto \iint_{-\infty}^{\infty} I(x_0, y_0)\, L(x_0, y_0)\, \exp[jkr]\, dx_0\, dy_0$$

  In the Fresnel region, $r$ can be approximated as

  $$r \approx f + \frac{(x_0 - x)^2 + (y_0 - y)^2}{2f} \tag{4}$$

  where

  - $f$ is the distance between the lens plane and the observation plane,
  - $k$ is the wave number,
  - $(x_0, y_0)$ are the coordinates in the lens plane,
  - $(x, y)$ are the coordinates in the observation plane,
  - $I(x_0, y_0)$ is the image information,
  - $L(x_0, y_0)$ is the phase modulation of the lens:

  $$L(x_0, y_0) = \exp\left[-j\,\frac{k}{2f}\left(x_0^2 + y_0^2\right)\right]$$

  Substituting (4) and $L$ into the integral, the quadratic terms in $x_0$ and $y_0$ cancel, and the remaining phase factors that depend only on $f$, $x$ and $y$ move outside the integral. The Fourier transform is therefore obtained as

  $$\varphi(x, y) \propto \iint_{-\infty}^{\infty} I(x_0, y_0)\, \exp\left[-j\,\frac{k}{f}\left(x_0 x + y_0 y\right)\right] dx_0\, dy_0$$

  The diffracted light intensity is

  $$P(x, y) = \varphi(x, y)\, \varphi^{*}(x, y) \tag{5}$$

  where $^{*}$ denotes the complex conjugate. The result $P(x, y)$ is the power spectrum of the image.

  In conclusion, the Fourier-transform-based dynamically reconfigurable vision architecture recognized three artificial images by detecting their power spectra. The architecture uses a PAL-SLM and a lens. The Fourier transform can be executed in real time, which makes it useful for extracting power-spectrum features of each image in real time.
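The position independence of the power spectrum in equation (5) can be checked numerically. The sketch below is a discrete, software analogue and not the optical system itself: it uses a naive 2-D discrete Fourier transform in pure Python, circular shifts, and two made-up 8×8 templates. All function names and test images are hypothetical.

```python
import cmath
from typing import Dict, Iterable, List, Tuple

Image = List[List[float]]


def power_spectrum(image: Image) -> Image:
    """Discrete analogue of P = phi * conj(phi), with phi the 2-D DFT of the image."""
    rows, cols = len(image), len(image[0])
    spectrum = []
    for u in range(rows):
        line = []
        for v in range(cols):
            phi = sum(
                image[r][c] * cmath.exp(-2j * cmath.pi * (u * r / rows + v * c / cols))
                for r in range(rows)
                for c in range(cols)
            )
            line.append((phi * phi.conjugate()).real)
        spectrum.append(line)
    return spectrum


def shift(image: Image, dy: int, dx: int) -> Image:
    """Circularly shift the image by dy rows and dx columns."""
    rows, cols = len(image), len(image[0])
    return [[image[(r - dy) % rows][(c - dx) % cols] for c in range(cols)] for r in range(rows)]


def distance(a: Image, b: Image) -> float:
    """Sum of absolute differences between two equally sized arrays."""
    return sum(abs(p - q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))


def best_template(image: Image, templates: Dict[str, Image]) -> str:
    """Name of the template whose power spectrum is closest to the image's."""
    target = power_spectrum(image)
    return min(templates, key=lambda name: distance(target, power_spectrum(templates[name])))


def make(pixels: Iterable[Tuple[int, int]], size: int = 8) -> Image:
    """Build a size x size image with the listed (row, col) pixels set to 1."""
    image = [[0.0] * size for _ in range(size)]
    for r, c in pixels:
        image[r][c] = 1.0
    return image


square = make([(1, 1), (1, 2), (2, 1), (2, 2)])
bar = make([(1, 1), (2, 1), (3, 1), (4, 1)])
templates = {"square": square, "bar": bar}

moved = shift(square, 3, 4)              # same shape at a different position
print(best_template(moved, templates))   # prints "square"
```

A shift only multiplies each Fourier coefficient by a unit-magnitude phase factor, which cancels in $\varphi\varphi^{*}$, so the moved square still matches the square template without trying every position. The naive transform here is slow by design; it is only meant to mirror the equations.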

- 13. Case Study

  Problem Statement: To address the need for road safety at traffic signals by installing image detection expert systems.

  This problem is common worldwide. About 1214 road crashes occur in India daily, with two-wheelers accounting for 25% of total road crash deaths [15] [16]. A major cause is the jumping of red lights [15]. People who break the signal and are not caught tend to break it again. As such, there is a need for high-speed detection systems.

  Figure 1. Road traffic deaths in India 1970-2014 (Source: NCRB) [15].

  Figure 2. Cars and motorised two-wheelers (MTW) registered in India by year (Source: Transport Research Wing 2014) [15] [16].

- 14. This is where image recognition systems come in. They are low-power standalone systems. The knowledge base can be linked with a deep neural network (DNN). The outputs of the traffic signal and the embedded cameras are connected to the input of the DNN, and the DNN's output is connected to the inference engine [17]. The vehicle terminal fusion information is given below:

  Figure 3. Vehicle terminal fusion information [17].

  The knowledge base can be linked wirelessly, through a cloud interface, with a scanning module connected to the Aadhaar/RTO database. The module scans the database to find a match for the license plate number shown in the image, thereby identifying the owner of the vehicle. The offender can then be caught and handed over to the law. This will help reduce the accident rate.

  Creation of the rule base: The rule base is created using the forward chaining method [18] [19] [20].

  - R1: IF RED THEN STOP.
  - R2: IF GREEN THEN GO.
  - R3: IF YELLOW AND (STOP OR GO) THEN GO.
  - R4: IF RED AND STOP THEN NO PHOTO.

- 15. Rule base (continued):

  - R5: IF RED AND GO THEN PHOTO.
  - R6: IF GREEN AND STOP THEN PHOTO.
  - R7: IF GREEN AND GO THEN NO PHOTO.
  - R8: IF YELLOW THEN NO PHOTO.

  The block diagram becomes:

  Figure 4. Forward chained rule base.

  For a random data set of 1500 collected images, the precision rate, recorded every 5 minutes, is given below:

- 16. Figure 5. Absolute prediction error (before peak hour).

  Figure 6. Absolute prediction error (post peak hour).
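The rule base R1 to R8 of the case study can be exercised with a minimal forward-chaining loop. The sketch below rests on interpretive assumptions that are not in the report: the observed vehicle motion (`STOP`, `GO`) is kept separate from the action the signal calls for (written here as `EXPECT_STOP` and `EXPECT_GO`, both hypothetical names), and the OR in R3 is split into two rules, R3a and R3b.

```python
from typing import FrozenSet, List, Set, Tuple

Rule = Tuple[str, FrozenSet[str], str]  # (name, premises, conclusion)

RULES: List[Rule] = [
    ("R1", frozenset({"RED"}), "EXPECT_STOP"),
    ("R2", frozenset({"GREEN"}), "EXPECT_GO"),
    ("R3a", frozenset({"YELLOW", "STOP"}), "EXPECT_GO"),
    ("R3b", frozenset({"YELLOW", "GO"}), "EXPECT_GO"),
    ("R4", frozenset({"RED", "STOP"}), "NO_PHOTO"),
    ("R5", frozenset({"RED", "GO"}), "PHOTO"),
    ("R6", frozenset({"GREEN", "STOP"}), "PHOTO"),
    ("R7", frozenset({"GREEN", "GO"}), "NO_PHOTO"),
    ("R8", frozenset({"YELLOW"}), "NO_PHOTO"),
]


def forward_chain(
    initial_facts: Set[str], rules: List[Rule] = RULES
) -> Tuple[Set[str], List[str]]:
    """Fire every rule whose premises hold until no new fact can be derived."""
    facts = set(initial_facts)
    fired: List[str] = []
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            if name not in fired and premises <= facts:
                fired.append(name)
                if conclusion not in facts:
                    facts.add(conclusion)
                    changed = True
    return facts, fired


def take_photo(signal: str, vehicle: str) -> bool:
    """True when the derived facts include PHOTO for this signal and vehicle state."""
    facts, _ = forward_chain({signal, vehicle})
    return "PHOTO" in facts


for signal in ("RED", "GREEN", "YELLOW"):
    for vehicle in ("STOP", "GO"):
        _, fired = forward_chain({signal, vehicle})
        print(signal, vehicle, fired, "PHOTO" if take_photo(signal, vehicle) else "NO PHOTO")
```

In the proposed system the `signal` fact would come from the traffic signal output and the `vehicle` fact from the DNN's reading of the camera image; here both are supplied by hand.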

- 17. Conclusion

  Based on the results, this report concludes that an image detection system can decrease the precision error by a margin of 20%. This will help prevent future accidents and instill a sense of road safety in people. The DNN helps compensate for the weakness of expert systems by gradually adapting to every condition.

- 18. References

  1. Pierre Sermanet, David Eigen, Xiang Zhang, Michael Mathieu, Rob Fergus and Yann LeCun. “OverFeat: Integrated Recognition, Localization and Detection using Convolutional Neural Networks,” In: CoRR abs/1312.6229 (2013).
  2. J. Berant, A. Chou, R. Frostig and P. Liang. “Semantic Parsing on Freebase from Question-Answer Pairs,” In: Empirical Methods in Natural Language Processing (EMNLP), 2013.
  3. M. Everingham, J. Winn and A. Zisserman. “The Pascal Visual Object Classes Challenge: A Retrospective,” In: International Journal of Computer Vision 111.1 (Jan 2015), pp. 98-136.
  4. Fei-Fei Li, Kai Li, Olga Russakovsky, Jonathan Krause, Jia Deng and Alex Berg. ImageNet. http://image-net.org/
  5. Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg and Li Fei-Fei. “ImageNet Large Scale Visual Recognition Challenge,” In: International Journal of Computer Vision (IJCV) 115.3 (2015), pp. 211-252.
  6. Tsung-Yi Lin, Michael Maire, Serge J. Belongie, Lubomir D. Bourdev, Ross B. Girshick, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár and C. Lawrence Zitnick. “Microsoft COCO: Common Objects in Context,” In: CoRR abs/1405.0312 (2014).
  7. A. Barr and E. A. Feigenbaum, Eds., The Handbook of Artificial Intelligence. Los Altos, CA: William Kaufmann, 1981.
  8. M. Nagao, “Control structures in pattern analysis,” In: Pattern Recognition, Vol. 17, pp. 45-46, 1984.
  9. R. A. Brooks, “Symbolic Reasoning among 3D models and 2D images,” In: Artificial Intell., Vol. 17, pp. 285-348, 1981.
  10. T. Matsuyama and V. Hwang, “SIGMA: A framework for image understanding,” In: Proc. 9th Joint Conf. Artificial Intell., pp. 908-915, 1985.
  11. C. R. German, M. V. Jakuba, J. C. Kimbley, J. Partan, S. Suman, A. Belani, D. R. Yoerger, “A long term vision for long-range ship-free deep ocean operations: Persistent presence through coordination of Autonomous Surface Underwater Vehicles,” IEEE/OES Autonomous Underwater Vehicles, pp. 1-7, 2012.

- 19. References (continued)

  12. Joseph, Joby; Kamra, Kanval; Singh, K; Pillai, P K C, “Real-time image processing using selective erasure in photorefractive two wave mixing,” Applied Optics, Vol. 31, Issue 23, pp. 4769-4772, 1992.
  13. Riasati, Vahid R; Mustafa A G, “Projection-slice synthetic discriminant functions for optical pattern recognition,” Applied Optics, Vol. 36, Issue 14, pp. 3022-3034, 1997.
  14. Bartkiewicz, S; Sikorski, P; Miniewicz, A, “Optical image polymer structure,” Optics Letters, Vol. 23, Issue 22, pp. 1769-1771, 1998.
  15. National Crime Records Bureau, Ministry of Road Transport & Highway, Law Commission of India, Global status report on road safety, 2016.
  16. Dinesh Mohan, Geeta Tiwari, Kavi Bhalla, “Road Safety in India Status Report,” TRIPP, IIT-Delhi.
  17. Zhang Li, Lu Fei, Zhao Yongyi, “Based on swarm optimization-Neural Network integration algorithm in Internet Vehicle application”.
  18. Namarta Kapoor, Nischay Bahl, “Comparative study of forward backward chaining in Artificial Intelligence,” International Journal of Engineering and Computer Science, ISSN: 2319-7242.
  19. Griffin N & Lewis F (1998) “A Rule-Based Inference Engine which is optimal and VLSI implementable,” IEEE International Workshop on Tools for AI Architectures, Languages and Algorithms, pp. 246-251.
  20. Marek V, Nerode A & Remmel B (1994) “A context for belief revision: forward chaining-normal nonmonotonic rule systems,” Annals of Pure and Applied Logic 67, pp. 269-323.
