Image Similarity Detection at Scale Using LSH and Tensorflow with Andrey Gusev

- 2. Image Similarity Detection Andrey Gusev June 6, 2018 Using LSH and Tensorflow

- 3. Help you discover and do what you love.

- 5. 1 2 3 4 5 Agenda Neardup, clustering and LSH Candidate generation Deep dive Candidate selection TF on Spark

- 6. Neardup

- 8. Not Neardup

- 10. Clustering

- 11. Not An Equivalence Class Formulation For each image find a canonical image which represents an equivalence class. Problem Neardup is not an equivalence relation because neardup relation is not a transitive relation. It means we can not find a perfect partition such that all images within a cluster are closer to each other than to the other clusters.

- 12. Incremental approximate K-Cut Incrementally: 1. Generate candidates via batch LSH search 2. Select candidates via a TF model 3. Take a transitive closure over selected candidates 4. Pass over clusters and greedily select sub-clusters (K-Cut).

- 13. LSH

- 14. Embeddings and LSH - Visual Embeddings are high-dimensional vector representations of entities (in our case images) which capture semantic similarity. - Produced via Neural Networks like VGG16, Inception, etc. - Locality-sensitive hashing or LSH is a modern technique used to reduce dimensionality of high-dimensional data while preserving pairwise distances between individual points.

- 15. LSH: Locality Sensitive Hashing - Pick random projection vectors (black) - For each embeddings vector determine on which side of the hyperplane the embeddings vector lands - On the same side: set bit to 1 - On different side: set bit to 0 Result 1: <1 1 0> Result 2: <1 0 1> 1 1 0 1 0 1

- 16. LSH terms Pick optimal number of terms and bits per term - 1001110001011000 -> [00]1001 - [01]1100 - [10]0101 - [11]1000 - [x] → a term index

- 18. Neardup Candidate Generation - Input Data: RDD[(ImgId, List[LSHTerm])] // billions - Goal: RDD[(ImgId, TopK[(ImgId, Overlap))] Nearest Neighbor (KNN) problem formulation

- 19. Neardup Candidate Generation Given a set of documents each described by LSH terms, example: A → (1,2,3) B → (1,3,10) C → (2,10) And more generally: Di → [tj ] Where each Di is a document and [tj ] is a list of LSH terms (assume each is a 4 byte integer) Results: A → (B,2), (C,1) B → (A,2), (C,1) C → (A,1), (B,1)

- 20. Spark Candidate Generation 1. Input RDD[(ImgId, List[LSHTerm])] ← both index and query sets 2. flatMap, groupBy input into RDD[(LSHTerm, PostingList)] ← an inverted index 3. flatMap, groupBy into RDD[(LSHTerm, PostingList)] ← a query list 4. Join (2) and (3), flatMap over queries posting list, and groupBy query ImgId; RDD[(ImgId, List[PostingList])] ← search results by query. 5. Merge List[List[ImgId]] into TopK(ImgId, Overlap) counting number of times each ImgId is seen → RDD[ImgId, TopK[(ImgId, Overlap)]. * PostingList = List[ImgId]

- 21. Orders of magnitude too slow.

- 22. Deep Dive

- 23. def mapDocToInt(termIndexRaw: RDD[(String, List[TermId])]): RDD[(String, DocId)] = { // ensure that mapping between string and id is stable by sorting // this allows attempts to re-use partial stage completions termIndexRaw.keys.distinct().sortBy(x => x).zipWithIndex() } val stringArray = (for (ind <- 0 to 1000) yield randomString(32)).toArray val intArray = (for (ind <- 0 to 1000) yield ind).toArray * https://www.javamex.com/classmexer/ Dictionary encoding 108128 Bytes* 4024 Bytes* 25x

- 24. Variable Byte Encoding - One bit of each byte is a continuation bit; overhead - int → byte (best case) - 32 char string up to 25x4 = 100x memory reduction https://nlp.stanford.edu/IR-book/html/htmledition/variable-byte-codes-1.html

- 25. Inverted Index Partitioning Inverted index is skewed /** * Build partitioned inverted index by taking module of docId into partition. */ def buildPartitionedInvertedIndex(flatTermIndexAndFreq: RDD[(TermId, (DocId, TermFreq))]): RDD[((TermId, TermPartition), Iterable[DocId])] = { flatTermIndexAndFreq.map { case (termId, (docId, _)) => // partition documents within the same term to improve balance // and reduce the posting list length ((termId, (Math.abs(docId) % TERM_PARTITIONING).toByte), docId) }.groupByKey() }

- 26. Packing (Int, Byte) => Long Before: Unsorted: 128.77 MB in 549ms Sort+Limit: 4.41 KB in 7511ms After: Unsorted: 38.83 MB in 219ms Sort+Limit: 4.41 KB in 467ms def packDocIdAndByteIntoLong(docId: DocId, docFreq: DocFreq): Long = { (docFreq.toLong << 32) | (docId & 0xffffffffL) } def unpackDocIdAndByteFromLong(packed: Long): (DocId, DocFreq) = { (packed.toInt, (packed >> 32).toByte) }

- 27. Slicing Split query set into slices to reduce spill and size for “widest” portion of the computation. Union at the end.

- 28. Additional Optimizations - Cost based optimizer - significant improvements to runtime can be realized by analyzing input data sets and setting performance parameters automatically. - Counting - jaccard overlap counting is done via low level, high performance collections. - Off heaping serialization when possible (spark.kryo.unsafe).

- 29. Generic Batch LSH Search - Can be applied generically to KNN, embedding agnostic. - Can work on arbitrary large query set via slicing.

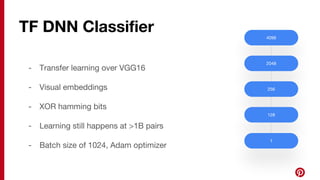

- 31. TF DNN Classifier - Transfer learning over VGG16 - Visual embeddings - XOR hamming bits - Learning still happens at >1B pairs - Batch size of 1024, Adam optimizer 4096 2048 256 128 1

- 32. Vectorization: mapPartitions + grouped - During training and inference vectorization reduces overhead. - Spark mapPartitions + grouped allows for large batches and controlling the size. Works well for inference. - 2ms/prediction on c3.8xl CPUs with network of 10MM parameters . input.mapPartitions { partition: Iterator[(ImgInfo, ImgInfo)] => // break down large partitions into groups and score per group partition.grouped(BATCH_SIZE).flatMap { group: Seq[(ImgInfo, ImgInfo)] => // create tensors and score as features: Array[Array[Float]] --> Tensor.create(features) } }

- 33. One TF Session per JVM - Reduce model loading overhead, load once per JVM; thread-safe. object TensorflowModel { lazy val model: Session = { SavedModelBundle.load(...).session() } }

- 34. Summary - Candidate Generation uses Batch LSH Search over terms from visual embeddings. - Batch LSH scales to billions of objects in the index and is embedding agnostic. - Candidate Selection uses a TF classifier over raw visual embeddings. - Two-pass transitive closure to cluster results.

- 35. Thanks!

![LSH terms

Pick optimal number of terms and bits per term

- 1001110001011000 -> [00]1001 - [01]1100 - [10]0101 - [11]1000

- [x] → a term index](https://image.slidesharecdn.com/1andreygusev-180613194136/85/Image-Similarity-Detection-at-Scale-Using-LSH-and-Tensorflow-with-Andrey-Gusev-16-320.jpg)

![Neardup Candidate Generation

- Input Data:

RDD[(ImgId, List[LSHTerm])] // billions

- Goal:

RDD[(ImgId, TopK[(ImgId, Overlap))]

Nearest Neighbor (KNN) problem formulation](https://image.slidesharecdn.com/1andreygusev-180613194136/85/Image-Similarity-Detection-at-Scale-Using-LSH-and-Tensorflow-with-Andrey-Gusev-18-320.jpg)

![Neardup Candidate Generation

Given a set of documents each described by LSH terms, example:

A → (1,2,3)

B → (1,3,10)

C → (2,10)

And more generally:

Di

→ [tj

]

Where each Di

is a document and [tj

] is a list of LSH terms (assume each is a 4 byte integer)

Results:

A → (B,2), (C,1)

B → (A,2), (C,1)

C → (A,1), (B,1)](https://image.slidesharecdn.com/1andreygusev-180613194136/85/Image-Similarity-Detection-at-Scale-Using-LSH-and-Tensorflow-with-Andrey-Gusev-19-320.jpg)

![Spark Candidate Generation

1. Input RDD[(ImgId, List[LSHTerm])] ← both index and query sets

2. flatMap, groupBy input into RDD[(LSHTerm, PostingList)] ← an inverted index

3. flatMap, groupBy into RDD[(LSHTerm, PostingList)] ← a query list

4. Join (2) and (3), flatMap over queries posting list, and groupBy query ImgId;

RDD[(ImgId, List[PostingList])] ← search results by query.

5. Merge List[List[ImgId]] into TopK(ImgId, Overlap) counting number of times each ImgId is

seen → RDD[ImgId, TopK[(ImgId, Overlap)].

* PostingList = List[ImgId]](https://image.slidesharecdn.com/1andreygusev-180613194136/85/Image-Similarity-Detection-at-Scale-Using-LSH-and-Tensorflow-with-Andrey-Gusev-20-320.jpg)

![def mapDocToInt(termIndexRaw: RDD[(String, List[TermId])]): RDD[(String, DocId)] = {

// ensure that mapping between string and id is stable by sorting

// this allows attempts to re-use partial stage completions

termIndexRaw.keys.distinct().sortBy(x => x).zipWithIndex()

}

val stringArray = (for (ind <- 0 to 1000) yield randomString(32)).toArray

val intArray = (for (ind <- 0 to 1000) yield ind).toArray

* https://www.javamex.com/classmexer/

Dictionary encoding

108128 Bytes*

4024 Bytes*

25x](https://image.slidesharecdn.com/1andreygusev-180613194136/85/Image-Similarity-Detection-at-Scale-Using-LSH-and-Tensorflow-with-Andrey-Gusev-23-320.jpg)

![Inverted Index Partitioning

Inverted index is skewed

/**

* Build partitioned inverted index by taking module of docId into partition.

*/

def buildPartitionedInvertedIndex(flatTermIndexAndFreq: RDD[(TermId, (DocId, TermFreq))]):

RDD[((TermId, TermPartition), Iterable[DocId])] = {

flatTermIndexAndFreq.map { case (termId, (docId, _)) =>

// partition documents within the same term to improve balance

// and reduce the posting list length

((termId, (Math.abs(docId) % TERM_PARTITIONING).toByte), docId)

}.groupByKey()

}](https://image.slidesharecdn.com/1andreygusev-180613194136/85/Image-Similarity-Detection-at-Scale-Using-LSH-and-Tensorflow-with-Andrey-Gusev-25-320.jpg)

![Vectorization: mapPartitions + grouped

- During training and inference vectorization reduces overhead.

- Spark mapPartitions + grouped allows for large batches and controlling

the size. Works well for inference.

- 2ms/prediction on c3.8xl CPUs with network of 10MM parameters .

input.mapPartitions { partition: Iterator[(ImgInfo, ImgInfo)] =>

// break down large partitions into groups and score per group

partition.grouped(BATCH_SIZE).flatMap { group: Seq[(ImgInfo, ImgInfo)] =>

// create tensors and score as features: Array[Array[Float]] --> Tensor.create(features)

}

}](https://image.slidesharecdn.com/1andreygusev-180613194136/85/Image-Similarity-Detection-at-Scale-Using-LSH-and-Tensorflow-with-Andrey-Gusev-32-320.jpg)