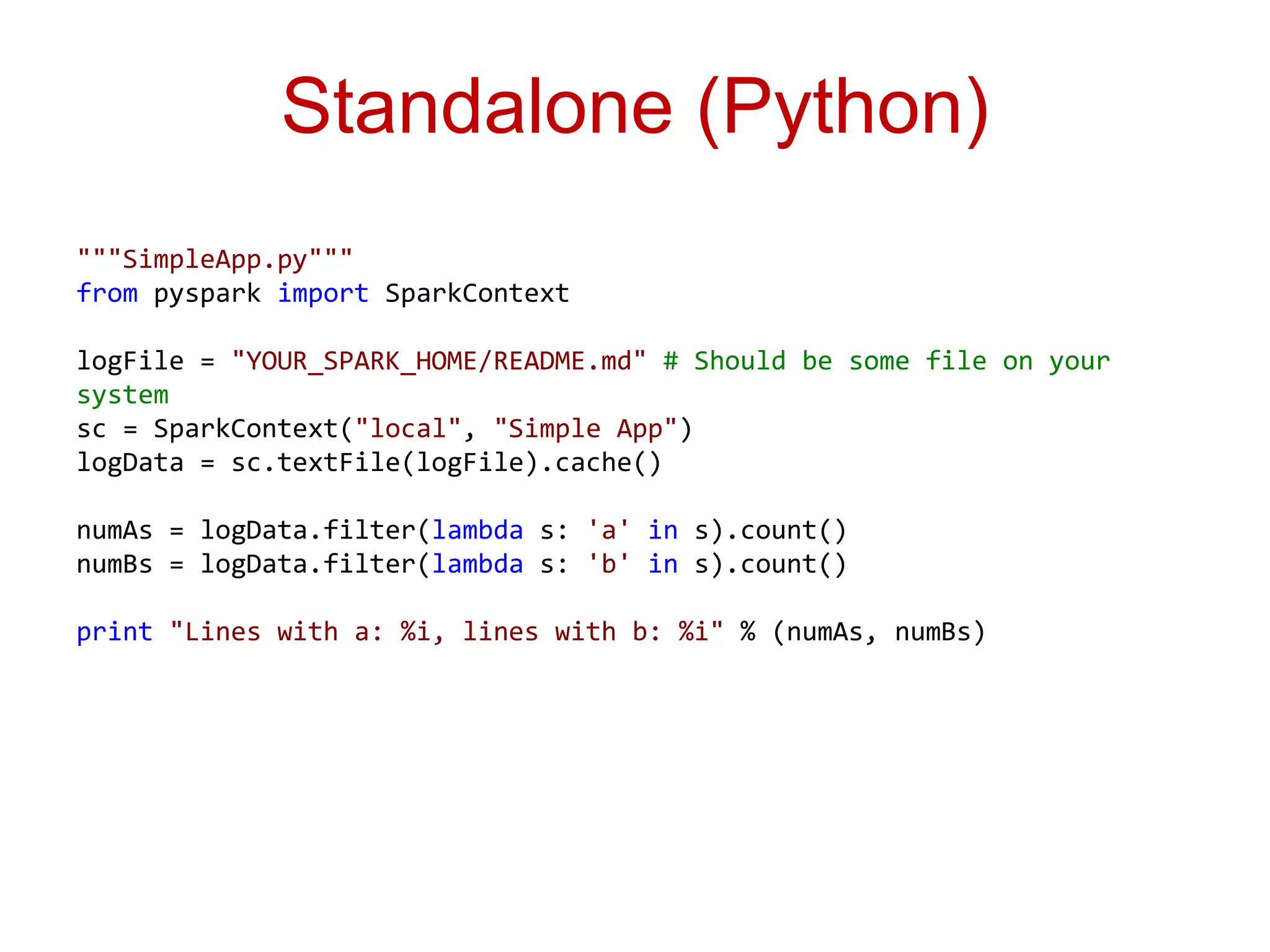

This document summarizes a September 2014 Meetup on Apache Spark, organized by the Big Data Hyderabad group. It covers Spark's advantages for in-memory data processing, especially for machine learning workloads. It also introduces Spark's architecture and deployment, and walks through basic programming operations in Scala, Python, and Java. The presentation gives practical guidance on using Spark, with code snippets and instructions for submitting jobs.

![Spark Shell

./bin/spark-shell --master local[2]

The --master option specifies the master URL for a distributed cluster. Use local to run locally with one thread, or local[N] to run locally with N threads. You should start by using local for testing.

./bin/run-example SparkPi 10

This runs the bundled SparkPi example, which estimates Pi by random sampling. The argument 10 is the number of slices (partitions) the work is split into.](https://image.slidesharecdn.com/introductiontoapachespark-140929023201-phpapp02/75/Introduction-to-Apache-Spark-16-2048.jpg)
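
SparkPi uses Monte Carlo estimation. It samples random points in the square [-1, 1] x [-1, 1] and counts how many fall inside the unit circle. That fraction approaches Pi / 4. The Spark-free sketch below mirrors the shape of the job. Each slice counts its own hits, which is the map side, and the per-slice counts are then summed, which is the reduce side. The figure of 100,000 samples per slice follows the SparkPi example source, but treat it as an assumption. The object name `MonteCarloPi` and the per-slice seeding are hypothetical choices made for illustration.

```scala
import scala.util.Random

object MonteCarloPi {
  // Assumption: 100,000 samples per slice, following the SparkPi example source.
  val SamplesPerSlice = 100000

  // Estimate Pi by sampling points uniformly in [-1, 1] x [-1, 1]:
  // the fraction that lands inside the unit circle approaches Pi / 4.
  def estimate(slices: Int, seed: Long): Double = {
    require(slices > 0, "slices must be positive")
    // "Map" step: each slice counts its own hits with an independent generator.
    val hitsPerSlice: Seq[Long] = (0 until slices).map { slice =>
      val rng = new Random(seed + slice) // hypothetical seeding scheme
      var hits = 0L
      var i = 0
      while (i < SamplesPerSlice) {
        val x = rng.nextDouble() * 2 - 1
        val y = rng.nextDouble() * 2 - 1
        if (x * x + y * y < 1) hits += 1
        i += 1
      }
      hits
    }
    // "Reduce" step: sum the per-slice hits and scale by 4 / total samples.
    4.0 * hitsPerSlice.sum / (SamplesPerSlice.toLong * slices)
  }
}
```

With slices = 10, the sketch draws 1,000,000 points. More slices add samples and tighten the estimate. In Spark they would also spread the work over more tasks.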

![Basic operations…

scala> val textFile = sc.textFile("README.md")

textFile: spark.RDD[String] = spark.MappedRDD@2ee9b6e3

scala> textFile.count() // Number of items in this RDD

res0: Long = 126

scala> textFile.first() // First item in this RDD

res1: String = # Apache Spark

scala> val linesWithSpark = textFile.filter(line => line.contains("Spark"))

linesWithSpark: spark.RDD[String] = spark.FilteredRDD@7dd4af09

Simpler, as a one-liner:

scala> textFile.filter(line => line.contains("Spark")).count() // How many lines contain "Spark"?

res3: Long = 15](https://image.slidesharecdn.com/introductiontoapachespark-140929023201-phpapp02/75/Introduction-to-Apache-Spark-17-2048.jpg)
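
In Spark, `filter` is a lazy transformation that returns a new RDD. `count()` and `first()` are actions that trigger the actual computation. The sketch below shows what each call computes, using plain Scala collections on a small in-memory list instead of README.md. Unlike RDDs, these collections are evaluated eagerly. The object `BasicOps` and its method names are hypothetical.

```scala
object BasicOps {
  // Analogue of textFile.count(): the number of lines.
  def countLines(lines: Seq[String]): Long = lines.size.toLong

  // Analogue of textFile.first(); returns None instead of throwing on empty input.
  def firstLine(lines: Seq[String]): Option[String] = lines.headOption

  // Analogue of textFile.filter(line => line.contains(word)).count().
  def countContaining(lines: Seq[String], word: String): Long =
    lines.count(line => line.contains(word)).toLong
}
```

For example, `BasicOps.countContaining(Seq("# Apache Spark", "Spark is fast", "sbt"), "Spark")` counts two matching lines.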

![Map - Reduce

scala> textFile.map(line => line.split(" ").size).reduce((a, b) => if (a > b) a else b)

res4: Int = 15

scala> import java.lang.Math

scala> textFile.map(line => line.split(" ").size).reduce((a, b) => Math.max(a, b))

res5: Int = 15

scala> val wordCounts = textFile.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey((a, b) => a + b)

wordCounts: spark.RDD[(String, Int)] = spark.ShuffledAggregatedRDD@71f027b8

scala> wordCounts.collect()](https://image.slidesharecdn.com/introductiontoapachespark-140929023201-phpapp02/75/Introduction-to-Apache-Spark-18-2048.jpg)
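
The two map-reduce patterns above can be traced on plain Scala collections, with no cluster involved. The first maps each line to its token count and reduces with a maximum. The second flattens lines into words, pairs each word with 1, and sums the pairs per key, as `reduceByKey` does. Here a `foldLeft` into a `Map` stands in for the shuffle-based `reduceByKey`; it is not how Spark implements it. The object `WordStats` is hypothetical.

```scala
object WordStats {
  // Analogue of textFile.map(line => line.split(" ").size).reduce((a, b) => Math.max(a, b)):
  // the largest number of space-separated tokens on any line.
  // Throws on empty input, as reduce on an empty RDD does.
  def maxWordsPerLine(lines: Seq[String]): Int =
    lines.map(line => line.split(" ").length).reduce((a, b) => math.max(a, b))

  // Analogue of flatMap(split) -> map(word => (word, 1)) -> reduceByKey(_ + _):
  // fold the (word, 1) pairs into a Map of running totals per word.
  def wordCounts(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split(" "))
      .map(word => (word, 1))
      .foldLeft(Map.empty[String, Int]) { case (totals, (word, n)) =>
        totals.updated(word, totals.getOrElse(word, 0) + n)
      }
}
```

Note that `split(" ")` produces empty tokens when words are separated by more than one space. The slides' version behaves the same way, so those empty strings are counted as words too.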

![With Caching…

scala> linesWithSpark.cache()

res7: spark.RDD[String] = spark.FilteredRDD@17e51082

scala> linesWithSpark.count()

res8: Long = 15

scala> linesWithSpark.count()

res9: Long = 15](https://image.slidesharecdn.com/introductiontoapachespark-140929023201-phpapp02/75/Introduction-to-Apache-Spark-19-2048.jpg)
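
`cache()` only marks `linesWithSpark` for reuse. The first `count()` computes it and keeps its partitions in memory, and the second `count()` reads them from memory instead of rereading and refiltering README.md. Both counts return 15, so caching changes the cost, not the result. The class below is a hypothetical plain-Scala analogy that tracks how often the source is evaluated. `LazyDataset` is not a Spark API, and real Spark caching can also evict partitions and recompute them from lineage.

```scala
// Hypothetical analogy for RDD caching; not Spark's implementation.
final class LazyDataset[A](source: () => Seq[A]) {
  private var cacheRequested = false
  private var stored: Option[Seq[A]] = None
  private var computations = 0

  // Like rdd.cache(): mark the dataset for reuse; nothing is computed yet.
  def cache(): LazyDataset[A] = { cacheRequested = true; this }

  // Number of times the source has been evaluated so far.
  def timesComputed: Int = computations

  // Evaluate the source unless a cached copy exists; keep it only if cache() was called.
  private def materialize(): Seq[A] = stored.getOrElse {
    computations += 1
    val data = source()
    if (cacheRequested) stored = Some(data)
    data
  }

  // Like rdd.count(): an action that forces evaluation.
  def count(): Long = materialize().size.toLong
}
```

Without `cache()`, every action re-evaluates the source, which in Spark means rereading the file and reapplying the filter.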

![Standalone (Scala)

/* SimpleApp.scala */

import org.apache.spark.SparkContext

import org.apache.spark.SparkContext._

import org.apache.spark.SparkConf

object SimpleApp {

def main(args: Array[String]): Unit = {

val logFile = "YOUR_SPARK_HOME/README.md" // Should be some file on your system

val conf = new SparkConf().setAppName("Simple Application").setMaster("local")

val sc = new SparkContext(conf)

val logData = sc.textFile(logFile, 2).cache()

val numAs = logData.filter(line => line.contains("a")).count()

val numBs = logData.filter(line => line.contains("b")).count()

println("Lines with a: %s, Lines with b: %s".format(numAs, numBs))

}

}](https://image.slidesharecdn.com/introductiontoapachespark-140929023201-phpapp02/75/Introduction-to-Apache-Spark-21-2048.jpg)
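
In `sc.textFile(logFile, 2)`, the second argument is the minimum number of partitions. An RDD `count()` runs one task per partition, and the driver sums the partial counts. The sketch below imitates that with plain collections. Splitting the lines into contiguous, roughly equal chunks is an assumption made for illustration; Spark derives `textFile` partitions from the input file's splits. The object `PartitionedCount` is hypothetical.

```scala
object PartitionedCount {
  // Split data into at most numPartitions contiguous chunks (a stand-in for RDD partitions).
  def partition[A](data: Seq[A], numPartitions: Int): Seq[Seq[A]] = {
    require(numPartitions > 0, "numPartitions must be positive")
    if (data.isEmpty) Seq.empty
    else {
      val chunkSize = (data.size + numPartitions - 1) / numPartitions // ceiling division
      data.grouped(chunkSize).toList
    }
  }

  // Count matching lines in each partition, then sum the partial counts:
  // the same shape of computation as count() over a filtered, partitioned RDD.
  def countMatching(lines: Seq[String], numPartitions: Int)(p: String => Boolean): Long =
    partition(lines, numPartitions).map(part => part.count(p).toLong).sum
}
```

The result does not depend on the number of partitions. In Spark, more partitions only allow more tasks to run in parallel.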

![Standalone (Java)

/* SimpleApp.java */

import org.apache.spark.api.java.*;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.function.Function;

public class SimpleApp {

public static void main(String[] args) {

String logFile = "YOUR_SPARK_HOME/README.md"; // Should be some file on your system

SparkConf conf = new SparkConf().setAppName("Simple Application").setMaster("local");

JavaSparkContext sc = new JavaSparkContext(conf);

JavaRDD<String> logData = sc.textFile(logFile).cache();

long numAs = logData.filter(new Function<String, Boolean>() {

public Boolean call(String s) { return s.contains("a"); }

}).count();

long numBs = logData.filter(new Function<String, Boolean>() {

public Boolean call(String s) { return s.contains("b"); }

}).count();

System.out.println("Lines with a: " + numAs + ", lines with b: " + numBs);

}

}](https://image.slidesharecdn.com/introductiontoapachespark-140929023201-phpapp02/75/Introduction-to-Apache-Spark-22-2048.jpg)

![Job Submission

$SPARK_HOME/bin/spark-submit --class "SimpleApp" --master local[4] target/scala-2.10/simple-project_2.10-1.0.jar](https://image.slidesharecdn.com/introductiontoapachespark-140929023201-phpapp02/75/Introduction-to-Apache-Spark-24-2048.jpg)
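
The jar path passed to spark-submit follows sbt's naming convention. That convention is target/scala-<Scala binary version>/<normalized project name>_<Scala binary version>-<version>.jar, which suggests the Scala application was built with `sbt package`. A minimal build definition that would produce that file name might look like the sketch below. The file name simple.sbt, Scala 2.10.4, and Spark 1.1.0 are assumptions; the slides do not state them.

```scala
// simple.sbt: hypothetical build definition for the SimpleApp example.
// "Simple Project" is normalized by sbt to "simple-project" in the jar name.
name := "Simple Project"

version := "1.0"

// Assumption: any Scala 2.10.x release matches the scala-2.10 directory in the jar path.
scalaVersion := "2.10.4"

// Assumption: Spark 1.1.0, a release current in September 2014.
libraryDependencies += "org.apache.spark" %% "spark-core" % "1.1.0"
```

Running `sbt package` in the project directory would then write target/scala-2.10/simple-project_2.10-1.0.jar.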