Java util concurrent

- 1. JDK5.0 -- java.util.concurrent Roger Xia 2008-08

- 2. Concurrent Programming The built-in concurrency primitives -- wait(), notify(), synchronized, and volatile -- are hard to use correctly, are specified at too low a level for most applications, and can lead to poor performance if used incorrectly. Too much wheel-reinventing! Let’s think about: -- When do we need concurrency? -- How do we do concurrent programming? -- What concurrency strategies are around us? ACID, transactions, CAS -- Locks, blocking, asynchronous task scheduling

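- 3. Questions? What if you want to try to acquire a lock, but time out if you do not get it within a given period? What if you want to abandon the attempt to acquire a lock when the thread is interrupted? What if you want a lock that at most N threads can hold? What if you want to support several locking modes (for example, concurrent reads with exclusive writes)? Or acquire a lock in one place (for example, one method) and release it in another? The built-in locking mechanism does not directly support these cases, but they can be built on top of the basic concurrency primitives that the Java language provides.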
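
The first three questions can be sketched with the classes that later slides introduce. This is a minimal illustration, not the deck's own code: the class `LockQuestions`, its method names, and the limit of three holders are assumptions made for the example (lambda syntax assumes Java 8 or later).

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical helper class; names are illustrative only.
class LockQuestions {
    static final ReentrantLock lock = new ReentrantLock();
    // A "lock" that up to three threads may hold at once.
    static final Semaphore atMostThree = new Semaphore(3);

    // Timed acquisition: returns false instead of waiting forever.
    static boolean runWithTimeout(Runnable action, long timeoutMillis)
            throws InterruptedException {
        if (!lock.tryLock(timeoutMillis, TimeUnit.MILLISECONDS)) {
            return false;
        }
        try {
            action.run();
            return true;
        } finally {
            lock.unlock();
        }
    }

    // Interruptible acquisition: an interrupt while waiting aborts the attempt.
    static void runInterruptibly(Runnable action) throws InterruptedException {
        lock.lockInterruptibly();
        try {
            action.run();
        } finally {
            lock.unlock();
        }
    }

    // At most three threads can be inside action at the same time.
    static void runLimited(Runnable action) throws InterruptedException {
        atMostThree.acquire();
        try {
            action.run();
        } finally {
            atMostThree.release();
        }
    }

    // Holds the lock in a second thread and reports whether a timed attempt succeeds.
    static boolean timedAttemptWhileHeld() throws InterruptedException {
        CountDownLatch held = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        Thread holder = new Thread(() -> {
            lock.lock();
            try {
                held.countDown();
                release.await();
            } catch (InterruptedException ignored) {
                // fall through and release the lock
            } finally {
                lock.unlock();
            }
        });
        holder.start();
        held.await();
        boolean acquired = runWithTimeout(() -> { }, 50);
        release.countDown();
        holder.join();
        return acquired;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("free lock acquired: " + runWithTimeout(() -> { }, 50));
        System.out.println("held lock acquired: " + timedAttemptWhileHeld());
    }
}
```

The multi-mode case (concurrent reads with exclusive writes) is covered by ReadWriteLock on slide 38.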

- 4. Asynchronous task scheduling new Thread( new Runnable() { ... } ).start(); Two drawbacks: (1) Creating a new thread for every short task is expensive, because the JVM has to allocate and then release resources each time a thread is created and destroyed. (2) Nothing prevents resource exhaustion when too many requests arrive at the same time. Solution: a thread pool. A pool must schedule tasks effectively, deal with dead threads, and limit the number of queued tasks to avoid overloading the server. It also needs a policy for overflow: -- discard the new task or the oldest task -- block the submitting thread until the queue has room

- 5. util.concurrent Doug Lea's util.concurrent package went through JSR-166 and shipped in Tiger as java.util.concurrent. A high-quality, widely used, open-source concurrency package. Exclusive locks, semaphores, blocking, thread-safe collections

- 7. Threads Pools and Task Scheduling

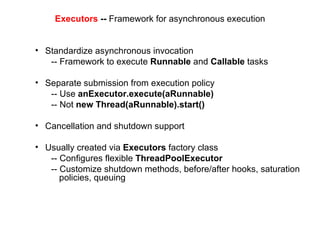

- 8. Executors -- Framework for asynchronous execution Standardize asynchronous invocation -- Framework to execute Runnable and Callable tasks Separate submission from execution policy -- Use anExecutor.execute(aRunnable) -- Not new Thread(aRunnable).start() Cancellation and shutdown support Usually created via Executors factory class -- Configures flexible ThreadPoolExecutor -- Customize shutdown methods, before/after hooks, saturation policies, queuing

- 9. Executor -- Decouple submission from execution policy public interface Executor { void execute(Runnable command); } Code which submits a task doesn’t have to know in what thread the task will run Could run in the calling thread, in a thread pool, in a single background thread (or even in another JVM!) Executor implementation determines execution policy Execution policy controls resource utilization, overload behavior, thread usage, logging, security, etc. Calling code need not know the execution policy
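
To illustrate how the Executor implementation, not the caller, decides the execution policy, here is a small sketch with two policies: run in the calling thread, and run in a new thread per task. The helper `ExecutorPolicyDemo.threadUsedBy` is a hypothetical name added for the example.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executor;

// Policy 1: run the task synchronously in the caller's thread.
class DirectExecutor implements Executor {
    public void execute(Runnable command) {
        command.run();
    }
}

// Policy 2: spawn a new thread for every task.
class ThreadPerTaskExecutor implements Executor {
    public void execute(Runnable command) {
        new Thread(command).start();
    }
}

// Hypothetical demo class: the submitting code is identical for both policies.
class ExecutorPolicyDemo {
    // Returns the name of the thread that actually ran the task.
    static String threadUsedBy(Executor executor) throws InterruptedException {
        final String[] name = new String[1];
        final CountDownLatch finished = new CountDownLatch(1);
        executor.execute(() -> {
            name[0] = Thread.currentThread().getName();
            finished.countDown();
        });
        finished.await(); // countDown() happens-before await() returning
        return name[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("direct:          " + threadUsedBy(new DirectExecutor()));
        System.out.println("thread-per-task: " + threadUsedBy(new ThreadPerTaskExecutor()));
    }
}
```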

- 10. ExecutorService ExecutorService adds lifecycle management. ExecutorService supports both graceful and immediate shutdown. public interface ExecutorService extends Executor { void shutdown(); List<Runnable> shutdownNow(); boolean isShutdown(); boolean isTerminated(); boolean awaitTermination(long timeout, TimeUnit unit) throws InterruptedException; /* other convenience methods for submitting tasks */ } Many useful utility methods too
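
A common way to combine the graceful and immediate shutdown methods is a two-phase shutdown. The sketch below assumes a hypothetical helper class `GracefulShutdown`; the timeout values are arbitrary.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper; names and timeouts are illustrative.
class GracefulShutdown {
    // Phase 1: stop accepting tasks and wait. Phase 2: cancel what is left.
    static boolean shutdownAndAwait(ExecutorService pool, long timeout, TimeUnit unit) {
        pool.shutdown(); // previously submitted tasks still run
        try {
            if (pool.awaitTermination(timeout, unit)) {
                return true;
            }
            pool.shutdownNow(); // interrupt running tasks, drop queued ones
            return pool.awaitTermination(timeout, unit);
        } catch (InterruptedException e) {
            pool.shutdownNow();
            Thread.currentThread().interrupt(); // preserve interrupt status
            return false;
        }
    }

    // Submits some tasks, shuts down, and returns how many completed (-1 on timeout).
    static int runAndShutDown(int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        AtomicInteger completed = new AtomicInteger();
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> completed.incrementAndGet());
        }
        boolean terminated = shutdownAndAwait(pool, 5, TimeUnit.SECONDS);
        return terminated ? completed.get() : -1;
    }

    public static void main(String[] args) {
        System.out.println("completed tasks: " + runAndShutDown(5));
    }
}
```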

- 11. Executors Example -- Web Server Poor resource management: class UnstableWebServer { public static void main(String[] args) throws IOException { ServerSocket socket = new ServerSocket(80); while (true) { final Socket connection = socket.accept(); Runnable r = new Runnable() { public void run() { handleRequest(connection); } }; /* Don't do this! */ new Thread(r).start(); } } } Better resource management: class BetterWebServer { static final Executor pool = Executors.newFixedThreadPool(7); public static void main(String[] args) throws IOException { ServerSocket socket = new ServerSocket(80); while (true) { final Socket connection = socket.accept(); Runnable r = new Runnable() { public void run() { handleRequest(connection); } }; pool.execute(r); } } }

- 12. Thread pool package concurrent; import java.util.concurrent.ExecutorService; import java.util.concurrent.Executors; public class TestThreadPool { public static void main(String args[]) throws InterruptedException { /* only two threads */ ExecutorService exec = Executors.newFixedThreadPool(2); for (int index = 0; index < 100; index++) { Runnable run = new Runnable() { public void run() { long time = (long) (Math.random() * 1000); System.out.println("Sleeping " + time + "ms"); try { Thread.sleep(time); } catch (InterruptedException e) { } } }; exec.execute(run); } /* must shut down */ exec.shutdown(); } } The thread pool size is 2, so how can it deal with 100 tasks? Will execute() block the main thread in the for loop when no pool thread is free? No: execute(runnable) puts every Runnable into a LinkedBlockingQueue, where it waits until a worker thread is available. Use the Executors factory methods to construct the common kinds of thread pool: fixed, single, cached, and scheduled. Use ThreadPoolExecutor directly to construct a pool with a specific corePoolSize, maximumPoolSize, keepAliveTime, TimeUnit, and BlockingQueue.
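
As a sketch of constructing a ThreadPoolExecutor directly, the example below uses a bounded queue and a saturation policy so that overload pushes back on the submitter instead of growing the queue without limit. The class name `BoundedPoolDemo` and all sizes are assumptions chosen for illustration.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; pool sizes and queue capacity are arbitrary.
class BoundedPoolDemo {
    static ThreadPoolExecutor newBoundedPool() {
        return new ThreadPoolExecutor(
                2,                                   // corePoolSize
                4,                                   // maximumPoolSize
                30L, TimeUnit.SECONDS,               // keepAliveTime for extra threads
                new ArrayBlockingQueue<Runnable>(10), // bounded work queue
                // When the queue and pool are both full, the submitting
                // thread runs the task itself, which throttles submission.
                new ThreadPoolExecutor.CallerRunsPolicy());
    }

    // Submits n trivial tasks and returns how many actually ran.
    static int runTasks(int n) throws InterruptedException {
        ThreadPoolExecutor pool = newBoundedPool();
        AtomicInteger completed = new AtomicInteger();
        for (int i = 0; i < n; i++) {
            pool.execute(() -> completed.incrementAndGet());
        }
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return completed.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("tasks run: " + runTasks(100));
    }
}
```

Other built-in policies are AbortPolicy (throw RejectedExecutionException), DiscardPolicy (drop the new task), and DiscardOldestPolicy (drop the oldest queued task).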

- 13. ScheduledThreadPool package concurrent; import static java.util.concurrent.TimeUnit.SECONDS; import java.util.Date; import java.util.concurrent.Executors; import java.util.concurrent.ScheduledExecutorService; import java.util.concurrent.ScheduledFuture; public class TestScheduledThread { public static void main(String[] args) { final ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(2); final Runnable beeper = new Runnable() { int count = 0; public void run() { System.out.println(new Date() + " beep " + (++count)); } }; /* run after 1 second, then every 2 seconds */ final ScheduledFuture<?> beeperHandle = scheduler.scheduleAtFixedRate(beeper, 1, 2, SECONDS); /* run after 2 seconds, then wait 5 seconds after each run finishes before running again */ final ScheduledFuture<?> beeperHandle2 = scheduler.scheduleWithFixedDelay(beeper, 2, 5, SECONDS); /* after 30 seconds, cancel both tasks and shut down the scheduler; an application that runs 24 hours a day does not need to shut it down */ scheduler.schedule(new Runnable() { public void run() { beeperHandle.cancel(true); beeperHandle2.cancel(true); scheduler.shutdown(); } }, 30, SECONDS); } }

- 14. Future and Callable -- Representing asynchronous tasks interface ArchiveSearcher { String search(String target); } class App { ExecutorService executor = ... ArchiveSearcher searcher = ... void showSearch(final String target) throws InterruptedException { Future<String> future = executor.submit( new Callable<String>() { public String call() { return searcher.search(target); }}); displayOtherThings(); // do other things while searching try { displayText( future.get() ); // use future } catch (ExecutionException ex) { cleanup(); return; } } } Callable is functional analog of Runnable interface Callable<V> { V call() throws Exception; } Sometimes, you want to start your threads asynchronously and get the result of one of the threads when you need it. Future holds result of asynchronous call, normally a Callable The result can only be retrieved using method get() when the computation has completed, blocking if necessary until it is ready. cancel(boolean) isDone() isCancelled()

- 15. Futures Example -- Implementing a concurrent cache public class Cache<K, V> { ConcurrentMap<K, Future<V>> map = new ConcurrentHashMap<K, Future<V>>(); Executor executor = Executors.newFixedThreadPool(8); public V get(final K key) throws InterruptedException, ExecutionException { Future<V> f = map.get(key); if (f == null) { Callable<V> c = new Callable<V>() { public V call() { /* return value associated with key */ } }; FutureTask<V> task = new FutureTask<V>(c); Future<V> old = map.putIfAbsent(key, task); if (old == null) { f = task; executor.execute(task); } else { f = old; } } return f.get(); } }

- 16. ScheduledExecutorService -- Deferred and recurring tasks ScheduledExecutorService can be used to: schedule a Callable or Runnable to run once with a fixed delay after submission; schedule a Runnable to run periodically at a fixed rate; schedule a Runnable to run periodically with a fixed delay between executions. Submission returns a ScheduledFuture handle which can be used to cancel the task. Like java.util.Timer, but supports pooling

- 18. JMM http://gee.cs.oswego.edu/dl/cpj/jmm.html The Java memory model describes how threads in the Java programming language interact through memory. Because processor registers and caches are used to speed up memory access, the Java Language Specification (JLS) allows some memory operations not to be immediately visible to all other threads. synchronized and volatile guarantee that memory operations are consistent across threads. Synchronization provides not only atomicity but also visibility and ordering (happens-before)

- 19. Mutual exclusion and memory barriers The JVM uses memory barriers when it acquires or releases a monitor. (Memory barrier instructions directly control only the interaction of a CPU with its cache, with its write-buffer that holds stores waiting to be flushed to memory, and/or its buffer of waiting loads or speculatively executed instructions.) When a thread acquires a monitor, it uses a read barrier to invalidate variables cached in thread-local memory (processor cache or processor registers), which forces the processor to reread from main memory the variables used in the synchronized block. When the thread releases the monitor, it uses a write barrier to write all modified variables back to main memory.

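- 20. JMM cache-consistency model: "happens-before ordering" x = 0; y = 0; i = 0; j = 0; /* thread A */ y = 1; x = 1; /* thread B */ i = x; j = y; If threads A and B run with no synchronization, what values will i and j have? The answer is: undetermined (00, 01, 10, and 11 are all possible). No Java synchronization mechanism is used here, so the JMM guarantees neither ordering nor visibility. How does happens-before ordering avoid this? The ordering rules guarantee that: a. In program order, each action in a thread happens-before every action that comes later in that thread. b. An unlock of an object's monitor happens-before every subsequent lock of that same monitor. c. A write to a volatile variable happens-before every subsequent read of that variable. d. A call to Thread.start() happens-before every action in the started thread. e. All actions in a thread happen-before any other thread successfully returns from Thread.join() on that thread. Tools that Java and the JDK provide for establishing happens-before ordering: a. the synchronized keyword b. the volatile keyword c. final fields d. the java.util.concurrent.locks package (since JDK 1.5) e. the java.util.concurrent.atomic package (since JDK 1.5) ...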
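
Rules (a) and (c) combine into a common publication idiom: write ordinary data, then set a volatile flag; a reader that sees the flag is guaranteed to see the data. The sketch below is an illustration with hypothetical names (`VolatileFlag`, `publish`, `awaitPayload`), not code from the slides.

```java
// Hypothetical example class: safe publication through a volatile flag.
class VolatileFlag {
    private int payload;            // plain field, no synchronization of its own
    private volatile boolean ready; // volatile write/read creates the happens-before edge

    void publish(int value) {
        payload = value; // (1) ordinary write, ordered before (2) by program order
        ready = true;    // (2) volatile write
    }

    int awaitPayload() {
        while (!ready) {   // (3) volatile read; once it sees true, (2) happens-before (3)
            Thread.yield();
        }
        return payload;    // (4) therefore guaranteed to see the write from (1)
    }

    // Runs a writer thread and returns what the reader observes.
    static int demo() throws InterruptedException {
        final VolatileFlag box = new VolatileFlag();
        Thread writer = new Thread(() -> box.publish(42));
        writer.start();
        int seen = box.awaitPayload();
        writer.join();
        return seen;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("reader saw " + demo());
    }
}
```

If `ready` were not volatile, the reader could spin forever or return a stale `payload`.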

- 21. Concurrent Collections -- achieve maximum results with little effort

- 22. concurrent vs. synchronized Pre-5.0 classes are thread-safe but not concurrent. Thread-safe synchronized collections: Hashtable, Vector, Collections.synchronizedMap. Often require locking during iteration. The monitor is a source of contention under concurrent access. Concurrent collections: allow multiple operations to overlap each other, at the cost of some slight differences in semantics. Might not support atomic operations

- 23. interface ConcurrentMap<K, V> extends Map<K, V> boolean replace(K key, V oldValue, V newValue); Replaces the entry for a key only if currently mapped to a given value. This is equivalent to if (map.containsKey(key) && map.get(key).equals(oldValue)) { map.put(key, newValue); return true; } else return false; except that the action is performed atomically. V replace(K key, V value); Replaces the entry for a key only if currently mapped to some value. This is equivalent to if (map.containsKey(key)) { return map.put(key, value); } else return null; except that the action is performed atomically. V putIfAbsent (K key, V value); If the specified key is not already associated with a value, associate it with the given value. This is equivalent to if (!map.containsKey(key)) return map.put(key, value); else return map.get(key); except that the action is performed atomically. boolean remove(Object key, Object value); Removes the entry for a key only if currently mapped to a given value. This is equivalent to if (map.containsKey(key) && map.get(key).equals(value)) { map.remove(key); return true; } else return false; except that the action is performed atomically.
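
These atomic methods are typically combined in a retry loop. The following sketch uses putIfAbsent and the three-argument replace to build a thread-safe counter without any explicit lock; the class `HitCounter` and the key "page" are hypothetical.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical example: a per-key counter built only from ConcurrentMap's atomic methods.
class HitCounter {
    private final ConcurrentMap<String, Integer> counts =
            new ConcurrentHashMap<String, Integer>();

    int increment(String key) {
        while (true) {
            Integer old = counts.putIfAbsent(key, 1);
            if (old == null) {
                return 1; // we inserted the first value
            }
            // Succeeds only if nobody changed the value since we read it.
            if (counts.replace(key, old, old + 1)) {
                return old + 1;
            }
            // Lost the race: loop and retry with the fresh value.
        }
    }

    int get(String key) {
        Integer value = counts.get(key);
        return value == null ? 0 : value;
    }

    // Increments one key from several threads and returns the final count.
    static int countConcurrently(int threads, final int perThread)
            throws InterruptedException {
        final HitCounter counter = new HitCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    counter.increment("page");
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return counter.get("page");
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("total: " + countConcurrently(4, 1000));
    }
}
```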

- 24. public class ConcurrentHashMap<K, V> extends AbstractMap<K, V> implements ConcurrentMap<K, V>, Serializable ConcurrentHashMap stays thread-safe while also providing a high level of concurrency and better throughput. How does it do this? (Use sharing data structure? JMM?)

- 25. Figure 1: Throughput of ConcurrentHashMap vs a synchronized HashMap under moderate contention as might be experienced by a frequently referenced cache on a busy server.

- 26. ConcurrentHashMap Hashtable and Collections.synchronizedMap scale poorly because they use a single map-wide lock. ConcurrentHashMap instead uses a set of locks (32 in the implementation described here; the JDK 5 class defaults to 16, adjustable through the concurrencyLevel constructor argument), each of which guards the put() and remove() operations on a subset of the hash buckets. In theory, this lets that many threads modify the map at the same time. Unfortunately, we pay for it in map-wide operations: size(), isEmpty(), rehash()

- 27. CopyOnWriteArrayList public boolean add(E e) { final ReentrantLock lock = this.lock; lock.lock(); try { Object[] elements = getArray(); int len = elements.length; Object[] newElements = Arrays.copyOf(elements, len + 1); newElements[len] = e; setArray(newElements); return true; } finally { lock.unlock(); } } Optimized for the case where iteration is much more frequent than insertion or removal. Ideal for event listeners. A thread-safe variant of ArrayList in which all mutative operations (add, set, and so on) are implemented by making a fresh copy of the underlying array.

- 28. CopyOnWriteArrayList List<String> listA = new CopyOnWriteArrayList<String>(); List<String> listB = new ArrayList<String>(); listA.add("a"); listA.add("b"); listB.addAll(listA); Iterator<String> itrA = listA.iterator(); Iterator<String> itrB = listB.iterator(); listA.add("c"); /* listB.add("c"); would make itrB.next() throw ConcurrentModificationException */ for (String s : listA) { System.out.println("listA " + s); /* a b c */ } for (String s : listB) { System.out.println("listB " + s); /* a b */ } while (itrA.hasNext()) { System.out.println("listA iterator " + itrA.next()); /* a b */ /* itrA.remove(); would throw java.lang.UnsupportedOperationException */ } while (itrB.hasNext()) { System.out.println("listB iterator " + itrB.next()); /* a b */ } The returned iterator provides a snapshot of the state of the list when the iterator was constructed. No synchronization is needed while traversing the iterator. The iterator does NOT support the remove, set or add methods. public Iterator<E> iterator() { return new COWIterator<E>(getArray(), 0); } public ListIterator<E> listIterator() { return new COWIterator<E>(getArray(), 0); }

- 29. Iteration semantics Synchronized collection iteration broken by concurrent changes in another thread Throws ConcurrentModificationException Locking a collection during iteration hurts scalability Concurrent collections can be modified concurrently during iteration Without locking the whole collection Without ConcurrentModificationException But changes may not be seen
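
The difference in iteration semantics can be observed even in a single thread, which keeps the demonstration deterministic: a structural change during iteration makes a HashMap iterator fail fast, while a ConcurrentHashMap iterator carries on. The class and method names below are hypothetical.

```java
import java.util.ConcurrentModificationException;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical demo: modifies the map while iterating over its key set.
class IterationSemantics {
    // Returns true if iteration was aborted by ConcurrentModificationException.
    static boolean failsDuringIteration(Map<String, Integer> map) {
        map.put("a", 1);
        map.put("b", 2);
        map.put("c", 3);
        try {
            for (String key : map.keySet()) {
                // The first call adds a new key (a structural modification);
                // later calls only overwrite its value.
                map.put("added", 0);
            }
            return false;
        } catch (ConcurrentModificationException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("HashMap fails fast:           "
                + failsDuringIteration(new HashMap<String, Integer>()));
        System.out.println("ConcurrentHashMap fails fast: "
                + failsDuringIteration(new ConcurrentHashMap<String, Integer>()));
    }
}
```

The ConcurrentHashMap iterator is weakly consistent: it never throws, but it may or may not show the key added during the traversal.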

- 30. Queue -- New interface added to java.util in JDK5.0 interface Queue<E> extends Collection<E> { boolean offer(E x); E poll(); E remove() throws NoSuchElementException; E peek(); E element() throws NoSuchElementException; } Retrofit (non-thread-safe)—implemented by LinkedList Add (non-thread-safe) PriorityQueue Fast thread-safe non-blocking ConcurrentLinkedQueue Better performance than LinkedList is possible as random-access requirement has been removed

- 31. interface BlockingQueue<E> extends Queue<E> class Producer implements Runnable { private final BlockingQueue queue; Producer(BlockingQueue q) { queue = q; } public void run() { try { while (true) { queue.put(produce()); } } catch (InterruptedException ex) { ... handle ...} } Object produce() { ... } } class Consumer implements Runnable { private final BlockingQueue queue; Consumer(BlockingQueue q) { queue = q; } public void run() { try { while (true) { consume(queue.take()); } } catch (InterruptedException ex) { ... handle ...} } void consume(Object x) { ... } } class Setup { void main() { BlockingQueue q = new SomeQueueImplementation(); Producer p = new Producer(q); Consumer c1 = new Consumer(q); Consumer c2 = new Consumer(q); new Thread(p).start(); new Thread(c1).start(); new Thread(c2).start(); } } Extends Queue to provide blocking operations. Retrieval: take waits for the queue to become nonempty. Insertion: put waits for capacity to become available

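- 32. Implementations of BlockingQueue ArrayBlockingQueue: a bounded queue backed by an array. Ordered FIFO, bounded, lock-based. LinkedBlockingQueue: an optionally bounded queue backed by linked nodes. Ordered FIFO, may be bounded, two-lock algorithm. PriorityBlockingQueue: an unbounded priority queue backed by a priority heap. Unordered but retrieves the least element, unbounded, lock-based. Elements are ordered by their Comparable implementation or by a Comparator passed to the constructor. The Iterator returned from iterator() is not required to return elements in priority order; if you must traverse all elements in priority order, copy them out with toArray() and sort them yourself, as in Arrays.sort(pq.toArray()). DelayQueue: a time-based scheduling queue backed by a priority heap. An element cannot be taken from the queue until its delay has elapsed. If several elements have expired, the one that expired earliest (and so has been expired the longest) is taken first. SynchronousQueue: rendezvous channel, lock-based. A simple rendezvous mechanism that uses the BlockingQueue interface. It has no internal capacity and works like a hand-off between threads: a producer that adds an element waits for a consumer in another thread, and when that consumer arrives the element is passed directly from producer to consumer without ever being stored in the queue.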
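
The PriorityBlockingQueue ordering caveat can be shown in a few lines: retrieval through poll() is ordered, and a sorted snapshot is the way to look at all elements in priority order without removing them. `PriorityOrderDemo` and its methods are hypothetical names for this sketch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;

// Hypothetical demo of ordered retrieval versus a sorted snapshot.
class PriorityOrderDemo {
    static PriorityBlockingQueue<Integer> sample() {
        PriorityBlockingQueue<Integer> q = new PriorityBlockingQueue<Integer>();
        q.add(5);
        q.add(1);
        q.add(4);
        q.add(2);
        q.add(3);
        return q;
    }

    // Non-destructive: copy the elements out and sort the copy.
    // (iterator() gives no ordering guarantee, so it is not used here.)
    static Integer[] sortedSnapshot(PriorityBlockingQueue<Integer> q) {
        Integer[] copy = q.toArray(new Integer[0]);
        Arrays.sort(copy);
        return copy;
    }

    // Destructive: poll() always removes the least element.
    static List<Integer> drain(PriorityBlockingQueue<Integer> q) {
        List<Integer> out = new ArrayList<Integer>();
        Integer next;
        while ((next = q.poll()) != null) {
            out.add(next);
        }
        return out;
    }

    public static void main(String[] args) {
        PriorityBlockingQueue<Integer> q = sample();
        System.out.println("snapshot: " + Arrays.toString(sortedSnapshot(q)));
        System.out.println("drained:  " + drain(q));
    }
}
```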

- 33. BlockingQueue Example class LogWriter { BlockingQueue<String> msgQ = new LinkedBlockingQueue<String>(); public void writeMessage(String msg) throws InterruptedException { msgQ.put(msg); } /* run in background thread */ public void logServer() { try { while (true) { System.out.println(msgQ.take()); } } catch (InterruptedException ie) { ... } } } Producer -> Blocking Queue -> Consumer

- 35. <<interface>> Lock newCondition() Before waiting on the condition the lock must be held by the current thread. A call to Condition#await() will atomically release the lock before waiting and re-acquire the lock before the wait returns. Use of monitor synchronization is just fine for most applications, but it has some shortcomings Single wait-set per lock No way to interrupt a thread waiting for a lock No way to time-out when waiting for a lock Locking must be block-structured Inconvenient to acquire a variable number of locks at once Advanced techniques, such as hand-over-hand locking, are not possible Lock objects address these concerns. But if you don’t need them, stick with synchronized tryLock() Acquires the lock if it is available and returns immediately with the value true. If the lock is not available then this method will return immediately with the value false. Lock lock = ...; if (lock .tryLock() ) { try { // manipulate protected state } finally { lock .unlock() ; } } else { // perform alternative actions }

- 36. Figure 1. Throughput of synchronized and Lock, single CPU. Figure 2. Throughput of synchronized and Lock (normalized), 4 CPUs

- 37. public class ReentrantLock implements Lock, java.io.Serializable Flexible, high-performance lock implementation. When many threads all want to access a shared resource, the JVM can spend less time scheduling threads and more time executing them. Reentrant, mutual-exclusion lock with the same semantics as synchronized but extra features: Can interrupt a thread waiting to acquire a lock (interruptible lock wait). Can specify a timeout while waiting for a lock (timed lock wait). Can poll for lock availability (lock polling). Multiple wait-sets per lock via the Condition interface. High performance under contention; generally better than synchronized. Less convenient to use: requires a finally block to ensure release. A ReentrantLock is owned by the thread that last successfully locked it and has not yet unlocked it. A thread invoking lock will return, successfully acquiring the lock, when the lock is not owned by another thread. The method returns immediately if the current thread already owns the lock.

- 38. ReadWriteLock -- Multiple-reader, single-writer exclusion lock ReadWriteLock defines a pair of locks interface ReadWriteLock { Lock readLock(); Lock writeLock(); } Use for frequent long “reads” with few “writes”. Various implementation policies are possible. The ReentrantReadWriteLock class: implements ReadWriteLock, Serializable. Provides reentrant read and write locks. Allows writer to acquire read lock. Allows writer to downgrade to read lock (reentrancy also allows downgrading from the write lock to a read lock: acquire the write lock, then acquire the read lock, and finally release the write lock. Upgrading from a read lock to the write lock, however, is not possible.) Supports “fair” and “writer preference” acquisition

- 39. ReadWriteLock Example class ReadWriteMap { final Map<String, Data> m = new TreeMap<String, Data>(); final ReentrantReadWriteLock rwl = new ReentrantReadWriteLock(); final Lock r = rwl.readLock(); final Lock w = rwl.writeLock(); public Data get(String key) { r.lock(); try { return m.get(key);} finally { r.unlock(); } } public Data put(String key, Data value) { w.lock(); try { return m.put(key, value); } finally { w.unlock(); } } public void clear() { w.lock(); try { m.clear(); } finally { w.unlock(); } } }
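
Lock downgrading (write lock, then read lock, then release the write lock) is easiest to see in a lazily loaded cache entry. The sketch below follows that order; the class `CachedValue`, the `load()` helper, and the "loaded#n" strings are assumptions made for illustration.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical example of downgrading from the write lock to the read lock.
class CachedValue {
    private final ReentrantReadWriteLock rwl = new ReentrantReadWriteLock();
    private String value;
    private boolean valid;
    private int loads;

    String get() {
        rwl.readLock().lock();
        if (!valid) {
            // Upgrading is not possible: release the read lock first.
            rwl.readLock().unlock();
            rwl.writeLock().lock();
            try {
                // Re-check: another thread may have loaded the value meanwhile.
                if (!valid) {
                    value = load();
                    valid = true;
                }
                // Downgrade: take the read lock while still holding the write lock.
                rwl.readLock().lock();
            } finally {
                rwl.writeLock().unlock(); // still holding the read lock
            }
        }
        try {
            return value;
        } finally {
            rwl.readLock().unlock();
        }
    }

    int loadCount() {
        rwl.readLock().lock();
        try {
            return loads;
        } finally {
            rwl.readLock().unlock();
        }
    }

    // Stand-in for an expensive computation; only called under the write lock.
    private String load() {
        loads++;
        return "loaded#" + loads;
    }

    public static void main(String[] args) {
        CachedValue cache = new CachedValue();
        System.out.println(cache.get());
        System.out.println(cache.get());
        System.out.println("loads: " + cache.loadCount());
    }
}
```

Because the read lock is acquired before the write lock is released, no other writer can slip in between loading the value and reading it.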

- 40. <<interface>> Condition class BoundedBuffer { final Lock lock = new ReentrantLock(); final Condition notFull = lock.newCondition(); final Condition notEmpty = lock.newCondition(); final Object[] items = new Object[100]; int putptr, takeptr, count; public void put(Object x) throws InterruptedException { lock.lock(); try { while (count == items.length) notFull.await(); items[putptr] = x; if (++putptr == items.length) putptr = 0; ++count; notEmpty.signal(); } finally { lock.unlock(); } } public Object take() throws InterruptedException { lock.lock(); try { while (count == 0) notEmpty.await(); Object x = items[takeptr]; if (++takeptr == items.length) takeptr = 0; --count; notFull.signal(); return x; } finally { lock.unlock(); } } } Monitor-like operations for working with Locks. Condition factors out the Object monitor methods wait, notify and notifyAll into distinct objects to give the effect of having multiple wait-sets per object, by combining them with the use of arbitrary Lock implementations. Where a Lock replaces the use of synchronized methods and statements, a Condition replaces the use of the Object monitor methods.

- 41. Synchronizers Semaphore CyclicBarrier CountDownLatch Exchanger

- 42. Semaphore class Pool { private static final int MAX_AVAILABLE = 100; private final Semaphore available = new Semaphore(MAX_AVAILABLE, true); public Object getItem() throws InterruptedException { available.acquire(); return getNextAvailableItem(); } public void putItem(Object x) { if (markAsUnused(x)) available.release(); } /* Not a particularly efficient data structure; just for demo */ protected Object[] items = ... whatever kinds of items being managed protected boolean[] used = new boolean[MAX_AVAILABLE]; protected synchronized Object getNextAvailableItem() { for (int i = 0; i < MAX_AVAILABLE; ++i) { if (!used[i]) { used[i] = true; return items[i]; } } return null; /* not reached */ } protected synchronized boolean markAsUnused(Object item) { for (int i = 0; i < MAX_AVAILABLE; ++i) { if (item == items[i]) { if (used[i]) { used[i] = false; return true; } else return false; } } return false; } } A counting semaphore. Conceptually, a semaphore maintains a set of permits. Each acquire() blocks if necessary until a permit is available, and then takes it. Each release() adds a permit, potentially releasing a blocking acquirer. However, no actual permit objects are used; the Semaphore just keeps a count of the number available and acts accordingly. Before obtaining an item each thread must acquire a permit from the semaphore, guaranteeing that an item is available for use. When the thread has finished with the item it is returned back to the pool and a permit is returned to the semaphore, allowing another thread to acquire that item.

- 43. CyclicBarrier Diagram: thread1, thread2, and thread3 each call await() on CyclicBarrier barrier = new CyclicBarrier(3); class Solver { final int N; final float[][] data; final CyclicBarrier barrier; class Worker implements Runnable { int myRow; Worker(int row) { myRow = row; } public void run() { while (!done()) { processRow(myRow); try { barrier.await(); } catch (InterruptedException ex) { return; } catch (BrokenBarrierException ex) { return; } } } } public Solver(float[][] matrix) { data = matrix; N = matrix.length; barrier = new CyclicBarrier(N, new Runnable() { public void run() { mergeRows(...); } }); for (int i = 0; i < N; ++i) new Thread(new Worker(i)).start(); waitUntilDone(); } } A synchronization aid that allows a set of threads to all wait for each other to reach a common barrier point. CyclicBarriers are useful in programs involving a fixed sized party of threads that must occasionally wait for each other. The barrier is called cyclic because it can be re-used after the waiting threads are released. int await(): waits until all parties have invoked await on this barrier.

- 44. CountDownLatch class Driver { // ... void main() throws InterruptedException { CountDownLatch startSignal = new CountDownLatch(1); CountDownLatch doneSignal = new CountDownLatch(N); for (int i = 0; i < N; ++i) // create and start threads new Thread(new Worker(startSignal, doneSignal)).start(); doSomethingElse(); // don't let run yet startSignal.countDown (); // let all threads proceed doSomethingElse(); doneSignal.await (); // wait for all to finish } } class Worker implements Runnable { private final CountDownLatch startSignal; private final CountDownLatch doneSignal; Worker(CountDownLatch startSignal, CountDownLatch doneSignal) { this.startSignal = startSignal; this.doneSignal = doneSignal; } public void run() { try { startSignal.await (); doWork(); doneSignal.countDown (); } catch (InterruptedException ex) {} // return; } void doWork() { ... } } A CountDownLatch is initialized with a given count. The await() methods block until the current count reaches zero due to invocations of the countDown() method, after which all waiting threads are released and any subsequent invocations of await return immediately. This is a one-shot phenomenon -- the count cannot be reset. If you need a version that resets the count, consider using a CyclicBarrier. Here is a pair of classes in which a group of worker threads use two countdown latches: The first is a start signal that prevents any worker from proceeding until the driver is ready for them to proceed; The second is a completion signal that allows the driver to wait until all workers have completed.

- 45. CountDownLatch class Driver2 { /* ... */ void main() throws InterruptedException { CountDownLatch doneSignal = new CountDownLatch(N); Executor e = ... for (int i = 0; i < N; ++i) /* create and start threads */ e.execute(new WorkerRunnable(doneSignal, i)); doneSignal.await(); /* wait for all to finish */ } } class WorkerRunnable implements Runnable { private final CountDownLatch doneSignal; private final int i; WorkerRunnable(CountDownLatch doneSignal, int i) { this.doneSignal = doneSignal; this.i = i; } public void run() { doWork(i); doneSignal.countDown(); } void doWork(int i) { ... } } Another typical usage would be to divide a problem into N parts, describe each part with a Runnable that executes that portion and counts down on the latch, and queue all the Runnables to an Executor. When all sub-parts are complete, the coordinating thread will be able to pass through await.

- 46. interface CompletionService public class TestCompletionService { public static void main(String[] args) throws InterruptedException, ExecutionException { ExecutorService exec = Executors.newFixedThreadPool(10); CompletionService<String> serv = new ExecutorCompletionService<String>(exec); for (int index = 0; index < 5; index++) { final int NO = index; Callable<String> downImg = new Callable<String>() { public String call() throws Exception { Thread.sleep((long) (Math.random() * 10000)); return "Downloaded Image " + NO; } }; serv.submit(downImg); } Thread.sleep(1000 * 2); System.out.println("Show web content"); for (int index = 0; index < 5; index++) { Future<String> task = serv.take(); String img = task.get(); System.out.println(img); } System.out.println("End"); /* shut down the thread pool */ exec.shutdown(); } } Sample output (the order of the images varies from run to run): Show web content Downloaded Image 1 Downloaded Image 2 Downloaded Image 4 Downloaded Image 0 Downloaded Image 3 End A service that decouples the production of new asynchronous tasks from the consumption of the results of completed tasks. Producers submit tasks for execution. Consumers take completed tasks and process their results in the order they complete. ExecutorCompletionService is a CompletionService that uses a supplied Executor to execute tasks. This class arranges that submitted tasks are, upon completion, placed on a queue accessible using take. The class is lightweight enough to be suitable for transient use when processing groups of tasks.

- 47. Exchanger Allows two threads to rendezvous and exchange data. Such as exchanging an empty buffer for a full one.
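
A minimal sketch of the rendezvous: one thread offers a "full buffer", the other an "empty buffer", and each walks away with what the other brought. The class `ExchangerDemo` and the string stand-ins for real buffers are assumptions for illustration.

```java
import java.util.concurrent.Exchanger;

// Hypothetical demo: strings stand in for real buffers.
class ExchangerDemo {
    // Returns { what the filling thread received, what the emptying thread received }.
    static String[] swap() throws InterruptedException {
        final Exchanger<String> exchanger = new Exchanger<String>();
        final String[] received = new String[2];

        Thread filler = new Thread(() -> {
            try {
                // Blocks until the other thread also calls exchange().
                received[0] = exchanger.exchange("full buffer");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        filler.start();

        // The current thread plays the emptying side.
        received[1] = exchanger.exchange("empty buffer");
        filler.join(); // join() makes the filler's write to received[0] visible
        return received;
    }

    public static void main(String[] args) throws InterruptedException {
        String[] result = swap();
        System.out.println("filler got:  " + result[0]);
        System.out.println("emptier got: " + result[1]);
    }
}
```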

- 49. Lock-free Algorithms Why? A thread blocks when it tries to acquire a lock held by another thread, and a blocked thread cannot do anything else. If the blocked thread is a high-priority task, the result is bad: priority inversion. Deadlock (more than one lock, acquired in different orders). Locking is a coarse-grained coordination mechanism, and so is heavyweight for managing simple operations such as incrementing a counter or updating a mutex owner. Are there finer-grained mechanisms? Yes: modern processors support multiprocessing. Almost every modern processor has instructions that update shared variables in a way that can detect or prevent concurrent access from other processors: hardware synchronization primitives. Non-blocking algorithms

- 50. Compare and Swap (CAS) A CAS operation takes three operands: a memory location (V), the expected old value (A), and the new value (B). If the value at the memory location matches the expected old value, the processor atomically updates the location to the new value; otherwise it does nothing. Based around optimistic state updates: read the current state; compute the next state; swap in the next state if the current state hasn’t changed (but be aware of the ABA problem!); repeat on failure. Lightweight, and can be implemented in hardware. A concurrent algorithm based on CAS is called lock-free because threads no longer need to wait for a lock or mutex. public class SimulatedCAS { private int value; public synchronized int getValue() { return value; } /* returns the value that was present before the call, whether or not the swap happened */ public synchronized int compareAndSwap(int expectedValue, int newValue) { int oldValue = value; if (value == expectedValue) value = newValue; return oldValue; } } public class CasCounter { private final SimulatedCAS value = new SimulatedCAS(); public int getValue() { return value.getValue(); } public int increment() { int oldValue = value.getValue(); while (value.compareAndSwap(oldValue, oldValue + 1) != oldValue) oldValue = value.getValue(); return oldValue + 1; } }
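
The ABA problem mentioned above can be reproduced deterministically in one thread: a value changes from A to B and back to A, and a plain CAS cannot tell that anything happened. One possible remedy, shown here as a sketch with hypothetical class and method names, is java.util.concurrent.atomic.AtomicStampedReference, which pairs the reference with a version stamp.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.atomic.AtomicStampedReference;

// Hypothetical demo; the A -> B -> A change is simulated in a single thread.
class AbaDemo {
    // A plain CAS compares only the reference, so it succeeds after A -> B -> A.
    static boolean plainCasSucceedsAfterAba() {
        AtomicReference<String> ref = new AtomicReference<String>("A");
        String seen = ref.get();
        ref.set("B"); // "another thread" changes the value...
        ref.set("A"); // ...and changes it back
        return ref.compareAndSet(seen, "C");
    }

    // A stamped CAS also compares a version number, so the same change is detected.
    static boolean stampedCasSucceedsAfterAba() {
        AtomicStampedReference<String> ref =
                new AtomicStampedReference<String>("A", 0);
        int[] stampHolder = new int[1];
        String seen = ref.get(stampHolder); // reads reference and stamp together
        int seenStamp = stampHolder[0];
        ref.set("B", seenStamp + 1);
        ref.set("A", seenStamp + 2);
        // Reference matches, but the stamp is now 2 instead of 0: the CAS fails.
        return ref.compareAndSet(seen, "C", seenStamp, seenStamp + 1);
    }

    public static void main(String[] args) {
        System.out.println("plain CAS succeeded:   " + plainCasSucceedsAfterAba());
        System.out.println("stamped CAS succeeded: " + stampedCasSucceedsAfterAba());
    }
}
```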

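- 51. Non-blocking Algorithm Lock-free, with no waiting; also called an optimistic algorithm. Avoids priority inversion and deadlock; low cost under contention; fine-grained scheduling and coordination; high concurrency. Non-blocking versions have several performance advantages over lock-based versions. First, they replace the JVM's locking code path with the hardware's native primitives, synchronizing at a finer level of granularity (an individual memory location), and a thread that loses can retry immediately rather than being suspended and rescheduled. The finer granularity reduces the chance of contention, and the ability to retry without being rescheduled reduces its cost. Even with a few failed CAS operations, this approach is still likely to be much faster than being rescheduled because of lock contention. Atomic variable classes: java.util.concurrent.atomic. These primitives are implemented using the fastest native construct available on the platform (compare-and-swap, load-linked/store-conditional, or, in the worst case, spin locks). Nearly all the classes in the java.util.concurrent package use atomic variables, directly or indirectly, instead of synchronization. Classes like ConcurrentLinkedQueue use atomic variables to directly implement wait-free algorithms, and classes like ConcurrentHashMap use ReentrantLock for locking where needed. ReentrantLock, in turn, uses atomic variables to maintain the queue of threads waiting for the lock. Finer-grained means lighter-weight. A common technique for tuning the scalability of a concurrent application that is experiencing contention is to reduce the granularity of the lock objects used, in the hope that more lock acquisitions go from contended to uncontended. Converting from locking to atomic variables achieves the same end: by switching to a finer-grained coordination mechanism, fewer operations are contended, which improves throughput.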

- 52. Benchmark throughput of synchronization, ReentrantLock, fair Lock, and AtomicLong on an 8-way Ultrasparc3. Benchmark throughput of synchronization, ReentrantLock, fair Lock, and AtomicLong on a single-processor Pentium 4

- 53. A non-blocking algorithm using CAS public class NonblockingCounter { private final AtomicInteger value = new AtomicInteger(); public int getValue() { return value.get(); } public int increment() { int v; do { v = value.get(); } while (!value.compareAndSet(v, v + 1)); return v + 1; } } A non-blocking stack using Treiber's algorithm public class ConcurrentStack<E> { AtomicReference<Node<E>> head = new AtomicReference<Node<E>>(); public void push(E item) { Node<E> newHead = new Node<E>(item); Node<E> oldHead; do { oldHead = head.get(); newHead.next = oldHead; } while (!head.compareAndSet(oldHead, newHead)); } public E pop() { Node<E> oldHead; Node<E> newHead; do { oldHead = head.get(); if (oldHead == null) return null; newHead = oldHead.next; } while (!head.compareAndSet(oldHead, newHead)); return oldHead.item; } static class Node<E> { final E item; Node<E> next; public Node(E item) { this.item = item; } } }

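- 54. Performance Under light to moderate contention, non-blocking algorithms outperform blocking ones, because most of the time the CAS succeeds on the first try, and the penalty for contention when it does occur involves no thread suspension or context switch, just a few more loop iterations. An uncontended CAS is much cheaper than an uncontended lock acquisition (this must be true, because an uncontended lock acquisition involves a CAS plus additional processing), and a contended CAS involves a shorter delay than a contended lock acquisition. Under high contention (that is, when many threads are constantly contending for a single memory location), lock-based algorithms start to offer better throughput than non-blocking ones, because a thread that blocks stops contending and patiently waits its turn, avoiding further contention. Contention this high is uncommon, however, because most of the time threads interleave thread-local computation with operations that contend for shared data, giving other threads a chance at the shared data.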

- 55. A non-blocking linked list: insertion in the Michael-Scott non-blocking queue algorithm public class LinkedQueue<E> { private static class Node<E> { final E item; final AtomicReference<Node<E>> next; Node(E item, Node<E> next) { this.item = item; this.next = new AtomicReference<Node<E>>(next); } } private final Node<E> dummy = new Node<E>(null, null); private final AtomicReference<Node<E>> head = new AtomicReference<Node<E>>(dummy); private final AtomicReference<Node<E>> tail = new AtomicReference<Node<E>>(dummy); public boolean put(E item) { Node<E> newNode = new Node<E>(item, null); while (true) { Node<E> curTail = tail.get(); Node<E> residue = curTail.next.get(); if (curTail == tail.get()) { if (residue == null) { if (curTail.next.compareAndSet(null, newNode)) { tail.compareAndSet(curTail, newNode); return true; } } else { tail.compareAndSet(curTail, residue); } } } } } Helping your neighbor: if a thread finds the data structure in the middle of an update, it can "help" the thread performing the update to finish it, and then proceed with its own operation.
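
The slide shows only insertion. As a hedged sketch of how removal fits the same "help your neighbor" scheme, the class below pairs a put with a poll in the Michael-Scott style. It is an illustration written for this section (the names `MSQueue`, `poll`, and `concurrentDrainCount` are assumptions), not the JDK's ConcurrentLinkedQueue, and it relies on garbage collection to avoid node reuse.

```java
import java.util.concurrent.atomic.AtomicReference;

// Illustrative Michael-Scott style queue with a dummy head node.
class MSQueue<E> {
    private static class Node<E> {
        final E item;
        final AtomicReference<Node<E>> next = new AtomicReference<Node<E>>();
        Node(E item) { this.item = item; }
    }

    private final AtomicReference<Node<E>> head;
    private final AtomicReference<Node<E>> tail;

    MSQueue() {
        Node<E> dummy = new Node<E>(null);
        head = new AtomicReference<Node<E>>(dummy);
        tail = new AtomicReference<Node<E>>(dummy);
    }

    public void put(E item) {
        Node<E> newNode = new Node<E>(item);
        while (true) {
            Node<E> curTail = tail.get();
            Node<E> next = curTail.next.get();
            if (curTail != tail.get()) continue;   // tail moved, re-read
            if (next != null) {                    // intermediate state:
                tail.compareAndSet(curTail, next); // help advance the tail
                continue;
            }
            if (curTail.next.compareAndSet(null, newNode)) { // link the node
                tail.compareAndSet(curTail, newNode);        // may fail if helped
                return;
            }
        }
    }

    // Removes and returns the oldest element, or null if the queue is empty.
    public E poll() {
        while (true) {
            Node<E> curHead = head.get();
            Node<E> curTail = tail.get();
            Node<E> first = curHead.next.get();
            if (curHead != head.get()) continue;    // head moved, re-read
            if (first == null) return null;         // only the dummy is left
            if (curHead == curTail) {               // tail is lagging behind:
                tail.compareAndSet(curTail, first); // help before dequeuing
                continue;
            }
            if (head.compareAndSet(curHead, first)) {
                return first.item; // first becomes the new dummy node
            }
        }
    }

    // Several threads put items concurrently; returns how many can be polled afterwards.
    static int concurrentDrainCount(int threads, final int perThread)
            throws InterruptedException {
        final MSQueue<Integer> q = new MSQueue<Integer>();
        Thread[] producers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            producers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) q.put(i);
            });
            producers[t].start();
        }
        for (Thread p : producers) p.join();
        int count = 0;
        while (q.poll() != null) count++;
        return count;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("drained: " + concurrentDrainCount(4, 1000));
    }
}
```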

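- 56. Quiescent state Intermediate state: after the new element has been inserted but before the tail pointer has been updated Quiescent state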

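- 57. Conclusion If you dig into the JVM and the operating system, you will find nonblocking algorithms everywhere. The garbage collector uses them to speed up concurrent and parallel garbage collection; the scheduler uses them to schedule threads and processes efficiently and to implement intrinsic locking. In Mustang (Java 6.0), the lock-based SynchronousQueue algorithm was replaced with a new nonblocking version. Few developers use SynchronousQueue directly, but thread pools built with the Executors.newCachedThreadPool() factory use it as their work queue. Benchmarks comparing cached thread pool performance showed the new nonblocking synchronous queue implementation to be almost three times as fast as the current implementation.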
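
Since SynchronousQueue is rarely used directly, a small hand-off example may help show why a cached thread pool uses it as a work queue: the queue has no capacity, so each `put` must meet a `take`. The class and method names below (`HandOffDemo`, `handOff`, `offerWithoutConsumer`) are assumptions for illustration.

```java
import java.util.concurrent.SynchronousQueue;

// Hypothetical demo of a direct hand-off between two threads.
class HandOffDemo {
    // Passes `message` from a producer thread to the calling thread.
    static String handOff(final String message) {
        final SynchronousQueue<String> queue = new SynchronousQueue<String>();
        Thread producer = new Thread(new Runnable() {
            public void run() {
                try {
                    queue.put(message); // blocks until a consumer is ready to take
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }
        });
        producer.start();
        try {
            String received = queue.take(); // meets the producer's put
            producer.join();
            return received;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    // offer() does not wait: with no consumer waiting, nothing can be stored.
    static boolean offerWithoutConsumer() {
        return new SynchronousQueue<String>().offer("nobody is waiting");
    }

    public static void main(String[] args) {
        System.out.println(handOff("task"));        // prints "task"
        System.out.println(offerWithoutConsumer()); // prints "false"
    }
}
```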

- 58. A simple implementation of a network server public class Server { private static int produceTaskSleepTime = 100; private static int consumeTaskSleepTime = 1200; private static int produceTaskMaxNumber = 100; private static final int CORE_POOL_SIZE = 2; private static final int MAX_POOL_SIZE = 100; private static final int KEEPALIVE_TIME = 3; private static final int QUEUE_CAPACITY = (CORE_POOL_SIZE + MAX_POOL_SIZE) / 2; private static final TimeUnit TIME_UNIT = TimeUnit.SECONDS; private static final String HOST = "127.0.0.1"; private static final int PORT = 19527; private BlockingQueue<Runnable> workQueue = new ArrayBlockingQueue<Runnable>( QUEUE_CAPACITY); //private ThreadPoolExecutor serverThreadPool = null; private ExecutorService pool = null; private RejectedExecutionHandler rejectedExecutionHandler = new ThreadPoolExecutor.DiscardOldestPolicy(); private ServerSocket serverListenSocket = null; private int times = 5;

- 59. public void start() { // You can also init thread pool in this way. /*serverThreadPool = new ThreadPoolExecutor(CORE_POOL_SIZE, MAX_POOL_SIZE, KEEPALIVE_TIME, TIME_UNIT, workQueue, rejectedExecutionHandler);*/ pool = Executors.newFixedThreadPool(10); try { serverListenSocket = new ServerSocket(PORT); serverListenSocket.setReuseAddress(true); System.out.println("I'm listening"); while (times-- > 0) { Socket socket = serverListenSocket.accept(); String welcomeString = "hello"; //serverThreadPool.execute(new ServiceThread(socket, welcomeString)); pool.execute(new ServiceThread(socket)); } } catch (IOException e) { // TODO Auto-generated catch block e.printStackTrace(); } cleanup(); } public void cleanup() { if (null != serverListenSocket) { try { serverListenSocket.close(); } catch (IOException e) { // TODO Auto-generated catch block e.printStackTrace(); } } //serverThreadPool.shutdown(); pool.shutdown(); } public static void main(String args[]) { Server server = new Server(); server.start(); } }
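
The commented-out ThreadPoolExecutor path in the server can be tried on its own. The sketch below reuses the server's constants (core size 2, maximum size 100, 3 second keep-alive, bounded queue, DiscardOldestPolicy); the class name `BoundedPoolSketch` and the helper `runTasks` are assumptions for illustration.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-alone version of the server's commented-out pool setup.
class BoundedPoolSketch {
    static final int CORE_POOL_SIZE = 2;
    static final int MAX_POOL_SIZE = 100;
    static final int KEEPALIVE_TIME = 3;
    static final int QUEUE_CAPACITY = (CORE_POOL_SIZE + MAX_POOL_SIZE) / 2; // 51

    static ThreadPoolExecutor newServerPool() {
        return new ThreadPoolExecutor(
                CORE_POOL_SIZE, MAX_POOL_SIZE,
                KEEPALIVE_TIME, TimeUnit.SECONDS,
                new ArrayBlockingQueue<Runnable>(QUEUE_CAPACITY),   // bounded work queue
                new ThreadPoolExecutor.DiscardOldestPolicy());      // on overflow, drop the oldest queued task
    }

    // Submits `taskCount` trivial tasks and returns how many actually ran.
    static int runTasks(int taskCount) {
        ThreadPoolExecutor pool = newServerPool();
        final AtomicInteger completed = new AtomicInteger();
        for (int i = 0; i < taskCount; i++) {
            pool.execute(new Runnable() {
                public void run() {
                    completed.incrementAndGet();
                }
            });
        }
        pool.shutdown(); // stop accepting work, let queued tasks finish
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println(runTasks(10)); // 10 tasks fit in 2 core threads plus the queue
    }
}
```

With this configuration the pool grows beyond its two core threads only once the queue is full, and the saturation policy applies only when the queue is full and all 100 threads are busy.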

- 60. class ServiceThread implements Runnable, Serializable { private static final long serialVersionUID = 0; private Socket connectedSocket = null; private String helloString = null; private static int count = 0; private static ReentrantLock lock = new ReentrantLock(); ServiceThread(Socket socket) { connectedSocket = socket; } public void run() { increaseCount(); int curCount = getCount(); helloString = "hello, id = " + curCount + "\r\n"; ExecutorService executor = Executors.newSingleThreadExecutor(); Future<String> future = executor.submit(new TimeConsumingTask()); DataOutputStream dos = null; try { dos = new DataOutputStream(connectedSocket.getOutputStream()); dos.write(helloString.getBytes()); try { dos.write("let's do something else.\r\n".getBytes()); String result = future.get(); dos.write(result.getBytes()); } catch (InterruptedException e) { e.printStackTrace(); } catch (ExecutionException e) { e.printStackTrace(); } } catch (IOException e) { // TODO Auto-generated catch block e.printStackTrace(); } finally { if (null != dos) { try { dos.close(); } catch (IOException e) { // TODO Auto-generated catch block e.printStackTrace(); } } if (null != connectedSocket) { try { connectedSocket.close(); } catch (IOException e) { // TODO Auto-generated catch block e.printStackTrace(); } } executor.shutdown(); } }

- 61. private int getCount() { int ret = 0; lock.lock(); try { ret = count; } finally { lock.unlock(); } return ret; } private void increaseCount() { lock.lock(); try { ++count; } finally { lock.unlock(); } } } class TimeConsumingTask implements Callable<String> { public String call() throws Exception { System.out.println("It's a time-consuming task, you'd better retrieve your result in the future"); return "ok, here's the result: It takes me lots of time to produce this result"; } }
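
Two details of ServiceThread are worth a closer look. First, `increaseCount()` and `getCount()` are two separately locked steps, so two connections handled at the same time can read the same id; a single atomic `incrementAndGet()` avoids that. Second, `future.get()` waits indefinitely, whereas the timed overload bounds the wait. The sketch below shows both under assumed names (`GreetingSketch`, `nextId`, `slowResult`); it is an illustration, not the deck's implementation.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper showing an atomic id counter and a bounded wait on a Future.
class GreetingSketch {
    private static final AtomicInteger count = new AtomicInteger();

    // Increment and read in one atomic step, so every caller gets a distinct id.
    static int nextId() {
        return count.incrementAndGet();
    }

    // Runs a task in the background and waits at most `timeoutMillis` for its result.
    static String slowResult(long timeoutMillis) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            Future<String> future = executor.submit(new Callable<String>() {
                public String call() {
                    return "ok, here's the result";
                }
            });
            // ... the caller is free to do other work here ...
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            return "timed out";
        } catch (ExecutionException e) {
            return "failed: " + e.getCause();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return "interrupted";
        } finally {
            executor.shutdownNow(); // also cancels the task if it is still running
        }
    }

    public static void main(String[] args) {
        System.out.println("hello, id = " + nextId());
        System.out.println(slowResult(5000));
    }
}
```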

- 62. Q&A

Editor's Notes

- #9: saturation [sætʃə‘reiʃən] 饱和

- #20: mutual [‘mju:tjuəl] 相互的

- #27: HashMaps can be very important on server applications for caching purposes. And as such, they can receive a good deal of concurrent access. Before Java 5, the standard HashMap implementation had the weakness that accessing the map concurrently meant synchronizing on the entire map on each access. Synchronizing on the whole map fails to take advantage of a possible optimisation: because hash maps store their data in a series of separate 'buckets', it is in principle possible to lock only the portion of the map that is being accessed. This optimisation is generally called lock striping. Java 5 brings a hash map optimised in this way in the form of ConcurrentHashMap. A combination of lock striping plus judicious use of volatile variables gives the class two highly concurrent properties: Writing to a ConcurrentHashMap locks only a portion of the map; Reads can generally occur without locking.
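
As a sketch of the caching-style use described in this note, the class below counts hits per key from several threads without locking the whole map. It relies only on the public `ConcurrentMap` contract (`get`, `putIfAbsent`), not on how the locking is implemented internally; `HitCounter` and its methods are hypothetical names.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical per-key hit counter shared by many threads.
class HitCounter {
    private final ConcurrentMap<String, AtomicInteger> hits =
            new ConcurrentHashMap<String, AtomicInteger>();

    void record(String key) {
        AtomicInteger counter = hits.get(key); // reads generally do not lock
        if (counter == null) {
            AtomicInteger fresh = new AtomicInteger();
            counter = hits.putIfAbsent(key, fresh); // atomic check-then-insert
            if (counter == null) {
                counter = fresh; // we won the race to create the entry
            }
        }
        counter.incrementAndGet();
    }

    int count(String key) {
        AtomicInteger counter = hits.get(key);
        return counter == null ? 0 : counter.get();
    }

    // Runs `threads` threads that each record `perThread` hits on the same key.
    static HitCounter simulate(int threads, final int perThread) {
        final HitCounter counter = new HitCounter();
        final CountDownLatch done = new CountDownLatch(threads);
        for (int t = 0; t < threads; t++) {
            new Thread(new Runnable() {
                public void run() {
                    for (int i = 0; i < perThread; i++) {
                        counter.record("/index");
                    }
                    done.countDown();
                }
            }).start();
        }
        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter;
    }

    public static void main(String[] args) {
        System.out.println(simulate(4, 1000).count("/index")); // prints 4000
    }
}
```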

- #31: retrofit [‘retrəfit] 更新

![Executors Example – Web Server poor resource management class UnstableWebServer { public static void main(String[] args) { ServerSocket socket = new ServerSocket(80); while (true) { final Socket connection = socket.accept(); Runnable r = new Runnable() { public void run() { handleRequest(connection); } }; // Don't do this! new Thread(r).start(); } } } better resource management class BetterWebServer { Executor pool = Executors.newFixedThreadPool(7); public static void main(String[] args) { ServerSocket socket = new ServerSocket(80); while (true) { final Socket connection = socket.accept(); Runnable r = new Runnable() { public void run() { handleRequest(connection); } }; pool.execute(r); } } }](https://image.slidesharecdn.com/javautilconcurrent-110402031632-phpapp02/85/Java-util-concurrent-11-320.jpg)

![Thread pool package concurrent; import java.util.concurrent.ExecutorService; import java.util.concurrent.Executors; public class TestThreadPool { public static void main(String args[]) throws InterruptedException { // only two threads ExecutorService exec = Executors.newFixedThreadPool(2); for(int index = 0; index < 100; index++) { Runnable run = new Runnable() { public void run() { long time = (long) (Math.random() * 1000); System.out.println("Sleeping " + time + "ms"); try { Thread.sleep(time); } catch (InterruptedException e) { } } }; exec.execute(run); } // must shutdown exec.shutdown(); } } The thread pool size is 2, how can it deal with 100 threads? Will this block the main thread since no more threads are ready for use in the for loop? All runnable instances will be put into a LinkedBlockingQueue through execute(runnable) We use Executors to construct all kinds of thread pools. fixed, single, cached, scheduled. Use ThreadPoolExecutor to construct thread pool with special corePoolSize, maximumPoolSize, keepAliveTime, timeUnit, and blockingQueue.](https://image.slidesharecdn.com/javautilconcurrent-110402031632-phpapp02/85/Java-util-concurrent-12-320.jpg)

![ScheduledThreadPool package concurrent; import static java.util.concurrent.TimeUnit.SECONDS; import java.util.Date; import java.util.concurrent.Executors; import java.util.concurrent.ScheduledExecutorService; import java.util.concurrent.ScheduledFuture; public class TestScheduledThread { public static void main(String[] args) { final ScheduledExecutorService scheduler = Executors.newScheduledThreadPool (2); final Runnable beeper = new Runnable() { int count = 0; public void run() { System.out.println(new Date() + " beep " + (++count)); } }; // 1 秒钟后运行,并每隔 2 秒运行一次 final ScheduledFuture<?> beeperHandle = scheduler .scheduleAtFixedRate (beeper, 1, 2, SECONDS); // 2 秒钟后运行,并每次在上次任务运行完后等待 5 秒后重新运行 final ScheduledFuture<?> beeperHandle2 = scheduler .scheduleWithFixedDelay (beeper, 2, 5, SECONDS); // 30 秒后结束关闭任务,并且关闭 Scheduler, We don’t need close it in 24 hours run applications scheduler .schedule ( new Runnable() { public void run() { beeperHandle.cancel(true); beeperHandle2.cancel(true); scheduler. shutdown (); } }, 30, SECONDS); } }](https://image.slidesharecdn.com/javautilconcurrent-110402031632-phpapp02/85/Java-util-concurrent-13-320.jpg)

![CopyOnWriteArrayList public boolean add(E e) { final ReentrantLock lock = this.lock; lock.lock(); try { Object[] elements = getArray(); int len = elements.length; Object[] newElements = Arrays.copyOf(elements, len + 1); newElements[len] = e; setArray(newElements); return true; } finally { lock.unlock(); } } Optimized for case where iteration is much more frequent than insertion or removal Ideal for event listeners A thread-safe variant of ArrayList in which all mutative operations add, set, and so on) are implemented by making a fresh copy of the underlying array.](https://image.slidesharecdn.com/javautilconcurrent-110402031632-phpapp02/85/Java-util-concurrent-27-320.jpg)

![<<interface>> Condition class BoundedBuffer { final Lock lock = new ReentrantLock(); final Condition notFull = lock.newCondition(); final Condition notEmpty = lock.newCondition(); final Object[] items = new Object[100]; int putptr, takeptr, count; public void put(Object x) throws InterruptedException { lock.lock(); try { while (count == items.length) notFull.await(); items[putptr] = x; if (++putptr == items.length) putptr = 0; ++count; notEmpty.signal(); } finally { lock.unlock(); } } public Object take() throws InterruptedException { lock.lock(); try { while (count == 0) notEmpty.await(); Object x = items[takeptr]; if (++takeptr == items.length) takeptr = 0; --count; notFull.signal(); return x; } finally { lock.unlock(); } } } Monitor-like operations for working with Locks. Condition factors out the Object monitor methods wait, notify and notifyAll into distinct objects to give the effect of having multiple wait-sets per object, by combining them with the use of arbitrary Lock implementations. Where a Lock replaces the use of synchronized methods and statements, a Condition replaces the use of the Object monitor methods. Condition 将 Object 监视器方法( wait 、 notify 和 notifyAll )分解成截然不同的对象,以便通过将这些对象与任意 Lock 实现组合使用,为每个对象提供多个等待 set ( wait-set )。其中, Lock 替代了 synchronized 方法和语句的使用, Condition 替代了 Object 监视器方法的使用。](https://image.slidesharecdn.com/javautilconcurrent-110402031632-phpapp02/85/Java-util-concurrent-40-320.jpg)

![Semaphore class Pool { private static final int MAX_AVAILABLE = 100; private final Semaphore available = new Semaphore(MAX_AVAILABLE, true); public Object getItem() throws InterruptedException { available.acquire (); return getNextAvailableItem (); } public void putItem(Object x) { if ( markAsUnused (x)) available.release (); } // Not a particularly efficient data structure; just for demo protected Object[] items = ... whatever kinds of items being managed protected boolean[] used = new boolean[MAX_AVAILABLE]; protected synchronized Object getNextAvailableItem () { for (int i = 0; i < MAX_AVAILABLE; ++i) { if (!used[i]) { used[i] = true; return items[i]; } } return null; // not reached } protected synchronized boolean markAsUnused (Object item) { for (int i = 0; i < MAX_AVAILABLE; ++i) { if (item == items[i]) { if (used[i]) { used[i] = false; return true; } else return false; } } return false; } } 一个计数信号量。从概念上讲,信号量维护了一个许可集。如有必要,在许可可用前会阻塞每一个 acquire() ,然后再获取该许可。每个 release() 添加一个许可,从而可能释放一个正在阻塞的获取者。但是,不使用实际的许可对象, Semaphore 只对可用许可的号码进行计数,并采取相应的行动。 Conceptually, a semaphore maintains a set of permits. Each acquire() blocks if necessary until a permit is available, and then takes it. Each release() adds a permit, potentially releasing a blocking acquirer. However, no actual permit objects are used; the Semaphore just keeps a count of the number available and acts accordingly. Before obtaining an item each thread must acquire a permit from the semaphore, guaranteeing that an item is available for use. When the thread has finished with the item it is returned back to the pool and a permit is returned to the semaphore, allowing another thread to acquire that item.](https://image.slidesharecdn.com/javautilconcurrent-110402031632-phpapp02/85/Java-util-concurrent-42-320.jpg)

![CyclicBarrier thread1 thread2 thread3 CyclicBarrier barrier = new CyclicBarrier(3); await() await() await() class Solver { final int N; final float[][] data; final CyclicBarrier barrier ; class Worker implements Runnable { int myRow; Worker(int row) { myRow = row; } public void run() { while (!done()) { processRow(myRow); try { barrier.await (); } catch (InterruptedException ex) { return; } catch (BrokenBarrierException ex) { return; } } } } public Solver(float[][] matrix) { data = matrix; N = matrix.length; barrier = new CyclicBarrier(N, new Runnable() { public void run() { mergeRows(...); } }); for (int i = 0; i < N; ++i) new Thread(new Worker(i)).start(); waitUntilDone (); } } A synchronization aid that allows a set of threads to all wait for each other to reach a common barrier point. CyclicBarriers are useful in programs involving a fixed sized party of threads that must occasionally wait for each other. The barrier is called cyclic because it can be re-used after the waiting threads are released. int await() Waits until all {@linkplain #getParties parties} have invoked](https://image.slidesharecdn.com/javautilconcurrent-110402031632-phpapp02/85/Java-util-concurrent-43-320.jpg)

![interface CompletionService public class TestCompletionService { public static void main(String[] args) throws InterruptedException, ExecutionException { ExecutorService exec = Executors.newFixedThreadPool(10); CompletionService<String> serv = new ExecutorCompletionService<String>(exec); for (int index = 0; index < 5; index++) { final int NO = index; Callable<String> downImg = new Callable<String>() { public String call() throws Exception { Thread.sleep((long) (Math.random() * 10000)); return "Downloaded Image " + NO; } }; serv.submit(downImg); } Thread.sleep(1000 * 2); System.out.println("Show web content"); for (int index = 0; index < 5; index++) { Future<String> task = serv.take(); String img = task.get(); System.out.println(img); } System.out.println("End"); // 关闭线程池 exec.shutdown(); } } Show web content Downloaded Image 1 Downloaded Image 2 Downloaded Image 4 Downloaded Image 0 Downloaded Image 3 End A service that decouples the production of new asynchronous tasks from the consumption of the results of completed tasks. Producers submit tasks for execution. Consumers take completed tasks and process their results in the order they complete. ExecutorCompletionService, a CompletionService that uses a supplied Executor to execute tasks. This class arranges that submitted tasks are, upon completion, placed on a queue accessible using take. The class is lightweight enough to be suitable for transient use when processing groups of tasks.](https://image.slidesharecdn.com/javautilconcurrent-110402031632-phpapp02/85/Java-util-concurrent-46-320.jpg)
