Journey through the ML model deployment to production @DSC5

- 1. A Journey through the ML Model Deployment to Production, Stanko Kuveljic

- 3. A Journey through the ML Model Deployment to Production HELL

- 5. “Expectations were like fine pottery. The harder you held them, the more likely they were to crack.”, The Way of Kings, Brandon Sanderson
  - 1. ML Deployment
  - 2. Deploy on the edge
  - 3. Database deployment
  - 4. REST API - Flask
  - 5. REST API - Flask + uWSGI
  - 6. TensorFlow Serving
  - 7. Message Queues
  - 8. Tidal Waves
  - 9. Summary

- 7. [Image with two captions: “People with no idea about AI saying it will take over the world” and “My network”]

- 9. “I know the pieces fit 'cause I watched them tumble down”, Schism, Tool
  - [Diagram: an “AI system” placed among the labels Light, Heaven, Abyss, Shadow, Order, Life, Death, Chaos, Reality and Astral, with an “astral rift & ML void”]

- 16. Some Serving Approaches
  - 1. On the edge (device deployment)
  - 2. Database batch inference
  - 3. REST API
  - 4. Streaming

- 17. ON THE EDGE

- 19. Mobile Device Deployment
  - Calls the model on the device
  - Predictions: on the fly
  - Latency: low
  - Constraint: model complexity
  - [Example output: Animal: Cat]
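
Model complexity is the binding constraint on a device. One widely used way to shrink a model for on-device inference (an assumption here; the talk does not say which technique it used) is post-training int8 quantization: each float32 weight is stored as one signed byte plus a shared scale and zero point. The pure-Python sketch below shows the affine scheme on a plain list of weights; `quantize_int8` and `dequantize_int8` are hypothetical helpers, and real toolchains such as the TensorFlow Lite converter apply the same idea per tensor or per channel.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Affine int8 quantization: w ~= (q - zero_point) * scale.

    The float range is widened to include 0.0 so that zero is exactly representable.
    """
    lo = min(min(weights), 0.0)
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / (QMAX - QMIN) if hi > lo else 1.0
    # Integer code that represents 0.0, clamped into the int8 range
    zero_point = max(QMIN, min(QMAX, round(QMIN - lo / scale)))
    q = [max(QMIN, min(QMAX, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Approximately reconstruct the float weights from their int8 codes."""
    return [(v - zero_point) * scale for v in q]


if __name__ == "__main__":
    weights = [-0.52, 0.0, 0.13, 0.98, -1.2]  # hypothetical layer weights
    q, scale, zp = quantize_int8(weights)
    restored = dequantize_int8(q, scale, zp)
    print(q)  # one byte per weight instead of four for float32
    print(max(abs(a - b) for a, b in zip(weights, restored)))  # reconstruction error
```

Storing one byte per weight instead of four cuts weight storage roughly fourfold; the price is a per-weight rounding error on the order of `scale`.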

- 22. Batch Inference
  - Predictions: on demand or scheduled
  - Latency: “less important”
  - Constraint: not real time
  - [Diagram: Scheduler/CLI triggers the App (Preproc → Inference); Raw Data in, Scored Data out]
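
A scheduled batch job of this kind reads raw rows, preprocesses them, scores them in bulk and writes the scores back, with no user waiting on the result. The sketch below uses Python's built-in `sqlite3` as a stand-in database; the table names `raw_data` and `scored_data`, the `preprocess` step and the linear `predict` function are hypothetical placeholders for a real schema and model. A cron job or CLI command would call `run_batch` on a schedule.

```python
import sqlite3
from typing import List, Sequence, Tuple

Row = Tuple[int, float, float]  # (id, f1, f2) as stored in the hypothetical raw_data table


def preprocess(rows: Sequence[Row]) -> List[List[float]]:
    # Hypothetical preprocessing: turn raw columns into scaled feature vectors
    return [[f1 / 100.0, f2 / 100.0] for _, f1, f2 in rows]


def predict(batch: List[List[float]]) -> List[float]:
    # Hypothetical stand-in for the model: one linear score per feature vector
    return [0.7 * x1 + 0.3 * x2 for x1, x2 in batch]


def run_batch(conn: sqlite3.Connection, batch_size: int = 1000) -> int:
    """Score every raw row that has no score yet, one chunk at a time.

    Returns the number of rows scored; meant to be triggered by a scheduler or CLI.
    """
    total = 0
    while True:
        rows = conn.execute(
            "SELECT r.id, r.f1, r.f2 FROM raw_data AS r "
            "LEFT JOIN scored_data AS s ON s.id = r.id "
            "WHERE s.id IS NULL ORDER BY r.id LIMIT ?",
            (batch_size,),
        ).fetchall()
        if not rows:
            return total
        scores = predict(preprocess(rows))
        conn.executemany(
            "INSERT INTO scored_data (id, score) VALUES (?, ?)",
            [(row[0], score) for row, score in zip(rows, scores)],
        )
        conn.commit()  # each chunk is durable even if a later chunk fails
        total += len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_data (id INTEGER PRIMARY KEY, f1 REAL, f2 REAL)")
    conn.execute("CREATE TABLE scored_data (id INTEGER PRIMARY KEY, score REAL)")
    conn.executemany("INSERT INTO raw_data VALUES (?, ?, ?)",
                     [(1, 100.0, 0.0), (2, 0.0, 100.0), (3, 50.0, 50.0)])
    print(run_batch(conn, batch_size=2))  # 3 rows scored in two chunks
```

Because only unscored rows are selected, rerunning the job after a crash picks up where it stopped instead of rescoring everything.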

- 23. REST API

- 25. Flask
  - Web framework, NOT A SERVER
  - Easy for development
  - Single request at a time
  - Can’t scale
  - Not for production
  - [Diagram: Client → Flask, which uses the ML models (GPU), other services and a DB]

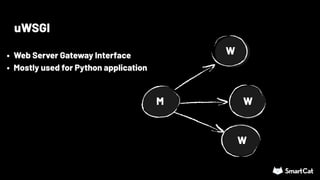

- 26. uWSGI
  - [Diagram: one master (M) process and three worker (W) processes]
  - Application server for WSGI (Web Server Gateway Interface) apps
  - Mostly used for Python applications
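
WSGI is the contract between a server such as uWSGI and a framework such as Flask. The server calls a Python callable with the request `environ` and a `start_response` function, and the callable returns the response body as an iterable of bytes; a Flask app object is such a callable. The sketch below writes a prediction endpoint directly against that contract so the moving parts are visible. The `/predict` route, the `{"features": [...]}` payload and the summing `predict` function are hypothetical.

```python
import json
from wsgiref.simple_server import make_server


def predict(features):
    # Hypothetical model: the sum of the features stands in for real inference
    return sum(features)


def application(environ, start_response):
    """Minimal WSGI prediction endpoint: POST /predict with {"features": [...]}."""
    if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/predict":
        start_response("404 Not Found", [("Content-Type", "application/json")])
        return [b'{"error": "not found"}']
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
    except ValueError:
        length = 0
    payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
    body = json.dumps({"prediction": predict(payload.get("features", []))}).encode()
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]


if __name__ == "__main__":
    # wsgiref's reference server handles one request at a time: local testing only
    with make_server("127.0.0.1", 8000, application) as server:
        server.serve_forever()
```

Under uWSGI the same file could be served by several worker processes, for example with `uwsgi --http :8000 --wsgi-file app.py --master --processes 4` (uWSGI looks for a callable named `application` by default).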

- 27. uWSGI - Forking (copy on write)
  - Master: loads the app
  - Workers (×3): each is a copy from the parent

- 29. uWSGI - Lazy Apps
  - Master: doesn’t load the app
  - Workers (×3): each loads the app itself

- 30. uWSGI - Postfork fix
  - Master: loads the app
  - Workers (×3): each is a copy from the parent, then runs postfork()

- 31. uWSGI - Lazy and Postforked Summary
  - TF requires postfork (or lazy apps)
  - Each process makes a copy of the ML model
  - Each process maintains its own session
  - High memory footprint
  - GPU doesn’t always help

- 35. [Diagram: App 1, App 2 and App 3 use TensorFlow Serving, which holds the models and the GPU]
  - Separated from the apps
  - Multiple models and versions
  - Manages the session with the GPU
  - Allows batch processing
  - Scalable
  - REST/gRPC endpoints
  - Versioning policy
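
With TensorFlow Serving the apps stop holding the model; they send requests to the serving process over REST or gRPC. The sketch below builds a call to TensorFlow Serving's REST predict endpoint (`POST /v1/models/<name>[/versions/<n>]:predict`, port 8501 by default) using only the standard library. The host, the model names `cnn` and `lstm`, and the input shapes are placeholders; putting a version in the URL is how a client targets one specific loaded version.

```python
import json
import urllib.request
from typing import Any, List, Optional


def build_predict_request(host: str, model: str, instances: List[Any],
                          version: Optional[int] = None,
                          port: int = 8501) -> urllib.request.Request:
    """Build a POST request for TensorFlow Serving's REST predict API."""
    path = f"/v1/models/{model}"
    if version is not None:
        path += f"/versions/{version}"  # pin one of several loaded versions
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}{path}:predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(host: str, model: str, instances: List[Any], **kwargs: Any) -> List[Any]:
    """Send the request and return the "predictions" field of the JSON response."""
    req = build_predict_request(host, model, instances, **kwargs)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.loads(resp.read())["predictions"]
```

Sending several `instances` in one request lets the server run them through the model together, which is where the batch-processing bullet pays off on a GPU.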

- 36. [Diagram: Client → Flask + uWSGI (DB client, TF client, other services) → TensorFlow Serving (models) → GPU]

- 37. STREAMING

- 38. Message queues
  - [Diagram: client apps (producers) → Request Queue → ML models & inference (consumer and producer) → Response Queue → client app (consumer)]
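
In the streaming setup the client does not hold an HTTP connection open while the model runs; it drops a request on one queue and picks the answer up from another. The sketch below mimics that flow in-process with `queue.Queue` and a thread standing in for the ML service; a real deployment would keep both queues in a message broker, which the slide does not name. The correlation id, the message fields and the `score` model are hypothetical.

```python
import queue
import threading
import uuid
from typing import Dict, List


def score(features: List[float]) -> float:
    # Hypothetical model: mean of the features
    return sum(features) / len(features)


def inference_worker(requests: queue.Queue, responses: queue.Queue) -> None:
    """Consume the request queue, run inference, produce to the response queue.

    A None message tells the worker to stop.
    """
    while True:
        msg = requests.get()
        if msg is None:
            break
        responses.put({"id": msg["id"], "prediction": score(msg["features"])})


def submit(requests: queue.Queue, features: List[float]) -> str:
    """Client-side producer: enqueue a request and return its correlation id."""
    request_id = str(uuid.uuid4())
    requests.put({"id": request_id, "features": features})
    return request_id


def collect(responses: queue.Queue, n: int, timeout: float = 5.0) -> Dict[str, float]:
    """Client-side consumer: gather n responses keyed by correlation id."""
    results = {}
    for _ in range(n):
        msg = responses.get(timeout=timeout)
        results[msg["id"]] = msg["prediction"]
    return results


if __name__ == "__main__":
    req_q, resp_q = queue.Queue(), queue.Queue()
    worker = threading.Thread(target=inference_worker, args=(req_q, resp_q), daemon=True)
    worker.start()
    ids = [submit(req_q, f) for f in ([1.0, 3.0], [2.0, 2.0, 5.0])]
    print(collect(resp_q, len(ids)))
    req_q.put(None)  # ask the worker to stop
```

Throughput here scales by starting more `inference_worker` consumers on the same request queue, since `queue.Queue` hands each message to exactly one of them; the correlation id lets responses arrive in any order.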

- 40. LEARN TO SWIM Lyrics: Ænima, Tool

- 41. 'CAUSE I'M PRAYING FOR RAIN AND I'M PRAYING FOR TIDAL WAVES Lyrics: Ænima, Tool

- 42. I WANNA SEE IT COME DOWN Lyrics: Ænima, Tool

- 43. BURN IT DOWN Lyrics: Ænima, Tool

- 44. FLASH IT DOWN Lyrics: Ænima, Tool

- 45. WITH LOAD TESTS Lyrics: Ænima, Tool
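
The result tables report throughput, response time under a given load, and a breakpoint load. The talk does not say which load-testing tool produced them, so the sketch below only shows how such numbers can be measured in principle: an open-loop generator starts calls at a fixed rate (the offered RPS), regardless of how fast responses come back, and records achieved throughput and mean response time. `fake_endpoint` is a hypothetical stand-in for an HTTP call to the service under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean
from typing import Callable, Dict


def load_test(call: Callable[[], None], rps: float, duration_s: float,
              max_workers: int = 64) -> Dict[str, float]:
    """Open-loop load test: start `call` at a fixed rate and measure the outcome.

    Returns the offered load, the achieved throughput (completed calls per second
    of wall-clock time) and the mean response time in seconds.
    """
    n_requests = int(rps * duration_s)
    latencies = []  # list.append is safe to share across threads in CPython

    def timed_call(scheduled_at: float) -> None:
        call()
        # Measured from the scheduled send time, so any wait for a free client
        # thread shows up as queueing delay, as it would for a real user
        latencies.append(time.perf_counter() - scheduled_at)

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = []
        for i in range(n_requests):
            scheduled_at = start + i / rps
            delay = scheduled_at - time.perf_counter()
            if delay > 0:
                time.sleep(delay)  # keep the send rate fixed regardless of responses
            futures.append(pool.submit(timed_call, scheduled_at))
        for future in futures:
            future.result()  # re-raise any exception from the target
    elapsed = time.perf_counter() - start
    return {
        "requests": n_requests,
        "offered_rps": rps,
        "throughput_rps": n_requests / elapsed if elapsed > 0 else 0.0,
        "mean_response_s": mean(latencies) if latencies else 0.0,
    }


if __name__ == "__main__":
    def fake_endpoint() -> None:
        time.sleep(0.01)  # hypothetical service that takes 10 ms per request

    print(load_test(fake_endpoint, rps=50, duration_s=1.0))
```

Raising `rps` step by step until response time climbs sharply or throughput stops following the offered load is one way to locate a breakpoint like the ones in the tables.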

- 46. Tested Models

  | Model | Parameters | Notes |
  |---|---|---|
  | Inception | 4M | InceptionV4 |
  | CNN (image) | 0.5M | 6 conv layers |
  | LSTM (text) | 1M | 2 × LSTM (128), 256 unroll |

  | Machine | Spec |
  |---|---|
  | OS | Ubuntu 16 |
  | RAM | 32 GB |
  | GPU | Nvidia 1050 Ti |
  | CPU | i7 |

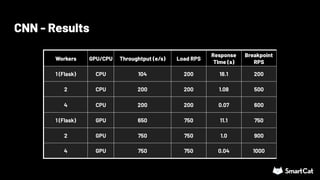

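- 47. CNN - Results

  | Workers | GPU/CPU | Throughput (e/s) | Load (RPS) | Response time (s) | Breakpoint (RPS) |
  |---|---|---|---|---|---|
  | 1 (Flask) | CPU | 104 | 200 | 18.1 | 200 |
  | 2 | CPU | 200 | 200 | 1.08 | 500 |
  | 4 | CPU | 200 | 200 | 0.07 | 600 |
  | 1 (Flask) | GPU | 650 | 750 | 11.1 | 750 |
  | 2 | GPU | 750 | 750 | 1.0 | 900 |
  | 4 | GPU | 750 | 750 | 0.04 | 1000 |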

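- 48. Inception - Results

  | Workers | GPU/CPU | Throughput (e/s) | Load (RPS) | Response time (s) | Breakpoint (RPS) |
  |---|---|---|---|---|---|
  | 1 (Flask) | CPU | 4 | 10 | 32 | 10 |
  | 2 | CPU | 5 | 10 | 18.3 | 10 |
  | 4 | CPU | 3 | 10 | 26.5 | 10 |
  | 1 (Flask) | GPU | 20 | 50 | 30 | 50 |
  | 2 | GPU | 20 | 50 | 21 | 50 |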

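- 50. RNN - Results

  | Workers | GPU/CPU | Throughput (e/s) | Load (RPS) | Response time (s) | Breakpoint (RPS) |
  |---|---|---|---|---|---|
  | 1 (Flask) | CPU | 35 | 100 | 24.6 | 100 |
  | 2 | CPU | 50 | 100 | 19.7 | 100 |
  | 4 | CPU | 60 | 100 | 18.0 | 100 |
  | 1 (Flask) | GPU | 10 | 50 | 36.59 | 50 |
  | 2 | GPU | 10 | 50 | 27.7 | 50 |
  | 4 | GPU | 15 | 50 | 26.5 | 50 |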

- 52. “The purpose of a storyteller is not to tell you how to think, but to give you questions to think upon.”, The Way of Kings, Brandon Sanderson
  - Business?
  - Does accuracy matter?
  - Real time?
  - Retraining?
  - Data size?
  - Algorithm to use?
  - Demo vs. production?

- 53. THANK YOU