Learning to learn unlearned feature for segmentation

- 1. Learning to learn unlearned feature for segmentation • Sungyeob Han • Communication and Machine Learning Lab., Seoul National University

- 2. Introduction • How can a model be transferred with only a few samples? • (Figure: primary cancer and brain metastasis)

- 3. Outline 1. Training segmentation network 2. Meta-learning 3. Active learning 4. Active meta-tune 5. Applications

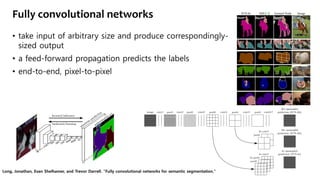

- 5. Fully convolutional networks • take input of arbitrary size and produce correspondingly sized output • predict the labels with a single feed-forward pass • are trained end-to-end, pixel-to-pixel • Long, Jonathan, Evan Shelhamer, and Trevor Darrell. "Fully convolutional networks for semantic segmentation."
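A minimal NumPy sketch of the fully convolutional idea, under toy assumptions: average pooling stands in for the VGG encoder, a 1×1 convolution acts as the per-pixel classifier, and nearest-neighbour upsampling replaces the learned deconvolution used by Long et al. The function names (`conv1x1`, `upsample_nearest`, `toy_fcn`) are hypothetical. Because no layer assumes a fixed input size, the same weights label images of any size divisible by the stride.

```python
import numpy as np

def conv1x1(features, weights, bias):
    # features: (C_in, H, W); weights: (C_out, C_in); bias: (C_out,)
    # a 1x1 convolution is a per-pixel linear map, so it works for any H and W
    return np.einsum("oc,chw->ohw", weights, features) + bias[:, None, None]

def upsample_nearest(x, factor):
    # nearest-neighbour upsampling (FCN learns a deconvolution instead)
    return x.repeat(factor, axis=1).repeat(factor, axis=2)

def toy_fcn(image, weights, bias, stride=4):
    # stand-in "encoder": average-pool by `stride` (a real FCN uses VGG conv layers)
    c, h, w = image.shape
    pooled = image.reshape(c, h // stride, stride, w // stride, stride).mean(axis=(2, 4))
    scores = conv1x1(pooled, weights, bias)      # coarse per-class score maps
    scores = upsample_nearest(scores, stride)    # back to the input resolution
    return scores.argmax(axis=0)                 # per-pixel label map (H, W)

rng = np.random.default_rng(0)
weights, bias = rng.normal(size=(5, 3)), np.zeros(5)   # 3 input channels, 5 classes
for shape in [(3, 32, 48), (3, 64, 16)]:
    print(toy_fcn(rng.normal(size=shape), weights, bias).shape)
```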

- 6. Pyramid Scene Parsing Network • Pyramid parsing module: harvests representations of different sub-regions • Concatenation: upsampling and concatenation layers form the final feature representation • Zhao, Hengshuang, et al. "Pyramid scene parsing network."
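A NumPy sketch of the pyramid pooling module, assuming the bin sizes 1, 2, 3 and 6 from the PSPNet paper, nearest-neighbour instead of bilinear upsampling, and no 1×1 channel-reduction convolution; the helper names are hypothetical. Each level averages the feature map over a coarser grid of sub-regions, is resized back to the input resolution, and is concatenated with the original features.

```python
import numpy as np

def adaptive_avg_pool(x, bins):
    # x: (C, H, W) -> (C, bins, bins); bin i spans rows floor(i*H/bins) .. ceil((i+1)*H/bins)
    c, h, w = x.shape
    out = np.empty((c, bins, bins))
    for i in range(bins):
        h0, h1 = (i * h) // bins, -(-(i + 1) * h // bins)
        for j in range(bins):
            w0, w1 = (j * w) // bins, -(-(j + 1) * w // bins)
            out[:, i, j] = x[:, h0:h1, w0:w1].mean(axis=(1, 2))
    return out

def upsample_to(x, h, w):
    # nearest-neighbour resize to (h, w); PSPNet itself uses bilinear interpolation
    rows = np.arange(h) * x.shape[1] // h
    cols = np.arange(w) * x.shape[2] // w
    return x[:, rows][:, :, cols]

def pyramid_pooling(x, bin_sizes=(1, 2, 3, 6)):
    # concatenate the input features with one upsampled pooled map per pyramid level
    c, h, w = x.shape
    branches = [x]
    for bins in bin_sizes:
        pooled = adaptive_avg_pool(x, bins)   # PSPNet follows this with a 1x1 conv (omitted)
        branches.append(upsample_to(pooled, h, w))
    return np.concatenate(branches, axis=0)

features = np.random.default_rng(0).normal(size=(8, 30, 45))
print(pyramid_pooling(features).shape)   # (40, 30, 45)
```

The bin-size-1 level is simply global average pooling, which is where the module gets its image-level context.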

- 7. Zhao, Hengshuang, et al. "Pyramid scene parsing network."

- 8. Details in training segmentation networks • Fast feed-forward inference (FCN-based) • Given pre-trained encoder parameters (VGGNet), fine-tuning in stages takes 36 hours on a single GPU • Ambiguity: object structure, sparse labels • With a constrained set of categories, the averaged loss gives a blurry gradient signal for each category
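The last bullet can be illustrated under the assumption that the network is trained with the usual pixel-wise softmax cross-entropy averaged over all pixels: a category that covers only a few pixels contributes little to the average, so its gradient signal is diluted. The function names below are hypothetical.

```python
import numpy as np

def pixel_cross_entropy(logits, labels):
    # logits: (K, H, W) class scores; labels: (H, W) integer class map
    z = logits - logits.max(axis=0, keepdims=True)              # numerically stable log-softmax
    log_probs = z - np.log(np.exp(z).sum(axis=0, keepdims=True))
    h, w = labels.shape
    rows, cols = np.arange(h)[:, None], np.arange(w)[None, :]
    return -log_probs[labels, rows, cols]                         # (H, W) per-pixel loss

def averaged_and_per_class(logits, labels):
    loss = pixel_cross_entropy(logits, labels)
    per_class = {int(k): float(loss[labels == k].mean()) for k in np.unique(labels)}
    return float(loss.mean()), per_class

# a confident "background" prediction everywhere, with one pixel of class 1 (e.g. a tiny lesion)
logits = np.zeros((3, 8, 8))
logits[0] = 5.0
labels = np.zeros((8, 8), dtype=int)
labels[0, 0] = 1
mean_loss, per_class = averaged_and_per_class(logits, labels)
print(mean_loss, per_class)
```

Here the averaged loss stays below 0.1 even though the single class-1 pixel alone costs about 5 nats.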

- 9. Outline 1. Training segmentation network 2. Meta-learning 3. Active learning 4. Active meta-tune 5. Applications

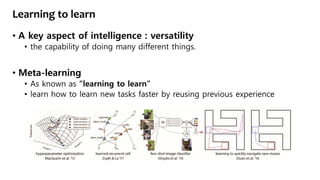

- 10. Learning to learn • A key aspect of intelligence: versatility, the capability of doing many different things • Meta-learning • Also known as "learning to learn" • learns how to learn new tasks faster by reusing previous experience

- 11. Few-shot learning • In 2015, Lake et al. showed how to learn new concepts from one or a few instances of each concept • learning to learn from a few examples • Omniglot • 1623 character classes • 20 examples each • Lake, Brenden M., Ruslan Salakhutdinov, and Joshua B. Tenenbaum. "Human-level concept learning through probabilistic program induction."

- 12. Meta-learner • Ravi, Sachin, and Hugo Larochelle. "Optimization as a model for few-shot learning."

- 13. Meta-learning set-up for few-shot image classification • 1-shot, 5-class classification task • one example from each of 5 classes
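The N-way K-shot episode described above can be sketched in plain Python; `sample_episode` and the toy dataset are hypothetical, and labels are re-indexed 0..N-1 inside each episode, a common convention in few-shot benchmarks.

```python
import random

def sample_episode(dataset, n_way=5, k_shot=1, n_query=1, rng=random):
    # dataset: dict mapping class name -> list of examples (e.g. Omniglot characters)
    classes = rng.sample(sorted(dataset), n_way)              # pick N classes for this task
    support, query = [], []
    for label, cls in enumerate(classes):                    # relabel classes 0..N-1
        examples = rng.sample(dataset[cls], k_shot + n_query)
        support += [(x, label) for x in examples[:k_shot]]   # K "training" shots per class
        query += [(x, label) for x in examples[k_shot:]]     # held-out examples for evaluation
    return support, query

toy = {f"char{c}": [f"char{c}_img{i}" for i in range(20)] for c in range(30)}
support, query = sample_episode(toy, rng=random.Random(0))   # 1-shot, 5-class episode
print(support)
```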

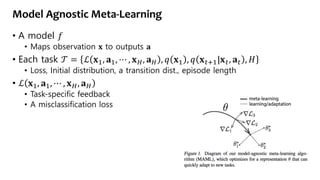

- 14. Model-agnostic meta-learning • Motivation: ambiguity on a new task • From a dynamical systems standpoint: • maximize the sensitivity of the loss functions of new tasks with respect to the parameters

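- 15. Model-Agnostic Meta-Learning • A model $f$ maps observations $\mathbf{x}$ to outputs $\mathbf{a}$ • Each task is $\mathcal{T} = \{\mathcal{L}(\mathbf{x}_1, \mathbf{a}_1, \dots, \mathbf{x}_H, \mathbf{a}_H),\ q(\mathbf{x}_1),\ q(\mathbf{x}_{t+1} \mid \mathbf{x}_t, \mathbf{a}_t),\ H\}$: a loss, an initial distribution, a transition distribution, and an episode length • The loss $\mathcal{L}(\mathbf{x}_1, \mathbf{a}_1, \dots, \mathbf{x}_H, \mathbf{a}_H)$ provides task-specific feedback, e.g. a misclassification loss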

- 16. Algorithm: MAML • K-shot learning • Learn a new task $\mathcal{T}_i$ sampled from $p(\mathcal{T})$ from only $K$ samples drawn from $q_i$ • Feedback $\mathcal{L}_{\mathcal{T}_i}$ is generated by $\mathcal{T}_i$ • Train with the $K$ samples • Test on new samples from $\mathcal{T}_i$ • The model is improved by considering how the test error on new data from $q_i$ changes with respect to the parameters • The test error on sampled tasks $\mathcal{T}_i$ serves as the training error of the meta-learning process
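A sketch of this K-shot setup for the regression experiment, assuming the sinusoid task distribution used in the MAML paper (amplitude in [0.1, 5.0], phase in [0, π], inputs uniform in [-5, 5]); the helper names are hypothetical. The K support samples drive the adaptation step, and a fresh draw from the same task plays the role of the "test" data whose error becomes the meta-training loss.

```python
import math
import random

def sample_sine_task(rng):
    # p(T): assumed ranges from the MAML sinusoid benchmark
    amplitude = rng.uniform(0.1, 5.0)
    phase = rng.uniform(0.0, math.pi)
    return lambda x: amplitude * math.sin(x - phase)

def sample_k_shot(task, k, rng):
    # q_i: K inputs drawn uniformly from [-5, 5], labelled by the task's function
    xs = [rng.uniform(-5.0, 5.0) for _ in range(k)]
    return [(x, task(x)) for x in xs]

rng = random.Random(0)
task = sample_sine_task(rng)              # T_i ~ p(T)
support = sample_k_shot(task, 10, rng)    # K = 10 samples for the inner (adaptation) step
query = sample_k_shot(task, 10, rng)      # new samples from T_i; their error is the meta-loss
```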

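- 17. Algorithm: MAML • A model $f_\theta$ with parameters $\theta$ adapts to a new task $\mathcal{T}_i$ by $\theta \to \theta_i'$: $\theta_i' = \theta - \alpha \nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta)$ • Meta-objective: the model parameters are trained by optimizing the performance of $f_{\theta_i'}$ across tasks: $\min_\theta \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}(f_{\theta_i'}) = \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}\big(f_{\theta - \alpha \nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta)}\big)$ • The meta-optimization across tasks: $\theta \leftarrow \theta - \beta \nabla_\theta \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}(f_{\theta_i'})$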
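To make the meta-gradient concrete, here is a minimal sketch of the update rules above for a deliberately tiny case, assumed purely for illustration: a scalar linear model $f_\theta(x) = \theta x$, mean squared error, and tasks $y = w x$. Because the inner loss is quadratic, the second-order term $d\theta_i'/d\theta = 1 - \alpha \nabla^2_\theta \mathcal{L}_{\mathcal{T}_i}$ can be written exactly, so no autodiff library is needed. All names are hypothetical.

```python
import random

def mse_grad(theta, data):
    # dL/dθ for L(θ) = mean((θ·x - y)^2) with the scalar model f_θ(x) = θ·x
    return sum(2 * x * (theta * x - y) for x, y in data) / len(data)

def mse_hess(data):
    # d²L/dθ² for the same loss (constant in θ because L is quadratic)
    return sum(2 * x * x for x, _ in data) / len(data)

def maml_step(theta, tasks, alpha, beta):
    # tasks: list of (support, query) pairs, each a list of (x, y) samples from one T_i
    meta_grad = 0.0
    for support, query in tasks:
        theta_i = theta - alpha * mse_grad(theta, support)   # inner update: θ'_i
        # chain rule through the inner update: dθ'_i/dθ = 1 - α·∇²L_support
        meta_grad += mse_grad(theta_i, query) * (1 - alpha * mse_hess(support))
    return theta - beta * meta_grad                           # outer (meta) update

def make_task(slope, rng, k=5):
    # hypothetical task family y = slope·x, with K support and K query points
    def draw():
        return [(x, slope * x) for x in (rng.uniform(-1, 1) for _ in range(k))]
    return draw(), draw()

rng = random.Random(0)
theta = 0.0
for _ in range(2000):
    batch = [make_task(rng.uniform(1.0, 3.0), rng) for _ in range(4)]
    theta = maml_step(theta, batch, alpha=0.1, beta=0.01)
print(theta)
```

With task slopes drawn uniformly from [1, 3], every task adapts by roughly the same fraction of its error, so the meta-learned θ settles near the average slope of 2.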

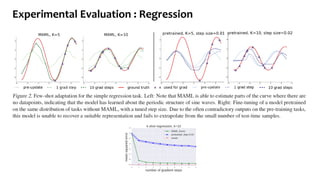

- 18. Experimental Evaluation : Regression

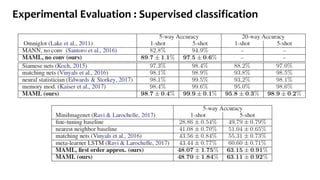

- 19. Experimental Evaluation : Supervised classification

- 20. Experimental Evaluation : RL

- 21. Designing meta-learning • Levine, Sergey, and Chelsea Finn, "Meta-learning frontiers: universal, uncertain, and unsupervised."

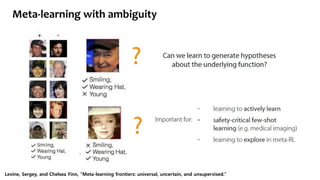

- 22. Meta-learning with ambiguity Levine, Sergey, and Chelsea Finn, “Meta-learning frontiers: universal, uncertain, and unsupervised.”

- 23. Meta-learning with ambiguity Levine, Sergey, and Chelsea Finn, “Meta-learning frontiers: universal, uncertain, and unsupervised.”

- 24. Outline 1. Training segmentation network 2. Meta-learning 3. Active learning 4. Active meta-tune 5. Applications

- 25. Active learning • pool-based active learning • queries are selected from a large pool of unlabeled instances • uncertainty sampling query strategy • select the instance in the pool about which the model is least certain how to label Settles, Burr “Active learning literature survey.”

- 26. Pool-based active learning • A toy example: logistic regression on 400 instances • (b) 30 labeled instances drawn at random: 70% accuracy • (c) 30 instances actively queried with uncertainty sampling: 90% accuracy
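A pure-Python sketch of pool-based uncertainty sampling in the spirit of this toy example, assuming a 1-D pool of 400 points, a threshold oracle at x = 0.7, and logistic regression fitted by plain gradient descent; none of these settings come from the survey, and all names are hypothetical. Each round refits the model and queries the pool point whose predicted probability is closest to 0.5.

```python
import math
import random

def sigmoid(z):
    z = max(-60.0, min(60.0, z))     # clamp to avoid overflow in math.exp
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(data, steps=500, lr=0.5):
    # 1-D logistic regression p(y=1|x) = σ(w·x + b), fitted by batch gradient descent
    w = b = 0.0
    for _ in range(steps):
        gw = gb = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y
            gw += err * x
            gb += err
        w -= lr * gw / len(data)
        b -= lr * gb / len(data)
    return w, b

def least_confident(pool, w, b):
    # uncertainty sampling: the pool point whose predicted probability is closest to 0.5
    return min(pool, key=lambda x: abs(sigmoid(w * x + b) - 0.5))

def active_learning(pool, oracle, seed_inputs, budget):
    labeled = [(x, oracle(x)) for x in seed_inputs]
    pool = [x for x in pool if x not in seed_inputs]
    for _ in range(budget):
        w, b = fit_logistic(labeled)
        x = least_confident(pool, w, b)
        pool.remove(x)
        labeled.append((x, oracle(x)))   # ask the oracle (annotator) for the label
    return fit_logistic(labeled), labeled

def oracle(x):
    return int(x > 0.7)                  # hypothetical ground-truth threshold

rng = random.Random(0)
pool = [rng.uniform(-3, 3) for _ in range(400)]
(w, b), labeled = active_learning(pool, oracle, [min(pool), max(pool)], budget=10)
print(-b / w)                            # learned decision threshold
```

The queries behave much like a bisection search around the true boundary, which is why a handful of actively chosen labels can beat a larger random sample.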

- 27. Uncertainty sampling • uncertainty measures Mussmann, Stephen, and Percy Liang. "On the Relationship between Data Efficiency and Error for Uncertainty Sampling.”
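Three uncertainty measures commonly listed for uncertainty sampling (least confidence, margin, and entropy, as surveyed by Settles) can be written in a few lines; `most_uncertain` is a hypothetical helper that applies one of them to a pool of predicted class distributions.

```python
import math

def least_confidence(probs):
    return 1.0 - max(probs)                 # high when the top class is not confident

def margin(probs):
    top1, top2 = sorted(probs, reverse=True)[:2]
    return top1 - top2                      # small when the two best classes are close

def entropy(probs):
    return -sum(p * math.log(p) for p in probs if p > 0)

def most_uncertain(pool_probs, measure="entropy"):
    # index of the pool instance to query next
    indices = range(len(pool_probs))
    if measure == "margin":
        return min(indices, key=lambda i: margin(pool_probs[i]))
    score = least_confidence if measure == "least_confidence" else entropy
    return max(indices, key=lambda i: score(pool_probs[i]))

pool = [[0.9, 0.05, 0.05], [0.4, 0.35, 0.25], [0.6, 0.3, 0.1]]
print([most_uncertain(pool, m) for m in ("least_confidence", "margin", "entropy")])  # [1, 1, 1]
```

The measures agree on this pool but can rank instances differently when there are many classes, since margin looks only at the top two probabilities while entropy uses the whole distribution.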

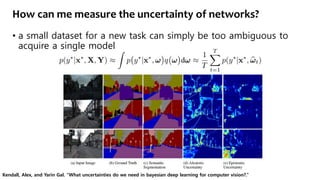

- 28. How can we measure the uncertainty of networks? • A small dataset for a new task can simply be too ambiguous to determine a single model • Kendall, Alex, and Yarin Gal. "What uncertainties do we need in Bayesian deep learning for computer vision?"
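One common way to approximate the uncertainty of a network, used in the Bayesian deep learning line of work that Kendall and Gal build on, is to run several stochastic forward passes (for example with dropout left on at test time) and compare their softmax outputs. The sketch below assumes those T probability vectors are already available and splits the predictive entropy into an expected-entropy (aleatoric) term and a mutual-information (epistemic) term; this decomposition is a standard choice, not necessarily the one used in these slides.

```python
import numpy as np

def mc_uncertainty(prob_samples, eps=1e-12):
    # prob_samples: (T, K) softmax outputs from T stochastic forward passes
    p = np.asarray(prob_samples, dtype=float)
    mean = p.mean(axis=0)                                              # predictive distribution
    predictive_entropy = -np.sum(mean * np.log(mean + eps))            # total uncertainty
    expected_entropy = -np.mean(np.sum(p * np.log(p + eps), axis=1))   # aleatoric part
    mutual_info = predictive_entropy - expected_entropy                # epistemic part
    return float(predictive_entropy), float(expected_entropy), float(mutual_info)

agree = [[0.5, 0.5]] * 3             # passes agree: the ambiguity is in the data
disagree = [[1.0, 0.0], [0.0, 1.0]]  # passes disagree: the ambiguity is in the model
print(mc_uncertainty(agree), mc_uncertainty(disagree))
```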

- 29. Outline 1. Training segmentation network 2. Meta-learning 3. Active learning 4. Active meta-tune 5. Applications

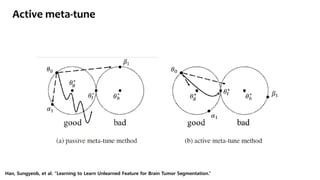

- 31. Generate training tasks • good tasks $T_{good}$ and bad tasks $T_{bad}$ • Han, Sungyeob, et al. "Learning to Learn Unlearned Feature for Brain Tumor Segmentation."

- 32. Active meta-tune Han, Sungyeob, et al. “Learning to Learn Unlearned Feature for Brain Tumor Segmentation.”

- 33. Active meta-tune Han, Sungyeob, et al. “Learning to Learn Unlearned Feature for Brain Tumor Segmentation.”

- 34. Active meta-tune • $\alpha_T$, $\beta_T$ • Han, Sungyeob, et al. "Learning to Learn Unlearned Feature for Brain Tumor Segmentation."

- 35. Outline 1. Training segmentation network 2. Meta-learning 3. Active learning 4. Active meta-tune 5. Applications

- 36. Brain tumor segmentation • (Figure: FLAIR, ground truth, proposed model, baseline; network of convolution and deconvolution layers) • 3D FCN on high-grade glioma (BRATS)

| Mean Dice score (std) | Whole | Active | Core |
|---|---|---|---|
| Baseline | 0.72 (0.18) | 0.54 (0.25) | 0.44 (0.24) |
| Proposed | 0.77 (0.13) | 0.57 (0.23) | 0.51 (0.25) |
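The Dice similarity coefficient reported in these tables, $2|P \cap G| / (|P| + |G|)$, can be computed per case and then summarized as mean (std), as sketched below. The region definitions assume the BRATS label convention 1 = necrotic/non-enhancing core, 2 = edema, 4 = enhancing tumor used in recent BRATS releases; older releases differ, so treat the mapping and the helper names as assumptions.

```python
import numpy as np

def dice(pred, target, eps=1e-8):
    # Dice = 2|P ∩ G| / (|P| + |G|) on binary masks; eps makes two empty masks score 1
    pred, target = np.asarray(pred, dtype=bool), np.asarray(target, dtype=bool)
    inter = np.logical_and(pred, target).sum()
    return float((2.0 * inter + eps) / (pred.sum() + target.sum() + eps))

# assumed BRATS-style labels: 1 = necrotic/non-enhancing core, 2 = edema, 4 = enhancing tumor
REGIONS = {"whole": [1, 2, 4], "core": [1, 4], "active": [4]}

def region_dice(pred_labels, gt_labels):
    # one Dice score per tumor region for a single case
    return {name: dice(np.isin(pred_labels, labels), np.isin(gt_labels, labels))
            for name, labels in REGIONS.items()}

def summarize(cases):
    # cases: list of per-case dicts from region_dice -> {region: (mean, std)}
    return {name: (float(np.mean([c[name] for c in cases])),
                   float(np.std([c[name] for c in cases])))
            for name in REGIONS}

gt = np.array([[0, 2, 1, 4]])
pred = np.array([[0, 2, 4, 4]])
print(region_dice(pred, gt))
```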

- 37. Types of brain tumor: size and location • Brain tumor treatment options depend on the type of brain tumor, as well as its size and location • Types • Acoustic neuroma, Astrocytoma, Brain metastases • Choroid plexus carcinoma, Craniopharyngioma • Embryonal tumors, Ependymoma, Glioblastoma • Glioma, Medulloblastoma, Meningioma • Oligodendroglioma, Pediatric brain tumors • Pineoblastoma, Pituitary tumors • (Figure: early stage of brain metastasis)

- 38. Motivation for brain metastasis segmentation • The target features differ from those of glioma • Too many small tumors: automation is needed (×40 slices for each of 4 sequences)

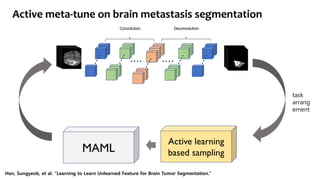

- 39. Active meta-tune on brain metastasis segmentation • (Figure: convolution/deconvolution network; active-learning-based sampling; MAML task arrangement) • Han, Sungyeob, et al. "Learning to Learn Unlearned Feature for Brain Tumor Segmentation."

- 40. Experimental Results – pretrained

- 41. Experimental Results – 1 step update

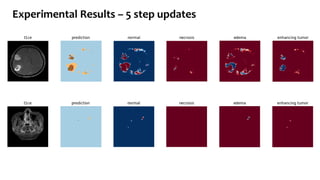

- 42. Experimental Results – 5 step updates

- 43. Experimental Results • DSC results for enhancing tumor (figure panels: results on training dataset; results on validation dataset)

| Method | Dice score (mean ± std) |
|---|---|
| Baseline | 0.66 (on BRATS dataset) |
| Naive | 0.33 ± 0.3413 |
| Passive | 0.41 ± 0.2752 |
| Active | 0.45 ± 0.2317 |

- 44. Conclusion • A transfer learning method from high-grade glioma to brain metastasis • Learns unlearned features without forgetting the original brain tumor segmentation task • Demonstrates a generalization effect on target-domain segmentation (brain metastasis)

- 45. Questions?

References

Segmentation
- Long, Jonathan, Evan Shelhamer, and Trevor Darrell. "Fully convolutional networks for semantic segmentation."
- Zhao, Hengshuang, et al. "Pyramid scene parsing network."
- Kendall, Alex, and Yarin Gal. "What uncertainties do we need in Bayesian deep learning for computer vision?"

Meta-learning
- Finn, Chelsea, Pieter Abbeel, and Sergey Levine. "Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks."
- Finn, Chelsea, Kelvin Xu, and Sergey Levine. "Probabilistic Model-Agnostic Meta-Learning."
- Ravi, Sachin, and Hugo Larochelle. "Optimization as a model for few-shot learning."
- Lake, Brenden M., Ruslan Salakhutdinov, and Joshua B. Tenenbaum. "Human-level concept learning through probabilistic program induction."

Active learning
- Settles, Burr. "Active learning literature survey."
- Mussmann, Stephen, and Percy S. Liang. "Uncertainty Sampling is Preconditioned Stochastic Gradient Descent on Zero-One Loss."
- Mussmann, Stephen, and Percy Liang. "On the Relationship between Data Efficiency and Error for Uncertainty Sampling."

Applications
- Menze, Bjoern H., et al. "The multimodal brain tumor image segmentation benchmark (BRATS)."
- Han, Sungyeob, et al. "Learning to Learn Unlearned Feature for Brain Tumor Segmentation."