Lecture 10: Data-Intensive Computing for Text Analysis (Fall 2011)

- 1. Data-Intensive Computing for Text Analysis. CS395T / INF385T / LIN386M, University of Texas at Austin, Fall 2011. Lecture 10, October 27, 2011. Jason Baldridge (Department of Linguistics, University of Texas at Austin; jasonbaldridge at gmail dot com) and Matt Lease (School of Information, University of Texas at Austin; ml at ischool dot utexas dot edu)

- 2. Acknowledgments: Course design and slides are based on Jimmy Lin’s cloud computing courses at the University of Maryland, College Park. Some figures and examples are courtesy of the following great Hadoop book (order yours today!): • Tom White’s Hadoop: The Definitive Guide, 2nd Edition (2010)

- 3. Today’s Agenda • Machine Translation (wrap-up) • Apache Pig • Language Modeling. Only Pig is included in this slide deck; slides for the other topics will be posted separately on the course site.

- 4. Apache Pig

- 6. grunt> DUMP A; (Joe,cherry,2) (Ali,apple,3) (Joe,banana,2) (Eve,apple,7) grunt> B = FOREACH A GENERATE $0, $2+1, 'Constant'; grunt> DUMP B; (Joe,3,Constant) (Ali,4,Constant) (Joe,3,Constant) (Eve,8,Constant)

- 7. grunt> DUMP A; (2,Tie) (4,Coat) (3,Hat) (1,Scarf) grunt> DUMP B; (Joe,2) (Hank,4) (Ali,0) (Eve,3) (Hank,2)

- 8. grunt> DUMP A; (2,Tie) (4,Coat) (3,Hat) (1,Scarf) grunt> DUMP B; (Joe,2) (Hank,4) (Ali,0) (Eve,3) (Hank,2) grunt> C = JOIN A BY $0, B BY $1; grunt> DUMP C; (2,Tie,Joe,2) (2,Tie,Hank,2) (3,Hat,Eve,3) (4,Coat,Hank,4)

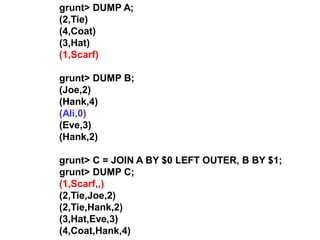
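To make the inner-join semantics concrete, here is a minimal in-memory Java sketch of `C = JOIN A BY $0, B BY $1;`. It is illustrative only: tuples are modeled as `List<Object>` (an assumption of this example, not Pig's `Tuple` API), B is indexed by its join field, and each A tuple probes that index. Pig itself compiles JOIN into MapReduce jobs and does not guarantee output order.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustrative in-memory sketch of: C = JOIN A BY $0, B BY $1;
// Tuples are modeled as List<Object>; this is not Pig's Tuple API.
final class InnerJoinSketch {
    static final List<List<Object>> A = List.of(
            List.of(2, "Tie"), List.of(4, "Coat"), List.of(3, "Hat"), List.of(1, "Scarf"));
    static final List<List<Object>> B = List.of(
            List.of("Joe", 2), List.of("Hank", 4), List.of("Ali", 0),
            List.of("Eve", 3), List.of("Hank", 2));

    static List<List<Object>> join(List<List<Object>> a, int aKey,
                                   List<List<Object>> b, int bKey) {
        // Build a hash index over B's join field.
        Map<Object, List<List<Object>>> index = new HashMap<>();
        for (List<Object> t : b) {
            index.computeIfAbsent(t.get(bKey), k -> new ArrayList<>()).add(t);
        }
        // Probe with each A tuple; emit A's fields followed by B's fields per match.
        List<List<Object>> out = new ArrayList<>();
        for (List<Object> left : a) {
            for (List<Object> right : index.getOrDefault(left.get(aKey), List.of())) {
                List<Object> joined = new ArrayList<>(left);
                joined.addAll(right);
                out.add(joined);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        join(A, 0, B, 1).forEach(System.out::println);
    }
}
```

Running `main` prints the four joined tuples from the slide, possibly in a different order; (1,Scarf) and (Ali,0) drop out because neither has a matching key on the other side.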

- 9. grunt> DUMP A; (2,Tie) (4,Coat) (3,Hat) (1,Scarf) grunt> DUMP B; (Joe,2) (Hank,4) (Ali,0) (Eve,3) (Hank,2) grunt> C = JOIN A BY $0 LEFT OUTER, B BY $1; grunt> DUMP C; (1,Scarf,,) (2,Tie,Joe,2) (2,Tie,Hank,2) (3,Hat,Eve,3) (4,Coat,Hank,4)

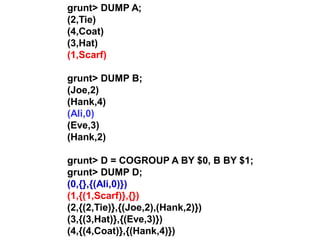
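The left outer join differs from the inner join only in what happens to an A tuple with no partner. The Java sketch below again models tuples as `List<Object>` (an illustrative assumption, not Pig's API) and takes a hypothetical `bWidth` parameter, the number of fields in a B tuple, so it can keep unmatched A tuples and pad the B side with nulls.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustrative in-memory sketch of: C = JOIN A BY $0 LEFT OUTER, B BY $1;
// Tuples are modeled as List<Object>; this is not Pig's Tuple API.
final class LeftOuterJoinSketch {
    static final List<List<Object>> A = List.of(
            List.of(2, "Tie"), List.of(4, "Coat"), List.of(3, "Hat"), List.of(1, "Scarf"));
    static final List<List<Object>> B = List.of(
            List.of("Joe", 2), List.of("Hank", 4), List.of("Ali", 0),
            List.of("Eve", 3), List.of("Hank", 2));

    // bWidth (hypothetical parameter) is the number of fields in a B tuple, used for padding.
    static List<List<Object>> leftOuterJoin(List<List<Object>> a, int aKey,
                                            List<List<Object>> b, int bKey, int bWidth) {
        Map<Object, List<List<Object>>> index = new HashMap<>();
        for (List<Object> t : b) {
            index.computeIfAbsent(t.get(bKey), k -> new ArrayList<>()).add(t);
        }
        List<List<Object>> out = new ArrayList<>();
        for (List<Object> left : a) {
            List<List<Object>> matches = index.get(left.get(aKey));
            if (matches == null) {
                // No partner in B: keep the A tuple and pad B's fields with nulls,
                // which Pig prints as empty fields, e.g. (1,Scarf,,).
                List<Object> padded = new ArrayList<>(left);
                padded.addAll(Collections.nCopies(bWidth, null));
                out.add(padded);
                continue;
            }
            for (List<Object> right : matches) {
                List<Object> joined = new ArrayList<>(left);
                joined.addAll(right);
                out.add(joined);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        leftOuterJoin(A, 0, B, 1, 2).forEach(System.out::println);
    }
}
```

The result has five tuples: the four inner-join matches plus (1,Scarf) padded with two nulls. (Ali,0) is still dropped, since only the left side is preserved.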

- 10. grunt> DUMP A; (2,Tie) (4,Coat) (3,Hat) (1,Scarf) grunt> DUMP B; (Joe,2) (Hank,4) (Ali,0) (Eve,3) (Hank,2) grunt> D = COGROUP A BY $0, B BY $1; grunt> DUMP D; (0,{},{(Ali,0)}) (1,{(1,Scarf)},{}) (2,{(2,Tie)},{(Joe,2),(Hank,2)}) (3,{(3,Hat)},{(Eve,3)}) (4,{(4,Coat)},{(Hank,4)})

- 11. grunt> DUMP A; (2,Tie) (4,Coat) (3,Hat) (1,Scarf) grunt> DUMP B; (Joe,2) (Hank,4) (Ali,0) (Eve,3) (Hank,2) grunt> E = COGROUP A BY $0 INNER, B BY $1; grunt> DUMP E; (1,{(1,Scarf)},{}) (2,{(2,Tie)},{(Joe,2),(Hank,2)}) (3,{(3,Hat)},{(Eve,3)}) (4,{(4,Coat)},{(Hank,4)})
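COGROUP does not build combined tuples; it emits one tuple per key, holding a bag of the matching tuples from each input. This illustrative Java sketch models each output tuple as `List.of(group, bagOfA, bagOfB)` (an assumption, not Pig's data-model classes) and uses a hypothetical `innerA` flag for the INNER keyword on A. Keys are sorted only to make the printout easy to compare with the slide.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

// Illustrative in-memory sketch of:
//   D = COGROUP A BY $0, B BY $1;        (innerA = false)
//   E = COGROUP A BY $0 INNER, B BY $1;  (innerA = true)
// Each output tuple is modeled as List.of(group, bagOfA, bagOfB).
final class CogroupSketch {
    static final List<List<Object>> A = List.of(
            List.of(2, "Tie"), List.of(4, "Coat"), List.of(3, "Hat"), List.of(1, "Scarf"));
    static final List<List<Object>> B = List.of(
            List.of("Joe", 2), List.of("Hank", 4), List.of("Ali", 0),
            List.of("Eve", 3), List.of("Hank", 2));

    static List<List<Object>> cogroup(List<List<Object>> a, int aKey,
                                      List<List<Object>> b, int bKey, boolean innerA) {
        Map<Object, List<List<Object>>> bagsA = new HashMap<>();
        Map<Object, List<List<Object>>> bagsB = new HashMap<>();
        for (List<Object> t : a) {
            bagsA.computeIfAbsent(t.get(aKey), k -> new ArrayList<>()).add(t);
        }
        for (List<Object> t : b) {
            bagsB.computeIfAbsent(t.get(bKey), k -> new ArrayList<>()).add(t);
        }
        // Every key seen in either input gets a group; TreeSet sorts the keys
        // (assumes they are mutually comparable, as the ints here are).
        TreeSet<Object> keys = new TreeSet<>(bagsA.keySet());
        keys.addAll(bagsB.keySet());
        List<List<Object>> out = new ArrayList<>();
        for (Object key : keys) {
            List<List<Object>> bagA = bagsA.getOrDefault(key, List.of());
            List<List<Object>> bagB = bagsB.getOrDefault(key, List.of());
            // INNER on A drops groups whose A bag is empty (key 0 on the slide).
            if (innerA && bagA.isEmpty()) {
                continue;
            }
            out.add(List.of(key, bagA, bagB));
        }
        return out;
    }

    public static void main(String[] args) {
        cogroup(A, 0, B, 1, false).forEach(System.out::println);
        cogroup(A, 0, B, 1, true).forEach(System.out::println);
    }
}
```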

- 12. grunt> E = COGROUP A BY $0 INNER, B BY $1; grunt> DUMP E; (1,{(1,Scarf)},{}) (2,{(2,Tie)},{(Joe,2),(Hank,2)}) (3,{(3,Hat)},{(Eve,3)}) (4,{(4,Coat)},{(Hank,4)}) grunt> F = FOREACH E GENERATE FLATTEN(A), B.$0; grunt> DUMP F; (1,Scarf,{}) (2,Tie,{(Joe),(Hank)}) (3,Hat,{(Eve)}) (4,Coat,{(Hank)})

- 13. grunt> DUMP A; (2,Tie) (4,Coat) (3,Hat) (1,Scarf) grunt> DUMP B; (Joe,2) (Hank,4) (Ali,0) (Eve,3) (Hank,2) grunt> I = CROSS A, B; grunt> DUMP I; (2,Tie,Joe,2) (2,Tie,Hank,4) (2,Tie,Ali,0) (2,Tie,Eve,3) (2,Tie,Hank,2) (4,Coat,Joe,2) (4,Coat,Hank,4) (4,Coat,Ali,0) (4,Coat,Eve,3) (4,Coat,Hank,2) (3,Hat,Joe,2) (3,Hat,Hank,4) (3,Hat,Ali,0) (3,Hat,Eve,3) (3,Hat,Hank,2) (1,Scarf,Joe,2) (1,Scarf,Hank,4) (1,Scarf,Ali,0) (1,Scarf,Eve,3) (1,Scarf,Hank,2)
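CROSS pairs every tuple of A with every tuple of B, so its output size is the product of the input sizes (here 4 × 5 = 20), which is why it gets expensive quickly on large data. A minimal Java sketch, assuming tuples are modeled as `List<Object>` for illustration:

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative in-memory sketch of: I = CROSS A, B;
// Tuples are modeled as List<Object>; this is not Pig's Tuple API.
final class CrossSketch {
    static final List<List<Object>> A = List.of(
            List.of(2, "Tie"), List.of(4, "Coat"), List.of(3, "Hat"), List.of(1, "Scarf"));
    static final List<List<Object>> B = List.of(
            List.of("Joe", 2), List.of("Hank", 4), List.of("Ali", 0),
            List.of("Eve", 3), List.of("Hank", 2));

    static List<List<Object>> cross(List<List<Object>> a, List<List<Object>> b) {
        // Every A tuple is paired with every B tuple: |A| * |B| outputs.
        List<List<Object>> out = new ArrayList<>(a.size() * b.size());
        for (List<Object> left : a) {
            for (List<Object> right : b) {
                List<Object> pair = new ArrayList<>(left);
                pair.addAll(right);
                out.add(pair);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<List<Object>> i = cross(A, B);
        System.out.println(i.size() + " tuples"); // prints "20 tuples"
        i.forEach(System.out::println);
    }
}
```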

- 14. grunt> DUMP A; (Joe,cherry) (Ali,apple) (Joe,banana) (Eve,apple) grunt> B = GROUP A BY $0; grunt> DUMP B; (Joe,{(Joe,cherry),(Joe,banana)}) (Ali,{(Ali,apple)}) (Eve,{(Eve,apple)}) grunt> C = GROUP A BY $1; grunt> DUMP C; (cherry,{(Joe,cherry)}) (apple,{(Ali,apple),(Eve,apple)}) (banana,{(Joe,banana)})

- 15. grunt> DUMP A; (Joe,cherry) (Ali,apple) (Joe,banana) (Eve,apple) -- group by the number of characters in the second field: grunt> B = GROUP A BY SIZE($1); grunt> DUMP B; (5L,{(Ali,apple),(Eve,apple)}) (6L,{(Joe,cherry),(Joe,banana)}) grunt> C = GROUP A ALL; grunt> DUMP C; (all,{(Joe,cherry),(Ali,apple),(Joe,banana),(Eve,apple)}) grunt> D = GROUP A ANY; -- groups tuples randomly (random sampling)
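GROUP works with any key expression, not only a field reference. In this illustrative Java sketch the key is a `Function` over a tuple (a stand-in for a Pig expression such as `SIZE($1)`; tuples are modeled as `List<Object>`), and a constant key reproduces GROUP ALL. Pig's SIZE returns a long, hence the `5L`/`6L` keys on the slide, so the sketch produces `Long` keys as well.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Illustrative in-memory sketch of GROUP with an arbitrary key expression.
// Tuples are modeled as List<Object>; the key function stands in for a Pig expression.
final class GroupSketch {
    static final List<List<Object>> A = List.of(
            List.of("Joe", "cherry"), List.of("Ali", "apple"),
            List.of("Joe", "banana"), List.of("Eve", "apple"));

    // One (group key -> bag) entry per distinct key, in first-seen order.
    static Map<Object, List<List<Object>>> groupBy(List<List<Object>> rel,
                                                   Function<List<Object>, Object> keyFn) {
        Map<Object, List<List<Object>>> groups = new LinkedHashMap<>();
        for (List<Object> t : rel) {
            groups.computeIfAbsent(keyFn.apply(t), k -> new ArrayList<>()).add(t);
        }
        return groups;
    }

    public static void main(String[] args) {
        // B = GROUP A BY SIZE($1): character count of field 1, as a long like Pig's SIZE.
        System.out.println(groupBy(A, t -> (long) ((String) t.get(1)).length()));
        // C = GROUP A ALL: a constant key puts every tuple into one group.
        System.out.println(groupBy(A, t -> "all"));
    }
}
```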

- 16. grunt> DUMP A; (Joe,cherry,2) (Ali,apple,3) (Joe,banana,2) (Eve,apple,7) -- Streaming as in Hadoop via stdin and stdout grunt> C = STREAM A THROUGH `cut -f 2`; grunt> DUMP C; (cherry) (apple) (banana) (apple) grunt> D = DISTINCT C; grunt> DUMP D; (cherry) (apple) (banana)

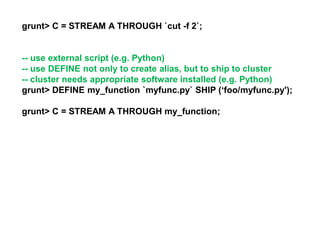

- 17. grunt> C = STREAM A THROUGH `cut -f 2`; -- use an external script (e.g. Python) -- DEFINE not only creates an alias but also ships the script to the cluster -- cluster nodes need the appropriate software installed (e.g. Python) grunt> DEFINE my_function `myfunc.py` SHIP ('foo/myfunc.py'); grunt> C = STREAM A THROUGH my_function;

- 18. grunt> DUMP A; (2,3) (1,2) (2,4) grunt> B = ORDER A BY $0, $1 DESC; grunt> DUMP B; (1,2) (2,4) (2,3) grunt> C = FOREACH B GENERATE *; -- ordering not preserved! grunt> D = LIMIT B 2; -- order preserved grunt> DUMP D; (1,2) (2,4)

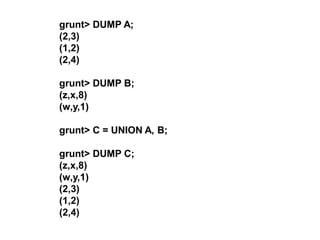
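The two-key sort order (first field ascending, second field descending) can be written as a chained comparator. This in-memory Java sketch is illustrative only: tuples are modeled as `List<Integer>`, and Pig runs ORDER as MapReduce jobs rather than as a local sort. Note one difference: this Java list keeps its order through later steps, whereas a Pig relation derived from B by FOREACH carries no ordering guarantee.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Illustrative in-memory sketch of:
//   B = ORDER A BY $0, $1 DESC;
//   D = LIMIT B 2;
final class OrderLimitSketch {
    static final List<List<Integer>> A = List.of(List.of(2, 3), List.of(1, 2), List.of(2, 4));

    // Ascending on field 0; ties broken by field 1 descending.
    static final Comparator<List<Integer>> F0_ASC_F1_DESC =
            Comparator.<List<Integer>>comparingInt(t -> t.get(0))
                    .thenComparing(Comparator.<List<Integer>>comparingInt(t -> t.get(1)).reversed());

    static List<List<Integer>> order(List<List<Integer>> rel) {
        List<List<Integer>> sorted = new ArrayList<>(rel);
        sorted.sort(F0_ASC_F1_DESC);
        return sorted;
    }

    // LIMIT applied to a sorted relation keeps its first n tuples.
    static List<List<Integer>> limit(List<List<Integer>> rel, int n) {
        return new ArrayList<>(rel.subList(0, Math.min(n, rel.size())));
    }

    public static void main(String[] args) {
        List<List<Integer>> b = order(A);
        System.out.println(b);           // [[1, 2], [2, 4], [2, 3]]
        System.out.println(limit(b, 2)); // [[1, 2], [2, 4]]
    }
}
```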

- 19. grunt> DUMP A; (2,3) (1,2) (2,4) grunt> DUMP B; (z,x,8) (w,y,1) grunt> C = UNION A, B; grunt> DUMP C; (z,x,8) (w,y,1) (2,3) (1,2) (2,4)

- 20. grunt> C = UNION A, B; grunt> DUMP C; (z,x,8) (w,y,1) (2,3) (1,2) (2,4) grunt> DESCRIBE A; A: {f0: int,f1: int} grunt> DESCRIBE B; B: {f0: chararray,f1: chararray,f2: int} grunt> DESCRIBE C; Schema for C unknown.

- 21. grunt> records = LOAD 'foo.txt' >> AS (year:chararray, temperature:int, quality:int); grunt> DUMP records; (1950,0,1) (1950,22,1) (1950,-11,1) (1949,111,1) (1949,78,1) grunt> DESCRIBE records; records: {year: chararray, temperature: int, quality: int}

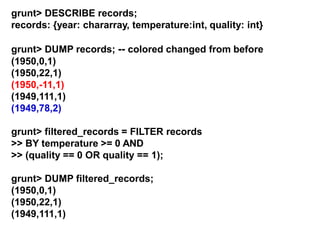

- 22. grunt> DESCRIBE records; records: {year: chararray, temperature: int, quality: int} grunt> DUMP records; -- highlighted value changed from before (the last record's quality is now 2) (1950,0,1) (1950,22,1) (1950,-11,1) (1949,111,1) (1949,78,2) grunt> filtered_records = FILTER records >> BY temperature >= 0 AND >> (quality == 0 OR quality == 1); grunt> DUMP filtered_records; (1950,0,1) (1950,22,1) (1949,111,1)

- 23. grunt> records = LOAD 'foo.txt' >> AS (year:chararray, temperature:int, quality:int); grunt> DUMP records; (1950,0,1) (1950,22,1) (1950,-11,1) (1949,111,1) (1949,78,1) grunt> grouped = GROUP records BY year; grunt> DUMP grouped; (1949,{(1949,111,1),(1949,78,1)}) (1950,{(1950,0,1),(1950,22,1),(1950,-11,1)}) grunt> DESCRIBE grouped; grouped: {group: chararray, records: {year: chararray, temperature: int, quality: int}}

- 24. grunt> DUMP grouped; (1949,{(1949,111,1),(1949,78,1)}) (1950,{(1950,0,1),(1950,22,1),(1950,-11,1)}) grunt> max_temp = FOREACH grouped GENERATE group, >> MAX(records.temperature); grunt> DUMP max_temp; (1949,111) (1950,22) -- let’s put it all together

- 25. records = LOAD 'input/ncdc/micro-tab/sample.txt' AS (year:chararray, temperature:int, quality:int); filtered_records = FILTER records BY temperature != 9999 AND (quality == 0 OR quality == 1 OR quality == 4 OR quality == 5 OR quality == 9); grouped_records = GROUP filtered_records BY year; max_temp = FOREACH grouped_records GENERATE group, MAX(filtered_records.temperature); DUMP max_temp;
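For comparison, the same max-temperature computation can be written as a single-machine Java loop, with each Pig step marked in a comment. The sketch assumes well-formed, tab-separated lines (tab is the default delimiter of Pig's PigStorage loader); unlike Pig, which loads an unparsable field as null, it would throw on malformed input. The class name is illustrative.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Illustrative single-machine sketch of the max-temperature Pig script.
// Assumes well-formed, tab-separated lines: year, temperature, quality.
final class MaxTempSketch {
    static final Set<Integer> GOOD_QUALITY = Set.of(0, 1, 4, 5, 9);

    static Map<String, Integer> maxTemp(List<String> lines) {
        Map<String, Integer> max = new TreeMap<>(); // one entry per year (GROUP BY year)
        for (String line : lines) {
            // LOAD ... AS (year:chararray, temperature:int, quality:int)
            String[] f = line.split("\t");
            String year = f[0];
            int temperature = Integer.parseInt(f[1]);
            int quality = Integer.parseInt(f[2]);
            // FILTER: drop the 9999 missing-value sentinel and bad quality codes.
            if (temperature == 9999 || !GOOD_QUALITY.contains(quality)) {
                continue;
            }
            // MAX(filtered_records.temperature), folded in one value at a time.
            max.merge(year, temperature, Integer::max);
        }
        return max;
    }

    public static void main(String[] args) {
        List<String> sample = List.of("1950\t0\t1", "1950\t22\t1", "1950\t-11\t1",
                "1949\t111\t1", "1949\t78\t1", "1949\t9999\t1");
        System.out.println(maxTemp(sample)); // {1949=111, 1950=22}
    }
}
```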

- 26. grunt> ILLUSTRATE max_temp;

| records | year: bytearray | temperature: bytearray | quality: bytearray |
|---|---|---|---|
| | 1949 | 9999 | 1 |
| | 1949 | 111 | 1 |
| | 1949 | 78 | 1 |

| records | year: chararray | temperature: int | quality: int |
|---|---|---|---|
| | 1949 | 9999 | 1 |
| | 1949 | 111 | 1 |
| | 1949 | 78 | 1 |

| filtered_records | year: chararray | temperature: int | quality: int |
|---|---|---|---|
| | 1949 | 111 | 1 |
| | 1949 | 78 | 1 |

| grouped_records | group: chararray | filtered_records: bag({year: chararray, temperature: int, quality: int}) |
|---|---|---|
| | 1949 | {(1949, 111, 1), (1949, 78, 1)} |

| max_temp | group: chararray | int |
|---|---|---|
| | 1949 | 111 |

- 27. White p. 172 Multiquery execution A = LOAD 'input/pig/multiquery/A'; B = FILTER A BY $1 == 'banana'; C = FILTER A BY $1 != 'banana'; STORE B INTO 'output/b'; STORE C INTO 'output/c'; “Relations B and C are both derived from A, so to save reading A twice, Pig can run this script as a single MapReduce job by reading A once and writing two output files from the job, one for each of B and C.”

- 28. White p. 172 Handling data corruption grunt> records = LOAD 'corrupt.txt' >> AS (year:chararray, temperature:int, quality:int); grunt> DUMP records; (1950,0,1) (1950,22,1) (1950,,1) (1949,111,1) (1949,78,1) grunt> corrupt = FILTER records BY temperature is null; grunt> DUMP corrupt; (1950,,1)

- 29. White p. 172 Handling data corruption grunt> corrupt = FILTER records BY temperature is null; grunt> DUMP corrupt; (1950,,1) grunt> grouped = GROUP corrupt ALL; grunt> all_grouped = FOREACH grouped GENERATE group, COUNT(corrupt); grunt> DUMP all_grouped; (all,1)

- 30. White p. 172 Handling data corruption grunt> corrupt = FILTER records BY temperature is null; grunt> DUMP corrupt; (1950,,1) grunt> SPLIT records INTO good IF temperature is not null, >> bad IF temperature is null; grunt> DUMP good; (1950,0,1) (1950,22,1) (1949,111,1) (1949,78,1) grunt> DUMP bad; (1950,,1)

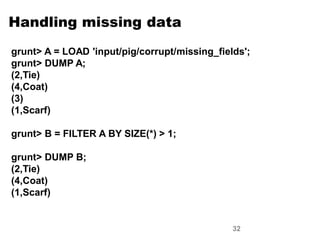
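Because the two SPLIT conditions above are complementary, every record lands in exactly one output, which is a plain partition. In general Pig's SPLIT conditions need not be complementary, so a tuple may go to several outputs or to none; this Java sketch models only the complementary case. Tuples are modeled as `List<Object>`, with a Java null standing in for the field Pig could not load (an illustrative assumption).

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Illustrative in-memory sketch of:
//   SPLIT records INTO good IF temperature is not null, bad IF temperature is null;
// A null field stands for the corrupt value loaded as null in (1950,,1).
final class SplitSketch {
    static final List<List<Object>> RECORDS = List.of(
            Arrays.asList("1950", 0, 1), Arrays.asList("1950", 22, 1),
            Arrays.asList("1950", null, 1),
            Arrays.asList("1949", 111, 1), Arrays.asList("1949", 78, 1));

    static final int TEMPERATURE = 1; // field index of temperature

    // true -> "good" (temperature present), false -> "bad" (temperature null).
    static Map<Boolean, List<List<Object>>> split(List<List<Object>> records) {
        return records.stream()
                .collect(Collectors.partitioningBy(t -> t.get(TEMPERATURE) != null));
    }

    public static void main(String[] args) {
        Map<Boolean, List<List<Object>>> parts = split(RECORDS);
        System.out.println("good: " + parts.get(true));
        System.out.println("bad:  " + parts.get(false));
        // Counterpart of GROUP corrupt ALL followed by COUNT(corrupt):
        System.out.println("bad count: " + parts.get(false).size());
    }
}
```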

- 32. White p. 172 Handling missing data grunt> A = LOAD 'input/pig/corrupt/missing_fields'; grunt> DUMP A; (2,Tie) (4,Coat) (3) (1,Scarf) grunt> B = FILTER A BY SIZE(*) > 1; grunt> DUMP B; (2,Tie) (4,Coat) (1,Scarf)

- 33. User Defined Functions (UDFs): Written in Java by sub-classing EvalFunc or FilterFunc (FilterFunc sub-classes EvalFunc with type T=Boolean). public abstract class EvalFunc<T> { public abstract T exec(Tuple input) throws IOException; } PiggyBank: public library of Pig functions, http://wiki.apache.org/pig/PiggyBank

- 34. UDF Example. Without a UDF: filtered = FILTER records BY temperature != 9999 AND (quality == 0 OR quality == 1 OR quality == 4 OR quality == 5 OR quality == 9); With a UDF: grunt> REGISTER my-udfs.jar; grunt> filtered = FILTER records BY temperature != 9999 AND com.hadoopbook.pig.IsGoodQuality(quality); -- aliasing with DEFINE: grunt> DEFINE isGood com.hadoopbook.pig.IsGoodQuality(); grunt> filtered_records = FILTER records >> BY temperature != 9999 AND isGood(quality);

- 35. More on UDFs (functionals): Pig translates com.hadoopbook.pig.IsGoodQuality(x) to com.hadoopbook.pig.IsGoodQuality.exec(x). It looks for a class named “…IsGoodQuality” in the registered JAR and instantiates it as specified by the DEFINE clause. The example below uses the default constructor (no arguments) • This is also the default behavior if no corresponding DEFINE clause is specified. Optionally, other constructor arguments can be passed to parameterize different UDF behaviors at run time. grunt> DEFINE isGood com.hadoopbook.pig.IsGoodQuality();
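The slides show only the `EvalFunc` signature, so here is a hedged sketch of what a filter UDF like `com.hadoopbook.pig.IsGoodQuality` could look like. To stay compilable without Pig on the classpath, it declares simplified stand-ins for Pig's `Tuple`, `EvalFunc` and `FilterFunc`; the real types are `org.apache.pig.data.Tuple`, `org.apache.pig.EvalFunc` and `org.apache.pig.FilterFunc`, and a real UDF would be compiled against them, packaged into a JAR and loaded with REGISTER. The accepted quality codes match the inline filter on the UDF example slide; `ListTuple` is a hypothetical helper that exists only to exercise the UDF.

```java
import java.io.IOException;
import java.util.List;

// Simplified stand-ins so the sketch compiles without Pig on the classpath.
// Real UDFs use org.apache.pig.data.Tuple, org.apache.pig.EvalFunc, org.apache.pig.FilterFunc.
interface Tuple {
    Object get(int fieldNum) throws IOException;
    int size();
}

abstract class EvalFunc<T> {
    public abstract T exec(Tuple input) throws IOException;
}

abstract class FilterFunc extends EvalFunc<Boolean> {}

// Sketch of a filter UDF like com.hadoopbook.pig.IsGoodQuality (package omitted here).
final class IsGoodQuality extends FilterFunc {
    @Override
    public Boolean exec(Tuple input) throws IOException {
        if (input == null || input.size() == 0) {
            return false;
        }
        Object quality = input.get(0);
        if (!(quality instanceof Integer)) {
            return false; // null (missing or corrupt) or non-int field: treat as bad
        }
        int q = (Integer) quality;
        // Same codes as the inline filter: quality 0, 1, 4, 5 or 9.
        return q == 0 || q == 1 || q == 4 || q == 5 || q == 9;
    }
}

// Hypothetical list-backed Tuple, only for trying the UDF outside Pig.
final class ListTuple implements Tuple {
    private final List<Object> fields;

    ListTuple(List<Object> fields) {
        this.fields = fields;
    }

    @Override
    public Object get(int fieldNum) {
        return fields.get(fieldNum);
    }

    @Override
    public int size() {
        return fields.size();
    }
}
```

Inside Pig, the call `isGood(quality)` would wrap the quality value in a one-field tuple and invoke `exec`, as described on the slide above.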

- 36. Case Sensitivity: Operators and commands are not case-sensitive; aliases and function names are case-sensitive. Why? Pig resolves function calls by • treating the function’s name as a Java classname • trying to load a class with that name. Java classnames are case-sensitive.

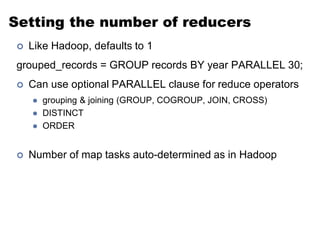

- 37. Setting the number of reducers: Like Hadoop, Pig defaults to 1 reducer. grouped_records = GROUP records BY year PARALLEL 30; The optional PARALLEL clause can be used with reduce-side operators: grouping and joining (GROUP, COGROUP, JOIN, CROSS), DISTINCT, and ORDER. The number of map tasks is determined automatically, as in Hadoop.

- 38. Setting and using parameters: pass them on the command line, pig -param input=in.txt -param output=out.txt foo.pig, OR list them in a parameter file (# foo.param input=/user/tom/input/ncdc/micro-tab/sample.txt output=/tmp/out) and run pig -param_file foo.param foo.pig. THEN refer to them in the script: records = LOAD '$input'; … STORE x into '$output';

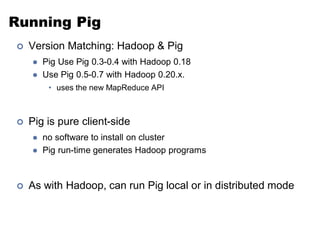

- 39. Running Pig. Version matching (Hadoop & Pig): • Use Pig 0.3-0.4 with Hadoop 0.18. • Use Pig 0.5-0.7 with Hadoop 0.20.x (uses the new MapReduce API). Pig is purely client-side: there is no software to install on the cluster, because the Pig runtime generates Hadoop programs. As with Hadoop, Pig can run in local or distributed mode.

- 40. Ways to run Pig: • Script file: pig script.pig • Command line: pig -e "DUMP a" • grunt> interactive shell • Embedded: launch Pig programs from Java code • PigPen Eclipse plug-in

- 41. White p. 333

- 42. White p. 337 Pig types

- 43. For More Information on Pig… http://hadoop.apache.org/pig http://wiki.apache.org/pig