Lecture 2 Basic Concepts in Machine Learning for Language Technology

- 1. Machine Learning for Language Technology Lecture 2: Basic Concepts Marina Santini Department of Linguistics and Philology Uppsala University, Uppsala, Sweden Autumn 2014 Acknowledgement: Thanks to Prof. Joakim Nivre for course design and material

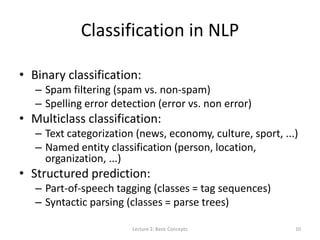

- 2. Outline • Definition of Machine Learning • Types of Machine Learning: – Classification – Regression – Supervised Learning – Unsupervised Learning – Reinforcement Learning • Supervised Learning: – Supervised Classification – Training set – Hypothesis class – Empirical error – Margin – Noise – Inductive bias – Generalization – Model assessment – Cross-Validation – Classification in NLP – Types of Classification

- 3. What is Machine Learning? • Machine learning is programming computers to optimize a performance criterion for some task using example data or past experience • Why learning? – No known exact method: vision, speech recognition, robotics, spam filters, etc. – Exact method too expensive: statistical physics – Task evolves over time: network routing • Compare: – No need to use machine learning for computing payroll; an ordinary algorithm is enough

- 4. Machine Learning – Data Mining – Artificial Intelligence – Statistics • Machine learning: creation of a model that uses training data or past experience • Data mining: application of learning methods to large datasets (e.g., physics, astronomy, biology) – Text mining = machine learning applied to unstructured textual data (e.g., sentiment analysis, social media monitoring; source: Text Mining, Wikipedia) • Artificial intelligence: a model that can adapt to a changing environment • Statistics: machine learning uses the theory of statistics in building mathematical models, because the core task is making inference from a sample

- 5. The bio-cognitive analogy • Imagine a learning algorithm as a single neuron. • This neuron receives input from other neurons, one for each input feature. • The strengths of these inputs are the feature values. • Each input has a weight, and the neuron simply sums up all the weighted inputs. • Based on this sum, the neuron decides whether to “fire” or not. Firing is interpreted as a positive example and not firing as a negative example.
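The neuron analogy can be sketched in a few lines of Python. This is only an illustration: the function name `neuron_fires`, the example weights, and the firing threshold of 0 are assumptions, since the slide does not say how the sum is turned into a decision.

```python
def neuron_fires(features, weights, threshold=0.0):
    """Sum the weighted inputs and fire if the sum exceeds the threshold.

    The threshold is an assumption; the slide only says the decision is
    based on the weighted sum.
    """
    activation = sum(w * x for w, x in zip(weights, features))
    return activation > threshold


# Hypothetical weights, one per input feature.
weights = [0.5, -1.0, 2.0]

print(neuron_fires([1.0, 0.0, 1.0], weights))  # weighted sum is 2.5
print(neuron_fires([0.0, 1.0, 0.0], weights))  # weighted sum is -1.0
```

A `True` result corresponds to a positive example and `False` to a negative one.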

- 6. Elements of Machine Learning 1. Generalization: – Generalize from specific examples – Based on statistical inference 2. Data: – Training data: specific examples to learn from – Test data: (new) specific examples to assess performance 3. Models: – Theoretical assumptions about the task/domain – Parameters that can be inferred from data 4. Algorithms: – Learning algorithm: infer model (parameters) from data – Inference algorithm: infer predictions from model

- 7. Types of Machine Learning • Association • Supervised Learning – Classification – Regression • Unsupervised Learning • Reinforcement Learning

- 8. Learning Associations • Basket analysis: $P(Y \mid X)$ is the probability that somebody who buys X also buys Y, where X and Y are products/services • Example: $P(\text{chips} \mid \text{beer}) = 0.7$
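As a rough illustration, the conditional probability in basket analysis can be estimated by counting. The baskets below are made-up toy data and the function name is hypothetical; the estimate is simply the fraction of baskets containing X that also contain Y.

```python
def conditional_purchase_probability(baskets, x, y):
    """Estimate P(y | x) as the share of baskets with x that also contain y."""
    baskets_with_x = [basket for basket in baskets if x in basket]
    if not baskets_with_x:
        raise ValueError(f"no basket contains {x!r}")
    both = sum(1 for basket in baskets_with_x if y in basket)
    return both / len(baskets_with_x)


# Toy data (hypothetical): each set is one customer's basket.
baskets = [
    {"beer", "chips"},
    {"beer", "chips", "salsa"},
    {"beer"},
    {"chips"},
    {"beer", "chips"},
]

# Four baskets contain beer, three of those also contain chips.
print(conditional_purchase_probability(baskets, "beer", "chips"))
```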

- 9. Classification • Example: Credit scoring • Differentiating between low-risk and high-risk customers from their income and savings • Discriminant: IF income > $\theta_1$ AND savings > $\theta_2$ THEN low-risk ELSE high-risk

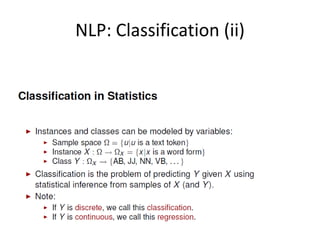
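The credit-scoring discriminant translates directly into code. In this sketch the function name and the threshold values are placeholders chosen for illustration; in practice the two thresholds would be learned from labeled customers.

```python
def credit_risk(income, savings, theta1, theta2):
    """Apply the rule: low-risk only if both thresholds are exceeded."""
    if income > theta1 and savings > theta2:
        return "low-risk"
    return "high-risk"


# Hypothetical thresholds, not taken from the lecture.
THETA1 = 30_000  # income threshold
THETA2 = 5_000   # savings threshold

print(credit_risk(45_000, 8_000, THETA1, THETA2))
print(credit_risk(45_000, 1_000, THETA1, THETA2))
```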

- 10. Classification in NLP • Binary classification: – Spam filtering (spam vs. non-spam) – Spelling error detection (error vs. no error) • Multiclass classification: – Text categorization (news, economy, culture, sport, ...) – Named entity classification (person, location, organization, ...) • Structured prediction: – Part-of-speech tagging (classes = tag sequences) – Syntactic parsing (classes = parse trees)

- 11. Regression • Example: Price of a used car • $x$: car attributes, $y$: price • $y = g(x \mid \theta)$, where $g(\cdot)$ is the model and $\theta$ are its parameters • Linear model: $y = wx + w_0$
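One common way to obtain the parameters of the linear model is ordinary least squares. The slide does not name a fitting method, so least squares is an assumption here, and the car data are invented for the example.

```python
def fit_line(xs, ys):
    """Fit y = w * x + w0 by ordinary least squares (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    w = sxy / sxx
    w0 = mean_y - w * mean_x
    return w, w0


# Toy data (hypothetical): car age in years, price in thousands.
ages = [1, 2, 3, 4]
prices = [9, 8, 7, 6]

w, w0 = fit_line(ages, prices)
print(f"price = {w:.2f} * age + {w0:.2f}")
```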

- 12. Uses of Supervised Learning • Prediction of future cases: – Use the rule to predict the output for future inputs • Knowledge extraction: – The rule is easy to understand • Compression: – The rule is simpler than the data it explains • Outlier detection: – Exceptions that are not covered by the rule, e.g., fraud

- 13. Unsupervised Learning • Finding regularities in data • No mapping to outputs • Clustering: – Grouping similar instances • Example applications: – Customer segmentation in CRM – Image compression: Color quantization – NLP: Unsupervised text categorization

- 14. Reinforcement Learning • Learning a policy = sequence of outputs/actions • No supervised output but delayed reward • Example applications: – Game playing – Robot in a maze – NLP: Dialogue systems

- 15. Supervised Classification • Learning the class C of a “family car” from examples – Prediction: Is car x a family car? – Knowledge extraction: What do people expect from a family car? • Output (labels): Positive (+) and negative (–) examples • Input representation (features): $x_1$: price, $x_2$: engine power

- 16. Training set $\mathcal{X}$ • $\mathcal{X} = \{x^t, r^t\}_{t=1}^{N}$ • Label: $r = 1$ if $x$ is positive, $r = 0$ if $x$ is negative • Input: $x = [x_1, x_2]^T$

- 17. Hypothesis class $H$ • $(p_1 \le \text{price} \le p_2)$ AND $(e_1 \le \text{engine power} \le e_2)$

- 18. Empirical (training) error • $h(x) = 1$ if $h$ says $x$ is positive, $h(x) = 0$ if $h$ says $x$ is negative • Empirical error of $h$ on $\mathcal{X}$: $E(h \mid \mathcal{X}) = \sum_{t=1}^{N} 1\big(h(x^t) \ne r^t\big)$
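The rectangle hypothesis and its empirical error can be put together in a short sketch. It assumes the family-car setting with two features (price, engine power); the helper names, rectangle bounds, and training examples are all invented for illustration. The error is the count of training examples on which the hypothesis disagrees with the label.

```python
def make_rectangle_hypothesis(p1, p2, e1, e2):
    """Return h(x): 1 if price and engine power both fall inside the bounds."""
    def h(x):
        price, engine_power = x
        return 1 if (p1 <= price <= p2 and e1 <= engine_power <= e2) else 0
    return h


def empirical_error(h, training_set):
    """Count the training examples (x, r) where h(x) differs from r."""
    return sum(1 for x, r in training_set if h(x) != r)


# Toy training set (hypothetical): ((price, engine power), label).
training_set = [
    ((15, 100), 1),
    ((18, 120), 1),
    ((22, 150), 1),
    ((5, 60), 0),
    ((40, 300), 0),
]

h = make_rectangle_hypothesis(p1=10, p2=20, e1=80, e2=140)
# h misclassifies only (22, 150), which lies outside the rectangle.
print(empirical_error(h, training_set))
```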

- 19. S, G, and the Version Space • Most specific hypothesis: S • Most general hypothesis: G • Any $h \in H$ between S and G is consistent [$E(h \mid \mathcal{X}) = 0$]; together these hypotheses make up the version space

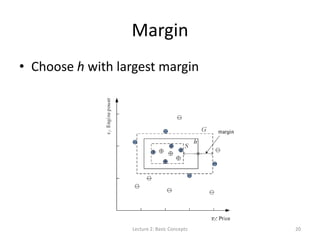
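For axis-aligned rectangles, the most specific hypothesis S can be sketched as the tightest rectangle around the positive examples. This is a minimal illustration under that assumption; the function names and data are hypothetical, and computing G (the largest rectangle that excludes all negatives) is left out.

```python
def most_specific_hypothesis(training_set):
    """Return (p1, p2, e1, e2): the tightest rectangle around the positives."""
    positives = [x for x, r in training_set if r == 1]
    if not positives:
        raise ValueError("need at least one positive example")
    prices = [price for price, _ in positives]
    powers = [power for _, power in positives]
    return min(prices), max(prices), min(powers), max(powers)


def is_consistent(rectangle, training_set):
    """True if the rectangle labels every training example correctly."""
    p1, p2, e1, e2 = rectangle
    for (price, power), r in training_set:
        predicted = 1 if (p1 <= price <= p2 and e1 <= power <= e2) else 0
        if predicted != r:
            return False
    return True


# Toy training set (hypothetical): ((price, engine power), label).
examples = [
    ((15, 100), 1),
    ((18, 120), 1),
    ((22, 150), 1),
    ((5, 60), 0),
    ((40, 300), 0),
]

S = most_specific_hypothesis(examples)
print(S, is_consistent(S, examples))
```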

- 20. Margin • Choose h with largest margin

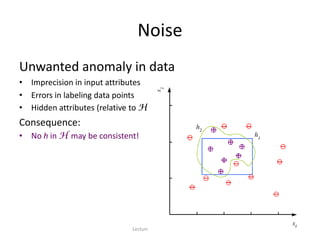

- 21. Noise • Unwanted anomaly in data: – Imprecision in input attributes – Errors in labeling data points – Hidden attributes (relative to H) • Consequence: – No h in H may be consistent!

- 22. Noise and Model Complexity Arguments for simpler model (Occam’s razor principle): 1. Easier to make predictions 2. Easier to train (fewer parameters) 3. Easier to understand 4. Generalizes better (if data is noisy)

- 23. Inductive Bias • Learning is an ill-posed problem – Training data is never sufficient to find a unique solution – There are always infinitely many consistent hypotheses • We need an inductive bias: – Assumptions that entail a unique h for a training set X 1. Hypothesis class H: axis-aligned rectangles 2. Learning algorithm: find a consistent hypothesis with max-margin 3. Hyperparameters: trade-off between training error and margin

- 24. Model Selection and Generalization • Generalization – how well a model performs on new data – Overfitting: H more complex than C – Underfitting: H less complex than C

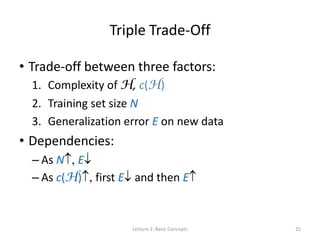

- 25. Triple Trade-Off • Trade-off between three factors: 1. Complexity of H, $c(H)$ 2. Training set size $N$ 3. Generalization error $E$ on new data • Dependencies: – As $N \uparrow$, $E \downarrow$ – As $c(H) \uparrow$, first $E \downarrow$ and then $E \uparrow$

- 26. Model Selection Generalization Error • To estimate generalization error, we need data unseen during training: a validation set $\mathcal{V} = \{x^t, r^t\}_{t=1}^{M}$, kept separate from $\mathcal{X}$, with $\hat{E} = E(h \mid \mathcal{V}) = \sum_{t=1}^{M} 1\big(h(x^t) \ne r^t\big)$ • Given models (hypotheses) $h_1, \ldots, h_k$ induced from the training set $\mathcal{X}$, we can use $E(h_i \mid \mathcal{V})$ to select the model $h_i$ with the smallest estimated generalization error

- 27. Model Assessment • To estimate the generalization error of the best model hi, we need data unseen during training and model selection • Standard setup: 1. Training set X (50–80%) 2. Validation (development) set V (10–25%) 3. Test (publication) set T (10–25%) • Note: – Validation data can be added to training set before testing – Resampling methods can be used if data is limited
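A three-way split along these lines might look as follows. The 70/15/15 proportions are one choice within the ranges on the slide, and the function name, default fractions, and seed are assumptions made for the sketch.

```python
import random


def train_validation_test_split(data, train_frac=0.7, validation_frac=0.15, seed=0):
    """Shuffle the data and cut it into training, validation, and test parts."""
    items = list(data)
    random.Random(seed).shuffle(items)  # seeded for reproducibility
    n_train = round(len(items) * train_frac)
    n_validation = round(len(items) * validation_frac)
    training = items[:n_train]
    validation = items[n_train:n_train + n_validation]
    test = items[n_train + n_validation:]  # whatever remains
    return training, validation, test


training, validation, test = train_validation_test_split(range(100))
print(len(training), len(validation), len(test))
```

The test part is touched only once, after model selection on the validation part is finished.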

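- 28. Cross-Validation • K-fold cross-validation: divide $\mathcal{X}$ into $\mathcal{X}_1, \ldots, \mathcal{X}_K$; fold $i$ validates on $\mathcal{V}_i$ and trains on $\mathcal{T}_i$ (here $\mathcal{T}_i$ is the training part of fold $i$, not a test set): – $\mathcal{V}_1 = \mathcal{X}_1$, $\mathcal{T}_1 = \mathcal{X}_2 \cup \mathcal{X}_3 \cup \cdots \cup \mathcal{X}_K$ – $\mathcal{V}_2 = \mathcal{X}_2$, $\mathcal{T}_2 = \mathcal{X}_1 \cup \mathcal{X}_3 \cup \cdots \cup \mathcal{X}_K$ – ... – $\mathcal{V}_K = \mathcal{X}_K$, $\mathcal{T}_K = \mathcal{X}_1 \cup \mathcal{X}_2 \cup \cdots \cup \mathcal{X}_{K-1}$ • Note: – Generalization error estimated by means across K folds – Training sets for different folds share $K-2$ parts – Separate test set must be maintained for model assessment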
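The fold construction can be sketched with a small generator. The function name is hypothetical, and assigning every K-th item to the same part is just one simple way to divide the data; shuffling first is usually advisable but omitted here to keep the example deterministic.

```python
def k_fold_splits(data, k):
    """Yield (training, validation) pairs, one per fold."""
    items = list(data)
    parts = [items[i::k] for i in range(k)]  # part i gets items i, i+k, ...
    for i in range(k):
        validation = parts[i]
        training = [x for j, part in enumerate(parts) if j != i for x in part]
        yield training, validation


for fold, (training, validation) in enumerate(k_fold_splits(range(10), k=5), start=1):
    print(fold, validation, len(training))
```

The generalization error estimate is then the mean of the validation errors over the K folds.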

- 29. Bootstrapping • Generate new training sets of size $N$ from $\mathcal{X}$ by random sampling with replacement • Use original training set as validation set ($\mathcal{V} = \mathcal{X}$) • Probability that we do not pick an instance after $N$ draws: $\left(1 - \frac{1}{N}\right)^{N} \approx e^{-1} = 0.368$; that is, only about 36.8% of the original instances are left out of a bootstrap sample and are therefore new to the model trained on it!
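The 36.8% figure can be checked numerically. This sketch compares the exact probability for a finite N with the limit 1/e and draws one bootstrap sample; the function names and the seed are arbitrary choices.

```python
import math
import random


def probability_never_drawn(n):
    """Probability that a given instance is missed in n draws with replacement."""
    return (1 - 1 / n) ** n


def bootstrap_sample(data, seed=0):
    """Draw len(data) items from data uniformly at random, with replacement."""
    rng = random.Random(seed)
    items = list(data)
    return [rng.choice(items) for _ in items]


n = 1000
print(probability_never_drawn(n), math.exp(-1))

sample = bootstrap_sample(range(n))
left_out = n - len(set(sample))
print(left_out / n)  # empirical share of instances missing from the sample
```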

- 30. Measuring Error • Error rate = # of errors / # of instances = (FP+FN) / N • Accuracy = # of correct / # of instances = (TP+TN) / N • Recall = # of found positives / # of positives = TP / (TP+FN) • Precision = # of found positives / # of found = TP / (TP+FP)

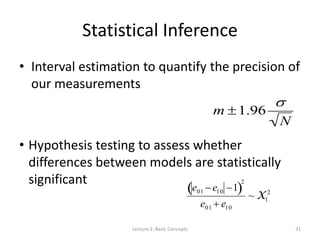
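The four measures follow directly from the confusion-matrix counts. The counts in this sketch are invented, and the function name is hypothetical.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute error rate, accuracy, recall, and precision from raw counts."""
    n = tp + fp + fn + tn
    return {
        "error_rate": (fp + fn) / n,
        "accuracy": (tp + tn) / n,
        "recall": tp / (tp + fn),
        "precision": tp / (tp + fp),
    }


# Hypothetical counts for a binary classifier evaluated on 100 instances.
metrics = classification_metrics(tp=40, fp=10, fn=20, tn=30)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```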

- 31. Statistical Inference • Interval estimation to quantify the precision of our measurements: $m \pm 1.96\,\frac{\sigma}{\sqrt{N}}$ • Hypothesis testing to assess whether differences between models are statistically significant: $\frac{(|e_{01} - e_{10}| - 1)^2}{e_{01} + e_{10}} \sim \chi^2_1$
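Both ideas can be sketched numerically, assuming the formulas on this slide are the usual normal-approximation 95% interval for a mean with known standard deviation and McNemar's test statistic for comparing two classifiers. Here `e01` and `e10` count the instances misclassified by only the first or only the second classifier; all numbers are invented.

```python
import math


def confidence_interval_95(mean, sigma, n):
    """Return the interval mean +/- 1.96 * sigma / sqrt(n)."""
    half_width = 1.96 * sigma / math.sqrt(n)
    return mean - half_width, mean + half_width


def mcnemar_statistic(e01, e10):
    """McNemar's statistic with continuity correction."""
    return (abs(e01 - e10) - 1) ** 2 / (e01 + e10)


CHI2_1_CRITICAL_05 = 3.84  # chi-square critical value, 1 degree of freedom, alpha = 0.05

print(confidence_interval_95(mean=0.8, sigma=0.1, n=100))

statistic = mcnemar_statistic(e01=25, e10=10)
print(statistic, statistic > CHI2_1_CRITICAL_05)
```

A statistic above the critical value suggests the two classifiers' error rates differ at the 5% level.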

- 32. Supervised Learning – Summary • Training data + learner → hypothesis – Learner incorporates inductive bias • Test data + hypothesis → estimated generalization – Test data must be unseen

- 33. Anatomy of a Supervised Learner (Dimensions of a supervised machine learning algorithm) • Model: $g(x \mid \theta)$ • Loss function: $E(\theta \mid \mathcal{X}) = \sum_{t} L\big(r^t, g(x^t \mid \theta)\big)$ • Optimization procedure: $\theta^* = \arg\min_{\theta} E(\theta \mid \mathcal{X})$

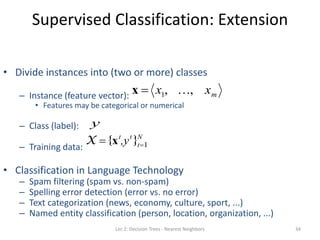

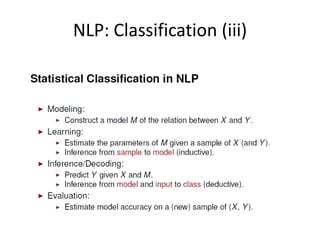
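The three dimensions (model, loss function, optimization procedure) can be made concrete with a deliberately tiny example. Everything specific here is an assumption for illustration: a one-parameter model, squared loss, and a grid search standing in for a real optimizer.

```python
def g(x, theta):
    """Model: a line through the origin with slope theta."""
    return theta * x


def loss(r, prediction):
    """Loss function: squared difference between target and prediction."""
    return (r - prediction) ** 2


def total_error(theta, data):
    """Sum of the losses over all training pairs (x, r)."""
    return sum(loss(r, g(x, theta)) for x, r in data)


# Toy training data (hypothetical): pairs (x, r).
data = [(1, 2), (2, 4), (3, 6)]

# Optimization procedure: pick the candidate theta with the smallest error.
candidates = [i / 10 for i in range(0, 41)]  # 0.0, 0.1, ..., 4.0
theta_star = min(candidates, key=lambda theta: total_error(theta, data))
print(theta_star, total_error(theta_star, data))
```

Swapping in a different model, loss, or optimizer changes the learner while the overall structure stays the same.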

- 34. Supervised Classification: Extension • Divide instances into (two or more) classes – Instance (feature vector): $x = (x_1, \ldots, x_m)$ • Features may be categorical or numerical – Class (label): $y$ – Training data: $\mathcal{X} = \{x^t, y^t\}_{t=1}^{N}$ • Classification in Language Technology – Spam filtering (spam vs. non-spam) – Spelling error detection (error vs. no error) – Text categorization (news, economy, culture, sport, ...) – Named entity classification (person, location, organization, ...)

- 38. Types of Classification (i)

- 39. Types of Classification (ii)

- 40. Reading • Alpaydin (2010): chs. 1-2; 19 • Daumé III (2012): ch. 4, only 4.5-4.6

- 41. End of Lecture 2
