
This document summarizes Lecture 9, on Bayesian decision theory and machine learning. The lecture opens with a recap of earlier lectures on decision trees, k-nearest neighbors, and the use of probabilities for classification. It then introduces Thomas Bayes and the origins of Bayesian probability, and explains the key ideas of Bayes' theorem, such as computing posterior probabilities. Worked examples illustrate Bayesian reasoning, including the probability that the Pope is an alien and whether to switch doors in the Monty Hall problem. The lecture concludes by discussing how these Bayesian concepts can be applied to machine learning.

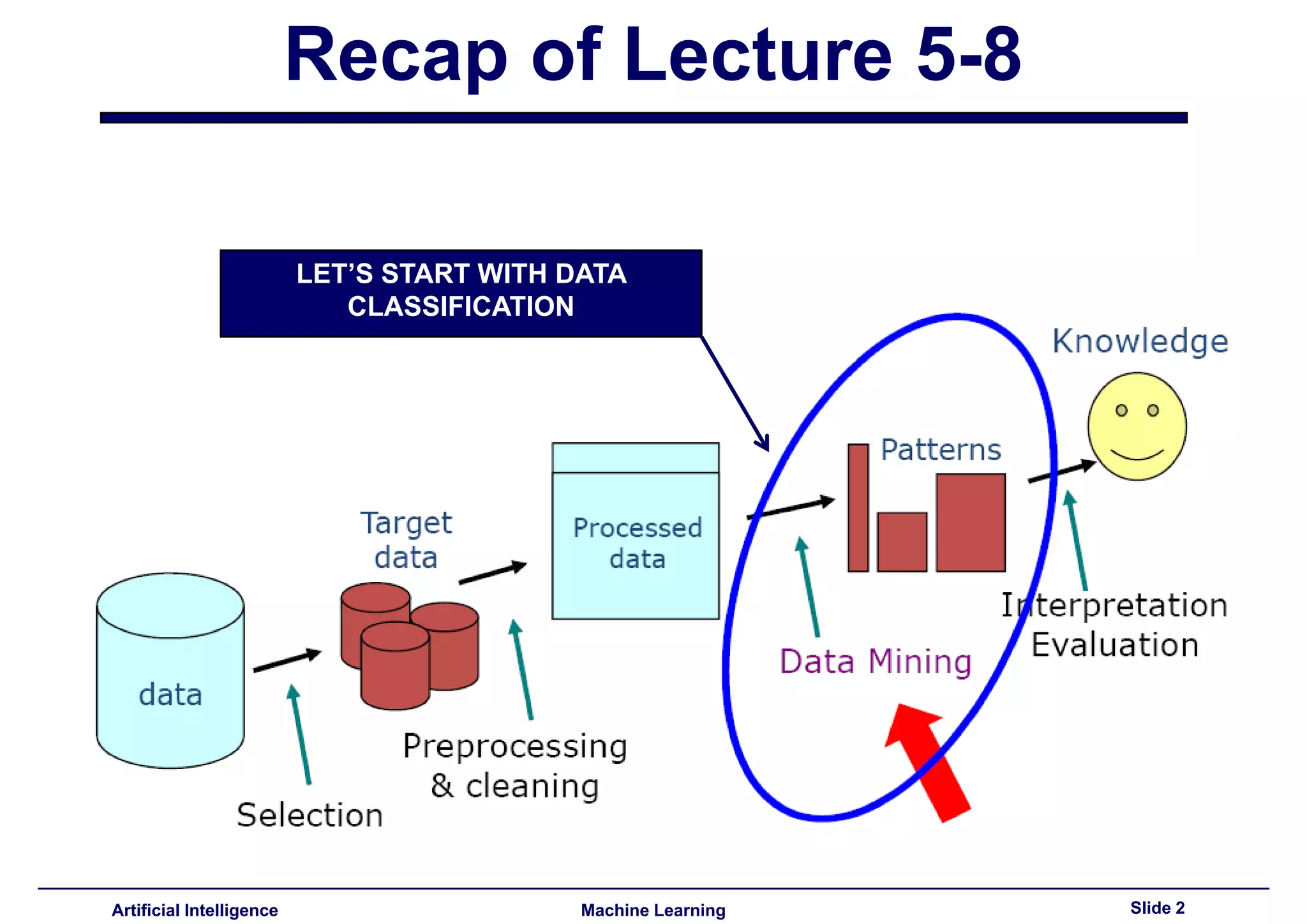

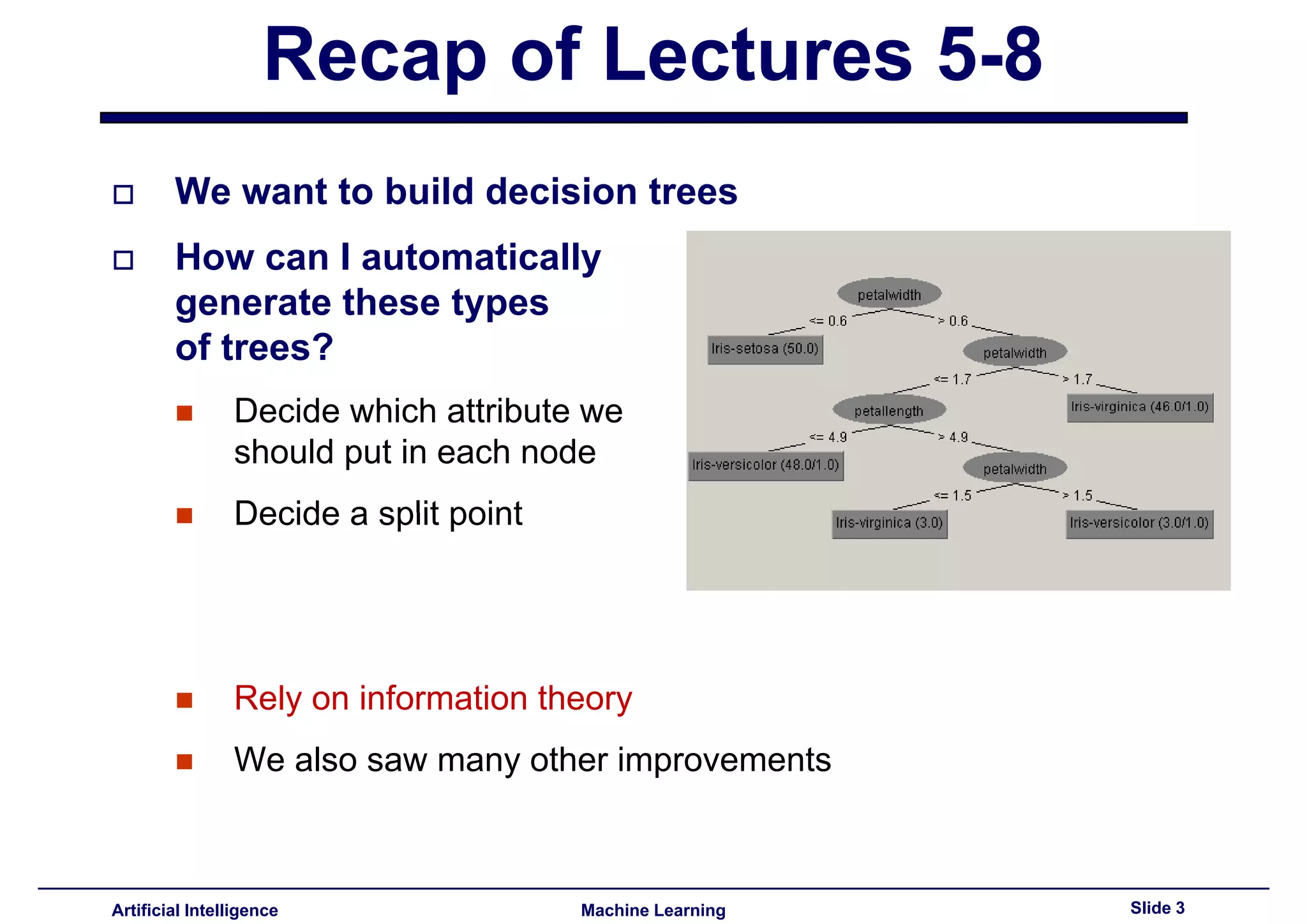

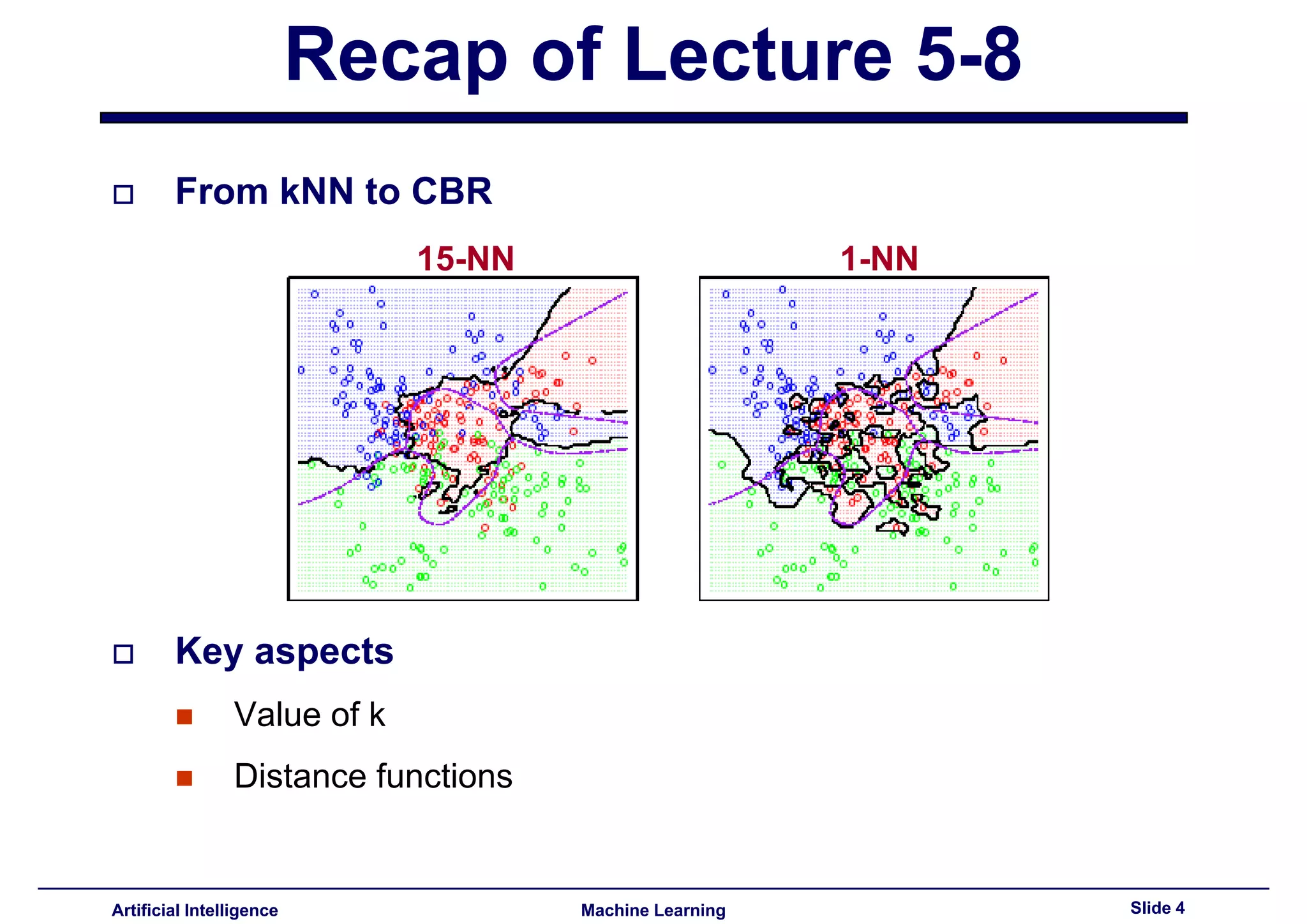

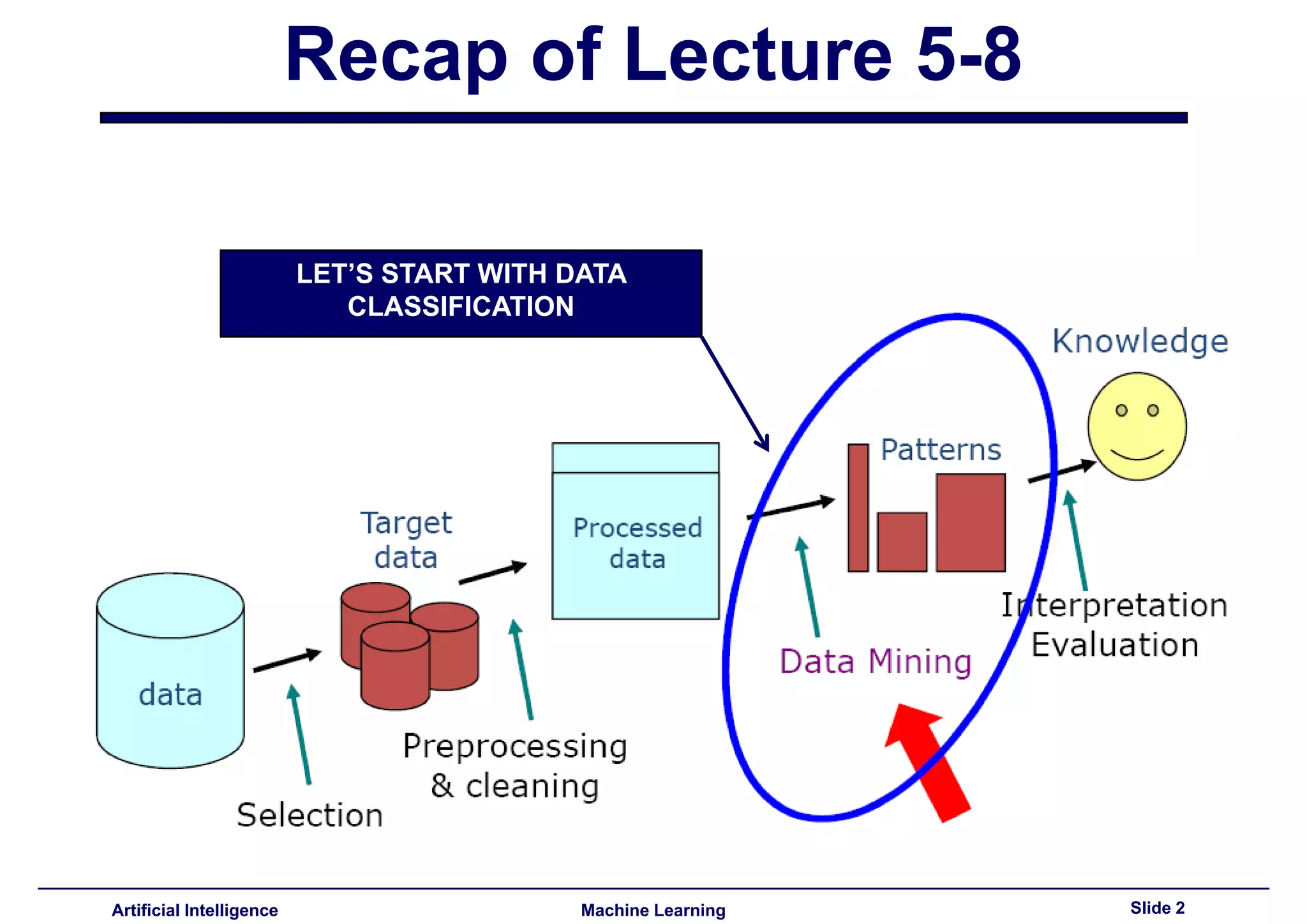

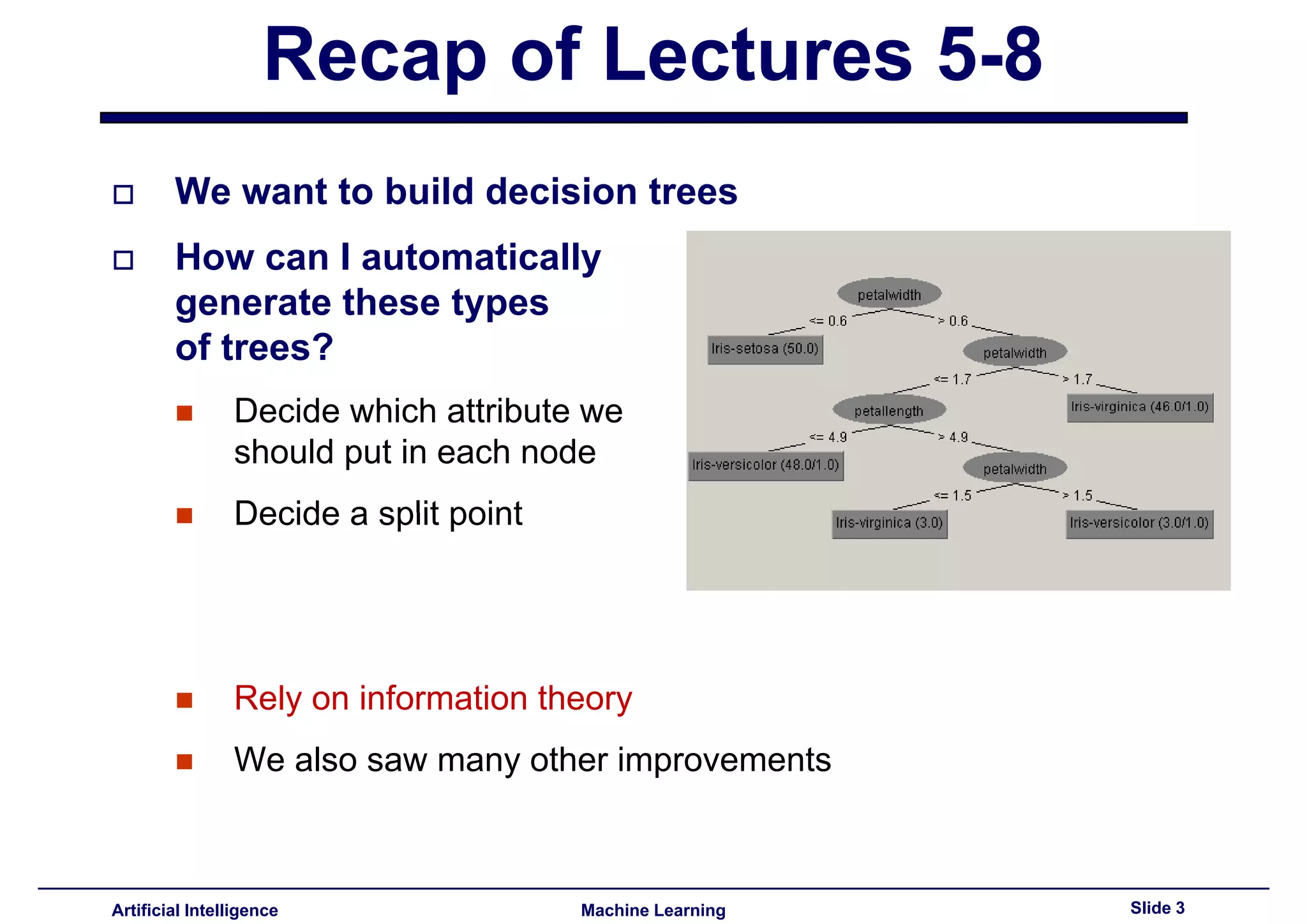

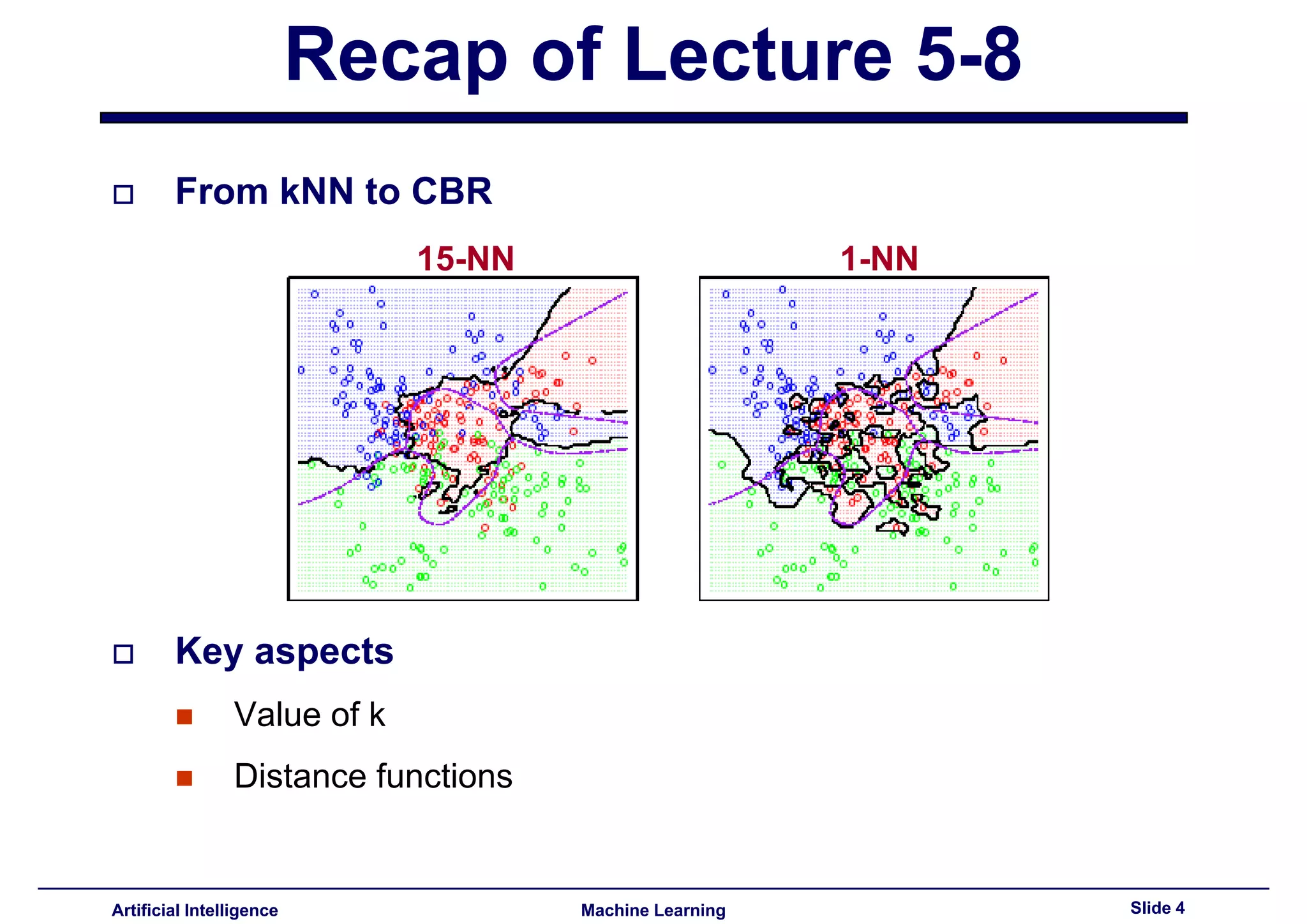

Recap of machine learning so far, focusing on decision tree generation and on classification methods including k-nearest neighbors (kNN) and case-based reasoning (CBR).
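As a refresher on kNN, here is a minimal sketch of k-nearest-neighbor classification (not the lecture's own code), assuming Euclidean distance and a simple majority vote. The function name `knn_classify` and the toy data are hypothetical.

```python
import math
from collections import Counter

def knn_classify(train, query, k=3):
    """Label `query` by majority vote among its k nearest training points.

    `train` is a list of (feature_vector, label) pairs; distance is Euclidean.
    """
    # Sort training examples by Euclidean distance to the query and keep k
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    # Majority vote over the labels of the k closest examples
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two well-separated clusters labelled "a" and "b"
train = [((0.0, 0.0), "a"), ((0.1, 0.2), "a"), ((0.2, 0.1), "a"),
         ((1.0, 1.0), "b"), ((0.9, 1.1), "b"), ((1.1, 0.9), "b")]
print(knn_classify(train, (0.15, 0.15)))  # a
print(knn_classify(train, (0.95, 1.0)))   # b
```

kNN stores the training set and defers all work to query time, which is why it is often grouped with case-based reasoning as a "lazy" method.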

Discussion of using probability for classification, motivated by the uncertainty inherent in AI and ML problems.
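One standard way probability drives classification is the Bayes decision rule: assign an observation x to the class c with the largest posterior P(c | x), which is proportional to P(x | c) P(c). The sketch below assumes the priors and class-conditional likelihoods are already known; the function name `bayes_decide`, the class labels, and the numbers are hypothetical.

```python
def bayes_decide(priors, likelihoods):
    """Return (best_class, posteriors) for a single observation x.

    priors:      dict mapping class -> P(c)
    likelihoods: dict mapping class -> P(x | c) for the observed x
    """
    # Unnormalised posteriors: P(x | c) * P(c)
    joint = {c: likelihoods[c] * priors[c] for c in priors}
    # Evidence P(x) via the law of total probability
    evidence = sum(joint.values())
    posteriors = {c: p / evidence for c, p in joint.items()}
    # Minimum-error decision: choose the class with the highest posterior
    best = max(posteriors, key=posteriors.get)
    return best, posteriors

# Hypothetical two-class example: class A is rarer but explains x better
best, post = bayes_decide({"A": 0.2, "B": 0.8}, {"A": 0.6, "B": 0.1})
print(best, round(post["A"], 2))  # A 0.6
```

Note that the rarer class wins here because its likelihood outweighs its smaller prior; neither factor alone decides the outcome.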

Introduction to Thomas Bayes and the historical context of Bayesian theory, influenced by Kant and Newton.

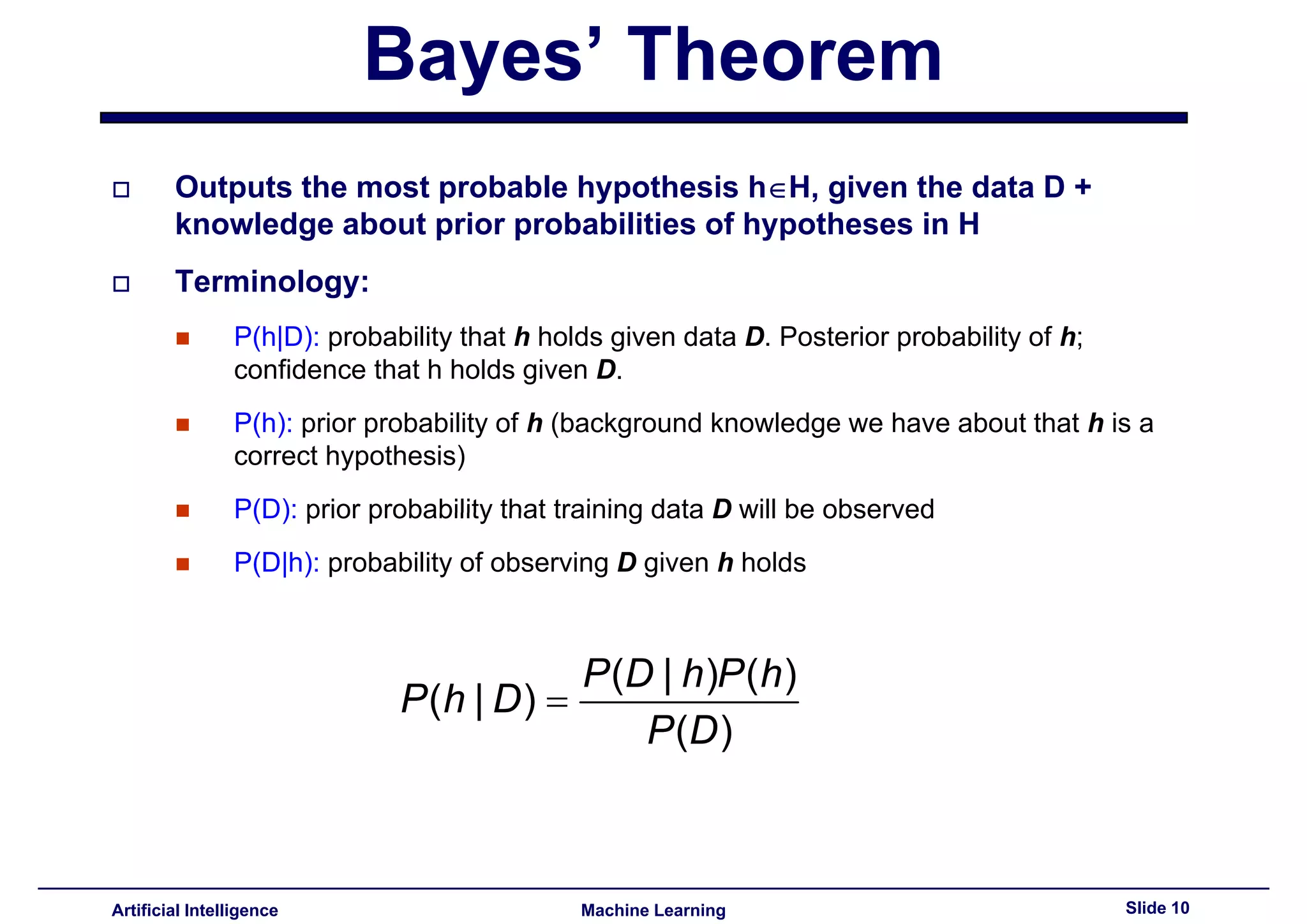

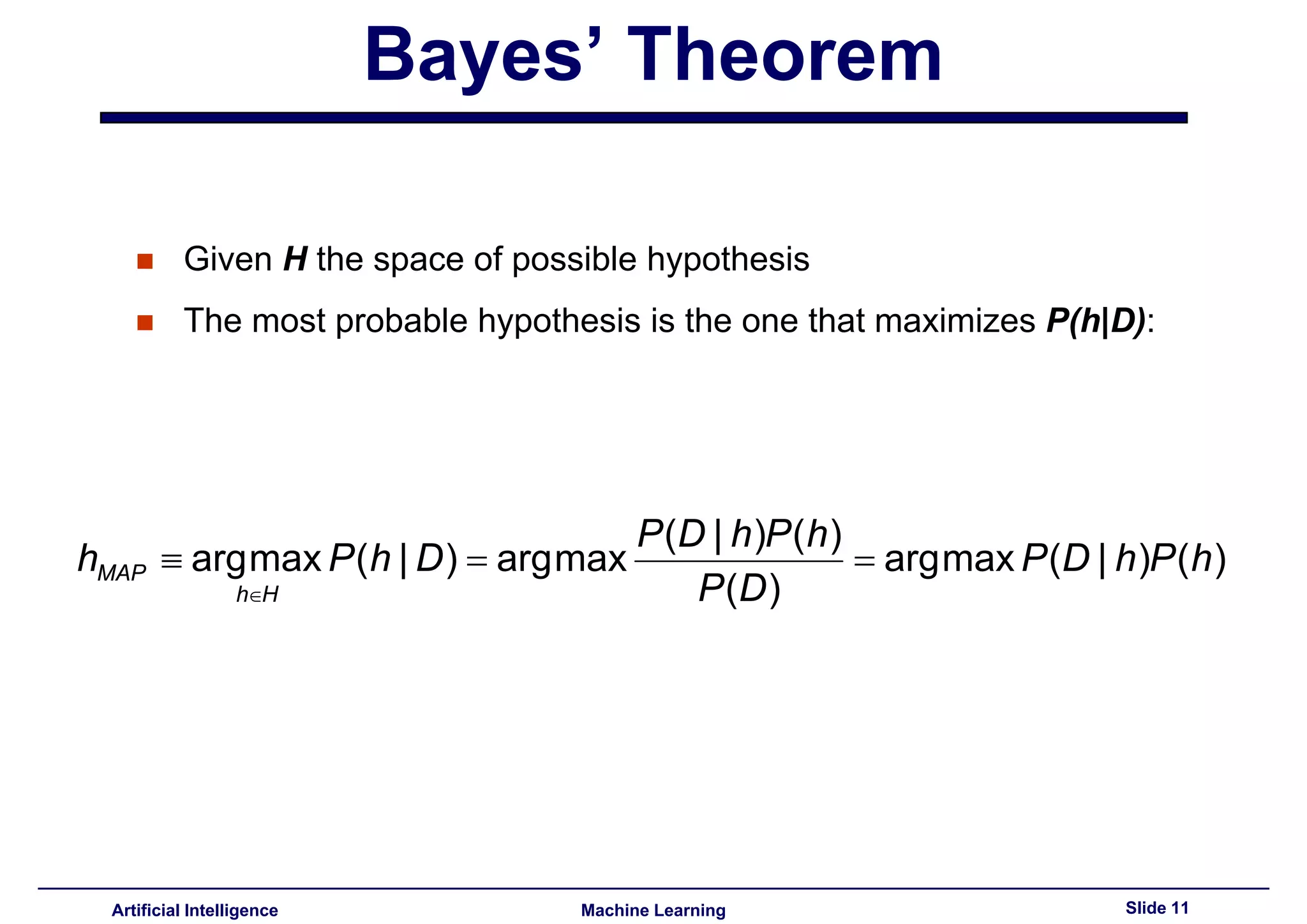

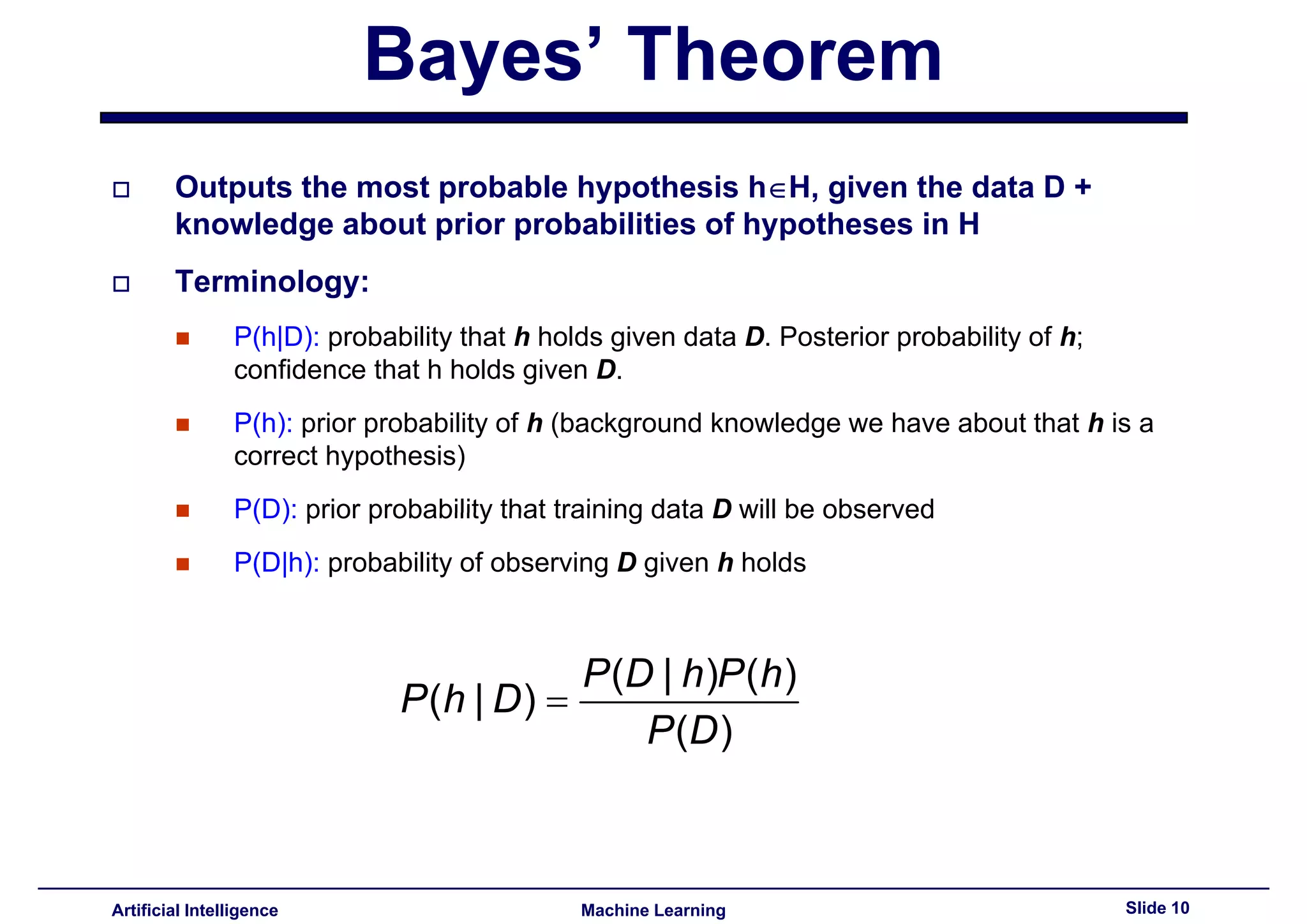

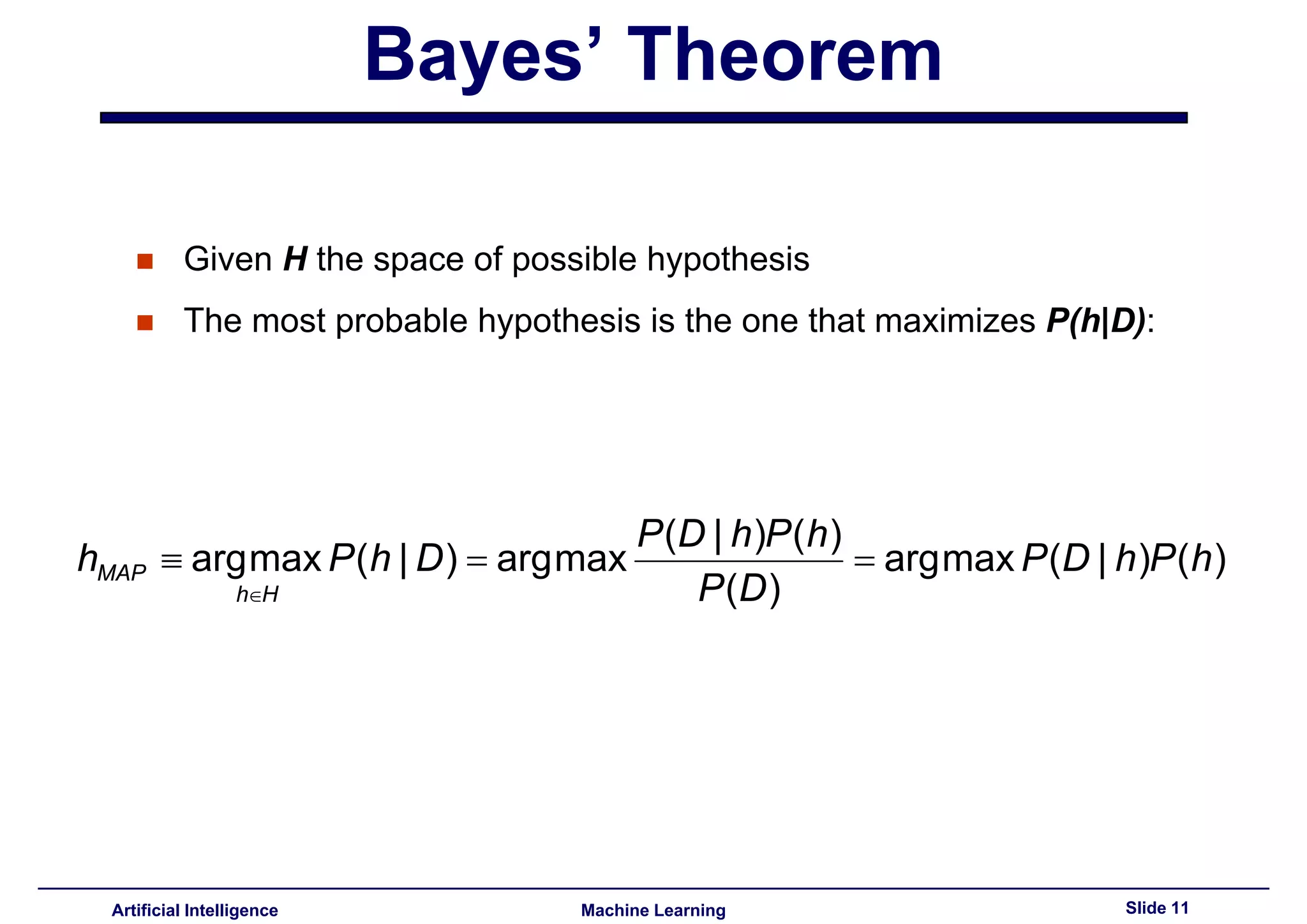

Definition of Bayesian probability, its application in estimation, and formulation of Bayes' theorem.
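For reference, the standard form of Bayes' theorem for a hypothesis H and evidence E is given below (the notation is chosen here and may differ from the slides). P(H) is the prior, P(E | H) the likelihood, P(H | E) the posterior, and P(E) the evidence, expanded by the law of total probability.

```latex
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)},
\qquad
P(E) = P(E \mid H)\, P(H) + P(E \mid \lnot H)\, P(\lnot H)
```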

Illustrations of Bayes' theorem through examples that assess the probability of hypotheses such as "Is the Pope human?"
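The Pope example turns on not confusing P(Pope | human), which is tiny, with P(human | Pope), which is what we actually want. The following sketch applies Bayes' theorem with purely hypothetical inputs (the alien prior and the chance an alien becomes Pope are invented for illustration, not taken from the lecture); `posterior` is a helper name introduced here.

```python
def posterior(prior_h, lik_h, lik_not_h):
    """P(H | E) from prior P(H), likelihood P(E | H) and P(E | not H)."""
    evidence = lik_h * prior_h + lik_not_h * (1.0 - prior_h)
    return lik_h * prior_h / evidence

# Hypothetical inputs, chosen only to illustrate the reasoning:
p_alien = 1e-12               # assumed prior that a given being is an alien
p_pope_given_human = 1 / 8e9  # roughly one Pope among ~8 billion humans
p_pope_given_alien = 1e-6     # assumed (generous) chance an alien becomes Pope

# H = "human", E = "is the Pope"
p_human_given_pope = posterior(1 - p_alien, p_pope_given_human, p_pope_given_alien)
print(p_human_given_pope > 0.999)  # True
```

Even though a random human is very unlikely to be the Pope, the posterior that the Pope is human stays close to 1 because the competing hypothesis has a far smaller prior.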

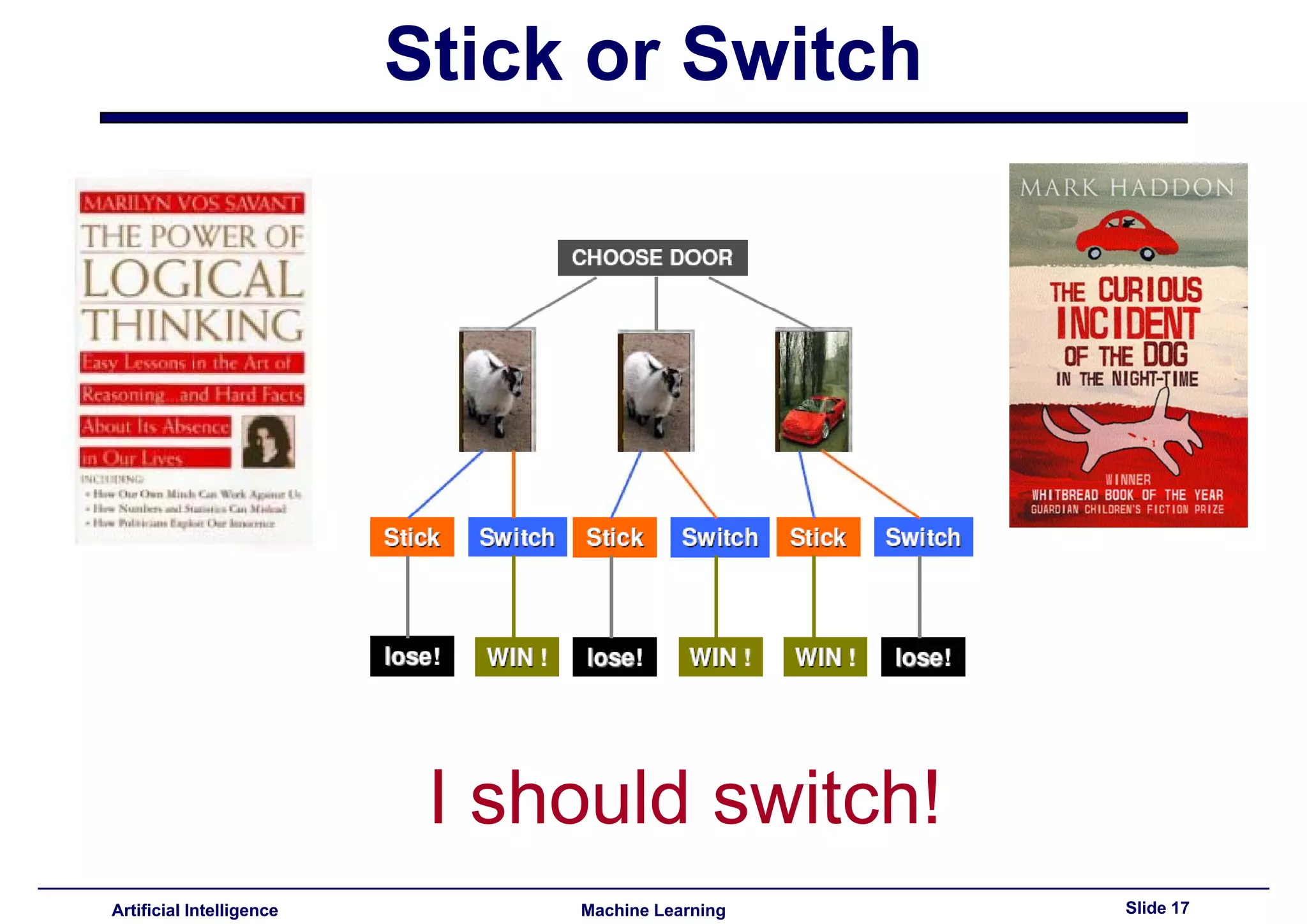

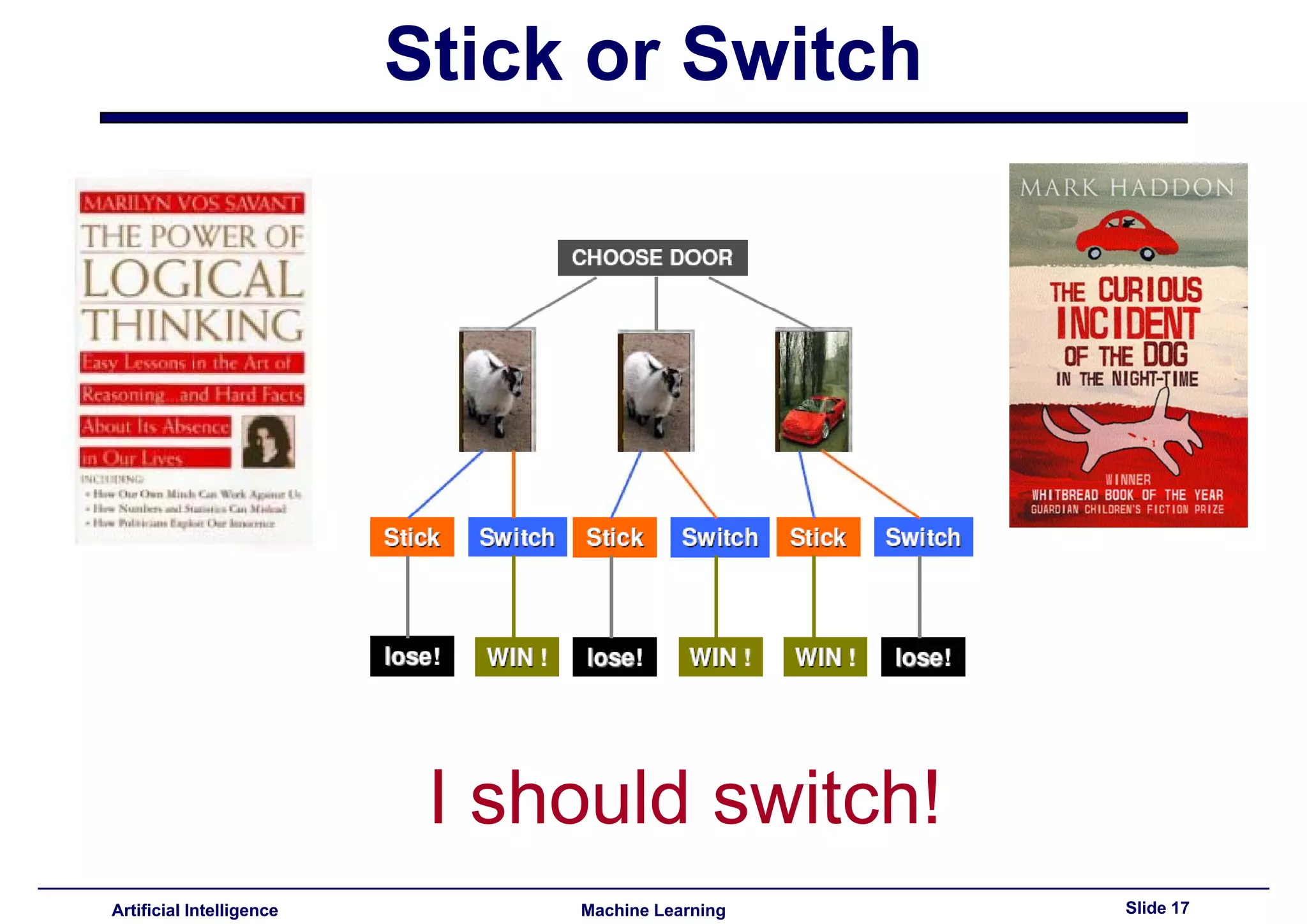

The Monty Hall problem as an illustration of decision making under uncertainty, emphasizing probability over faith.
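Under the usual assumptions (the host always opens an unpicked door hiding a goat, choosing uniformly when two such doors exist), Bayes' theorem gives the answer directly: if you pick door 1 and the host opens door 3, then P(car at 2 | opens 3) = (1 · 1/3) / (1/2) = 2/3. The simulation below is a sketch that checks this empirically; the function name `monty_hall` and its parameters are hypothetical.

```python
import random

def monty_hall(trials=100_000, switch=True, seed=0):
    """Estimate the win rate of the 'switch' or 'stay' strategy by simulation."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        car = rng.randrange(3)   # door hiding the car
        pick = rng.randrange(3)  # contestant's first choice
        # Host opens a door that is neither the contestant's pick nor the car
        opened = rng.choice([d for d in range(3) if d != pick and d != car])
        if switch:
            # Move to the one remaining closed door
            pick = next(d for d in range(3) if d != pick and d != opened)
        wins += pick == car
    return wins / trials

print(monty_hall(switch=True))   # close to 2/3
print(monty_hall(switch=False))  # close to 1/3
```

Staying wins only when the first pick was already correct (probability 1/3); switching wins in every other case.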

Explanation of how misinterpreting DNA test probabilities can lead to erroneous conclusions about guilt.

A preview of the next class, which will apply these Bayesian concepts in a machine learning context.
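The DNA example rests on the same confusion as the Pope example: a small P(match | innocent) does not imply a small P(innocent | match). This error is commonly called the prosecutor's fallacy. The sketch below assumes one culprit among a pool of equally likely suspects, a certain match for the culprit, and a fixed random-match rate for everyone else; the pool size, match rate, and function name are hypothetical.

```python
def p_guilty_given_match(pool_size, false_match_rate):
    """Posterior probability of guilt given a DNA match.

    Assumes one culprit among `pool_size` equally likely suspects,
    P(match | guilty) = 1, and P(match | innocent) = `false_match_rate`.
    """
    prior = 1 / pool_size
    evidence = 1.0 * prior + false_match_rate * (1 - prior)
    return prior / evidence

# Hypothetical figures: one-in-a-million random match, a million possible suspects
p = p_guilty_given_match(pool_size=1_000_000, false_match_rate=1e-6)
print(round(p, 3))  # 0.5
```

A "one in a million" match rate sounds damning, yet with a million plausible suspects roughly one innocent person is also expected to match, so the match alone leaves guilt at about even odds.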