Lessons Learned on Benchmarking Big Data Platforms

- 1. Lessons Learned on Benchmarking Big Data Platforms Todor Ivanov [email protected] Goethe University Frankfurt am Main, Germany http://www.bigdata.uni-frankfurt.de/

- 2. Agenda • Evaluation of Hadoop using TPCx-HS • Comparing SQL-on-Hadoop Engines with TPC-H • Performance Evaluation of Spark SQL using BigBench • Evaluation of Big Data Platforms with HiBench 2

- 3. Contact Information http://www.bigdata.uni-frankfurt.de Robert-Mayer-Str. 10, 60325 Institut für Informatik, Fachbereich Informatik und Mathematik (FB12) Frankfurt Germany Telefon: 069-798-28212 Fax: 069-798-25123 3

- 4. Industrial Partner Technology Partners Member of Supported by 4

- 5. Research Areas Our lab is currently active in the following research areas: • Big Data Management Technologies • Graph Databases / Linked Open Data (LOD) • Data Analytics / Data Science • Big Data for Common Good 5

- 6. Big Data Management Technologies Systems: Benchmarking Big Data platforms for performance, scalability, elasticity, fault-tolerance … Benchmarks used: • Yahoo Cloud Service Benchmark (YCSB) - Evaluating the performance (read/write workloads) of NoSQL stores like Cassandra. • HiBench - 10 workloads for evaluating the Hadoop platform in terms of speed, throughput, HDFS bandwidth, system resource utilization and machine learning algorithms. • BigBench – Application level benchmark consisting of 30 queries implemented in Hive and Hadoop, based on the TPC-DS benchmark. • TPCx-HS – The first standard Big Data Benchmark for Hadoop, based on the TeraSort workload. 6

- 7. Member of the Standard Performance Evaluation Corporation (SPEC) SPEC is a non-profit corporation formed to establish, maintain and endorse a standardized set of relevant benchmarks that can be applied to the newest generation of high-performance computers. The RG Big Data Working Group is a forum for individuals and organizations interested in the big data benchmarking topic. List of all 52 Member Organizations: Advanced Strategic Technology LLC * ARM * bankmark UG * Barcelona Supercomputing Center * Charles University * Cisco Systems * Cloudera, Inc * Compilaflows * Delft University of Technology * Dell fortiss GmbH * Friedrich-Alexander-University Erlangen-Nuremberg * Goethe University Frankfurt * Hewlett-Packard * Huawei * IBM * Imperial College London * Indian Institute of Technology, Bombay * Institute for Information Industry, Taiwan * Institute of Communication and Computer Systems/NTUA * Intel Karlsruhe Institute of Technology * Kiel University * Microsoft * MIOsoft Corporation * NICTA * NovaTec GmbH * Oracle Purdue University * Red Hat * RWTH Aachen University * Salesforce.com * San Diego Supercomputing Center * San Francisco State University * SAP AG * Siemens Corporation * Technische Universität Darmstadt * Technische Universität Dresden * The MITRE Corporation Umea University * University of Alberta * University of Coimbra * University of Florence * University of Lugano * University of Minnesota * University of North Florida * University of Paderborn * University of Pavia * University of Stuttgart * University of Texas at Austin * University of Wuerzburg * VMware 7

- 8. Evaluation of Hadoop Clusters using TPCx-HS Todor Ivanov and Sead Izberovic

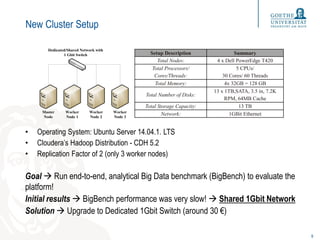

- 9. New Cluster Setup • Operating System: Ubuntu Server 14.04.1. LTS • Cloudera’s Hadoop Distribution - CDH 5.2 • Replication Factor of 2 (only 3 worker nodes) Goal Run end-to-end, analytical Big Data benchmark (BigBench) to evaluate the platform! Initial results BigBench performance was very slow! Shared 1Gbit Network Solution Upgrade to Dedicated 1Gbit Switch (around 30 €) 9 Setup Description Summary Total Nodes: 4 x Dell PowerEdge T420 Total Processors/ Cores/Threads: 5 CPUs/ 30 Cores/ 60 Threads Total Memory: 4x 32GB = 128 GB Total Number of Disks: 13 x 1TB,SATA, 3.5 in, 7.2K RPM, 64MB Cache Total Storage Capacity: 13 TB Network: 1GBit EthernetMaster Node Worker Node 1 Worker Node 2 Worker Node 3 Dedicated/Shared Network with 1 Gbit Switch

- 10. TPCx-HS: TPC Express for Hadoop Systems • X: Express, H: Hadoop, S: Sort • TPCx-HS [6],[7] is the first industry standard Big Data Benchmark released in July 2014 • Based on TeraSort and consists of 4 modules: HSGen, HSDataCkeck, HSSort & HSValidate • Scale Factors following stepped size model: 100GB, 300GB, 1TB, 3TB,10TB …. • The TPCx-HS specification defines three major metrics: – Performance metric (HSph@SF) – Price-performance metric ($/HSph@SF) – Power per performance metric (Watts/HSph@SF) [6] TPC website - http://www.tpc.org/tpcx-hs [7] Nambiar et al.,“Introducing TPCx-HS: The First Industry Standard for Benchmarking Big Data Systems,” in Performance Characterization and Benchmarking. Traditional to Big Data, Eds. Springer International Publishing, 2014. 10

- 11. Shared vs. Dedicated - Performance • Tested with 3 scale factors: 100GB, 300GB, 1TB • The shared setup is 5 times slower compared to the dedicated one. 11 2.98 8.93 29.30 0.62 1.67 5.85 0 10 20 30 40 100 GB 300 GB 1 TB Time(Hours) Time (Lower is better) Shared Dedicated 0.03 0.03 0.03 0.16 0.18 0.17 0 0.05 0.1 0.15 0.2 100 GB 300 GB 1 TB Metric(HSph@SF) HSph@SF Metric (Higher is better) Shared Dedicated

- 12. Shared vs. Dedicated - CPU • Performance Analysis Tool (PAT) (https://github.com/intel-hadoop/PAT) • Measured for 100GB scale factor. • The dedicated setup performs 5-6 times faster than the shared setup. 12 0 20 40 60 80 100 0 125 250 375 500 625 750 875 1000 1125 1250 1375 1500 1625 1750 1875 2000 2125 2250 2375 2500 2625 2750 2875 3000 3125 3250 3375 3500 3625 3750 3875 4000 4125 4250 4375 4500 CPU% Time (sec) Dedicated 1 Gbit - CPU Utilization % System % User % IOwait % 0 20 40 60 80 100 0 585 1170 1755 2340 2925 3510 4095 4680 5265 5850 6435 7020 7605 8190 8775 9360 9945 10530 11115 11700 12285 12870 13455 14040 14625 15210 15795 16380 16965 17550 18135 18720 19305 19890 20475 21060 CPU% Time (sec) Shared 1Gbit - CPU Utilization % System % User % IOwait %

- 13. Shared vs. Dedicated – Disk Bandwidth • Dedicated setup: on average read throughput is around 6.4 MB per second and write throughput is around 18.6 MB per second. • Shared setup: on average read throughput is around 1.4 MB per second and write throughput is around 4 MB per second. 13 0 20000 40000 60000 80000 100000 0 135 270 405 540 675 810 945 1080 1215 1350 1485 1620 1755 1890 2025 2160 2295 2430 2565 2700 2835 2970 3105 3240 3375 3510 3645 3780 3915 4050 4185 4320 4455 4590 KBytes Time (sec) Dedicated 1 Gbit - Disk Bandwidth KBytes Read per Second KBytes Written per Second 0 10000 20000 30000 40000 50000 60000 0 620 1240 1860 2480 3100 3720 4340 4960 5580 6200 6820 7440 8060 8680 9300 9920 10540 11160 11780 12400 13020 13640 14260 14880 15500 16120 16740 17360 17980 18600 19220 19840 20460 21080 KBytes Time (sec) Shared 1Gbit - Disk Bandwidth KBytes Read per Second KBytes Written per Second

- 14. Shared vs. Dedicated – Network • Dedicated setup: on average received 32.8MB per second and transmitted 30.6MB per second. • Shared setup: on average are received 7.1MB per second and transmitted 6.4MB per second • The dedicated setup achieves almost 5 times better network utilization. 14 0 10000 20000 30000 40000 50000 60000 70000 80000 0 130 260 390 520 650 780 910 1040 1170 1300 1430 1560 1690 1820 1950 2080 2210 2340 2470 2600 2730 2860 2990 3120 3250 3380 3510 3640 3770 3900 4030 4160 4290 4420 4550 KBytes Time (sec) Dedicated 1 Gbit - Network I/O Kbytes Received per Second KBytes Transmitted per Second 0 20000 40000 60000 80000 0 585 1170 1755 2340 2925 3510 4095 4680 5265 5850 6435 7020 7605 8190 8775 9360 9945 10530 11115 11700 12285 12870 13455 14040 14625 15210 15795 16380 16965 17550 18135 18720 19305 19890 20475 21060 KBytes Time (sec) Shared 1Gbit - Network I/O KBytes Received per Second KBytes Transmitted per Second

- 15. Next Steps • Improve network speed by using 2 NICs in parallel – Network bonding driver (Mode 0 (balance-rr) or Mode 2 (balance-xor)) – Switch supporting Link Aggregation (LACP)/Trunking • Re-run the TPCx-HS and compare with the current numbers. 16

- 16. Comparing SQL-on-Hadoop Engines with TPC-H Todor Ivanov

- 17. Motivation • Comparing SQL-on-Hadoop engines/technologies. – Very dynamic field, new commercial and open source products introduced every day. – Which one is most suitable for a particular use case? What are the similarities and differences in terms of design and features? • Analogously, growing number of file formats are available: – SequenceFile – RCfile – ORC – Parquet – Avro • What is the optimal use case for each format? What are the advantages compared to other formats? How to configure it in an optimal way? • Is there existing benchmark for such comparison and what are the important characteristics? 18

- 18. SQL-on-Hadoop Engines Open Source • Apache Hive • Hive-on-Tez • Hive-on-Spark • Apache Pig • Pig-on-Tez • Pig-on-Spark • Apache Spark SQL • Cloudera Impala • Apache Drill • Apache Tajo • Apache Phoenix • Phoenix-on-Spark • Facebook Presto • Apache Flink • Apache Kylin • Apache MRQL • Splout SQL • Cascading Lingual • Apache HAWQ Commercial • IBM Big SQL • Microsoft PolyBase • Teradata Aster SQL-MapReduce • SAP HANA Integration & Vora • Oracle Big Data SQL • RainStor • Jethro • Splice Machine 19

- 19. Why to start with TPC-H? • Standard TPC Data Warehousing (OLAP) benchmark since 1999 – Defines 5 Tables (parts, suppliers, customers, orders and lineitems) – Dynamic scale factor starting from 1GB upwards – Include 22 analytical queries • TPC-H implementation available for many engines – Complete: Hive, Spark SQL, Impala, Pig, Presto, Tajo, IBM Big SQL, SAP HANA – Partial: Drill, Phoenix 20

- 20. Cluster Setup • Operating System: Ubuntu Server 14.04.1. LTS • Cloudera’s Hadoop Distribution - CDH 5.2 • Replication Factor of 2 (only 3 worker nodes) • Hive version 0.13.1 • Spark version 1.4.0-SNAPSHOT (March 27th 2015) • TPC-H code (https://github.com/t-ivanov/D2F-Bench ) • At least 3 test repetitions • ORC and Parquet with default configurations 21 Setup Description Summary Total Nodes: 4 x Dell PowerEdge T420 Total Processors/ Cores/Threads: 5 CPUs/ 30 Cores/ 60 Threads Total Memory: 4x 32GB = 128 GB Total Number of Disks: 13 x 1TB,SATA, 3.5 in, 7.2K RPM, 64MB Cache Total Storage Capacity: 13 TB Network: Dedicated 1 GBit Ethernet

- 21. ORC vs. Parquet – Data Loading • Loading 1TB data in Hive • Loading data in Parquet is faster. Are they doing the same? No • Default configuration in Hive – ORC block size is 256MB with ZLIB compression. – Parquet block size is 128MB with no compression. 22 TABLE Number of Rows ORC Time (sec) Num. Files Total Size (GB) Parquet Time (sec) Num. Files Total Size (GB) Time Diff % Data Diff % part 200000000 887.5 14 3.19 725 50 11.95 22.41 275.05 partsupp 800000000 1056 1000 23.07 824 1000 104.07 28.16 351.04 supplier 10000000 584 2 0.43 484 6 1.39 20.66 221.07 customer 150000000 911.5 28 6.77 546 1000 22.29 66.94 229.04 orders 1500000000 2133 1000 34.35 1380.5 1000 127.29 54.51 270.54 lineitem 5999989709 6976.5 1000 151.24 4081.5 1000 342.69 70.93 126.59 nation 25 33 1 0.00 35 1 0.00 -5.71 93.20 region 5 32 1 0.00 32 1 0.00 0.00 15.87 Sum 220 610

- 22. ORC vs. Parquet – 1TB Data size • Hive finished successfully all 22 queries with acceptable Standard Deviation between the runs. • Hive with ORC is on average around 1.44 times faster than Hive with Parquet without any exceptions. (both using default configurations in Hive) • Spark SQL finished successfully only 12 queries. • Only 5 queries (Q1, Q6, Q14, Q15 and Q22) have Standard Deviation less than 3% for both file formats. • Spark SQL with Parquet is on average around 1.35 times faster than Spark with ORC except Q22. 23

- 23. Hive vs. Spark SQL – ORC – 1TB Data size • 6 queries (Q1, Q2, Q7, Q10, Q12 and Q16) perform slower than Hive. Only 5 Spark SQL queries are stable and perform faster than Hive! 24 Query Hive ORC Duration (sec) Hive ORC STDV % Spark SQL ORC Duration (sec) Spark SQL ORC STDV % Diff % Query 1 509.33 1.15 873.00 0.40 -41.66 Query 2 869.66 1.04 1292.66 0.29 -32.72 Query 3 2632 0.60 Query 4 1530.33 0.64 Query 5 4146.33 0.70 Query 6 555.33 0.28 341.75 1.13 62.50 Query 7 6075.66 1.59 10303.00 1.51 -41.03 Query 8 3928.33 0.56 Query 9 17396 0.54 Query 10 1673 0.96 2456.50 7.72 -31.89 Query 11 1560 0.23 Query 12 2519 2.17 4863.50 4.32 -48.21 Query 13 1229.33 1.39 925.00 0.54 32.90 Query 14 724.66 1.00 590.00 1.36 22.82 Query 15 1223 0.29 1073.00 0.34 13.98 Query 16 1418.33 0.18 1722.66 5.43 -17.67 Query 17 6038 1.61 Query 18 4393.66 0.80 Query 19 4286.66 0.82 1721.66 3.43 148.98 Query 20 1404.66 0.36 Query 21 8669.66 0.10 Query 22 922 0.47 436.50 1.19 111.23

- 24. Hive vs. Spark SQL – Parquet – 1TB Data size • 4 queries (Q2, Q7, Q12 and Q16) are slower than Hive. Only 6 Spark SQL queries are stable and perform faster than Hive! 25 Query Hive Parquet Duration (sec) Hive Parquet STDV % Spark SQL Parquet Duration (sec) Spark SQL Parquet STDV % Diff % Query 1 1772.33 0.94 530.75 0.73 233.93 Query 2 968.00 0.63 1267.33 7.69 -23.62 Query 3 3472.00 1.25 Query 4 2743.33 1.55 Query 5 4905.00 1.75 Query 6 908.33 1.04 260.75 2.45 248.35 Query 7 6649.66 1.52 10456.75 6.76 -36.41 Query 8 4796.66 1.14 Query 9 17972.66 0.96 Query 10 2491.00 0.26 2288.00 3.34 8.87 Query 11 1756.00 0.93 Query 12 3140.66 1.40 4154.66 2.22 -24.41 Query 13 1449.66 0.70 1399.75 5.01 3.57 Query 14 1280.00 0.61 532.50 2.32 140.38 Query 15 2546.00 1.06 805.00 1.94 216.27 Query 16 1622.66 0.98 1713.25 5.71 -5.29 Query 17 6986.00 1.60 Query 18 5914.00 0.69 Query 19 4721.66 1.27 1676.00 1.49 181.72 Query 20 2376.33 0.46 Query 21 11077.66 0.08 Query 22 1137.00 1.38 612.50 1.40 85.63

- 25. Lessons Learned • Parquet and ORC default configurations in Hive are not comparable. – How to configure and test them in a proper way? • Spark SQL is not stable and can run only a subset of the TPC-H queries. • Spark SQL with Parquet performs faster than Spark SQL with ORC. – Does Spark SQL use different Parquet configuration than Hive? (Answer: Yes) Next Steps Update the Parquet configuration and re-run the experiments for both Hive and Spark SQL. Generate the data with each engine using their default format settings. (Spark SQL) 26

- 26. Performance Evaluation of Spark SQL using BigBench Todor Ivanov and Max-Georg Beer Slides: http://www.slideshare.net/t_ivanov/wbdb-2015-performance-evaluation-of-spark- sql-using-bigbench

- 27. Practical Tips • Use BigBench to configure your cluster for Big Data applications. Tests a wide range of components and use cases. • Not all BigBench queries are suitable for scalability tests. • Spark SQL does not perform stable for complex queries and large data sizes. • Always look at the Standard Deviation (%) between the runs. You can identify faulty queries. 46

- 28. Evaluation of Cassandra (DSE) and Hadoop (CDH) using HiBench Todor Ivanov, Raik Niemann, Sead Izberovic, Marten Rosselli, Karsten Tolle and Roberto V. Zicari Slides: http://www.slideshare.net/t_ivanov/bdse-2015-evaluation-of-big-data-platforms-with- hibench

- 29. Contact Todor Ivanov [email protected] Goethe University Frankfurt am Main, Germany http://www.bigdata.uni-frankfurt.de/ 63

![TPCx-HS: TPC Express for Hadoop Systems

• X: Express, H: Hadoop, S: Sort

• TPCx-HS [6],[7] is the first industry standard Big Data

Benchmark released in July 2014

• Based on TeraSort and consists of 4 modules: HSGen,

HSDataCkeck, HSSort & HSValidate

• Scale Factors following stepped size model: 100GB, 300GB,

1TB, 3TB,10TB ….

• The TPCx-HS specification defines three major metrics:

– Performance metric (HSph@SF)

– Price-performance metric ($/HSph@SF)

– Power per performance metric (Watts/HSph@SF)

[6] TPC website - http://www.tpc.org/tpcx-hs

[7] Nambiar et al.,“Introducing TPCx-HS: The First Industry Standard for Benchmarking Big Data

Systems,” in Performance Characterization and Benchmarking. Traditional to Big Data, Eds. Springer

International Publishing, 2014.

10](https://image.slidesharecdn.com/lessonslearnedbigdatabenchmarks-150930165530-lva1-app6892/85/Lessons-Learned-on-Benchmarking-Big-Data-Platforms-10-320.jpg)