Lexical Analyzers and Parsers

- 3. Lexical analysis is the process of converting a sequence of characters into a sequence of tokens. Programs performing lexical analysis are called lexical analyzers or lexers. A lexer consists of a scanner and a tokenizer.
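
To make the character-to-token step concrete, here is a minimal hand-written sketch in Java. The class name `TinyLexer` and the token labels `NUMBER`, `IDENT`, and `SYMBOL` are hypothetical choices for illustration; they do not come from Lex or JLex.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration: TinyLexer and its token labels are not part of any tool.
class TinyLexer {
    // Scan the input left to right, grouping characters into labeled tokens.
    static List<String> tokenize(String input) {
        List<String> tokens = new ArrayList<>();
        int i = 0;
        while (i < input.length()) {
            char c = input.charAt(i);
            if (Character.isWhitespace(c)) {
                i++; // whitespace separates tokens but produces none
            } else if (Character.isDigit(c)) {
                int start = i;
                while (i < input.length() && Character.isDigit(input.charAt(i))) i++;
                tokens.add("NUMBER(" + input.substring(start, i) + ")");
            } else if (Character.isLetter(c)) {
                int start = i;
                while (i < input.length() && Character.isLetterOrDigit(input.charAt(i))) i++;
                tokens.add("IDENT(" + input.substring(start, i) + ")");
            } else {
                tokens.add("SYMBOL(" + c + ")"); // any other single character
                i++;
            }
        }
        return tokens;
    }

    public static void main(String[] args) {
        // prints [IDENT(x1), SYMBOL(=), NUMBER(42), SYMBOL(+), IDENT(y)]
        System.out.println(tokenize("x1 = 42 + y"));
    }
}
```

Even for three token kinds the loop is repetitive, which is the tedium that lexer generators aim to remove.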

- 5. A lexical analyzer breaks an input stream of characters into tokens. Writing lexical analyzers by hand can be a tedious process, so software tools have been developed to ease this task. Perhaps the best known such utility is Lex. Lex is a lexical analyzer generator for the UNIX operating system, targeted to the C programming language.

- 6. Lex takes a specially-formatted specification file containing the details of a lexical analyzer. This tool then creates a C source file for the associated table-driven lexer. The JLex utility is based upon the Lex lexical analyzer generator model. JLex takes a specification file similar to that accepted by Lex, then creates a Java source file for the corresponding lexical analyzer.

- 7. A JLex input file is organized into three sections, separated by double-percent directives (``%%''). A proper JLex specification has the following format.

- 8. The sections appear in this order: user code; ``%%''; JLex directives; ``%%''; regular expression rules. The ``%%'' directives distinguish sections of the input file and must be placed at the beginning of their line.

- 9. The user code section, the first section of the specification file, is copied directly into the resulting output file. This area of the specification provides space for the implementation of utility classes or return types. The JLex directives section is the second part of the input file. Here, macro definitions are given and state names are declared. The third section contains the rules of lexical analysis, each of which consists of three parts: an optional state list, a regular expression, and an action.

- 10. The user code is copied verbatim into the lexical analyzer source file that JLex outputs, at the top of the file. Therefore, if the lexer source file needs to begin with a package declaration or with the importation of an external class, the user code section should begin with the corresponding declaration. This declaration will then be copied onto the top of the generated source file.

- 11. The JLex directive section begins after the first ``%%'' and continues until the second ``%%'' delimiter. Each JLex directive should be contained on a single line and should begin that line.

- 12. The third part of the JLex specification consists of a series of rules for breaking the input stream into tokens. These rules specify regular expressions, then associate these expressions with actions consisting of Java source code. The rules have three distinct parts: the optional state list, the regular expression, and the associated action. This format is represented as follows. [<states>] <expression> { <action> }

- 13. If more than one rule matches strings from its input, the generated lexer resolves conflicts between rules by greedily choosing the rule that matches the longest string. Rules appearing earlier in the specification are given a higher priority by the generated lexer. If the generated lexical analyzer receives input that does not match any of its rules, an error will be raised.

- 14. Therefore, all input should be matched by at least one rule. This can be guaranteed by placing the following rule at the bottom of a JLex specification: . { java.lang.System.out.println("Unmatched input: " + yytext()); } The dot (.) will match any input except for the newline.
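
The conflict-resolution behavior described above (longest match wins, earlier rules win ties, a catch-all rule last) can be imitated with a short Java sketch. This is not how JLex works internally: JLex generates a table-driven lexer, whereas this sketch simply tries each `java.util.regex` pattern in turn. The class name `LongestMatchLexer` and the rule names `IF`, `IDENT`, `SPACE`, and `UNMATCHED` are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical illustration of the matching policy, not JLex's generated code.
class LongestMatchLexer {
    // Insertion order is rule priority: earlier rules win ties.
    private static final Map<String, Pattern> RULES = new LinkedHashMap<>();
    static {
        RULES.put("IF", Pattern.compile("if"));
        RULES.put("IDENT", Pattern.compile("[a-z]+"));
        RULES.put("SPACE", Pattern.compile("[ \\t]+"));
        RULES.put("UNMATCHED", Pattern.compile(".")); // catch-all; "." skips newlines
    }

    static List<String> tokenize(String input) {
        List<String> out = new ArrayList<>();
        int pos = 0;
        while (pos < input.length()) {
            String bestName = null;
            int bestLen = 0;
            for (Map.Entry<String, Pattern> rule : RULES.entrySet()) {
                Matcher m = rule.getValue().matcher(input);
                m.region(pos, input.length());
                // lookingAt() anchors at the region start; the strict ">" keeps
                // the earlier rule when two rules match the same length.
                if (m.lookingAt() && m.end() - pos > bestLen) {
                    bestLen = m.end() - pos;
                    bestName = rule.getKey();
                }
            }
            if (bestName == null) {
                // No rule matched (here: a newline), so raise an error.
                throw new IllegalStateException("No rule matches at position " + pos);
            }
            if (!bestName.equals("SPACE")) {
                out.add(bestName + ":" + input.substring(pos, pos + bestLen));
            }
            pos += bestLen;
        }
        return out;
    }

    public static void main(String[] args) {
        // prints [IF:if, IDENT:iffy, UNMATCHED:7]
        System.out.println(tokenize("if iffy 7"));
    }
}
```

Here `if` matches both `IF` and `IDENT` at the same length, so the earlier rule wins, while `iffy` goes to `IDENT` because that match is longer. Input containing a newline throws, since the dot does not match it.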

- 15. JLex will take a properly-formed specification and transform it into a Java source file for the corresponding lexical analyzer. A benchmark experiment was conducted, comparing the performance of a lexical analyzer generated by JLex to that of a hand-written lexical analyzer.

- 16. The comparison was made for lexical analyzers of a simple ``toy'' programming language. The hand-written lexical analyzer was written in Java. The experiment consisted of running each lexical analyzer on two source files written in the toy language, then measuring the time required to process these files.

- 17. The generated lexical analyzer proved to be quite quick, as the following results show.

  | Source File | JLex-Generated Lexical Analyzer | Hand-Written Lexical Analyzer |
  |---|---|---|
  | 177 lines | 0.42 seconds | 0.53 seconds |
  | 897 lines | 0.98 seconds | 1.28 seconds |

  The JLex lexical analyzer soundly outperformed the hand-written lexer.

- 18. One of the biggest complaints about table-driven lexical analyzers generated by programs like JLex is that these lexical analyzers do not perform as well as hand-written ones. Therefore, this experiment is particularly important in demonstrating the relative speed of JLex lexical analyzers.

- 19. The following is a (possibly incomplete) list of unimplemented features of JLex. 1) The regular expression lookahead operator is unimplemented, and not included in the list of special regular expression metacharacters. 2) The start-of-line operator (^) assumes the following nonstandard behavior: a match on a regular expression that uses this operator will cause the newline that precedes the match to be discarded.

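- 20. Build and run in four steps: compile JLex with javac Main.java; generate the lexer with java JLex.Main Sample.lex; compile the generated file with javac Sample.lex.java; run it with java Sample.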

- 21. Java CUP is a parser generator for Java. Java CUP compatibility is turned off by default, but can be activated with the following JLex directive: %cup

- 22. When given, this directive makes the generated scanner conform to the java_cup.runtime.Scanner interface. It has the same effect as the following three directives: %implements java_cup.runtime.Scanner %function next_token %type java_cup.runtime.Symbol

- 26. Parsing (syntactic analysis) is the process of analyzing a sequence of tokens to determine their grammatical structure with respect to a given (more or less) formal grammar.

- 27. Document Object Model (DOM): a platform- and language-independent standard object model for representing HTML, XML, and related formats. It is a tree-structure-based API: the DOM parser implements the DOM API and creates a DOM tree in memory for an XML document.

- 28. DOM supports navigation in any direction (e.g., parent and previous sibling) and allows for arbitrary modifications. An implementation must at least buffer the document that has been read so far (or some parsed form of it). DOM is best suited for applications where the document must be accessed repeatedly or out of sequence.

- 29. DOM parsers must have the entire tree in memory before any processing can begin, so the amount of memory used by a DOM parser depends entirely on the size of the input data.

- 30. When to use a DOM parser: to manipulate the document; to traverse the document back and forth; for small XML files. Drawback of a DOM parser: it consumes a lot of memory.
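
As a rough sketch of the DOM style, the following Java example uses the standard `javax.xml.parsers` and `org.w3c.dom` APIs to build a tree in memory, navigate it backwards and upwards, and modify it. The class name `DomSketch` and the sample XML are made up for illustration.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;

// Hypothetical illustration; only the javax.xml / org.w3c.dom calls are standard.
class DomSketch {
    // Parse the whole document into an in-memory DOM tree.
    static Document parse(String xml) throws Exception {
        DocumentBuilder builder = DocumentBuilderFactory.newInstance().newDocumentBuilder();
        return builder.parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
    }

    public static void main(String[] args) throws Exception {
        Document doc = parse("<books><book>Compilers</book><book>Parsing</book></books>");
        Element root = doc.getDocumentElement();

        // Navigate in any direction: jump to the last child, then step back and up.
        Node last = root.getLastChild();
        System.out.println(last.getPreviousSibling().getTextContent()); // Compilers
        System.out.println(last.getParentNode().getNodeName());         // books

        // Modify the tree in place.
        Element extra = doc.createElement("book");
        extra.setTextContent("Lexing");
        root.appendChild(extra);
        System.out.println(root.getChildNodes().getLength());           // 3
    }
}
```

Nothing can be queried until `parse` returns, which is why memory use grows with document size.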

- 31. SAX (Simple API for XML) is a serial-access parser API for XML. It provides a mechanism for reading data from an XML document and is a popular alternative to the DOM. The quantity of memory that a SAX parser must use in order to function is typically much smaller than that of a DOM parser.

- 32. Because of the event-driven nature of SAX, processing documents with it can often be faster than with DOM-style parsers.

- 33. When to use a SAX parser: when no structural modification is needed; for huge XML files. Drawback of a SAX parser: certain kinds of XML validation require access to the document in full.
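
For comparison with DOM, here is a small event-driven sketch using the standard `javax.xml.parsers.SAXParser` and `org.xml.sax.helpers.DefaultHandler` classes. The class name `SaxSketch`, the method `countElements`, and the sample XML are illustrative assumptions.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.helpers.DefaultHandler;

// Hypothetical illustration; only the javax.xml / org.xml.sax calls are standard.
class SaxSketch {
    // Count elements with the given name without ever building a tree.
    static int countElements(String xml, String name) throws Exception {
        int[] count = {0};
        DefaultHandler handler = new DefaultHandler() {
            @Override
            public void startElement(String uri, String localName, String qName, Attributes attrs) {
                // The parser calls back here for each start tag as it is read.
                if (qName.equals(name)) {
                    count[0]++;
                }
            }
        };
        SAXParserFactory.newInstance().newSAXParser()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)), handler);
        return count[0];
    }

    public static void main(String[] args) throws Exception {
        // prints 2
        System.out.println(countElements("<books><book/><book/><note/></books>", "book"));
    }
}
```

The handler keeps only a counter, so memory use does not depend on document size. The trade-off is that the handler cannot look back at elements it has already passed.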

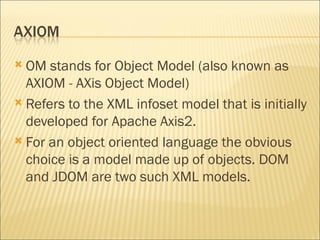

- 34. OM stands for Object Model (also known as AXIOM, the AXis Object Model). It refers to the XML infoset model that was initially developed for Apache Axis2. For an object-oriented language, the obvious choice is a model made up of objects. DOM and JDOM are two such XML models.

- 35. OM is conceptually similar to such an XML model by its external behavior but deep down it is very much different. OM is based on Pull Parsing instead of Push Parsing.

- 36. Pull parsing is a recent trend in XML processing. The previously popular XML processing frameworks such as SAX and DOM were "push-based", which means the control of the parsing was in the hands of the parser itself.

- 37. The push-based approach is fine and easy to use, but it is not efficient in handling large XML documents, since a complete model of the document is built in memory. Pull parsing inverts the control, so the parser only proceeds at the user's command. The user can decide to store or discard events generated by the parser.
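
The inversion of control can be sketched with StAX (`javax.xml.stream`), the standard Java pull-parsing API. This example does not use AXIOM itself. The class name `PullSketch`, the method `firstItems`, and the `item` element name are assumptions made for illustration.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamReader;

// Hypothetical illustration using StAX rather than AXIOM.
class PullSketch {
    // Collect the text of the first `limit` <item> elements, then stop pulling.
    static List<String> firstItems(String xml, int limit) throws Exception {
        XMLStreamReader reader =
                XMLInputFactory.newInstance().createXMLStreamReader(new StringReader(xml));
        List<String> items = new ArrayList<>();
        try {
            // The caller drives the parser: nothing is read until next() is called.
            while (items.size() < limit && reader.hasNext()) {
                int event = reader.next();
                if (event == XMLStreamConstants.START_ELEMENT
                        && reader.getLocalName().equals("item")) {
                    items.add(reader.getElementText()); // store this event's data
                }
                // every other event is simply discarded
            }
        } finally {
            reader.close();
        }
        return items;
    }

    public static void main(String[] args) throws Exception {
        // prints [a, b]
        System.out.println(firstItems("<list><item>a</item><item>b</item><item>c</item></list>", 2));
    }
}
```

Because the loop stops calling `next()` once it has enough items, the rest of the document is never processed.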

- 38. Credit goes to Mr Elliot Joel Berk, who wrote JLex; to the Department of Computer Science, Princeton University, for maintaining JLex; and to all the others who contributed to these projects. Special thanks go to Dr. Damith Karunaratne for giving me this opportunity.
