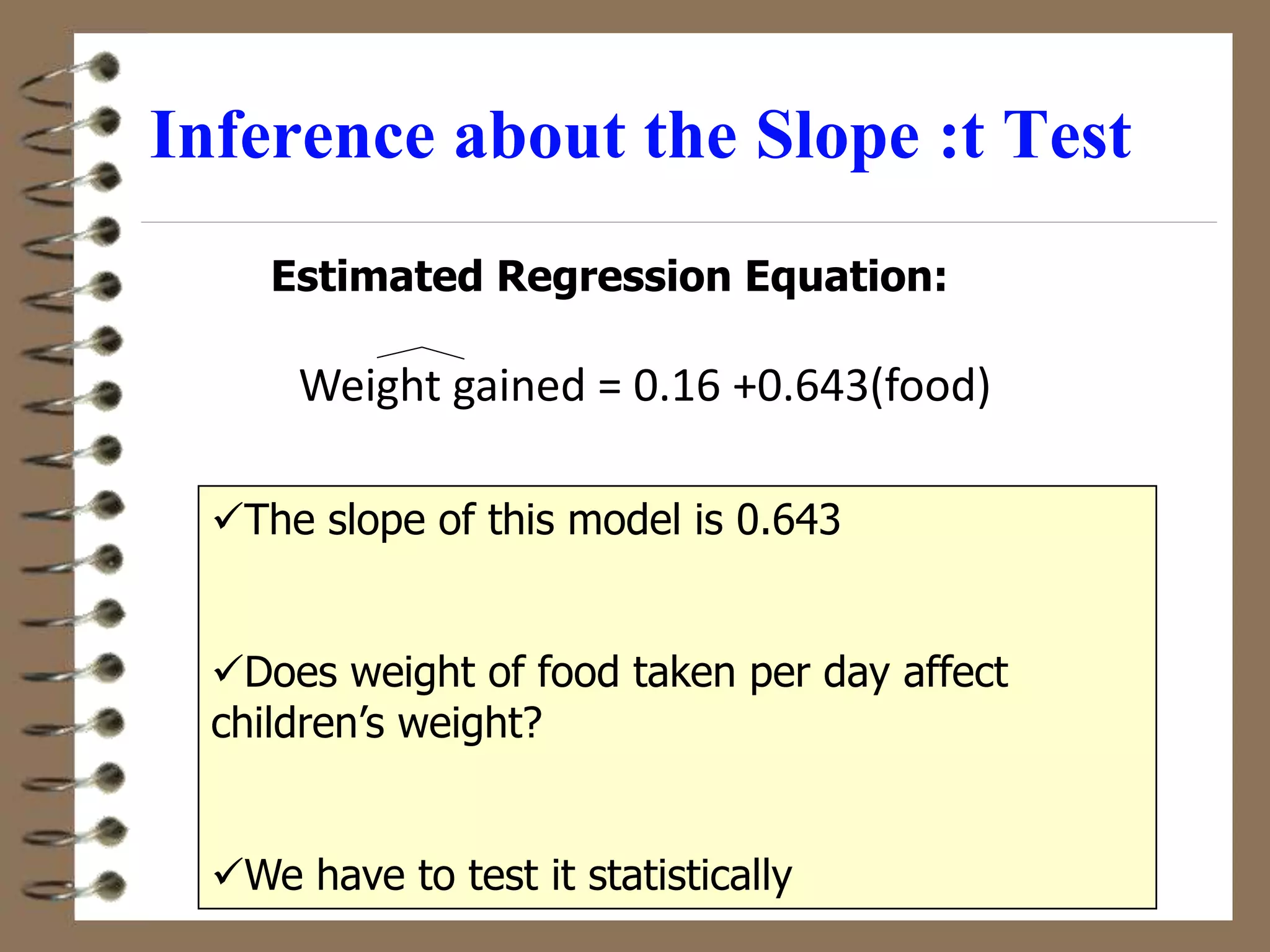

Linear regression was used to analyze the relationship between daily food intake (independent variable) and weight gain (dependent variable) in a sample of 20 children. The regression equation obtained was: Weight gained = 0.16 + 0.643(food weight). This indicates that for each additional 1kg of daily food intake, a child's weight increases by 0.643kg on average. The coefficient of determination (R2) was 0.81, meaning 81% of the variation in children's weight gain was explained by differences in daily food intake.

![Calculating the Correlation Coefficient

yy

xx

xy SS

SS

SS

y

y

x

x

y

y

x

x

r /

]

)

(

][

)

(

[

)

)(

(

2

2

where:

r = Sample correlation coefficient

n = Sample size

x = Value of the ‘independent’ variable

y = Value of the ‘dependent’ variable

]

)

(

)

(

][

)

(

)

(

[ 2

2

2

2

y

y

n

x

x

n

y

x

xy

n

r

Sample correlation coefficient:

or the algebraic equivalent:](https://image.slidesharecdn.com/linearregression-230404141305-7fe215cc/75/Linear-regression-ppt-9-2048.jpg)

![0

10

20

30

40

50

60

70

0 2 4 6 8 10 12 14

0.886

]

(321)

][8(14111)

(73)

[8(713)

(73)(321)

8(3142)

]

y)

(

)

y

][n(

x)

(

)

x

[n(

y

x

xy

n

r

2

2

2

2

2

2

Child weight, x

Child

Height,

y

Calculation Example

r = 0.886 → relatively strong positive

linear association between x and y](https://image.slidesharecdn.com/linearregression-230404141305-7fe215cc/75/Linear-regression-ppt-11-2048.jpg)