Linux Synchronization Mechanism: RCU (Read-Copy-Update)

- 1. Linux Synchronization Mechanism: RCU (Read-Copy-Update), Adrian Huang, Apr 2023

- 2. Agenda
  - [Overview] rwlock (reader-writer spinlock) vs RCU
  - RCU Implementation Overview
    - High-level overview
    - RCU: List Manipulation – Old and New data
    - Reader/Writer synchronization: six basic APIs
      - Reader: rcu_read_lock() & rcu_read_unlock(), rcu_dereference()
      - Writer: rcu_assign_pointer(), synchronize_rcu() & call_rcu()
  - Classic RCU vs Tree RCU
  - RCU Flavors
  - RCU Usage Summary & RCU Case Study

- 3. [Overview] rwlock (reader-writer spinlock) vs RCU
  - [Figure: with rwlock_t, readers (task 0 … task N) enter the critical section with read_lock()/read_unlock() and writers with write_lock()/write_unlock(). With RCU, readers (subscribers) use rcu_read_lock()/rcu_read_unlock(). The writer/updater (publisher) takes a spinlock to ensure only one writer, updates or removes the data pointer, and releases the spinlock. It then waits for readers with synchronize_rcu() or call_rcu(), and finally frees memory if needed (deferred destruction).]
  - rwlock_t
    1. Mutual exclusion between readers and a writer
    2. A writer might be starved
  - RCU
    1. Readers never block: there is no mutual exclusion between readers and a writer
    2. No specific *lock* data structure

- 4. [Overview] rwlock (reader-writer spinlock) vs RCU (reference: section 9.5 of *Is Parallel Programming Hard, And, If So, What Can You Do About It?*)
  - Question: how does RCU allow concurrent readers alongside one writer, with no mutual exclusion between them?
  - rwlock: mutual exclusion between reader and writer; the writer might be starved
  - RCU: a non-blocking synchronization mechanism for readers; no specific *lock* data structure

- 5. Agenda (repeat of slide 2; next section: RCU Implementation Overview)

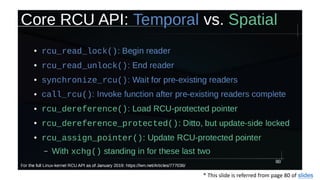

- 6. Six important APIs (RCU manipulates pointers)

  | Role | Primitive | Purpose |
  |---|---|---|
  | Reader | rcu_read_lock() | Start an RCU read-side critical section |
  | Reader | rcu_read_unlock() | End an RCU read-side critical section |
  | Reader | rcu_dereference() | Safely load an RCU-protected pointer |
  | Updater | synchronize_rcu() | Wait for all pre-existing RCU read-side critical sections to complete |
  | Updater | call_rcu() | Invoke the specified function after all pre-existing RCU read-side critical sections complete |
  | Updater | rcu_assign_pointer() | Safely update an RCU-protected pointer |

- 7. RCU Implementation Overview: Reader
  - [Figure: the reader/updater timeline from slide 3. The updater's work is split into a removal phase (spinlock, update or remove the pointer, spin_unlock) and a reclamation phase (free memory after synchronize_rcu() or call_rcu()).]
  - RCU is often used as a replacement for reader-writer locking.
  - Lockless readers
    - In classic (non-preemptible) RCU, rcu_read_lock() simply disables preemption. No lock such as a spinlock or mutex is taken.
    - Readers never wait for updates (non-blocking), which gives low overhead and excellent scalability.

- 8. RCU Implementation Overview: Typical RCU Update Sequence
  - [Figure: the same reader/updater timeline as slide 7.]
  - [Writer] Waiting for readers
    - Removal phase: remove references to data items, possibly by replacing them with references to new versions of these items.
      - This phase can run concurrently with readers. A reader sees either the old or the new version of the data structure, never a partially updated reference.
    - synchronize_rcu() and call_rcu() wait for readers to exit their critical sections. synchronize_rcu() blocks; call_rcu() registers a callback that is invoked after the active readers have completed.
    - Reclamation phase: reclaim the old data items.
  - Quiescent State (QS): [per core] a point reached after that core's pre-existing readers are done.
    - A context switch is a valid quiescent state.
  - Grace Period (GP): a grace period completes once every core has passed through a quiescent state.

- 9. RCU: List Manipulation – Old and New data (insertion)
  1. Allocate and fill in a new element (8) while the list is Head → 5 → 2 → 9.
  2. Insert it into the list: Head → 5 → 2 → 8 → 9.
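The two insertion steps above can be sketched in userspace C. This is a minimal illustration, not kernel code. It makes the following assumptions:
- C11 atomics are available.
- A hypothetical singly linked `struct node` replaces the kernel's `list_head`/`list_add_rcu()`.
- A release store stands in for `rcu_assign_pointer()`, and acquire loads stand in for `rcu_dereference()`.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical list element; the kernel would use struct list_head with
 * list_add_rcu() instead of this hand-rolled singly linked list. */
struct node {
    int key;
    _Atomic(struct node *) next;
};

/* Step 1: allocate and fill the new element while it is still private to
 * the updater, so no reader can observe it half-initialized. */
static struct node *node_new(int key, struct node *next)
{
    struct node *n = malloc(sizeof(*n));
    if (n == NULL)
        abort();
    n->key = key;
    atomic_init(&n->next, next);
    return n;
}

/* Step 2: insert it after prev. The updater is assumed to hold the update
 * lock, so a relaxed load of prev->next is enough. The release store stands
 * in for rcu_assign_pointer(): a reader that sees the new pointer also sees
 * the fields written in step 1. */
static struct node *insert_after(struct node *prev, int key)
{
    struct node *n = node_new(key,
        atomic_load_explicit(&prev->next, memory_order_relaxed));
    atomic_store_explicit(&prev->next, n, memory_order_release);
    return n;
}

/* Reader traversal: each acquire load stands in for rcu_dereference().
 * Copies up to max keys (skipping the sentinel head) into out. */
static int list_keys(struct node *head, int *out, int max)
{
    int count = 0;
    struct node *p = atomic_load_explicit(&head->next, memory_order_acquire);

    while (p != NULL && count < max) {
        out[count++] = p->key;
        p = atomic_load_explicit(&p->next, memory_order_acquire);
    }
    return count;
}
```

Build Head → 5 → 2 → 9 with `insert_after()`, then call `insert_after(n2, 8)` on the node holding 2. A reader then traverses 5, 2, 8, 9. The order of the two stores is the whole point: the element is complete before it becomes reachable.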

- 10. RCU: List Manipulation – Old and New data (removal)
  1. Remove element 8 from Head → 5 → 2 → 8 → 9, leaving Head → 5 → 2 → 9.
  2. [Figure: the removal runs under a spinlock (only one writer) and is followed by synchronize_rcu() or call_rcu() before the memory is freed. Meanwhile element 2 points either to 8 (old data) or to 9 (new data).]
  - Readers read either the old data or the new data.

- 11. RCU: List Manipulation – Old and New data (removal, continued; reference: section 9.5 of *Is Parallel Programming Hard, And, If So, What Can You Do About It?*)
  - [Figure: readers that started before the removal may still read the old data (2 → 8); readers that start afterwards read the new data (2 → 9). Legend: RCU reader; old data is read; new data is read.]
  - Readers read either the old data or the new data.
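A matching sketch of the removal step, under the same assumptions as an insertion sketch would need:
- a hypothetical `struct node`;
- C11 release/acquire operations as stand-ins for `rcu_assign_pointer()` and `rcu_dereference()`.

It illustrates the "old or new data" point. Unlinking 8 does not disturb a reader already positioned on 8, which still reaches 9 (old data). A reader that starts from 5 afterwards sees 5 → 2 → 9 (new data). Freeing 8 is left to the caller, after a grace period.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical list element (not the kernel's list_head). */
struct node {
    int key;
    _Atomic(struct node *) next;
};

static struct node *node_new(int key, struct node *next)
{
    struct node *n = malloc(sizeof(*n));
    if (n == NULL)
        abort();
    n->key = key;
    atomic_init(&n->next, next);
    return n;
}

/* Removal phase: unlink victim, which must currently follow prev. The
 * victim's own next pointer is left intact, so a reader already standing
 * on it can finish its traversal. The victim is NOT freed here; in the
 * kernel, kfree() waits for synchronize_rcu() or runs via call_rcu(). */
static void remove_after(struct node *prev, struct node *victim)
{
    struct node *succ = atomic_load_explicit(&victim->next, memory_order_relaxed);
    atomic_store_explicit(&prev->next, succ, memory_order_release);
}

/* Reader: collect keys starting at p (inclusive); acquire loads stand in
 * for rcu_dereference(). */
static int keys_from(struct node *p, int *out, int max)
{
    int count = 0;
    while (p != NULL && count < max) {
        out[count++] = p->key;
        p = atomic_load_explicit(&p->next, memory_order_acquire);
    }
    return count;
}
```

In the usage below, a reader that grabbed the pointer to 8 before the removal still sees 8 → 9. A fresh traversal from 5 sees only 5 → 2 → 9. Only after both kinds of reader are guaranteed to be done may 8 be freed.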

- 12. Reader/Writer synchronization
  - RCU reader: `rcu_read_lock(); ptr = rcu_dereference(shared_abc); printk("%d\n", ptr->number); rcu_read_unlock();`
  - RCU writer with a valid pointer assignment:
    - take the spinlock (this ensures only one writer);
    - `rcu_assign_pointer(shared_abc, ptr);`
    - release the spinlock;
    - `synchronize_rcu();` (waits for readers to finish);
    - optionally free memory, which is now safe.
  - RCU writer with a NULL pointer assignment and memory freeing: take the spinlock, `rcu_assign_pointer(shared_abc, NULL);`, release the spinlock, then `synchronize_rcu(); kfree(tmp);`

- 13. RCU Spatial/Temporal Synchronization: Sample Code (RCU reader and RCU writer/updater; reference: *Is Parallel Programming Hard, And, If So, What Can You Do About It?*)

- 14. RCU Spatial/Temporal Synchronization (reference: *Is Parallel Programming Hard, And, If So, What Can You Do About It?*)
  - [Figure: in the address space, curconfig first points to a structure holding (5, 25) and later to one holding (9, 81); time flows downward.]
  - Readers before the update: `rcu_read_lock(); mcp = rcu_dereference(curconfig); *cur_a = mcp->a; /* 5 */ *cur_b = mcp->b; /* 25 */ rcu_read_unlock();`
  - Updater: `mcp = kmalloc(…); rcu_assign_pointer(curconfig, mcp); synchronize_rcu(); … kfree(old_mcp);`. The call to synchronize_rcu() spans the grace period.
  - Later readers run the same code and read 9 and 81.
  - Temporal synchronization: [Reader] rcu_read_lock() / rcu_read_unlock(); [Writer/Updater] synchronize_rcu() / call_rcu()
  - Spatial synchronization: [Reader] rcu_dereference(); [Writer/Updater] rcu_assign_pointer()

- 15. RCU Spatial/Temporal Synchronization (same figure and notes as slide 14). Key point: RCU combines temporal and spatial synchronization in order to approximate reader-writer locking.
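The `curconfig` example can be approximated in userspace with C11 atomics. This is a hedged sketch with the following assumptions:
- `struct myconfig`, `get_config()` and `set_config()` are hypothetical names.
- The acquire load and the exchange stand in for `rcu_dereference()` and `rcu_assign_pointer()`, which covers the spatial part.
- The temporal part, the grace period before the old copy may be freed, appears only in comments because the demo is single-threaded.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdlib.h>

struct myconfig {
    int a;
    int b;
};

/* Hypothetical RCU-protected global pointer (curconfig on the slide);
 * static storage zero-initializes it to NULL. */
static _Atomic(struct myconfig *) curconfig;

/* Reader: a single acquire load (stand-in for rcu_dereference()), then
 * both fields come from the same snapshot, so (a, b) is never a mix of old
 * and new values. In the kernel this runs between rcu_read_lock() and
 * rcu_read_unlock(). */
static void get_config(int *cur_a, int *cur_b)
{
    struct myconfig *mcp = atomic_load_explicit(&curconfig, memory_order_acquire);
    *cur_a = mcp->a;
    *cur_b = mcp->b;
}

/* Updater: fill a fresh copy, then publish it. The exchange has release
 * semantics (stand-in for rcu_assign_pointer()) and also hands back the
 * old copy. The caller may free that copy only after a grace period
 * (synchronize_rcu() in the kernel). */
static struct myconfig *set_config(int a, int b)
{
    struct myconfig *mcp = malloc(sizeof(*mcp));
    if (mcp == NULL)
        abort();
    mcp->a = a;
    mcp->b = b;
    return atomic_exchange_explicit(&curconfig, mcp, memory_order_acq_rel);
}
```

Usage mirrors the slide. Readers see (5, 25) until `set_config(9, 81)` publishes the new copy. The returned old pointer is freed only after the grace period, which a single-threaded demo satisfies trivially.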

- 16. Reader: rcu_read_lock() & rcu_read_unlock() (kernel source reference: 2.6.24; reader/writer code as on slide 12)
  - They simply disable/enable preemption when entering/exiting the RCU read-side critical section.
  - [Why] A QS is detected by a context switch. With preemption disabled, a CPU cannot context-switch while one of its readers is inside a critical section.
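A toy model of why disabling preemption is enough for classic RCU readers. The names `struct sim_cpu`, `sim_rcu_read_lock()` and `sim_try_preempt()` are hypothetical. The model tracks only a preemption count and a `passed_quiesc`-style flag. It ignores the rest of the 2.6.24 code: sparse and lockdep annotations, interrupts, and the bh flavor.

```c
#include <assert.h>
#include <stdbool.h>

/* Toy per-CPU state (hypothetical), modelling classic RCU where
 * rcu_read_lock() is essentially preempt_disable(). */
struct sim_cpu {
    int preempt_count;   /* > 0 while inside any read-side critical section */
    bool passed_quiesc;  /* set when the CPU context-switches */
};

static void sim_rcu_read_lock(struct sim_cpu *c)   { c->preempt_count++; }
static void sim_rcu_read_unlock(struct sim_cpu *c) { c->preempt_count--; }

/* Attempt an involuntary context switch. With preemption disabled it is
 * deferred. When it does happen, no reader can be running on this CPU,
 * so the switch is recorded as a QS (like rcu_qsctr_inc()). A voluntary
 * schedule() inside the critical section would be a bug in the kernel;
 * the model does not capture that. */
static bool sim_try_preempt(struct sim_cpu *c)
{
    if (c->preempt_count > 0)
        return false;
    c->passed_quiesc = true;
    return true;
}
```

Nested `sim_rcu_read_lock()` calls simply raise the count. A QS can be recorded only after the outermost `sim_rcu_read_unlock()`.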

- 17. Reader: rcu_read_lock() & rcu_read_unlock() (kernel source reference: 2.6.24; reader/writer code as on slide 12)
  - __acquire() / __release(): annotations for sparse, the kernel's static checker ("semantic parser").
    - Sparse can also check RCU-protected pointers once they are annotated with the __rcu marker.
    - Reference: PROPER CARE AND FEEDING OF RETURN VALUES FROM rcu_dereference()

- 18. Reader: rcu_read_lock() & rcu_read_unlock() (kernel source reference: 2.6.24; reader/writer code as on slide 12)
  - rcu_read_acquire() / rcu_read_release(): annotations for lockdep, the runtime locking correctness validator.
    - They help catch programming errors such as deadlocks.

- 19. Reader: rcu_read_lock() & rcu_read_unlock() (kernel source reference: 2.6.24; reader/writer code as on slide 12)
  - It is illegal to block while in an RCU read-side critical section. Exception: with CONFIG_PREEMPT_RCU=y, read-side critical sections may be preempted, although explicit blocking is still illegal.

- 20. Reader: rcu_read_lock() & rcu_read_unlock() (kernel source reference: 2.6.24; reader/writer code as on slide 12)
  - rcu_assign_pointer() and rcu_dereference() invocations communicate spatial synchronization via stores to and loads from the RCU-protected pointer.

- 21. Reader: rcu_dereference() (kernel source reference: 2.6.24)
  - RCU reader: `rcu_read_lock(); ptr = rcu_dereference(shared_abc); printk("%d\n", ptr->number); rcu_read_unlock();`
  - x86 memory model: Total Store Order (TSO). x86 is a relatively strongly ordered system.
    - Order is preserved for Load -> Load, Load -> Store and Store -> Store.
    - Store -> Load might be reordered because of the store buffer.

- 22. Writer: rcu_assign_pointer() (kernel source reference: 2.6.24)
  - RCU writer: take the spinlock, `rcu_assign_pointer(shared_abc, ptr);`, release the spinlock, `synchronize_rcu();`, then optionally free memory.
  - The same x86 TSO ordering notes as on slide 21 apply.

- 23. Writer: synchronize_rcu() & call_rcu()
  - [Figure: the writer takes a spinlock, calls rcu_assign_pointer(shared_abc, ptr) and releases the spinlock. This is the updater, which ensures only one writer during the removal phase. It then calls synchronize_rcu() or call_rcu() to wait for the readers. Finally it optionally frees memory, which is the reclaimer: safe to free memory in the reclamation phase.]
  - These calls mark the end of the updater code and the beginning of the reclaimer code.
  - [Synchronous] synchronize_rcu()
    - Blocks until all pre-existing RCU read-side critical sections on all CPUs have completed.
    - Is built on top of call_rcu().
  - [Asynchronous] call_rcu()
    - Queues a callback for invocation after a grace period.
    - [Scenarios] the RCU updater must not block, or update-side performance is critically important.

- 24. Why the name RCU (read-copy-update)? RCU writer: create a copy.

- 25. Why the name RCU (read-copy-update)? RCU writer: update the copy.

- 26. Why the name RCU (read-copy-update)? RCU writer: replace the old entry with the newly created one.

- 27. RCU: Quiescent State & Grace Period
  - [Figure: a timeline with readers on Core 0 to Core 4 and Core 5 as the RCU updater. The updater calls list_del_rcu() (removal). synchronize_rcu() then spans the grace period, and kfree() follows (reclamation). Readers that started before list_del_rcu() may read the old data; readers that start later read the new data.]

- 28. RCU: Quiescent State & Grace Period (same figure as slide 27; quotes from the book *Is Parallel Programming Hard, And, If So, What Can You Do About It?*)
  - Quiescent State (QS): "A point in the code where there can be no references held to RCU-protected data structures, which is normally any point outside of an RCU read-side critical section."
    - An RCU read-side critical section is the range between rcu_read_lock() and rcu_read_unlock().
  - Grace Period (GP): all threads (cores) pass through at least one quiescent state.

- 29. RCU: Quiescent State & Grace Period (same figure as slide 27). How does synchronize_rcu() detect a grace period?

- 30. Grace Period: Waiting for readers – 6 approaches
  1. Reference counter
  2. CPU register
  3. Wait for a fixed period of time
  4. Wait forever
  5. Avoid the periodic crashes
  6. Quiescent-state-based reclamation (QSBR)

- 31. Grace Period: Waiting for readers – Approach #1: Reference Counter (figure reference: *Is Parallel Programming Hard, And, If So, What Can You Do About It?*)
  - A reference counter (shared data) is updated by rcu_read_lock() and rcu_read_unlock().
    - Scalability problem due to cache bouncing.
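A sketch of Approach #1 under simplifying assumptions:
- one global C11 `atomic_int` (hypothetical name `rcu_refcnt`) shared by all readers;
- sequentially consistent atomics throughout, to keep the ordering argument simple.

It shows both the idea and its weaknesses. Every reader on every CPU writes the same counter, which is the cache bouncing noted above. A steady stream of new readers can also keep the count from ever reaching zero.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical global reader count shared by all CPUs. */
static atomic_int rcu_refcnt;

static void refcnt_read_lock(void)
{
    atomic_fetch_add(&rcu_refcnt, 1);   /* seq_cst: ordered before the reader's loads */
}

static void refcnt_read_unlock(void)
{
    atomic_fetch_sub(&rcu_refcnt, 1);
}

/* After publishing the new pointer, the updater may treat the grace period
 * as over once no reader holds a reference. A real synchronize would spin
 * on this check, and could spin forever under continuous read traffic. */
static bool refcnt_no_readers(void)
{
    return atomic_load(&rcu_refcnt) == 0;
}
```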

- 32. Grace Period: Waiting for readers – Approach #2: CPU Register
  - Check each CPU's registers instead of accessing shared data such as a reference counter.
    - Check each CPU's program counter (PC). The updater polls each relevant PC; if the PC is not within read-side code, the corresponding CPU is in a quiescent state.
    - A grace period is complete once every CPU's PC has been observed outside of read-side code.
  - Challenges
    - Readers might invoke other functions.
    - Code-motion optimizations.

- 33. Grace Period: Waiting for readers – Approach #3: Wait for a fixed period of time
  - Wait long enough to comfortably exceed the lifetime of any reasonable reader.
    - An unreasonable (long-running) reader breaks this approach.

- 34. Grace Period: Waiting for readers – Approach #4: Wait forever
  - This accommodates even the unreasonable reader.
  - Bad reputation: it leaks memory.
    - Memory leaks often require untimely and inconvenient reboots.
    - It works well in a high-availability cluster where systems are periodically crashed to ensure that the cluster really remains highly available.

- 35. Grace Period: Waiting for readers – Approach #5: Avoid the periodic crashes
  - Covered by stop-the-world garbage collectors.
  - In today's always-connected, always-on world, stopping the world can gravely degrade response times.

- 36. Grace Period: Waiting for readers – Approach #6: Quiescent-state-based reclamation (QSBR)
  - Numerous applications already have states (termed quiescent states) that can be reached only after all pre-existing readers are done.
    - Transaction-processing application: the time between a pair of successive transactions might be a quiescent state.
    - Non-preemptive OS kernel: a context switch can be a quiescent state.
    - [Non-preemptive OS kernel] An RCU reader must be prohibited from blocking while referencing global data.
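Approach #6 can be sketched with a per-thread counter. This is a minimal illustration, not the kernel's implementation. It assumes a fixed `NR_THREADS`, hypothetical function names, and C11 atomics. Unlike Approach #1, each reader writes only its own counter, and the read-side critical section itself costs nothing.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

#define NR_THREADS 4  /* hypothetical fixed number of reader threads */

/* Per-thread counter, bumped each time the thread passes through a
 * quiescent state (e.g. between two transactions, or at a context switch
 * in a non-preemptive kernel). Each thread writes only its own slot. */
static atomic_ulong qs_count[NR_THREADS];

static void qsbr_quiescent_state(int tid)
{
    atomic_fetch_add(&qs_count[tid], 1);
}

/* Start of a grace period: snapshot every thread's counter. */
static void qsbr_snapshot(unsigned long snap[NR_THREADS])
{
    for (int i = 0; i < NR_THREADS; i++)
        snap[i] = atomic_load(&qs_count[i]);
}

/* The grace period has elapsed once every thread has passed through at
 * least one quiescent state since the snapshot. A thread that never
 * reports (e.g. idle) keeps the grace period open forever. */
static bool qsbr_grace_period_elapsed(const unsigned long snap[NR_THREADS])
{
    for (int i = 0; i < NR_THREADS; i++)
        if (atomic_load(&qs_count[i]) == snap[i])
            return false;
    return true;
}
```

An updater would unlink the old data, call `qsbr_snapshot()`, wait until `qsbr_grace_period_elapsed()` returns true, and only then free the data.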

- 37. Grace Period: Waiting for readers – QSBR: [Concept] implementation for a non-preemptive Linux kernel (reference: *Is Parallel Programming Hard, And, If So, What Can You Do About It?*)
  - [Not production quality] sched_setaffinity() causes the current thread to execute on the specified CPU, which forces the destination CPU to execute a context switch.

- 38. [Concept] QSBR: non-production, non-preemptible implementation (reference: page 142 of *Is Parallel Programming Hard, And, If So, What Can You Do About It?*)
  - synchronize_rcu() forces each destination CPU to execute a context switch. Once it has done so, any RCU reader that was running on that CPU has completed.
  - rcu_read_lock() & rcu_read_unlock() → disable/enable preemption.
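The migration loop of the toy `synchronize_rcu()` above can be mimicked in userspace with the glibc calls `sched_getaffinity()` and `sched_setaffinity()`. These are Linux-specific and need `_GNU_SOURCE`. This only illustrates the loop, and the function name is hypothetical. A userspace thread running on a CPU says nothing about readers on that CPU, so this function provides no RCU guarantee by itself.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sched.h>

/* Migrate the calling thread onto every CPU it is allowed to use, then
 * restore its original affinity. In a non-preemptive kernel, getting to
 * run on a CPU implies that CPU context-switched, so its pre-existing
 * readers are done. In userspace there is no such implication.
 * Returns the number of CPUs visited, or -1 on error. */
static int toy_visit_all_cpus(void)
{
    cpu_set_t allowed, one;
    int visited = 0;

    if (sched_getaffinity(0, sizeof(allowed), &allowed) != 0)
        return -1;
    for (int cpu = 0; cpu < CPU_SETSIZE; cpu++) {
        if (!CPU_ISSET(cpu, &allowed))
            continue;
        CPU_ZERO(&one);
        CPU_SET(cpu, &one);
        if (sched_setaffinity(0, sizeof(one), &one) != 0)
            return -1;
        visited++;
    }
    if (sched_setaffinity(0, sizeof(allowed), &allowed) != 0)
        return -1;
    return visited;
}
```

The cost is visible even in this toy. Every grace period touches every CPU and migrates the updater, which is why the slides label this approach "not production quality".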

- 39. Agenda
  - [Overview] rwlock (reader-writer spinlock) vs RCU
  - RCU Implementation Overview
    - High-level overview
    - RCU: List Manipulation – Old and New data
    - Reader/Writer synchronization: six basic APIs
      - Reader: rcu_read_lock() & rcu_read_unlock(), rcu_dereference()
      - Writer: rcu_assign_pointer(), synchronize_rcu() & call_rcu()
  - Classic RCU vs Tree RCU
    - Will discuss implementation details of classic RCU *only*
  - RCU Flavors
  - RCU Usage Summary & RCU Case Study

- 40. Classic RCU (< 2.6.29) and Tree RCU (>= 2.6.29)
  - Classic RCU (reference code: v2.6.24)
    - [Figure: a single global struct rcu_ctrlblk rcu_ctrlblk. It is protected by rcu_ctrlblk.lock (a spinlock), and its rcu_ctrlblk.cpumask has one bit per CPU (CPU 0 … CPU N).]
    - Global cpumask: each bit represents one core.
    - A grace period is complete when rcu_ctrlblk.cpumask = 0.
    - Scalability problems appear as the number of cores increases:
      - Lock contention: cores reporting a QS contend to update rcu_ctrlblk.cpumask.
      - Cache bouncing caused by frequent writes.
  - Tree RCU (reference code: v2.6.29)
    - [Figure: CPUs are grouped under leaf struct rcu_node entries, each with its own qsmask. The leaves report to the root rcu_node inside struct rcu_state.]
    - Group cpumask (qsmask): reduces lock contention.
    - A grace period is complete when the root's rcu_node->qsmask = 0.
    - Excellent scalability.

- 41. Classic RCU (< 2.6.29): high-level concept, assuming a 16-core system (kernel source reference: 2.6.24)
  - Global cpumask: each bit represents one core.
  - Scalability problems appear as the number of cores increases: lock contention, and cache bouncing caused by frequent writes.
  - Flow (conceptual):
    1. Start a new grace period: rcu_ctrlblk.cpumask = 0xffff.
    2. On a context switch, schedule() leads to the corresponding bit in rcu_ctrlblk.cpumask being cleared.
    3. Is rcu_ctrlblk.cpumask = 0? Yes: the grace period is finished. No: wait for all CPUs to pass a QS.

- 42. Classic RCU: Data Structures (kernel source reference: 2.6.24)
  - struct rcu_head: *next and func (the callback supplied by call_rcu()).
  - struct rcu_ctrlblk rcu_ctrlblk (global control block): cur, completed, next_pending, signaled, lock (spinlock protection), cpumask (one bit per CPU).
  - struct rcu_data (per CPU):
    - QS handling: quiescbatch, passed_quiesc, qs_pending.
    - Batch handling: batch, plus three lists of struct rcu_head: *nxtlist / **nxttail, *curlist / **curtail, *donelist / **donetail.
    - qlen (number of queued callbacks) and cpu.
  - qs_pending avoids cacheline thrashing: each CPU checks this per-CPU flag instead of the shared cpumask bitmap.

- 43. Classic RCU: start_kernel() -> rcu_init() (kernel source reference: 2.6.24)
  1. Initialize the per-CPU data rcu_data belonging to the global control block rcu_ctrlblk.
  2. Initialize the per-CPU data rcu_bh_data belonging to the global control block rcu_bh_ctrlblk.
  3. Initialize the per-CPU rcu_tasklet, whose handler is rcu_process_callbacks().
  - Both control blocks start with cur = -300, completed = -300 and cpumask = 0.

- 44. Classic RCU: start_kernel() -> rcu_init(): showing rcu_ctrlblk only (kernel source reference: 2.6.24)
  - Global control block (`static struct rcu_ctrlblk rcu_ctrlblk;`):
    - cur = -300, completed = -300
    - next_pending = 0, signaled = 0, cpumask = 0
  - Per-CPU rcu_data, initialized by rcu_init_percpu_data():
    - quiescbatch = rcp->completed = -300, passed_quiesc = 0, qs_pending = 0, batch = 0
    - nxtlist = curlist = donelist = NULL, and nxttail/curtail/donetail each hold the address of the corresponding list head
    - qlen = 0 (number of queued callbacks), cpu = current CPU's ID, blimit = 10 (default value)

- 45. Classic RCU: call_rcu() (kernel source reference: 2.6.24)
  - `call_rcu(&my_rcu_head, my_rcu_func);` sets my_rcu_head.func = my_rcu_func and appends my_rcu_head to the per-CPU nxtlist through **nxttail.
  - qlen goes from 0 to 1; curlist and donelist remain NULL.
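The append performed by `call_rcu()` can be modeled in plain C. This is a simplified sketch with these assumptions:
- `struct rcu_data_model`, `rcu_data_model_init()`, `call_rcu_model()` and `my_rcu_func()` are hypothetical.
- Only the `nxtlist`/`nxttail`/`qlen` fields are kept.
- The kernel's interrupt disabling and queue-length limits are omitted.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified userspace copy of struct rcu_head. */
struct rcu_head {
    struct rcu_head *next;
    void (*func)(struct rcu_head *head);
};

/* Only the "next" list of the per-CPU rcu_data. */
struct rcu_data_model {
    struct rcu_head *nxtlist;
    struct rcu_head **nxttail;  /* address of the last ->next, or of nxtlist */
    long qlen;                  /* number of queued callbacks */
};

static void rcu_data_model_init(struct rcu_data_model *rdp)
{
    rdp->nxtlist = NULL;
    rdp->nxttail = &rdp->nxtlist;  /* empty list: tail points at the head */
    rdp->qlen = 0;
}

/* O(1) append through the tail pointer, with no list walk. */
static void call_rcu_model(struct rcu_data_model *rdp, struct rcu_head *head,
                           void (*func)(struct rcu_head *))
{
    head->func = func;
    head->next = NULL;
    *rdp->nxttail = head;
    rdp->nxttail = &head->next;
    rdp->qlen++;
}

/* Hypothetical callback, as on the slide (my_rcu_func). */
static void my_rcu_func(struct rcu_head *head)
{
    (void)head;
}
```

The `**nxttail` pointer is what makes the append constant-time. It always points at the `next` field to be filled, which for an empty list is `nxtlist` itself.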

- 46. Classic RCU: synchronize_rcu() leverages call_rcu() Kernel Source Reference: 2.6.24

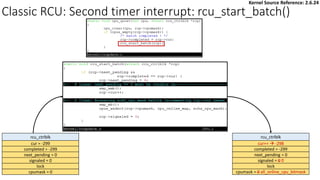
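In the 2.6.24 source, `synchronize_rcu()` works through an on-stack `struct rcu_synchronize`. It queues a `wakeme_after_rcu()` callback with `call_rcu()` and then sleeps on a completion until that callback runs. The sketch below reproduces that shape in single-threaded userspace C, under these assumptions:
- The callback queue and `model_end_grace_period()` are hypothetical.
- A `done` flag replaces `struct completion`.
- The waiter drives the "grace period" itself instead of sleeping.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

struct rcu_head {
    struct rcu_head *next;
    void (*func)(struct rcu_head *head);
};

/* Hypothetical global callback queue standing in for the RCU core. */
static struct rcu_head *pending;
static struct rcu_head **pending_tail = &pending;
static int grace_periods;

static void call_rcu_model(struct rcu_head *head, void (*func)(struct rcu_head *))
{
    head->func = func;
    head->next = NULL;
    *pending_tail = head;
    pending_tail = &head->next;
}

/* Declare a grace period over and invoke every callback queued before it.
 * next is read before func runs, because a callback may free its head. */
static void model_end_grace_period(void)
{
    struct rcu_head *list = pending;
    pending = NULL;
    pending_tail = &pending;
    grace_periods++;
    while (list != NULL) {
        struct rcu_head *next = list->next;
        list->func(list);
        list = next;
    }
}

struct rcu_synchronize {
    struct rcu_head head;
    bool done;              /* the kernel uses struct completion here */
};

static void wakeme_after_rcu(struct rcu_head *head)
{
    struct rcu_synchronize *rcu = container_of(head, struct rcu_synchronize, head);
    rcu->done = true;       /* kernel: complete(&rcu->completion) */
}

static void synchronize_rcu_model(void)
{
    struct rcu_synchronize rcu = { .done = false };
    call_rcu_model(&rcu.head, wakeme_after_rcu);
    /* Kernel: wait_for_completion() sleeps until the RCU core runs the
     * callback; this single-threaded model drives the grace period itself. */
    while (!rcu.done)
        model_end_grace_period();
}
```

This design lets the synchronous API reuse all of the asynchronous machinery. synchronize_rcu() is simply call_rcu() with a callback that wakes the caller.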

- 47. Classic RCU: rcu_pending() is checked when a timer interrupt is triggered (kernel source reference: 2.6.24)
  - Call path: timer interrupt -> update_process_times() -> rcu_pending()? If yes: rcu_check_callbacks() -> tasklet_schedule() of the per-CPU rcu_tasklet.
  - The tasklet runs rcu_process_callbacks() -> __rcu_process_callbacks(). That function calls rcu_check_quiescent_state(), and calls rcu_do_batch() if rdp->donelist is non-empty.
  - If rdp->donelist is still non-empty afterwards, tasklet_schedule() is called again. Not all callbacks have been invoked yet because of rdp->blimit, the limit on callbacks processed per batch.
  - State: per-CPU rcu_data with qlen = 1 and my_rcu_head (func = my_rcu_func) queued on nxtlist; blimit = 10 (default value).

- 48. Classic RCU: Check if a CPU passes a QS (kernel source reference: 2.6.24)
  - rcu_qsctr_inc() marks the QS. It is reached from:
    - the timer interrupt path: update_process_times() -> rcu_pending()? -> rcu_check_callbacks();
    - the scheduler on a context switch: schedule().
  - [Note] Setting rcu_data.passed_quiesc = 1 does not clear the corresponding bit in rcu_ctrlblk.cpumask directly. More checks are performed (see later slides).

- 49. Classic RCU: first timer interrupt (kernel source reference: 2.6.24)
  - Same call path as slide 47: timer interrupt -> update_process_times() -> rcu_pending()? -> rcu_check_callbacks() -> tasklet -> __rcu_process_callbacks().
  - Starting state:
    - rcu_ctrlblk: cur = -300, completed = -300, next_pending = 0, signaled = 0, cpumask = 0.
    - Per-CPU rcu_data: quiescbatch = -300, passed_quiesc = 0, qs_pending = 0, batch = 0, qlen = 1, with my_rcu_head on nxtlist.
  - __rcu_process_callbacks() then manipulates these structures.

- 50. Classic RCU: first timer interrupt: __rcu_process_callbacks() (kernel source reference: 2.6.24)
  - Before: my_rcu_head is on nxtlist; curlist = NULL; batch = 0; rcu_ctrlblk.next_pending = 0.
  - After:
    - my_rcu_head moves from nxtlist to curlist (nxtlist = NULL);
    - batch = rcp->cur + 1 = -299;
    - rcu_ctrlblk.next_pending: 0 → 1.

- 51. Classic RCU: first timer interrupt: rcu_start_batch() (kernel source reference: 2.6.24)
  - State: batch = rcp->cur + 1 = -299; my_rcu_head is on curlist; rcu_ctrlblk.next_pending = 1.
  - rcu_start_batch()
    - A new grace period is started.
    - All CPUs must go through a quiescent state.
      - At least two calls to rcu_check_quiescent_state() are required:
        - The first call notices that a new grace period is running.
        - The second call: if there was a quiescent state, then
          1. update rcu_ctrlblk.cpumask by clearing the corresponding CPU bit;
          2. if rcu_ctrlblk.cpumask is empty, the grace period is completed.

- 52. Classic RCU: first timer interrupt: rcu_start_batch() updates rcu_ctrlblk (kernel source reference: 2.6.24)
  - Before: cur = -300, completed = -300, next_pending = 1, signaled = 0, cpumask = 0.
  - After: cur++ → -299, completed = -300, next_pending = 0, signaled = 0, cpumask = cpu_online_map & ~nohz_cpu_mask.

- 53. Classic RCU: first timer interrupt: state after rcu_start_batch() (kernel source reference: 2.6.24)
  - Per-CPU rcu_data: quiescbatch = -300, passed_quiesc = 0, qs_pending = 0, batch = -299, qlen = 1, my_rcu_head on curlist.
  - rcu_ctrlblk:
    - cur++ → -299, completed = -300;
    - next_pending: 1 → 0, signaled = 0;
    - cpumask: 0 → all_online_cpu_bitmask.

- 54. Classic RCU: first timer interrupt: rcu_start_batch() (same notes as slide 51; kernel source reference: 2.6.24), highlighting where the first and the second calls to rcu_check_quiescent_state() happen.

- 55. Classic RCU (< 2.6.29): first timer interrupt, after the first call to rcu_check_quiescent_state() (kernel source reference: 2.6.24)
  - The first call sets quiescbatch = rcp->cur = -299 and qs_pending: 0 → 1, with passed_quiesc = 0.
  - The CPU then waits for its next QS (context switch) before the second call.
  - rcu_ctrlblk: cur = -299, completed = -300, next_pending = 0, signaled = 0, cpumask = all_online_cpu_bitmask.

- 56. Classic RCU: first timer interrupt: quiescent state (context switch) (kernel source reference: 2.6.24)
  1. A context switch happens: schedule() -> rcu_qsctr_inc().
  2. rdp->passed_quiesc: 0 → 1 (qs_pending = 1, quiescbatch = rcp->cur = -299).
  - rcu_ctrlblk is unchanged: cur = -299, completed = -300, cpumask = all_online_cpu_bitmask.

- 57. Classic RCU: second timer interrupt (kernel source reference: 2.6.24)
  - __rcu_pending() returns true, so the same path runs again: timer interrupt -> update_process_times() -> rcu_pending()? -> rcu_check_callbacks() -> tasklet_schedule() -> rcu_process_callbacks().
  - State:
    - rcu_data: quiescbatch = -299, passed_quiesc = 1, qs_pending = 1, batch = -299, qlen = 1.
    - rcu_ctrlblk: cur = -299, completed = -300, next_pending = 0, cpumask = all_online_cpu_bitmask.

- 58. Classic RCU: second timer interrupt: the second call to rcu_check_quiescent_state() (kernel source reference: 2.6.24)
  - qs_pending: 1 → 0.
  - cpu_clear() clears this CPU's bit in rcu_ctrlblk.cpumask.
  - When all CPUs have passed a QS (cpumask becomes empty): completed = cur = -299.
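The two-call protocol of `rcu_check_quiescent_state()` can be modeled in a few lines, together with the bookkeeping in `cpu_quiet()` and `rcu_start_batch()`. This is a simplified sketch of the 2.6.24 logic, not the kernel code. Its assumptions:
- The struct and function names are hypothetical.
- Locking, memory barriers and nohz handling are dropped.
- `cpumask` is a plain bit mask, sized for the 16-core example.

```c
#include <assert.h>
#include <stdint.h>

#define NR_CPUS_MODEL 16

struct ctrlblk_model {           /* subset of struct rcu_ctrlblk */
    long cur, completed;
    int next_pending;
    uint32_t cpumask;            /* bit i = CPU i still owes a QS */
};

struct data_model {              /* subset of per-CPU struct rcu_data */
    long quiescbatch;
    int passed_quiesc, qs_pending;
    int cpu;
};

/* Open a new batch only if one is requested and the previous one is done. */
static void start_batch(struct ctrlblk_model *rcp, uint32_t online)
{
    if (rcp->next_pending && rcp->completed == rcp->cur) {
        rcp->next_pending = 0;
        rcp->cur++;
        rcp->cpumask = online;   /* kernel: cpu_online_map & ~nohz_cpu_mask */
    }
}

static void cpu_quiet(struct ctrlblk_model *rcp, int cpu, uint32_t online)
{
    rcp->cpumask &= ~(UINT32_C(1) << cpu);
    if (rcp->cpumask == 0) {
        rcp->completed = rcp->cur;   /* grace period (batch) completed */
        start_batch(rcp, online);
    }
}

static void check_quiescent_state(struct ctrlblk_model *rcp,
                                  struct data_model *rdp, uint32_t online)
{
    if (rdp->quiescbatch != rcp->cur) {  /* first call: new GP noticed */
        rdp->qs_pending = 1;
        rdp->passed_quiesc = 0;
        rdp->quiescbatch = rcp->cur;
        return;
    }
    if (!rdp->qs_pending || !rdp->passed_quiesc)
        return;                          /* already reported, or no QS yet */
    rdp->qs_pending = 0;                 /* second call: report the QS */
    if (rdp->quiescbatch == rcp->cur)
        cpu_quiet(rcp, rdp->cpu, online);
}
```

The usage below follows slides 52 to 58 with 16 CPUs:
1. start_batch() moves cur from -300 to -299 and sets the mask to 0xffff.
2. The first call on each CPU only arms qs_pending.
3. After each CPU sets passed_quiesc (a context switch), the second call clears its bit.
4. When the last bit is cleared, completed becomes -299.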

- 59. Classic RCU: second timer interrupt: rcu_start_batch() (kernel source reference: 2.6.24)
  - Before: cur = -299, completed = -299, next_pending = 0, signaled = 0, cpumask = 0.
  - After: cur++ → -298, completed = -299, next_pending = 0, signaled = 0, cpumask: 0 → all_online_cpu_bitmask.

- 60. Classic RCU: third timer interrupt (kernel source reference: 2.6.24)
  - The timer path runs again: timer interrupt -> update_process_times() -> rcu_pending()? -> rcu_check_callbacks() -> tasklet_schedule() -> rcu_process_callbacks() -> __rcu_process_callbacks().
  - __rcu_process_callbacks() calls rcu_check_quiescent_state(), and calls rcu_do_batch() if rdp->donelist is non-empty.
  - State:
    - All CPUs have passed the QS for batch -299.
    - rcu_data: quiescbatch = -299, passed_quiesc = 1, qs_pending = 0, batch = -299, qlen = 1, my_rcu_head on curlist.
    - rcu_ctrlblk: cur = -298, completed = -299, cpumask = all_online_cpu_bitmask.

- 61. Classic RCU: third timer interrupt (Kernel Source Reference: 2.6.24) • rcu_ctrlblk is unchanged: cur = -298, completed = -299, all CPUs have passed a QS • Two rcu_data snapshots (steps 1 and 2) show the rcu_head (func = my_rcu_func) moving from curlist to donelist, because rcp->completed (-299) is no longer before rdp->batch (-299) • Other rcu_data fields: quiescbatch = -299, passed_quiesc = 1, qs_pending = 0, nxtlist = NULL, qlen = 1, blimit = 10 (default value)

- 62. Classic RCU: third timer interrupt (Kernel Source Reference: 2.6.24) • curlist = NULL; the callback now sits on donelist • rdp->quiescbatch (-299) differs from rcp->cur (-298), so QS detection restarts for the new batch: quiescbatch = rcp->cur = -298, passed_quiesc 1 → 0, qs_pending 0 → 1 • Unchanged: batch = -299, nxtlist = NULL, qlen = 1, blimit = 10 (default value); rcu_ctrlblk: cur = -298, completed = -299, next_pending = 0, signaled = 0, cpumask = all_online_cpu_bitmask

- 63. Classic RCU: third timer interrupt (Kernel Source Reference: 2.6.24) • rcu_data as on slide 62: quiescbatch = rcp->cur = -298, passed_quiesc = 0, qs_pending = 1, batch = -299, curlist = NULL, callback on donelist, qlen = 1 • Invoke callbacks: the number invoked per call depends on rdp->blimit (see function rcu_do_batch() for details)

- 64. Tree RCU (>= 2.6.29) • CPUs are grouped under leaf struct rcu_node structures, each with its own qsmask; the leaves report to a root node held in struct rcu_state • Grouping the cpumask per rcu_node reduces lock contention • A grace period is complete when the root rcu_node's qsmask = 0 • Excellent scalability • Implementation details of Tree RCU are not covered here (conceptually similar to Classic RCU)

- 65. Agenda • [Overview] rwlock (reader-writer spinlock) vs RCU • RCU Implementation Overview ✓High-level overview ✓RCU: List Manipulation – Old and New data ✓Reader/Writer synchronization: six basic APIs ➢Reader ➢ rcu_read_lock() & rcu_read_unlock() ➢ rcu_dereference() ➢Writer ➢ rcu_assign_pointer() ➢ synchronize_rcu() & call_rcu() • Classic RCU vs Tree RCU • RCU Flavors • RCU Usage Summary & RCU Case Study

- 66. RCU Flavors • Vanilla RCU • Bottom-half Flavor (Historical) • Sched Flavor (Historical) • Sleepable RCU (SRCU) • Tasks RCU • Tasks Rude RCU • Tasks Trace RCU

- 67. RCU Flavors: Vanilla RCU (Classic RCU and Tree RCU) • Reader RCU APIs ✓rcu_read_lock() / rcu_read_unlock() ✓rcu_dereference() • Writer RCU APIs ✓rcu_assign_pointer() ✓Synchronous grace-period wait primitive: synchronize_rcu() ✓Asynchronous grace-period wait primitive: call_rcu()

- 68. RCU Flavors: Bottom-half Flavor (Historical) • Use case: networking data structures that may be subjected to remote denial-of-service (DoS) attacks ✓Under a DoS attack, CPUs might never exit softirq execution ➢This prevents CPUs from executing a context switch → grace periods never end ◼ Result: out-of-memory and a system hang ✓Disabling bottom halves can prevent the issue • Reader RCU APIs ✓rcu_read_lock_bh() / rcu_read_unlock_bh() ➢ local_bh_disable() / local_bh_enable() ✓rcu_dereference_bh() • Writer: No change

- 69. RCU Flavors: Sched Flavor (Historical) • Before preemptible RCU, a context switch was a quiescent state ✓ [A complete grace period] therefore also waited for all pre-existing interrupt and NMI handlers • [CONFIG_PREEMPTION=n] ✓ Vanilla RCU and RCU-sched grace periods wait for pre-existing interrupt and NMI handlers ✓ Vanilla RCU and RCU-sched have identical implementations • [CONFIG_PREEMPTION=y] RCU-sched ✓ Preemptible RCU does not need to wait for pre-existing interrupt and NMI handlers ✓ Code outside of an RCU-sched read-side critical section → a QS ✓ rcu_read_lock_sched() → disables preemption ✓ rcu_read_unlock_sched() → re-enables preemption ✓ If a preemption attempt occurs during the RCU-sched read-side critical section, rcu_read_unlock_sched() will enter the scheduler • Reader RCU APIs ✓ rcu_read_lock_sched() / rcu_read_unlock_sched() ✓ preempt_disable() / preempt_enable() ✓ local_irq_save() / local_irq_restore() ✓ hardirq enter / hardirq exit ✓ NMI enter / NMI exit ✓ rcu_dereference_sched() • Writer: No change

- 70. RCU Flavors: Sleepable RCU (SRCU) • Classic RCU: blocking or sleeping is strictly prohibited • SRCU: allows arbitrary sleeping (or blocking) within an RCU read-side critical section ✓Real-time kernel ➢ Requires spinlock critical sections to be preemptible ➢ Requires RCU read-side critical sections to be preemptible ✓Sleeping readers can extend grace periods • Separate domains (struct srcu_struct) are defined • Benefit: a slow SRCU reader in one domain does not delay an SRCU grace period in another domain

  ```c
  struct srcu_struct ss;   /* must be initialized first, e.g. init_srcu_struct(&ss) */
  int idx;

  idx = srcu_read_lock(&ss);
  do_something();
  srcu_read_unlock(&ss, idx);
  ```

- 71. RCU Flavors: Tasks RCU • Vanilla RCU mechanism ✓Keeps the old version of a data structure until no CPU holds a reference to it (each CPU has passed through a context switch) → then the structure can be freed • Tasks RCU ✓Defers the destruction of an old data structure until it is known that no task holds a reference to it ✓Scenario: handling the trampolines used in Linux-kernel tracing ➢ Tracer subsystems: ftrace and kprobes ➢ Kprobes: return-probe trampoline ✓Reader RCU APIs ➢ No explicit read-side marker ➢ Voluntary context switches separate successive Tasks RCU read-side critical sections ✓Writer RCU APIs ➢ Synchronous grace-period-wait primitive: synchronize_rcu_tasks() ➢ Asynchronous grace-period-wait primitive: call_rcu_tasks()

- 72. RCU Flavors: Tasks Rude RCU • Tasks RCU does not wait for idle tasks ✓Idle tasks do not perform voluntary context switches ➢ They can remain idle for long periods of time ✓So Tasks RCU cannot be used for tracing code within the idle loop • Tasks Rude RCU ✓Scenario: trampolines that might be involved in tracing code within the idle loop ✓Reader RCU APIs ➢ No explicit read-side marker ➢ Any preemption-disabled region of code is a Tasks Rude RCU reader ✓Writer RCU APIs ➢ Synchronous grace-period-wait primitive: synchronize_rcu_tasks_rude() ➢ Asynchronous grace-period-wait primitive: call_rcu_tasks_rude()

- 73. RCU Flavors: Tasks Trace RCU • Tasks RCU and Tasks Rude RCU disallow sleeping while executing in a given trampoline • Tasks Trace RCU ✓Scenario: BPF programs that need to sleep ✓Reader RCU APIs ➢ Explicit read-side markers: rcu_read_lock_trace() / rcu_read_unlock_trace() ✓Writer RCU APIs ➢ Synchronous grace-period-wait primitive: synchronize_rcu_tasks_trace() ➢ Asynchronous grace-period-wait primitive: call_rcu_tasks_trace()

- 74. Agenda • [Overview] rwlock (reader-writer spinlock) vs RCU • RCU Implementation Overview ✓High-level overview ✓RCU: List Manipulation – Old and New data ✓Reader/Writer synchronization: six basic APIs ➢Reader ➢ rcu_read_lock() & rcu_read_unlock() ➢ rcu_dereference() ➢Writer ➢ rcu_assign_pointer() ➢ synchronize_rcu() & call_rcu() • Classic RCU vs Tree RCU • RCU Flavors • RCU Usage Summary & RCU Case Study

- 75. RCU Usage Summary

  | Usage | Description | Applicable? |
  |---|---|---|
  | Routing table | 1. Read mostly; 2. Stale and inconsistent data is permissible | Works great |
  | Linux kernel’s mapping from user-level System V semaphore IDs to in-kernel data structures | 1. Read mostly: semaphores tend to be used far more frequently than they are created and destroyed; 2. Needs consistent data: a semaphore operation must not be performed on a semaphore that has already been deleted | Works well |
  | dentry cache in the Linux kernel | 1. Read/write workload; 2. Needs consistent data | Might be OK |
  | SLAB_TYPESAFE_BY_RCU slab-allocator flag (provides type-safe memory to RCU readers) | 1. Write mostly; 2. Needs consistent data | Not the best fit |

- 76. Case Study: Design Patterns and Lock Granularity • Code locking: use global locks only ✓ Lock contention & scalability issues • Data locking ✓ Many data structures may be partitioned ➢ Example: hash table ✓ Each partition of the data structure has its own lock ✓ Reduces lock contention → better scalability • Data ownership ✓ The data structure is partitioned over threads or CPUs ➢ Each thread/CPU accesses its own subset of the data structure without any synchronization overhead ➢ If the most heavily used data is owned by a single CPU → that CPU becomes a hot spot ➢ When no sharing is required → ideal performance ➢ Example: per-CPU variables in the Linux kernel

- 77. Case Study: Code locking: dentry lookup • v2.5.61 and earlier • dcache_lock ✓ protects the hash chains (hash table), the d_child, d_alias and d_lru lists, and d_inode • (diagram: dentry_hashtable) • Source code from: v2.5.61

- 78. Case Study: Code locking: dentry lookup • v2.5.61 and earlier • dcache_lock ✓ protects the hash chains (hash table), the d_child, d_alias and d_lru lists, and d_inode • (diagram: dentry_hashtable, code locking by `dcache_lock`) • dcache_lock protects the whole hash table • More lock contention, poor scalability • Source code from: v2.5.61

- 79. Case Study: Data locking: dentry lookup • v2.5.62 and later • RCU ✓ Lock-free dcache (dentry) lookup ✓ No need to acquire `dcache_lock` when traversing the hash table in d_lookup() ✓ Relies on RCU to ensure the dentry has not been *freed* • dcache_lock ✓ dcache_lock must still be taken for the following: ✓ Traversing and updating ✓ Hash table updates • (diagram: dentry_hashtable, data locking by `dentry->d_lock`) • Reduces lock contention • Better scalability • Source code from: v2.5.62

- 80. Case Study: Data locking: dentry lookup - seqlock Source code from: v2.5.67

- 81. Case Study: Data locking: dentry lookup - seqlock Source code from: v2.5.67 This approach is still used in the latest kernel (v6.3)

- 82. Reference
  - Is Parallel Programming Hard, And, If So, What Can You Do About It?
  - What is RCU? – “Read, Copy, Update”
  - What Does It Mean To Be An RCU Implementation?
  - https://docs.kernel.org/RCU/index.html
  - Using RCU for linked lists — a case study
  - Scaling dcache with RCU
  - Sleepable RCU (SRCU)
    - https://lwn.net/Articles/253651/
    - https://zhuanlan.zhihu.com/p/90223380
  - Preemptible RCU
    - https://lwn.net/Articles/253651/
    - https://zhuanlan.zhihu.com/p/90223380

- 83. Backup

- 84. RCU (Read-Copy-Update) • Non-blocking synchronization ✓Deadlock immunity: RCU read-side primitives do not block, spin, or even do backward branches → their execution time is deterministic ➢Exception (programming errors) ➢Immunity to priority inversion ◼ Low-priority RCU readers cannot prevent a high-priority RCU updater from acquiring the update-side lock ◼ A low-priority RCU updater cannot prevent high-priority RCU readers from entering a read-side critical section ➢[-rt kernel] RCU is susceptible to priority inversion scenarios: ➢ A high-priority process blocked waiting for an RCU grace period to elapse can be blocked by low-priority RCU readers → solved by RCU priority boosting ➢ [RCU priority boosting] Requires rcu_read_unlock() to do deboosting, which entails acquiring scheduler locks ➢ Deadlocks between the scheduler and RCU must be avoided: the v5.15 kernel requires RCU to avoid invoking the scheduler while holding any of RCU’s locks ➢ rcu_read_unlock() is not always lockless when RCU priority boosting is enabled

- 85. RCU Properties • Reader ✓Readers need not wait for updates ➢Low-cost or even no-cost readers → excellent scalability ✓Each reader has a coherent view of each object within the block of rcu_read_lock() and rcu_read_unlock() • Updater ✓synchronize_rcu(): ensures that objects are not freed until after the completion of all readers that might be using them ✓rcu_assign_pointer() and rcu_dereference(): efficient and scalable mechanisms for publishing and reading new versions of an object

- 86. Wait for pre-existing RCU readers • Wait for the RCU read-side critical section: rcu_read_lock()/rcu_read_unlock() ✓Illegal to sleep within an RCU read-side critical section because a context switch is a quiescent state * If any portion of a given critical section precedes the beginning of a given grace period, then RCU guarantees that all of that critical section will precede the end of that grace period.

- 87. Wait for pre-existing RCU readers: if-then relationship • If any portion of a given critical section precedes the beginning of a given grace period, then RCU guarantees that all of that critical section will precede the end of that grace period. ✓ r1 = 0 and r2 = 0

- 88. • If any portion of a given critical section follows the end of a given grace period, then RCU guarantees that all of that critical section will follow the beginning of that grace period. ✓ r1 = 1 and r2 = 1 • What would happen if the order of P()’s two accesses was reversed? ✓ Nothing changes, because the loads from x and y are in the same RCU read-side critical section. Wait for pre-existing RCU readers: if-then relationship

- 89. • An RCU read-side critical section can be completely overlapped by an RCU grace period. ✓ r1 = 1 and r2 = 0 ✓ Cannot be r1 = 0 and r2 = 1 Wait for pre-existing RCU readers: if-then relationship

- 90. RCU Grace-Period Ordering Guarantee Given a grace period, each reader ends before the end of that grace period, starts after the beginning of that grace period, or both.

- 91. Maintain Multiple Versions of Recently Updated Objects • RCU accommodates synchronization-free readers (weak temporal synchronization) by maintaining multiple versions of data

- 92. * This slide is taken from page 44 of What is RCU?

- 93. * This slide is taken from page 80 of slides

- 94. Case study: Access task_struct linked lists (Kernel Source Reference: 6.2) • task_struct list members shown: cg_list, rcu_node_entry (CONFIG_PREEMPT_RCU=y), tasks, thread_node (linked to signal_struct), ptraced, ptrace_entry, children, sibling • Diagram annotations: not using RCU (not read-mostly data structures, protected by a spinlock); RCU (deferred destruction); RCU + spinlock • Global lock: `__cacheline_aligned DEFINE_RWLOCK(tasklist_lock);`
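Slide 64 says a Tree RCU grace period is complete once the root rcu_node's qsmask is 0. The user-space C sketch below models only that bookkeeping. Its assumptions are stated up front: `toy_rcu_node` and the `toy_*` functions are hypothetical, and locking, grace-period numbers, and everything else the kernel's struct rcu_node / struct rcu_state carry are omitted. A node clears the reporting child's bit. When its own qsmask becomes empty, it reports to its parent using its `grpmask` bit.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, heavily simplified stand-in for struct rcu_node. */
struct toy_rcu_node {
	unsigned long qsmask;         /* children (CPUs or nodes) still owing a QS */
	unsigned long grpmask;        /* this node's bit in parent->qsmask */
	struct toy_rcu_node *parent;  /* NULL for the root node */
};

/* Start of a grace period: every child of this node must report again. */
static void toy_gp_init(struct toy_rcu_node *node, unsigned long children)
{
	node->qsmask = children;
}

/*
 * A child reports a quiescent state to 'node'. When the node's last bit
 * clears, its whole subtree is quiescent and the report moves up one level.
 */
static void toy_report_qs(struct toy_rcu_node *node, unsigned long mask)
{
	while (node != NULL) {
		node->qsmask &= ~mask;
		if (node->qsmask != 0)
			return;               /* other children are still pending */
		mask = node->grpmask;         /* report this subtree to the parent */
		node = node->parent;
	}
}

/* The grace period is complete once the root's qsmask is 0. */
static bool toy_gp_done(const struct toy_rcu_node *root)
{
	return root->qsmask == 0;
}
```

Consider two leaves with two CPUs each. The root's qsmask changes only twice, once per leaf, instead of once per CPU. That is where the reduced contention on the root comes from.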

![RCU Implementation Overview

rcu_read_unlock()

rcu_read_lock()

Critical Section

synchronize_rcu() or call_rcu()

spin_unlock

spinlock

Update or remove data (pointer)

Optional: free memory

Ensure only one writer:

removal phase

Wait for job completion of readers

Safe to free memory:

reclamation phase

task 0

task 1

task N

Readers

(Subscribers)

task 0

task 1

task N

Writers/Updaters

(Publisher)

• [Writer] Waiting for readers

✓ Removal phase: remove references to data items (possibly by replacing them with references to new versions of these data items)

➢ Can run concurrently with readers: readers see either the old or the new version of the data structure rather than a partially updated reference.

✓ synchronize_rcu() and call_rcu(): wait for readers to exit their critical sections

✓ Block, or register a callback that is invoked after active readers have completed.

✓ Reclamation phase: reclaim data items.

• Quiescent State (QS): [per-core] a point after which all of that core's pre-existing readers are done

✓ Context switch: a valid quiescent state

• Grace Period (GP): once all cores have passed through a quiescent state, a grace period is complete

Typical RCU Update Sequence](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-8-320.jpg)
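The removal phase can run concurrently with readers because the updater only redirects a pointer and leaves the removed element intact. The user-space sketch below shows this on a singly linked list using C11 atomics, under these assumptions:

- The names `toy_node`, `toy_reader_sum()` and `toy_list_del()` are hypothetical.
- The release store stands in for rcu_assign_pointer() and the acquire loads for rcu_dereference().
- There is a single updater; on the slide, the spinlock serializes updaters.

The grace-period wait and the reclamation phase are not modeled.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical list element; 'next' may be read by readers at any time. */
struct toy_node {
	int value;
	_Atomic(struct toy_node *) next;
};

/* Reader: acquire loads stand in for rcu_dereference(). */
static int toy_reader_sum(_Atomic(struct toy_node *) *head)
{
	int sum = 0;

	for (struct toy_node *n = atomic_load_explicit(head, memory_order_acquire);
	     n != NULL;
	     n = atomic_load_explicit(&n->next, memory_order_acquire))
		sum += n->value;
	return sum;
}

/*
 * Removal phase (single updater assumed): redirect the predecessor's pointer
 * around 'victim'. victim->next is left intact, so a reader already standing
 * on 'victim' can still reach the rest of the list. Returns the unlinked node
 * (NULL if not found); it must not be freed until a grace period has elapsed.
 */
static struct toy_node *toy_list_del(_Atomic(struct toy_node *) *head,
				     struct toy_node *victim)
{
	_Atomic(struct toy_node *) *link = head;
	struct toy_node *cur;

	while ((cur = atomic_load_explicit(link, memory_order_relaxed)) != NULL) {
		if (cur == victim) {
			struct toy_node *succ =
				atomic_load_explicit(&cur->next, memory_order_relaxed);
			/* Release store stands in for rcu_assign_pointer(). */
			atomic_store_explicit(link, succ, memory_order_release);
			return victim;
		}
		link = &cur->next;
	}
	return NULL;
}
```

After removal, new readers no longer see the node. A reader that was already on it still sees a valid `next` pointer. This is why reclamation can be deferred safely instead of being done under a lock that excludes readers.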

![RCU Spatial/Temporal Synchronization

5,25 9,81

curconfig

Address Space

rcu_read_lock();

mcp = rcu_dereference(curconfig);

*cur_a = mcp->a; (5)

*cur_b = mcp->b; (25)

rcu_read_unlock();

mcp = kmalloc(…);

rcu_assign_pointer(curconfig, mcp);

synchronize_rcu();

…

…

kfree(old_mcp);

rcu_read_lock();

mcp = rcu_dereference(curconfig);

*cur_a = mcp->a; (9)

*cur_b = mcp->b; (81)

rcu_read_unlock();

Grace

Period

Readers

Readers

• Temporal Synchronization

✓ [Reader] rcu_read_lock() / rcu_read_unlock()

✓ [Writer/Update] synchronize_rcu() / call_rcu()

• Spatial Synchronization

• [Reader] rcu_dereference()

• [Writer/Update] rcu_assign_pointer()

Reference: Is Parallel Programming Hard, And, If So, What Can You Do About It?

Time](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-14-320.jpg)

![RCU Spatial/Temporal Synchronization

5,25 9,81

curconfig

Address Space

rcu_read_lock();

mcp = rcu_dereference(curconfig);

*cur_a = mcp->a; (5)

*cur_b = mcp->b; (25)

rcu_read_unlock();

mcp = kmalloc(…);

rcu_assign_pointer(curconfig, mcp);

synchronize_rcu();

…

…

kfree(old_mcp);

rcu_read_lock();

mcp = rcu_dereference(curconfig);

*cur_a = mcp->a; (9)

*cur_b = mcp->b; (81)

rcu_read_unlock();

Grace

Period

Readers

Readers

RCU combines temporal and spatial synchronization in order to approximate reader-writer locking

• Temporal Synchronization

✓ [Reader] rcu_read_lock() / rcu_read_unlock()

✓ [Writer/Update] synchronize_rcu() / call_rcu()

• Spatial Synchronization

• [Reader] rcu_dereference()

• [Writer/Update] rcu_assign_pointer()](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-15-320.jpg)
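The curconfig example on the "RCU Spatial/Temporal Synchronization" slides can be modeled in user space with C11 atomics. The sketch assumes the common mapping of rcu_assign_pointer() to a release store and rcu_dereference() to an acquire load. The kernel's rcu_dereference() is cheaper, relying on address dependencies. `struct myconfig`, `toy_read_config()` and `toy_update_config()` are hypothetical names. The grace period itself is not modeled: the usage below frees the old version immediately only because it is single-threaded.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical configuration object from the curconfig example. */
struct myconfig {
	int a;
	int b;
};

static _Atomic(struct myconfig *) curconfig;

/* Reader side; rcu_read_lock()/rcu_read_unlock() would bracket this. */
static void toy_read_config(int *cur_a, int *cur_b)
{
	/* Acquire load: stand-in for rcu_dereference(curconfig). */
	struct myconfig *mcp = atomic_load_explicit(&curconfig, memory_order_acquire);

	*cur_a = mcp->a;   /* both fields come from the same version */
	*cur_b = mcp->b;
}

/*
 * Updater side: fully initialize the new version, then publish it.
 * Returns the old version (NULL if none), which must not be freed until
 * a grace period has elapsed (synchronize_rcu() on the slide).
 */
static struct myconfig *toy_update_config(struct myconfig *mcp, int a, int b)
{
	mcp->a = a;
	mcp->b = b;
	/* Release ordering: stand-in for rcu_assign_pointer(curconfig, mcp). */
	return atomic_exchange_explicit(&curconfig, mcp, memory_order_acq_rel);
}
```

The spatial part is the single pointer load: a reader gets (5, 25) or (9, 81), never a mix. The temporal part (waiting before `free(old)`) is exactly what synchronize_rcu() adds in the kernel.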

![Reader: rcu_read_lock() & rcu_read_unlock():

rcu_read_unlock()

rcu_read_lock()

ptr = rcu_dereference(shared_abc);

synchronize_rcu()

spin_unlock

spinlock

rcu_assign_pointer(shared_abc, ptr);

Optional: free memory

Ensure only one writer

Wait for job completion of readers

Safe to free memory

printk("%d\n", ptr->number);

RCU Reader

RCU Writer w/ valid pointer assignment

✓ Simply disable/enable preemption when entering/exiting RCU critical section

• [Why] A QS is detected by a context switch.

Kernel Source Reference: 2.6.24](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-16-320.jpg)
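In the 2.6.24 Classic RCU shown on this slide, rcu_read_lock()/rcu_read_unlock() only disable/enable preemption. A CPU therefore cannot context-switch, and so cannot record a QS, while a reader is running on it. The toy model below captures that rule with a per-CPU counter. `struct toy_cpu`, `toy_rcu_read_lock()` and `toy_try_context_switch()` are hypothetical names. The real preempt_count, interrupts and the scheduler are not modeled.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical per-CPU state for a non-preemptible kernel. */
struct toy_cpu {
	int preempt_count;    /* > 0 while inside rcu_read_lock() sections */
	bool passed_quiesc;   /* set when a context switch happens */
};

static void toy_rcu_read_lock(struct toy_cpu *cpu)
{
	cpu->preempt_count++;         /* like preempt_disable(); nests */
}

static void toy_rcu_read_unlock(struct toy_cpu *cpu)
{
	assert(cpu->preempt_count > 0);
	cpu->preempt_count--;         /* like preempt_enable() */
}

/*
 * The scheduler may switch tasks only when preemption is enabled, and the
 * context switch itself is what records the quiescent state.
 */
static bool toy_try_context_switch(struct toy_cpu *cpu)
{
	if (cpu->preempt_count > 0)
		return false;         /* a reader is still running: no QS yet */
	cpu->passed_quiesc = true;    /* cf. rcu_qsctr_inc() in schedule() */
	return true;
}
```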

![Writer: synchronize_rcu() & call_rcu()

synchronize_rcu() or call_rcu()

spin_unlock

spinlock

rcu_assign_pointer(shared_abc, ptr);

Optional: free memory

RCU Writer

• Mark the end of updater code and the beginning of reclaimer code

• [Synchronous] synchronize_rcu()

✓ Block until all pre-existing RCU read-side critical sections on all CPUs have completed

✓ leverages call_rcu()

• [Asynchronous] call_rcu()

✓ Queue a callback for invocation after a grace period

✓ [Scenario]

➢ The RCU updater is not allowed to block

➢ Update-side performance is critically important

[Updater] Ensure only one writer: removal phase

Wait for job completion of readers

[Reclaimer] Safe to free memory: reclamation phase](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-23-320.jpg)
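call_rcu() only queues a callback; the callback runs after a later grace period ends. The single-threaded sketch below models that split. It uses a hypothetical `toy_rcu_head` with the same next/func pair as rcu_head, and a container_of-style macro to recover the enclosing object. `toy_gp_end()` stands in for "a grace period has elapsed", so the sketch does not detect grace periods itself. The callback is named `my_rcu_func` after the diagrams; its body is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical callback header with the same next/func pair as rcu_head. */
struct toy_rcu_head {
	struct toy_rcu_head *next;
	void (*func)(struct toy_rcu_head *head);
};

/* Callback queue with a tail pointer for O(1) append (cf. nxttail). */
struct toy_cb_queue {
	struct toy_rcu_head *list;
	struct toy_rcu_head **tail;
};

#define toy_container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

static void toy_queue_init(struct toy_cb_queue *q)
{
	q->list = NULL;
	q->tail = &q->list;
}

/* Asynchronous, like call_rcu(): queue the callback and return at once. */
static void toy_call_rcu(struct toy_cb_queue *q, struct toy_rcu_head *head,
			 void (*func)(struct toy_rcu_head *))
{
	head->next = NULL;
	head->func = func;
	*q->tail = head;
	q->tail = &head->next;
}

/* To be called only after a grace period: invoke all queued callbacks. */
static int toy_gp_end(struct toy_cb_queue *q)
{
	struct toy_rcu_head *head = q->list;
	int n = 0;

	toy_queue_init(q);
	while (head != NULL) {
		struct toy_rcu_head *next = head->next;  /* func may free head */

		head->func(head);
		head = next;
		n++;
	}
	return n;
}

/* Example object embedding its callback header. */
struct my_obj {
	int data;
	struct toy_rcu_head rcu;
};

static int freed_sum;  /* lets the example observe which objects were freed */

static void my_rcu_func(struct toy_rcu_head *head)
{
	struct my_obj *obj = toy_container_of(head, struct my_obj, rcu);

	freed_sum += obj->data;
	free(obj);
}
```

The updater never waits: after `toy_call_rcu()` it can continue immediately. This is the property the slide lists for scenarios where the updater must not block.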

![Approach #1: Reference Counter

Approach #2: CPU Register

Approach #3: Wait for a fixed period of time

Approach #4: Wait forever

Approach #5: Avoid the period crashes

Approach #6: Quiescent-state-based reclamation (QSBR)

Grace Period: Waiting for readers – QSBR

• Numerous applications already have states (termed quiescent states) that can be reached only after all pre-existing readers are done.

✓ Transaction-processing application: the time between a pair of successive transactions might be a quiescent state.

✓ Non-preemptive OS kernel: a context switch can be a quiescent state.

✓ [Non-preemptive OS kernel] RCU readers must be prohibited from blocking while referencing global data.

QSBR](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-36-320.jpg)
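QSBR can be sketched with per-thread counters. Each reader thread bumps its counter whenever it passes a quiescent state. The updater snapshots all counters and waits until every counter has moved. The names and the fixed `TOY_NR_THREADS` are hypothetical, and the sketch is single-threaded for clarity. A real implementation needs atomic accesses, memory barriers, and handling for offline or idle threads.

```c
#include <assert.h>
#include <stdbool.h>

#define TOY_NR_THREADS 3   /* hypothetical fixed number of reader threads */

/* Bumped by each reader thread at points where it holds no references. */
static unsigned long toy_qs_ctr[TOY_NR_THREADS];

static void toy_quiescent_state(int tid)
{
	toy_qs_ctr[tid]++;
}

/* Updater: remember every thread's counter when the grace period starts. */
static void toy_gp_snapshot(unsigned long snap[TOY_NR_THREADS])
{
	for (int i = 0; i < TOY_NR_THREADS; i++)
		snap[i] = toy_qs_ctr[i];
}

/* The grace period is over once every thread has passed a QS since the snapshot. */
static bool toy_gp_completed(const unsigned long snap[TOY_NR_THREADS])
{
	for (int i = 0; i < TOY_NR_THREADS; i++)
		if (toy_qs_ctr[i] == snap[i])
			return false;
	return true;
}
```

A quiescent state that happened before the snapshot does not count. That matches the definition: only readers that were already running when the update began must be waited for.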

![* Reference from Is Parallel Programming Hard, And, If So, What Can You Do About It?

Grace Period: Waiting for readers – QSBR

[Concept] Implementation for non-preemptive Linux kernel

* [Not production quality] The sched_setaffinity() function causes the current thread to execute on the specified CPU, which forces the destination CPU to execute a context switch.](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-37-320.jpg)

![[Concept] QSBR: non-production and non-preemptible implementation

Force the destination CPU to execute a context switch: completion of RCU reader

Reference: Page #142 of Is Parallel Programming Hard, And, If So, What Can You Do About It?

synchronize_rcu()

rcu_read_lock() & rcu_read_unlock() → disable/enable preemption](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-38-320.jpg)
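The conceptual synchronize_rcu() on these slides runs the caller on every CPU in turn. In a non-preemptible kernel, arriving on a CPU implies that CPU has context-switched. The user-space sketch below shows only that migration loop, using the Linux sched_getaffinity()/sched_setaffinity() calls and the glibc `CPU_*` macros, which need `_GNU_SOURCE`. `toy_run_on_each_cpu()` is a hypothetical name. In user space this is not a valid grace-period wait, because user threads are preemptible and readers have no read-side markers. It only illustrates the mechanism.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sched.h>

/*
 * Migrate the calling thread onto each CPU it is allowed to use, one at a
 * time, then restore the original mask. Returns the number of CPUs visited,
 * or -1 if the affinity mask cannot be read.
 */
static int toy_run_on_each_cpu(void)
{
	cpu_set_t orig, one;
	int visited = 0;

	if (sched_getaffinity(0, sizeof(orig), &orig) != 0)
		return -1;

	for (int cpu = 0; cpu < CPU_SETSIZE; cpu++) {
		if (!CPU_ISSET(cpu, &orig))
			continue;
		CPU_ZERO(&one);
		CPU_SET(cpu, &one);
		/* Pinning the caller (pid 0) to 'cpu' forces it to run there. */
		if (sched_setaffinity(0, sizeof(one), &one) == 0)
			visited++;
	}

	(void)sched_setaffinity(0, sizeof(orig), &orig);  /* restore */
	return visited;
}
```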

![Agenda

• [Overview] rwlock (reader-writer spinlock) vs RCU

• RCU Implementation Overview

✓High-level overview

✓RCU: List Manipulation – Old and New data

✓Reader/Writer synchronization: six basic APIs

➢Reader

➢ rcu_read_lock() & rcu_read_unlock()

➢ rcu_dereference()

➢Writer

➢ rcu_assign_pointer()

➢ synchronize_rcu() & call_rcu()

• Classic RCU vs Tree RCU

✓Will discuss implementation details about classic RCU *only*

• RCU Flavors

• RCU Usage Summary & RCU Case Study](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-39-320.jpg)

![rcu_ctrlblk

cur = -300

completed = -300

next_pending = 0

signaled = 0

lock

cpumask = 0

[Global control block]

static struct rcu_ctrlblk rcu_ctrlblk;

per-cpu variable initialized by rcu_init_percpu_data()

rcu_data

quiescbatch = rcp->completed = -300

passed_quiesc = 0

qs_pending = 0

batch = 0

*nxtlist = NULL

**nxttail

qs

handling

batch

handling

struct

rcu_head

*curlist = NULL

**curtail

*donelist = NULL

**donetail

qlen = 0

# of queued callbacks

cpu = current cpu’s ID

blimit = 10 (default value)

address of

address of

address of

Kernel Source Reference: 2.6.24

Classic RCU: start_kernel() -> rcu_init(): show “rcu_ctrlblk” only](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-44-320.jpg)
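rcu_ctrlblk.cur and .completed are batch (grace-period) numbers that only increase, starting from -300 as shown here. The classic RCU code compares them through a subtraction-based helper, rcu_batch_before(), rather than a plain `<`, so that comparisons stay correct when the counter wraps. The sketch below is a user-space version with hypothetical `toy_*` names. The subtraction is done in unsigned arithmetic to avoid signed-overflow undefined behavior in ISO C. The conversion back to `long` is implementation-defined and gives the two's-complement result on GCC and Clang.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>

/* True if batch number a is "before" b, even across counter wrap-around. */
static bool toy_batch_before(long a, long b)
{
	return (long)((unsigned long)a - (unsigned long)b) < 0;
}

/* True if batch number a is "after" b. */
static bool toy_batch_after(long a, long b)
{
	return toy_batch_before(b, a);
}
```

For example, `toy_batch_before(-300, -299)` is true. `toy_batch_before(LONG_MAX, LONG_MIN)` is also true, because incrementing past `LONG_MAX` wraps to `LONG_MIN`.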

![Classic RCU: Check if a CPU passes a QS

rcu_check_callbacks

update_process_times

rcu_pending()?

Y

timer interrupt

Kernel Source Reference: 2.6.24

rcu_qsctr_inc

schedule

Timer interrupt Scheduler: context switch

[Note] “rcu_data.passed_quiesc = 1” does not clear the corresponding rcu_ctrlblk.cpumask directly. More checks are performed. See later slides.](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-48-320.jpg)
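As the note says, setting passed_quiesc does not clear the CPU's cpumask bit by itself. The single-threaded sketch below models the per-CPU check that follows, loosely after rcu_check_quiescent_state() and the cpu_clear() step in the later slides:

1. A CPU first notices a new batch, resets passed_quiesc and arms qs_pending.
2. Only a context switch after that point clears its bit.
3. The last CPU to clear its bit completes the batch.

The `toy_*` types are hypothetical, trimmed versions of rcu_ctrlblk/rcu_data. Locking and the start of the next batch are omitted.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical, trimmed-down versions of rcu_ctrlblk and rcu_data. */
struct toy_ctrlblk {
	long cur;                /* current batch number */
	long completed;          /* last completed batch number */
	unsigned long cpumask;   /* CPUs that still need to pass a QS */
};

struct toy_data {
	int cpu;
	long quiescbatch;        /* batch this CPU is looking for a QS in */
	bool passed_quiesc;      /* set by a context switch (rcu_qsctr_inc) */
	bool qs_pending;         /* a QS is still needed for quiescbatch */
};

static void toy_check_quiescent_state(struct toy_ctrlblk *rcp, struct toy_data *rdp)
{
	if (rdp->quiescbatch != rcp->cur) {
		/* New batch: ignore older QSes and start looking for a fresh one. */
		rdp->qs_pending = true;
		rdp->passed_quiesc = false;
		rdp->quiescbatch = rcp->cur;
		return;
	}
	if (!rdp->qs_pending || !rdp->passed_quiesc)
		return;
	rdp->qs_pending = false;
	/* Clear this CPU's bit; the last CPU to do so completes the batch. */
	rcp->cpumask &= ~(1UL << rdp->cpu);
	if (rcp->cpumask == 0)
		rcp->completed = rcp->cur;
}
```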

![Classic RCU: first timer interrupt

rcu_tasklet per-cpu

rcu_process_callbacks()

rcu_check_callbacks

tasklet_schedule

update_process_times

rcu_pending()?

Y

timer interrupt

__rcu_process_callbacks()

rcu_check_quiescent_state

call rcu_do_batch if

rdp->donelist

rcu_ctrlblk

cur = -300

completed = -300

next_pending = 0

signaled = 0

lock

cpumask = 0

[Global control block]

static struct rcu_ctrlblk rcu_ctrlblk;

per-cpu

rcu_data

quiescbatch = rcp->completed = -300

passed_quiesc = 0

qs_pending = 0

batch = 0

*nxtlist

**nxttail

qs

handling

batch

handling

struct

rcu_head

*curlist = NULL

**curtail

*donelist = NULL

**donetail

qlen = 1

# of queued callbacks

cpu = current cpu’s ID

blimit = 10 (default value)

rcu_head

struct rcu_head *next

func = my_rcu_func

address of

address of

address of

Manipulation

Kernel Source Reference: 2.6.24](https://image.slidesharecdn.com/rcu-230525071116-f4256278/85/Linux-Synchronization-Mechanism-RCU-Read-Copy-Update-49-320.jpg)
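The timer-interrupt slides track one callback moving from nxtlist to curlist to donelist. The sketch below is a simplified, single-threaded model of that movement in __rcu_process_callbacks(), followed by the rcu_do_batch() limit:

1. If curlist's batch has completed, curlist moves to donelist.
2. If curlist is now empty, nxtlist is promoted to curlist, with batch = cur + 1.
3. At most blimit callbacks are invoked per pass.

The `toy_*` names are hypothetical, list lengths stand in for the rcu_head lists, and starting a new batch (next_pending / rcu_start_batch()) is left out.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model: list lengths stand in for the rcu_head lists. */
struct toy_cblists {
	long batch;   /* batch number that curlist is waiting for */
	int nxt;      /* callbacks on nxtlist (no batch assigned yet) */
	int cur;      /* callbacks on curlist (waiting for 'batch') */
	int done;     /* callbacks on donelist (safe to invoke) */
	int blimit;   /* max callbacks invoked per pass (10 by default) */
};

/* Wrap-safe "a is before b" for batch numbers. */
static bool toy_batch_before(long a, long b)
{
	return (long)((unsigned long)a - (unsigned long)b) < 0;
}

/* One pass over the lists; returns the number of callbacks invoked. */
static int toy_process_callbacks(struct toy_cblists *l, long rcp_cur,
				 long rcp_completed)
{
	int invoked;

	/* curlist's batch has completed: move it to donelist. */
	if (l->cur > 0 && !toy_batch_before(rcp_completed, l->batch)) {
		l->done += l->cur;
		l->cur = 0;
	}
	/* curlist is free: queued callbacks wait for the next batch. */
	if (l->nxt > 0 && l->cur == 0) {
		l->cur = l->nxt;
		l->nxt = 0;
		l->batch = rcp_cur + 1;
	}
	/* Like rcu_do_batch(): invoke at most blimit callbacks per pass. */
	invoked = l->done < l->blimit ? l->done : l->blimit;
	l->done -= invoked;
	return invoked;
}
```

With cur = completed = -300 on the first pass, the callback lands on curlist with batch = -299, as on the second-timer-interrupt slide. It becomes invocable once completed reaches -299. With 25 callbacks and blimit = 10, only 10 run per pass and the remaining 15 wait on donelist.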
