MAP REDUCE PROGRAMMING_using hadoop_a.ppt

- 1. MAP REDUCE PROGRAMMING Dr G Sudha Sadasivam

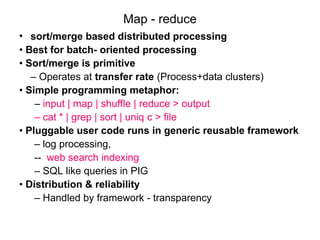

- 2. Map - reduce • sort/merge based distributed processing • Best for batch- oriented processing • Sort/merge is primitive – Operates at transfer rate (Process+data clusters) • Simple programming metaphor: – input | map | shuffle | reduce > output – cat * | grep | sort | uniq c > file • Pluggable user code runs in generic reusable framework – log processing, -- web search indexing – SQL like queries in PIG • Distribution & reliability – Handled by framework - transparency

- 3. MR model • Map() – Process a key/value pair to generate intermediate key/value pairs • Reduce() – Merge all intermediate values associated with the same key • Users implement interface of two primary methods: 1. Map: (key1, val1) → (key2, val2) 2. Reduce: (key2, [val2]) → [val3] • Map - clause group-by (for Key) of an aggregate function of SQL • Reduce - aggregate function (e.g., average) that is computed over all the rows with the same group-by attribute (key).

- 4. • Application writer specifies – A pair of functions called Map and Reduce and a set of input files and submits the job • Workflow – Input phase generates a number of FileSplits from input files (one per Map task) – The Map phase executes a user function to transform input kev-pairs into a new set of kev-pairs – The framework sorts & Shuffles the kev-pairs to output nodes – The Reduce phase combines all kev-pairs with the same key into new kevpairs – The output phase writes the resulting pairs to files • All phases are distributed with many tasks doing the work – Framework handles scheduling of tasks on cluster – Framework handles recovery when a node fails

- 5. Data distribution • Input files are split into M pieces on distributed file system - 128 MB blocks • Intermediate files created from map tasks are written to local disk • Output files are written to distributed file system Assigning tasks • Many copies of user program are started • Tries to utilize data localization by running map tasks on machines with data • One instance becomes the Master • Master finds idle machines and assigns them tasks

- 7. Execution • Map workers read in contents of corresponding input partition • Perform user-defined map computation to create intermediate <key,value> pairs • Periodically buffered output pairs written to local disk Reduce • Reduce workers iterate over ordered intermediate data – Each unique key encountered – values are passed to user's reduce function – eg. <key, [value1, value2,..., valueN]> • Output of user's reduce function is written to output file on global file system • When all tasks have completed, master wakes up user program

- 11. • Map • Reduce • Combiner – combines the O/P of a single map task. Same as reducer, but it stores the intermediate O/P in a local file wrt final output file • Debugging We can test the tasks locally using special Map reduce libraries Offers human readable status info on http server

- 14. map(String input_key, String input_value): // input_key: document name // input_value: document contents for each word w in input_value: EmitIntermediate(w, "1"); reduce(String output_key, Iterator intermediate_values): // output_key: a word // output_values: a list of counts int result = 0; for each v in intermediate_values: result += ParseInt(v); Emit(AsString(result));

- 15. • Map() – Input <filename, file text> – Parses file and emits <word, count> pairs • eg. <”hello”, 1> • Reduce() – Sums all values for the same key and emits <word, TotalCount> • eg. <”hello”, (3 5 2 7)> => <”hello”, 17>

- 16. • File Hello World Bye World Hello Hadoop GoodBye Hadoop • Map For the given sample input the first map emits: < Hello, 1> < World, 1> < Bye, 1> < World, 1> • The second map emits: < Hello, 1> < Hadoop, 1> < Goodbye, 1> < Hadoop, 1>

- 17. The output of the first combine: < Bye, 1> < Hello, 1> < World, 2> The output of the second combine: < Goodbye, 1> < Hadoop, 2> < Hello, 1> Thus the output of the job (reduce) is: < Bye, 1> < Goodbye, 1> < Hadoop, 2> < Hello, 2> < World, 2>

- 20. Configuration

- 21. CONCLUSION Hadoop Map-Reduce is a software framework for easily writing applications which process vast amounts of data (multi-terabyte data-sets) in- parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.

![MR model

• Map()

– Process a key/value pair to generate intermediate

key/value pairs

• Reduce()

– Merge all intermediate values associated with the same

key

• Users implement interface of two primary methods:

1. Map: (key1, val1) → (key2, val2)

2. Reduce: (key2, [val2]) → [val3]

• Map - clause group-by (for Key) of an aggregate function

of SQL

• Reduce - aggregate function (e.g., average) that is

computed over all the rows with the same group-by

attribute (key).](https://image.slidesharecdn.com/mapreduceprogramming-240902090609-f5f0641a/85/MAP-REDUCE-PROGRAMMING_using-hadoop_a-ppt-3-320.jpg)

![Execution

• Map workers read in contents of corresponding input

partition

• Perform user-defined map computation to create

intermediate <key,value> pairs

• Periodically buffered output pairs written to local disk

Reduce

• Reduce workers iterate over ordered intermediate data

– Each unique key encountered – values are passed to

user's reduce function

– eg. <key, [value1, value2,..., valueN]>

• Output of user's reduce function is written to output file

on global file system

• When all tasks have completed, master wakes up user

program](https://image.slidesharecdn.com/mapreduceprogramming-240902090609-f5f0641a/85/MAP-REDUCE-PROGRAMMING_using-hadoop_a-ppt-7-320.jpg)