Map-Reduce and Apache Hadoop

- 1. Map-Reduce and Apache Hadoop for Big Data Computations Svetlin Nakov Training and Inspiration Software University http://softuni.bg BGOUG Seminar, Borovets, 4-June-2016

- 2. 2 1. Big Data 2. Map-Reduce What is Map-Reduce? How It Works? Mappers and Reducers Examples 3. Apache Hadoop Table of Contents

- 3. Big Data What is Big Data Processing?

- 4. 4 Big data == process very large data sets (terabytes / petabytes) So large or complex, that traditional data processing is inadequate Usually stored and processed by distributed databases Often related to analysis of very large data sets Typical components of big data systems Distributed databases (like Cassandra, HBase and Hive) Distributed processing frameworks (like Apache Hadoop) Distributed processing systems (like Map-Reduce) Distributed file systems (like HDFS) Big Data

- 5. Map-Reduce

- 6. 6 Map-Reduce is a distributed processing framework Computational model for processing huge data-sets (terabytes) Using parallel processing on large clusters (thousands of nodes) Relying on distributed infrastructure Like Apache Hadoop or MongoDB cluster The input and output data is stored in a distributed file system (or distributed database) The framework takes care of scheduling, executing and monitoring tasks, and re-executes the failed tasks What is Map-Reduce?

- 7. 7 How map-reduce works? 1. Splits the input data-set into independent chunks 2. Process each chunk by "map" tasks in parallel manner The "map" function groups the input data into key-value pairs Equal keys are processed by the same "reduce" node 3. Outputs of the "map" tasks are Sorted, grouped by key, then sent as input to the "reduce" tasks 4. The "reduce" tasks Aggregate the results per each key and produces the final output Map-Reduce: How It Works?

- 8. 8 The map-reduce process is a sequence of transformations, executed on several nodes in parallel Map: groups input chunks of data to key-value pairs E.g. splits documents(id, chunk-content) into words(word, count) Combine: sorts and combines all values by the same key E.g. produce a list of counts for each word Reduce: combines (aggregates) all values for certain key The Map-Reduce Process (input) <key1, val1> map <key2, val2> combine <key2, list<val2>> reduce <key3, val3> (output)

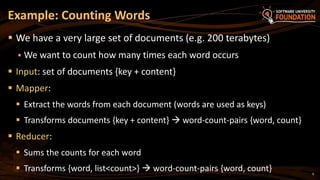

- 9. 9 We have a very large set of documents (e.g. 200 terabytes) We want to count how many times each word occurs Input: set of documents {key + content} Mapper: Extract the words from each document (words are used as keys) Transforms documents {key + content} word-count-pairs {word, count} Reducer: Sums the counts for each word Transforms {word, list<count>} word-count-pairs {word, count} Example: Counting Words

- 10. 10 Counting Words: Mapper and Reducer public void map(Object offset, Text docText, Context context) throws IOException, InterruptedException { String[] words = docText.toString().toLowerCase().split("W+"); for (String word : words) context.write(new Text(word), new IntWritable(1)); } public void reduce(Text word, Iterable<IntWritable> counts, Context context) throws IOException, InterruptedException { int sum = 0; for (IntWritable count : counts) sum += count.get(); context.write(word, new IntWritable(sum)); }

- 11. Word Count in Apache Hadoop Live Demo

- 12. 12 We are given a CSV file holding real estate sales data: Estate address, city, ZIP code, state, # beds, # baths, square foots, sale date price and GPS coordinates (latitude + longitude) Find all cities that have sales in price range [100 000 … 200 000] As side effect, find the sum of all sales by city Example: Extract Data from CSV Report

- 13. 13 Process CSV Report – How It Works? SELECT city, SUM(price) FROM Sales GROUP BY city chunkingchunking map map map city sum(price) SACRAMENTO 625140 LINCOLN 843620 RIO LINDA 348500 reduce reduce reduce

- 14. 14 Process CSV Report: Mapper and Reducer public void map(Object offset, Text inputCSVLine, Context context) throws IOException, InterruptedException { String[] fields = inputCSVLine.toString().split(","); String city = fields[1]; int price = Integer.parseInt(fields[9]); if (price > 100000 && price < 200000) context.write(new Text(city), new LongWritable(price); } public void reduce(Text city, Iterable<LongWritable> prices, Context context) throws IOException, InterruptedException { long sum = 0; for (LongWritable val : prices) sum += val.get(); context.write(city, new LongWritable(sum)); }

- 15. Processing CSV Report in Apache Hadoop Live Demo

- 16. Apache Hadoop Distributed Processing Framework

- 17. 17 Apache Hadoop project develops open-source software for reliable, scalable, distributed computing Hadoop Distributed File System (HDFS) – a distributed file system that transparently moves data across Hadoop cluster nodes Hadoop MapReduce – the map-reduce framework HBase – a scalable, distributed database for large tables Hive – SQL-like query for large datasets Pig – a high-level data-flow language for parallel computation Hadoop is driven by big players like IBM, Microsoft, Facebook, VMware, LinkedIn, Yahoo, Cloudera, Intel, Twitter, Hortonworks, … Apache Hadoop

- 18. 18 Hadoop Ecosystem HDFS Storage Redundant (3 copies) For large files – large blocks 64 MB or 128 MB / block Can scale to 1000s of nodes MapReduce API Batch (Job) processing Distributed and localized to clusters Auto-parallelizable for huge amounts of data Fault-tolerant (auto retries) Adds high availability and more Hadoop Libraries Pig Hive HBase Others

- 19. 19 Hadoop Cluster HDFS (Physical) Storage Name Node Data Node 1 Data Node 2 Data Node 3 Secondary Name Node • Contains web site to view cluster information • V2 Hadoop uses multiple Name Nodes for HA One Name Node • 3 copies of each node by default Many Data Nodes • Using common Linux shell commands • Block size is 64 or 128 MB Work with data in HDFS

- 20. Tips: sudo means "run as administrator" (super user) Some distributions use hadoop dfs rather than hadoop fs Common Hadoop Shell Commands hadoop fs –cat file:///file2 hadoop fs –mkdir /user/hadoop/dir1 /user/hadoop/dir2 hadoop fs –copyFromLocal <fromDir> <toDir> hadoop fs –put <localfile> hdfs://nn.example.com/hadoopfile sudo hadoop jar <jarFileName> <class> <fromDir> <toDir> hadoop fs –ls /user/hadoop/dir1 hadoop fs –cat hdfs://nn1.example.com/file1 hadoop fs –get /user/hadoop/file <localfile>

- 21. Hadoop Shell Commands Live Demo

- 22. 22 Apache Hadoop MapReduce The world's leading implementation of the map-reduce computational model Provides parallelized (scalable) computing For processing very large data sets Fault tolerant Runs on commodity of hardware Implemented in many cloud platforms: Amazon EMR, Azure HDInsight, Google Cloud, Cloudera, Rackspace, HP Cloud, … Hadoop MapReduce

- 24. 24 Download and install Java and Hadoop http://hadoop.apache.org/releases.html You will need to install Java first Download a pre-installed Hadoop virtual machine (VM) Hortonworks Sandbox Cloudera QuickStart VM You can use Hadoop in the cloud / local emulator E.g. Azure HDInsight Emulator Hadoop: Getting Started

- 25. Playing with Apache Hadoop Live Demo

- 26. 26 Big data == processing huge datasets that are too big for processing on a single machine Use a cluster of computing nodes Map-reduce == computational paradigm for parallel data processing of huge data-sets Data is chunked, then mapped into groups, then groups are processed and the results are aggregated Highly scalable, can process petabytes of data Apache Hadoop – industry's leading Map-Reduce framework Summary

- 28. License This course (slides, examples, labs, videos, homework, etc.) is licensed under the "Creative Commons Attribution- NonCommercial-ShareAlike 4.0 International" license 28 Attribution: this work may contain portions from "Fundamentals of Computer Programming with C#" book by Svetlin Nakov & Co. under CC-BY-SA license "Data Structures and Algorithms" course by Telerik Academy under CC-BY-NC-SA license

- 29. Free Trainings @ Software University Software University Foundation – softuni.org Software University – High-Quality Education, Profession and Job for Software Developers softuni.bg Software University @ Facebook facebook.com/SoftwareUniversity Software University @ YouTube youtube.com/SoftwareUniversity Software University Forums – forum.softuni.bg

Editor's Notes

- #21: http://hadoop.apache.org/docs/r0.18.3/hdfs_shell.html http://nsinfra.blogspot.in/2012/06/difference-between-hadoop-dfs-and.html

![10

Counting Words: Mapper and Reducer

public void map(Object offset, Text docText, Context context)

throws IOException, InterruptedException {

String[] words = docText.toString().toLowerCase().split("W+");

for (String word : words)

context.write(new Text(word), new IntWritable(1));

}

public void reduce(Text word, Iterable<IntWritable> counts,

Context context) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable count : counts)

sum += count.get();

context.write(word, new IntWritable(sum));

}](https://image.slidesharecdn.com/nakov-at-bgoug-map-reduce-apache-hadoop-june-2016-160604140223/85/Map-Reduce-and-Apache-Hadoop-10-320.jpg)

![12

We are given a CSV file holding real estate sales data:

Estate address, city, ZIP code, state, # beds, # baths, square foots,

sale date price and GPS coordinates (latitude + longitude)

Find all cities that have sales in price range [100 000 … 200 000]

As side effect, find the sum of all sales by city

Example: Extract Data from CSV Report](https://image.slidesharecdn.com/nakov-at-bgoug-map-reduce-apache-hadoop-june-2016-160604140223/85/Map-Reduce-and-Apache-Hadoop-12-320.jpg)

![14

Process CSV Report: Mapper and Reducer

public void map(Object offset, Text inputCSVLine, Context context)

throws IOException, InterruptedException {

String[] fields = inputCSVLine.toString().split(",");

String city = fields[1];

int price = Integer.parseInt(fields[9]);

if (price > 100000 && price < 200000)

context.write(new Text(city), new LongWritable(price);

}

public void reduce(Text city, Iterable<LongWritable> prices,

Context context) throws IOException, InterruptedException {

long sum = 0;

for (LongWritable val : prices)

sum += val.get();

context.write(city, new LongWritable(sum));

}](https://image.slidesharecdn.com/nakov-at-bgoug-map-reduce-apache-hadoop-june-2016-160604140223/85/Map-Reduce-and-Apache-Hadoop-14-320.jpg)