Migrating from InnoDB and HBase to MyRocks at Facebook

- 1. Migrating InnoDB and HBase to MyRocks ▪ Yoshinori Matsunobu, Production Engineer / MySQL Tech Lead, Facebook ▪ Feb 2019, MariaDB OpenWorks

- 2. What is MyRocks ▪ MySQL on top of RocksDB (Log-Structured Merge Tree Database) ▪ Open source, also distributed by MariaDB and Percona ▪ Diagram: MySQL clients connect through the SQL/Connector layer to the MySQL server (Parser, Optimizer, Replication, etc.), which runs on top of the InnoDB and RocksDB storage engines ▪ http://myrocks.io/

- 3. LSM Compaction Algorithm -- Level ▪ For each level, data is sorted by key ▪ Read Amplification: 1 ~ number of levels (depending on cache -- L0~L3 are usually cached) ▪ Write Amplification: 1 + 1 + fanout * (number of levels – 2) / 2 ▪ Space Amplification: 1.11 ▪ 11% is much smaller than B+Tree’s fragmentation
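
The write amplification formula on this slide can be evaluated directly. The sketch below is illustrative only: the function names are hypothetical, and the space amplification model (every level full, each level `fanout` times larger than the one above it) is an assumption about where the 1.11 figure comes from, not something the slide states. A fanout of 10 is assumed.

```python
def write_amplification(fanout: int, num_levels: int) -> float:
    """Slide formula: 1 + 1 + fanout * (number of levels - 2) / 2."""
    return 1 + 1 + fanout * (num_levels - 2) / 2


def space_amplification(fanout: int, num_levels: int) -> float:
    """Total bytes across all levels divided by bytes in the last level.

    Assumption (not from the slide): every level is full and each level is
    `fanout` times larger than the one above it, which gives the series
    1 + 1/fanout + 1/fanout**2 + ...
    """
    return sum(fanout ** -i for i in range(num_levels))


if __name__ == "__main__":
    print(write_amplification(fanout=10, num_levels=5))
    print(round(space_amplification(fanout=10, num_levels=4), 3))
```

With a fanout of 10 and five levels the formula gives a write amplification of 17, and under the stated assumption the space series stays close to 1.11 however many levels are added.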

- 4. bottommost_compression and compression_per_level ▪ compression_per_level=kLZ4Compression or kNoCompression ▪ bottommost_compression=kZSTD ▪ Use a stronger compression algorithm (Zstandard) in Lmax to save space ▪ Use a faster compression algorithm (LZ4 or none) in higher levels (above Lmax) to keep up with writes

- 5. Read, Write and Space Performance/Efficiency ▪ Pick two of them ▪ InnoDB/B-Tree favors Read at the cost of Write and Space ▪ For a large-scale database on Flash, Space is important ▪ Read inefficiency can be mitigated by Flash and Cache tiers ▪ Write inefficiency cannot be easily resolved ▪ Implementations matter (e.g. ZSTD > Zlib)

- 6. Read, Write and Space Performance/Efficiency ▪ Compressed InnoDB is roughly 2x smaller than uncompressed InnoDB; MyRocks/HBase are 4x smaller ▪ Decompression cost on read is non-zero, but it matters less on I/O-bound workloads ▪ HBase vs MyRocks performance differences came from implementation efficiencies rather than database architecture

- 7. UDB – Migration from InnoDB to MyRocks ▪ UDB: Our largest user database that stores social activities ▪ Biggest motivation was saving space ▪ 2X savings vs compressed InnoDB, 4X vs uncompressed InnoDB ▪ Write efficiency was 10X better ▪ Read efficiency was no worse than X times ▪ Could migrate without rewriting applications

- 8. User Database at Facebook ▪ Chart: Space, IO and CPU usage relative to the machine limit ▪ InnoDB in user database: Space 90%; IO and CPU at 15% and 20% ▪ MyRocks in user database: Space 45%; IO and CPU at 15% and 21%

- 9. MyRocks on Facebook Messaging ▪ In 2010, we created Facebook Messenger and we chose HBase as backend database ▪ LSM database ▪ Write optimized ▪ Smaller space ▪ Good enough on HDD ▪ Successful MyRocks on UDB led us to migrate Messenger as well ▪ MyRocks used much less CPU time, worked well on Flash ▪ p95~99 latency and error rates improved by 10X ▪ Migrated from HBase to MyRocks in 2017~2018

- 10. FB Messaging Migration from HBase to MyRocks

- 11. MyRocks migration -- Technical Challenges ▪ Migration ▪ Creating MyRocks instances without downtime ▪ Loading into MyRocks tables within reasonable time ▪ Verifying data consistency between InnoDB and MyRocks ▪ Serving write traffic ▪ Continuous Monitoring ▪ Resource usage such as space, IOPS, CPU and memory ▪ Query plan outliers ▪ Stalls and crashes
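
The slides do not say how consistency between InnoDB and MyRocks was verified. As one possible illustration, the sketch below compares two row sets with an order-independent fingerprint (row count plus the XOR of per-row CRC32 values). All names are hypothetical, rows are assumed to be unique (for example, because they carry a primary key), and a real check would stream rows from both instances at a consistent snapshot.

```python
import zlib
from typing import Iterable, Tuple

Row = Tuple[object, ...]


def table_fingerprint(rows: Iterable[Row]) -> Tuple[int, int]:
    """Return (row count, XOR of per-row CRC32), independent of row order."""
    count = 0
    digest = 0
    for row in rows:
        count += 1
        # repr() is a stand-in for a canonical row encoding.
        digest ^= zlib.crc32(repr(row).encode("utf-8"))
    return count, digest


def tables_match(left: Iterable[Row], right: Iterable[Row]) -> bool:
    """True when both row sets produce the same fingerprint."""
    return table_fingerprint(left) == table_fingerprint(right)


if __name__ == "__main__":
    innodb_rows = [(1, "alice"), (2, "bob")]
    myrocks_rows = [(2, "bob"), (1, "alice")]  # same rows, different order
    print(tables_match(innodb_rows, myrocks_rows))
```

XOR makes the fingerprint insensitive to scan order, which matters because the two engines may return rows in different physical order when no ORDER BY is used.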

- 12. Creating first MyRocks instance without downtime ▪ Picking one of the InnoDB slave instances, then starting logical dump and restore ▪ Stopping one slave does not affect services ▪ Diagram: Master (InnoDB) with Slave1 (InnoDB), Slave2 (InnoDB), Slave3 (InnoDB); Slave4 is stopped, dumped and loaded as MyRocks

- 13. Faster Data Loading ▪ Normal write path in MyRocks/RocksDB: Write Requests → MemTable + WAL → (Flush) → Level 0 SST → (Compaction) → Level 1 SST → … → (Compaction) → Level max SST ▪ Faster write path: Write Requests → Level max SST

- 14. Creating second MyRocks instance without downtime ▪ myrocks_hotbackup (online binary backup) ▪ Diagram: Master (InnoDB) with Slave1 (InnoDB), Slave2 (InnoDB), Slave3 (MyRocks), Slave4 (MyRocks)

- 15. Shadow traffic tests ▪ We have a shadow test framework ▪ MySQL audit plugin to capture read/write queries from production instances ▪ Replaying them into shadow master instances ▪ Shadow master tests ▪ Client errors ▪ Rewriting queries relying on Gap Lock ▪ Added a feature to detect such queries

- 16. Promoting MyRocks as a master ▪ Diagram: Master (MyRocks) with Slave1 (InnoDB), Slave2 (InnoDB), Slave3 (InnoDB), Slave4 (MyRocks)

- 17. Promoting MyRocks as a master ▪ Diagram: Master (MyRocks) with Slave1 (MyRocks), Slave2 (MyRocks), Slave3 (MyRocks), Slave4 (MyRocks)

- 19. Switching to Direct I/O ▪ MyRocks/RocksDB relied on Buffered I/O while InnoDB had Direct I/O ▪ Heavily dependent on Linux Kernel ▪ Stalls often happened on older Linux Kernel ▪ Wasted memory for slab (10GB+ DRAM was not uncommon) ▪ Swap happened under memory pressure

- 20. Direct I/O configurations in MyRocks/RocksDB ▪ rocksdb_use_direct_reads = ON ▪ rocksdb_use_direct_io_for_flush_and_compaction = ON ▪ rocksdb_cache_high_pri_pool_ratio = 0.5 ▪ Avoids invalidating caches on ad hoc full table scans ▪ SET GLOBAL rocksdb_block_cache_size = X ▪ Dynamically changes the block cache size (the counterpart of InnoDB's buffer pool) online, without causing stalls

- 21. Bulk loading Secondary Keys ▪ ALTER TABLE … ADD INDEX secondary_key (…) ▪ This enters bulk loading path for building a secondary key ▪ A big pain point for us was it blocked live master promotion ▪ (orig master) SET GLOBAL read_only=1; (new master) CHANGE MASTER; read_only=0; ▪ ALTER TABLE blocks SET GLOBAL read_only=1 ▪ Nonblocking bulk loading ▪ CREATE TABLE with primary and secondary keys; ▪ SET SESSION rocksdb_bulk_load_allow_sk=1; ▪ SET SESSION rocksdb_bulk_load=1; ▪ LOAD DATA ….. (bulk loading into primary key, and creating temporary files for secondary keys) ▪ SET SESSION rocksdb_bulk_load=0; // building secondary keys without blocking read_only=1

- 22. Zstandard dictionary compression ▪ A dictionary helps save space and improves decompression speed, especially for small blocks ▪ A dictionary is created for each SST file ▪ Newer Zstandard (1.3.6+) has significant improvements for creating dictionaries ▪ max_dict_bytes (8KB) and zstd_max_train_bytes (256KB) -- compression_opts=-14:6:0:8192:262144
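
To make the colon-separated `compression_opts` value easier to read, here is a small parsing sketch. The slide names only the last two fields (`max_dict_bytes` and `zstd_max_train_bytes`). The names of the first three fields (`window_bits`, `level`, `strategy`) are an assumption based on RocksDB's compression options, and the helper itself is hypothetical.

```python
from typing import NamedTuple


class CompressionOpts(NamedTuple):
    window_bits: int           # assumed field name
    level: int                 # assumed field name
    strategy: int              # assumed field name
    max_dict_bytes: int        # named on the slide (8KB)
    zstd_max_train_bytes: int  # named on the slide (256KB)


def parse_compression_opts(value: str) -> CompressionOpts:
    """Split a five-field, colon-separated compression_opts value into integers."""
    parts = [int(part) for part in value.split(":")]
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}")
    return CompressionOpts(*parts)


if __name__ == "__main__":
    opts = parse_compression_opts("-14:6:0:8192:262144")
    print(opts.max_dict_bytes // 1024, "KB dictionary")
    print(opts.zstd_max_train_bytes // 1024, "KB training sample")
```

The fourth and fifth fields, 8192 and 262144, match the 8KB and 256KB values listed on the slide.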

- 23. Parallel and reliable Manual Compaction ▪ Several configuration changes require rewriting existing data before they take effect ▪ Changing compression algorithm or compression level ▪ Changing bloom filter settings ▪ Changing block size ▪ Parallel Manual Compaction is useful to reformat quickly ▪ set session rocksdb_manual_compaction_threads=24; ▪ set global rocksdb_compact_cf='default'; ▪ (Manual Compaction is done for a specified CF) ▪ Does not block DDL, DML, or replication

- 24. compaction_pri=kMinOverlappingRatio ▪ Default compaction priority (kByCompensatedSize) picks SST files that have many deletions ▪ In general, it reads and writes more than necessary ▪ compaction_pri=kMinOverlappingRatio compacts files that overlap less between levels ▪ Typically reducing read/write bytes and compaction time spent by half
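
The idea behind kMinOverlappingRatio can be sketched as a toy file picker: for each candidate SST file, sum the sizes of the next-level files whose key ranges overlap it, divide by the candidate's own size, and compact the file with the smallest ratio first. This is a simplified model with hypothetical names and integer keys, not RocksDB's actual implementation.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SstFile:
    name: str
    smallest: int  # smallest key in the file
    largest: int   # largest key in the file
    size: int      # file size in bytes


def overlapping_bytes(candidate: SstFile, next_level: List[SstFile]) -> int:
    """Total size of next-level files whose key range intersects the candidate."""
    return sum(
        f.size
        for f in next_level
        if f.smallest <= candidate.largest and candidate.smallest <= f.largest
    )


def pick_min_overlapping_ratio(level: List[SstFile], next_level: List[SstFile]) -> SstFile:
    """Pick the file with the smallest (overlapping bytes / own size) ratio."""
    return min(level, key=lambda f: overlapping_bytes(f, next_level) / f.size)


if __name__ == "__main__":
    level_n = [SstFile("a", 0, 9, 64), SstFile("b", 10, 19, 64)]
    level_n_plus_1 = [
        SstFile("x", 0, 4, 64),
        SstFile("y", 5, 9, 64),
        SstFile("z", 12, 15, 64),
    ]
    print(pick_min_overlapping_ratio(level_n, level_n_plus_1).name)
```

In this example file `a` would drag 128 bytes of next-level data into the compaction while `b` drags only 64, so `b` is picked. Less overlap means fewer bytes read and rewritten per compaction.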

- 25. Controlling compaction volume and speed ▪ From a performance stability point of view: ▪ Each compaction should not run too long ▪ Should not generate new SST files too fast ▪ On Flash, should not remove old SST files too fast ▪ max_compaction_bytes=400MB ▪ rocksdb_sst_mgr_rate_bytes_per_sec = 64MB ▪ rocksdb_delete_obsolete_files_period_micros = 64MB ▪ rocksdb_max_background_jobs = 12 ▪ Compactions start with one thread and gradually add threads based on pending compaction bytes

- 26. TTL based compactions ▪ When the database becomes large, you may run logical-deletion jobs to purge old data ▪ But old Lmax SST files might not be removed as expected, because the deletion markers stay in old SST files in higher levels. As a result, space is not reclaimed ▪ TTL based compaction forces old SST files in higher levels to be compacted, so that space is reclaimed ▪ Diagram, first state of L1/L2/L3: key=1, value=1MB; key=2, value=1MB; … together with key=1, value=null; key=2, value=null ▪ Diagram, second state of L1/L2/L3: key=1, value=null; key=2, value=null; …
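
The space problem described on this slide can be reproduced with a toy model. In the sketch below each level is a dictionary, `None` stands for a deletion marker, and a compaction into the last level is forced only when the oldest upper-level file exceeds a TTL. The names and the single-age trigger are simplifying assumptions, not RocksDB's real logic.

```python
from typing import Dict, List, Optional

MB = 1024 * 1024
Level = Dict[int, Optional[bytes]]  # key -> value; None marks a deletion


def stored_bytes(levels: List[Level]) -> int:
    """Bytes of values physically stored across all levels."""
    return sum(len(v) for level in levels for v in level.values() if v is not None)


def compact_to_bottom(levels: List[Level]) -> List[Level]:
    """Merge every level into the last one; upper (newer) entries win."""
    merged: Level = dict(levels[-1])
    for level in reversed(levels[:-1]):  # apply older levels first, newest last
        merged.update(level)
    # Deletion markers that reach the last level can be dropped entirely.
    bottom = {k: v for k, v in merged.items() if v is not None}
    return [{} for _ in levels[:-1]] + [bottom]


def ttl_compact(levels: List[Level], oldest_upper_file_age_s: int, ttl_s: int) -> List[Level]:
    """Force a compaction only when the oldest upper-level file exceeds the TTL."""
    if oldest_upper_file_age_s > ttl_s:
        return compact_to_bottom(levels)
    return levels


if __name__ == "__main__":
    levels = [{1: None, 2: None}, {}, {1: b"x" * MB, 2: b"x" * MB}]
    print(stored_bytes(levels) // MB, "MB before")
    print(stored_bytes(ttl_compact(levels, 200, 100)) // MB, "MB after")
```

Until the deletion markers in the top level are merged down, the two 1MB values in the last level keep occupying space even though both keys are logically deleted.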

- 27. Read Free Replication ▪ Read Free Replication is a feature to skip random reads on replicas ▪ Skipping unique constraint checking on INSERT (slave) ▪ Skipping finding rows on UPDATE/DELETE (slave) ▪ (Upcoming) Skipping both on REPLACE (master/slave) ▪ rocksdb-read-free-rpl = OFF, PK_ONLY, PK_SK ▪ Enables Read Free for INSERT, UPDATE and DELETE on tables, depending on whether secondary keys exist ▪ If you update data outside of replication, secondary indexes may become inconsistent

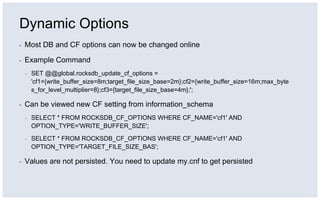
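
The effect of Read Free Replication on a replica can be illustrated with a toy key-value store that counts reads. The sketch assumes the replicated event carries the full new row, so the replica can write it without first looking up the existing row. The class and function names are hypothetical and this is not how MyRocks is implemented internally.

```python
from typing import Dict, Optional


class CountingStore:
    """Toy key-value store that counts point reads."""

    def __init__(self) -> None:
        self.rows: Dict[int, dict] = {}
        self.reads = 0

    def get(self, pk: int) -> Optional[dict]:
        self.reads += 1
        return self.rows.get(pk)

    def put(self, pk: int, row: dict) -> None:
        self.rows[pk] = row


def apply_update(store: CountingStore, pk: int, after_image: dict, read_free: bool) -> None:
    """Apply a replicated UPDATE, with or without the row lookup."""
    if not read_free:
        # Normal path: find the existing row first (a random read).
        if store.get(pk) is None:
            raise KeyError(f"row {pk} not found on replica")
    # Read-free path: write the new row blindly.
    store.put(pk, after_image)


if __name__ == "__main__":
    store = CountingStore()
    store.put(1, {"v": 0})
    for i in range(3):
        apply_update(store, 1, {"v": i}, read_free=True)
    print(store.reads, store.rows[1])
```

Because the read-free path never checks the existing row, a replica that was modified outside of replication will not detect the mismatch, which is the inconsistency risk the slide warns about.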

- 28. Dynamic Options ▪ Most DB and CF options can now be changed online ▪ Example command ▪ SET @@global.rocksdb_update_cf_options = 'cf1={write_buffer_size=8m;target_file_size_base=2m};cf2={write_buffer_size=16m;max_bytes_for_level_multiplier=8};cf3={target_file_size_base=4m};'; ▪ New CF settings can be viewed from information_schema ▪ SELECT * FROM ROCKSDB_CF_OPTIONS WHERE CF_NAME='cf1' AND OPTION_TYPE='WRITE_BUFFER_SIZE'; ▪ SELECT * FROM ROCKSDB_CF_OPTIONS WHERE CF_NAME='cf1' AND OPTION_TYPE='TARGET_FILE_SIZE_BASE'; ▪ Values are not persisted across restarts; update my.cnf as well to persist them
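
The `rocksdb_update_cf_options` value is a nested string that is easy to mistype by hand. A small helper can assemble it from a mapping, as sketched below. The helper names are hypothetical and the sketch only builds the text of the statement; it does not connect to a server.

```python
from typing import Mapping


def build_update_cf_options(cfs: Mapping[str, Mapping[str, str]]) -> str:
    """Render {cf: {option: value}} as 'cf={opt=val;opt=val};cf={...};'."""
    return "".join(
        "{}={{{}}};".format(cf, ";".join(f"{k}={v}" for k, v in opts.items()))
        for cf, opts in cfs.items()
    )


def build_set_statement(cfs: Mapping[str, Mapping[str, str]]) -> str:
    """Wrap the option string in the SET statement shown on the slide."""
    return f"SET @@global.rocksdb_update_cf_options = '{build_update_cf_options(cfs)}';"


if __name__ == "__main__":
    print(
        build_set_statement(
            {
                "cf1": {"write_buffer_size": "8m", "target_file_size_base": "2m"},
                "cf2": {"write_buffer_size": "16m", "max_bytes_for_level_multiplier": "8"},
                "cf3": {"target_file_size_base": "4m"},
            }
        )
    )
```

Keeping the same mapping alongside the my.cnf settings makes it harder for the online change and the persisted configuration to drift apart.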

- 29. Our current status ▪ Our two biggest database services (UDB and Facebook Messenger) have been reliably running on top of MyRocks ▪ Efficiency wins : InnoDB to MyRocks ▪ Performance and Reliability wins : HBase to MyRocks ▪ Gradually working on migrating long tail, smaller database services to MyRocks

- 30. Future Plans ▪ MySQL 8.0 ▪ Pushing more efficiency efforts ▪ Simple read query paths to be much more CPU efficient ▪ Working without WAL, engine crash recovery relying on Binlog ▪ Towards more general purpose database ▪ Gap Lock and Foreign Key ▪ Long running transactions ▪ Online and fast schema changes ▪ Mixing MyRocks and InnoDB in the same instance
