Migrating to Apache Spark at Netflix

- 1. Migrating to Spark at Netflix (Ryan Blue, Spark Summit 2019)

- 3. Long ago . . .
  - ETL was mostly written in Pig, with some in Hive
  - Pipelines required data engineering
  - Data engineers had to understand the processing engine

- 6. Today (charts: S3 bytes read, S3 bytes written)

- 7. Today
  - Spark is > 90% of job executions – high tens-of-thousands daily
  - Data platform is easier to use and more efficient
  - Customers from all parts of the business

- 8. How did we get there?

- 9. Not included
  - High-profile Spark features: DataFrames, codegen, etc.
  - S3 optimizations and committers
  - Parquet filtering, tuning, and compression
  - Notebook environment

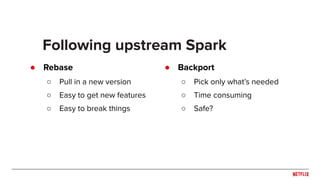

- 11. Following upstream Spark
  - Rebase
    - Pull in a new version
    - Easy to get new features
    - Easy to break things
  - Backport
    - Pick only what’s needed
    - Time consuming
    - Safe?

- 12. Netflix: Parallel branches
  - Maintain supported versions in parallel using backports
  - Periodic rebase to add new minor versions: 1.6, 2.0, 2.1, 2.3
  - Recommend version based on actual use and experience
  - Requires patching job submission

- 13. Benefits of parallel branches
  - Easily test another branch before spending time
  - Avoids coordinating versions across major applications
  - Fast iteration: deploy changes several times per week

- 14. Testing
  - Unstable branches
  - Nightly canaries for stable and unstable
  - CI runs unit tests for unstable
  - Integration tests validate every deployment

- 15. Supported versions
  - 1.6 – scale problems
  - 2.0 – a little too unpolished
  - 2.1 – solid, with some additional love
  - 2.3 – slow migration, faster in some cases

- 16. Challenges

- 17. Stability
  - 1.6 is unstable above 500 executors
    - Use of the Actor model caused coarse locking
    - RPC dependencies make lock issues worse
    - Runaway retry storms
  - Spark needs distributed tracing

- 18. Stability
  - Much better in 2.1, plus patches
    - Remove block status data from heartbeats (SPARK-20084)
    - Multi-threaded listener bus (SPARK-18838)
    - Unstable executor requests (SPARK-20540)
  - 2.1 and 2.3 still have problems with 100,000+ tasks
    - Applications hang after shutdown
    - Increase job maxPartitionBytes or coalesce

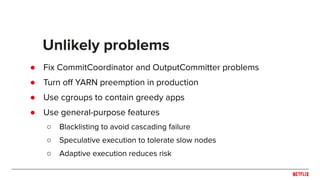

- 19. Unlikely problems
  - Happen all the time at scale
  - Scale in several dimensions
    - Large clusters, lots of disks to fail
    - High tens-of-thousands of executions
    - Many executors, many tasks, diverse workloads

- 20. Unlikely problems
  - Fix CommitCoordinator and OutputCommitter problems
  - Turn off YARN preemption in production
  - Use cgroups to contain greedy apps
  - Use general-purpose features
    - Blacklisting to avoid cascading failure
    - Speculative execution to tolerate slow nodes
    - Adaptive execution reduces risk
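
The general-purpose features on this slide correspond to ordinary Spark settings. The following is a minimal sketch, assuming the stock Spark 2.x property names `spark.blacklist.enabled`, `spark.speculation`, and `spark.sql.adaptive.enabled`. The slides do not show the values Netflix used, so the values and the `to_submit_args` helper are illustrative only.

```python
# Illustrative only: the slides name the features, not the settings Netflix used.
RESILIENCE_CONF = {
    "spark.blacklist.enabled": "true",     # stop scheduling on executors/nodes that keep failing
    "spark.speculation": "true",           # re-launch slow tasks on other executors
    "spark.sql.adaptive.enabled": "true",  # let Spark adjust shuffle partitioning at runtime
}


def to_submit_args(conf):
    """Render a config dict as spark-submit --conf arguments (hypothetical helper)."""
    args = []
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    print(" ".join(to_submit_args(RESILIENCE_CONF)))
```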

- 21. Memory management
  - Fix persistent OOM causes
    - Use less driver memory for broadcast joins (SPARK-22170)
    - Add PySpark memory region and limits (SPARK-25004)
    - Base stats on row count, not size on disk

- 22. Memory management
  - Educate users about memory regions
    - Spark memory vs JVM memory vs overhead
    - Know what region fixes your problem (e.g., spilling)
    - Never set spark.executor.memory without also setting spark.memory.fraction
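
To make the three regions concrete, here is a rough sketch of how an executor's memory divides up. It assumes the stock Spark 2.x defaults on YARN as I understand them: 300 MB of reserved heap, `spark.memory.fraction` of 0.6, `spark.memory.storageFraction` of 0.5, and a container overhead of 10% of the heap with a 384 MB floor. The function name and returned keys are hypothetical, and a patched build may use different numbers.

```python
RESERVED_MB = 300  # heap that Spark sets aside before applying spark.memory.fraction


def executor_memory_regions(executor_memory_mb, memory_fraction=0.6,
                            storage_fraction=0.5, overhead_factor=0.10,
                            min_overhead_mb=384):
    """Approximate the memory regions for one executor, in MB.

    Defaults mirror stock Spark 2.x on YARN (an assumption, not Netflix's values).
    """
    usable = executor_memory_mb - RESERVED_MB
    unified = usable * memory_fraction      # "Spark memory": execution + storage
    storage = unified * storage_fraction    # part of unified protected for cached blocks
    user = usable - unified                 # remaining JVM heap for user objects
    overhead = max(min_overhead_mb, executor_memory_mb * overhead_factor)
    return {
        "unified_mb": unified,
        "storage_mb": storage,
        "user_mb": user,
        "overhead_mb": overhead,
        "container_mb": executor_memory_mb + overhead,
    }


if __name__ == "__main__":
    for name, value in executor_memory_regions(8192).items():
        print(f"{name}: {value:.1f}")
```

Under these assumptions, raising `spark.executor.memory` alone grows every heap region proportionally, which may not be the region that fixes a spilling problem; that is one reading of why the slide pairs the two settings.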

- 23. Best practices

- 24. Basics
  - Avoid RDDs
    - Kryo problems plagued 1.6 apps
    - Let the optimizer improve jobs over time
  - Aggressively broadcast
    - Remove the broadcast timeout
    - Set broadcast threshold much higher
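
The broadcast advice can be sketched with the two stock settings involved, `spark.sql.autoBroadcastJoinThreshold` (10 MiB by default) and `spark.sql.broadcastTimeout` (300 seconds by default). The slide gives no numbers, so the values in the usage line are made up, and the `broadcast_conf` helper is hypothetical. As far as I know, stock Spark can only lengthen the timeout rather than remove it, so removing it entirely may rely on a patched build.

```python
MIB = 1024 * 1024


def broadcast_conf(threshold_mib, timeout_seconds):
    """Build broadcast-join settings as a dict of Spark property strings."""
    return {
        # Tables estimated to be smaller than this many bytes are broadcast automatically.
        "spark.sql.autoBroadcastJoinThreshold": str(threshold_mib * MIB),
        # How long the driver waits for the broadcast side to be built, in seconds.
        "spark.sql.broadcastTimeout": str(timeout_seconds),
    }


if __name__ == "__main__":
    # Example values only; the slides do not say what Netflix chose.
    print(broadcast_conf(threshold_mib=512, timeout_seconds=36000))
```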

- 25. Configuration
  - 3 rules:
    - Don’t copy configuration
    - If you don’t know what it does, don’t change it
    - Never change timeouts
  - Document defaults and recommendations

- 26. Parallelism
  - Know how to control parallelism
    - spark.sql.shuffle.partitions, spark.sql.files.maxPartitionBytes
    - repartition vs coalesce
  - Use the least-intrusive option
    - Set shuffle parallelism high and use adaptive execution
    - Allow Spark to improve
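
As a back-of-the-envelope illustration of how `spark.sql.files.maxPartitionBytes` controls read parallelism, the sketch below estimates the number of input tasks as the input size divided by the split size. This is a simplification: Spark's real split planning also accounts for file boundaries, the file open cost, and default parallelism. The function name is hypothetical; the 128 MiB default is the stock value.

```python
MIB = 1024 * 1024
DEFAULT_MAX_PARTITION_BYTES = 128 * MIB  # stock default for spark.sql.files.maxPartitionBytes


def estimate_input_tasks(total_input_bytes, max_partition_bytes=DEFAULT_MAX_PARTITION_BYTES):
    """Rough task count for a file scan: ceil(total bytes / split size)."""
    if max_partition_bytes <= 0:
        raise ValueError("max_partition_bytes must be positive")
    return -(-total_input_bytes // max_partition_bytes)  # integer ceiling division


if __name__ == "__main__":
    one_tib = 1024 ** 4
    print(estimate_input_tasks(one_tib))             # with the 128 MiB default
    print(estimate_input_tasks(one_tib, 512 * MIB))  # with larger splits, fewer tasks
```

By this estimate, quadrupling the split size cuts the task count by a factor of four without adding a `repartition` or `coalesce` to the job.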

- 27. Avoid wide stages
  - Keep tasks in low tens-of-thousands
    - Too many tasks and the driver can’t handle heartbeats
    - Jobs hang for 10+ minutes after shutdown
  - Reduce pressure on shuffle service
    - map tasks * reduce tasks = shuffle shards
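
The shard formula on this slide grows quadratically when both sides of a shuffle widen together, which a short calculation makes visible. The function name is hypothetical and the task counts are illustrative.

```python
def shuffle_shards(map_tasks, reduce_tasks):
    """Number of shuffle shards: every reduce task reads a piece from every map task."""
    return map_tasks * reduce_tasks


if __name__ == "__main__":
    for tasks in (1_000, 10_000, 100_000):
        print(f"{tasks:>7} x {tasks:>7} tasks -> {shuffle_shards(tasks, tasks):,} shards")
```

Going from 10,000 to 100,000 tasks on each side multiplies the shard count by 100, not 10.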

- 28. Dynamic Allocation
  - Fixed --num-executors accidents (SPARK-13723)
  - Use materialize instead of caching
    - Materialize: convert to RDD, back to DF, and count
    - Stores cache data in shuffle servers
    - Also avoids over-optimization
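
The materialize step can be sketched in PySpark terms as follows. This is a guess at the shape of the technique from the one-line description on the slide, not Netflix's implementation: the function name is hypothetical, and `spark` and `df` are assumed to be an existing `SparkSession` and `DataFrame`. It uses only `DataFrame.rdd`, `DataFrame.schema`, `SparkSession.createDataFrame`, and `DataFrame.count`.

```python
def materialize(spark, df):
    """Sketch of 'convert to RDD, back to DF, and count'.

    spark: an existing SparkSession; df: a DataFrame (both assumed to be supplied).
    """
    # Rebuilding from the RDD cuts the logical plan, so the optimizer treats the
    # result as an opaque source instead of re-optimizing through it.
    rebuilt = spark.createDataFrame(df.rdd, df.schema)
    # Counting forces the job to run now. Any shuffle output it produces can be
    # reused by later stages while those shuffle files remain available.
    rebuilt.count()
    return rebuilt
```

Unlike `df.cache()`, nothing here is pinned in executor memory, which is presumably why the slide groups it with dynamic allocation: executors holding cached blocks are harder to release.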

- 29. Sort before writing
  - Add ORDER BY
    - Partition columns, filter columns, and one high cardinality column
  - Benefits
    - Cluster by partition columns – minimize output files
    - Cluster by common filter columns – faster reads
    - Automatic skew estimation – faster writes (wall time)
  - Needs adaptive execution support
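
The column ordering described on this slide can be sketched as a small helper that assembles the `ORDER BY` clause. The helper and the column names in the usage line are hypothetical; only the ordering (partition columns, then filter columns, then one high-cardinality column) comes from the slide.

```python
def sort_before_write_clause(partition_cols, filter_cols, high_cardinality_col):
    """Build an ORDER BY clause: partition columns, filter columns, one high-cardinality column."""
    ordered = []
    for col in [*partition_cols, *filter_cols, high_cardinality_col]:
        if col not in ordered:  # keep the first occurrence if a column is listed twice
            ordered.append(col)
    return "ORDER BY " + ", ".join(ordered)


if __name__ == "__main__":
    # Column names are made up for illustration.
    print(sort_before_write_clause(["dateint"], ["country"], "account_id"))
```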

- 30. Current problems

- 31. Shuffle service
  - Easy to overload one node
    - Skewed data, not enough threads, GC
  - Prevents graceful shrink
  - Causes huge runtime variance

- 32. Memory management
  - Collect is wasteful
    - Iterate through compressed result blocks to collect
  - Configuration is confusing
    - Memory fraction is often ignored
    - Simpler is better
  - Should build broadcast tables on executors

- 33. DataSourceV2
  - Forked the write path for 2.x releases
    - Consistent rules across “datasource” and Hive tables
    - Remove unsafe operations, like implicit unsafe casts
    - Dynamic partition overwrites and Netflix “batch” pattern
  - Fix upstream behavior and consistency with DSv2
  - Fix table usability with Iceberg
    - Schema evolution and partitioning