MLSD18. Automating Machine Learning Workflows

- 1. Automating Machine Learning Workflows #MLDS18 Doha, November 2018 #MLDS18 Automating Machine Learning Workflows Doha, November 2018 1 / 57

- 2. Outline
  1. ML as a System Service
  2. ML as a RESTful Cloudy Service
  3. Machine Learning Workflows on an ML Service
  4. Client-side Workflow Automation
  5. Server-side Workflow Automation
  6. ML Algorithms as Server-side Workflows

- 3. Outline: 1. ML as a System Service

- 4. Machine Learning as a System Service

  The goal: Machine Learning as a system-level service
  - Accessibility
  - Integrability
  - Automation
  - Ease of use

- 5. Machine Learning as a System Service

- 6. Machine Learning as a System Service

  The goal: Machine Learning as a system-level service

  The means:
  - APIs: ML building blocks
  - Abstraction layer over feature engineering
  - Abstraction layer over algorithms
  - Automation

- 7. Outline: 2. ML as a RESTful Cloudy Service

- 8. RESTful-ish ML Services

- 9. RESTful-ish ML Services

- 10. RESTful-ish ML Services

- 11. RESTful-ish ML Services

  Web UI: REST resources in action

- 12. RESTful-ish ML Services
  - Excellent abstraction layer
  - Transparent data model
  - Immutable resources and UUIDs: traceability
  - Simple yet effective interaction model
  - Easy access from any language (API bindings)

  Algorithmic complexity and computing resource management problems are mostly washed away.

- 13. RESTful done right: Whitebox resources
  - Your data, your model
  - Model reverse engineering becomes moot
  - Maximizes reach (Web, CLI, desktop, IoT)

  Example: Alexa skills using ML resources

- 14. Outline: 3. Machine Learning Workflows on an ML Service

- 15. Idealized Machine Learning Workflows

  Dr. Natalia Konstantinova (http://nkonst.com/machine-learning-explained-simple-words/)

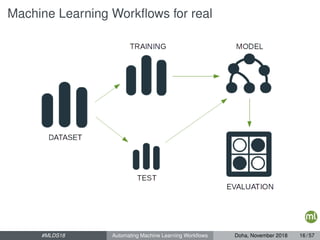

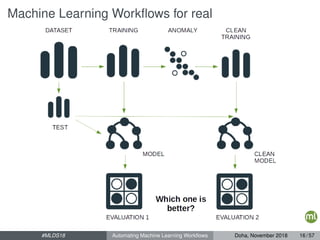

- 16. Machine Learning Workflows for real

- 17. Machine Learning Workflows for real

- 18. Machine Learning Workflows for real

  Jeannine Takaki, Microsoft Azure Team

- 19. Outline: 4. Client-side Workflow Automation

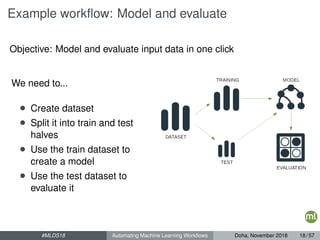

- 20. Example workflow: Model and evaluate

  Objective: Model and evaluate input data in one click

  We need to...
  - Create a dataset
  - Split it into train and test sets
  - Use the train dataset to create a model
  - Use the test dataset to evaluate it

- 21. Example workflow: Web UI

- 22. (Non-)automation via Web UI
  - Strengths of Web UI
    - Simple: just clicking around
    - Discoverable: exploration and experimenting
    - Abstract: transparent error handling and scalability
  - Problems of Web UI
    - Only simple: simple tasks are simple, hard tasks quickly get hard
    - No automation or batch operations: clicking humans don't scale well

- 23. Example workflow: automation via REST

  ```shell
  # $AUTH holds the credentials query string
  curl -X POST "https://bigml.io/dataset?$AUTH" \
       -H "content-type: application/json" \
       -d '{"source": "source/56fbbfea200d5a3403000db7"}'
  curl -X POST "https://bigml.io/cluster?$AUTH" \
       -H "content-type: application/json" \
       -d '{"dataset": "dataset/43ffe231a34fff333000b65"}'
  curl -X POST "https://bigml.io/batchcentroid?$AUTH" \
       -H "content-type: application/json" \
       -d '{"dataset": "dataset/43ffe231a34fff333000b65",
            "cluster": "cluster/33e2e231a34fff333000b65"}'
  curl -X GET "https://bigml.io/dataset/1234ff45eab8c0034334?$AUTH"
  ```
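Each curl call above boils down to an HTTP POST with a JSON body and the credentials in the query string. A minimal sketch with Python's standard library that only builds such a request and does not send it; the base URL, the `username=...;api_key=...` credential format and the helper name `build_create_request` are assumptions for illustration.

```python
import json
import urllib.request

# Assumed base URL; check the service documentation for the exact endpoint.
BASE_URL = "https://bigml.io"

def build_create_request(resource_type, payload, auth):
    """Build (but do not send) a POST request that creates a resource.

    resource_type: e.g. "dataset" or "cluster"
    payload: dict sent as the JSON request body
    auth: query string holding the credentials (hypothetical format)
    """
    url = f"{BASE_URL}/{resource_type}?{auth}"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_create_request(
    "dataset",
    {"source": "source/56fbbfea200d5a3403000db7"},
    "username=alice;api_key=SECRET",
)
print(req.get_method(), req.full_url)
```

Sending it would take `urllib.request.urlopen(req)`; every further step of the workflow needs another request like this one, plus polling until the new resource is ready.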

- 24. Automation via HTTP
  - Strengths of HTTP automation
    - Scriptable: automation is possible, but that's about it
  - Problems of direct HTTP automation
    - Not simple: error handling and scalability must be handled explicitly
    - Not discoverable: or at least not easily
    - Not scalable: exponential complexity with workflow size

- 25. Abstracting over raw HTTP: bindings

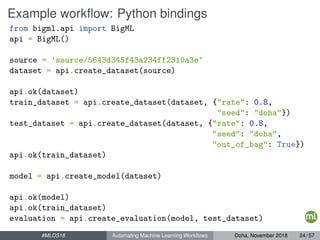

- 26. Example workflow: Python bindings

  ```python
  from bigml.api import BigML

  api = BigML()  # credentials are read from the environment
  source = 'source/5643d345f43a234ff2310a3e'
  dataset = api.create_dataset(source)
  api.ok(dataset)  # wait until the dataset is ready
  # 80% sample for training, its out-of-bag complement for testing
  train_dataset = api.create_dataset(dataset, {"sample_rate": 0.8, "seed": "doha"})
  test_dataset = api.create_dataset(dataset, {"sample_rate": 0.8, "seed": "doha",
                                              "out_of_bag": True})
  api.ok(train_dataset)
  model = api.create_model(train_dataset)
  api.ok(model)
  api.ok(test_dataset)
  evaluation = api.create_evaluation(model, test_dataset)
  api.ok(evaluation)
  ```
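The train/test pair above relies on two datasets built with the same seed and sampling rate: one keeps the sampled rows, the `out_of_bag` one keeps the rest. A minimal sketch of why that yields disjoint, complementary sets, assuming a deterministic hash-based per-row sampler; this illustrates the idea and is not BigML's actual sampling algorithm, and `sample_rows` is a hypothetical helper.

```python
import hashlib

def _in_sample(seed, row_index, rate):
    """Deterministically decide whether a row is sampled.

    Hashes (seed, row_index) to a number in [0, 1) and keeps the row
    when that number is below `rate`.
    """
    digest = hashlib.sha256(f"{seed}:{row_index}".encode()).digest()
    u = int.from_bytes(digest[:8], "big") / 2**64
    return u < rate

def sample_rows(rows, rate, seed, out_of_bag=False):
    """Return the sampled rows, or their complement when out_of_bag=True."""
    return [row for i, row in enumerate(rows)
            if _in_sample(seed, i, rate) != out_of_bag]

rows = list(range(1000))
train = sample_rows(rows, 0.8, "doha")
test = sample_rows(rows, 0.8, "doha", out_of_bag=True)
print(len(train), len(test))
```

Because each row's fate depends only on the seed and its position, the same seed reproduces the same split, while a different seed (as in the 100-iteration loops) gives a different one.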

- 27. Example workflow: Python bindings

  ```python
  # Now do it 100 times, serially
  models, evaluations = [], []
  for i in range(100):
      train = api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i)})
      test = api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i),
                                          "out_of_bag": True})
      api.ok(train)
      models.append(api.create_model(train))
      api.ok(models[i])
      api.ok(test)
      evaluations.append(api.create_evaluation(models[i], test))
      api.ok(evaluations[i])
  ```

- 28. Example workflow: Python bindings

  ```python
  # Now do it 100 times, serially, and with error handling...
  models, evaluations = [], []
  for i in range(100):
      try:
          train = api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i)})
          test = api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i),
                                              "out_of_bag": True})
          api.ok(train)
          models.append(api.create_model(train))
          api.ok(models[-1])
          api.ok(test)
          evaluations.append(api.create_evaluation(models[-1], test))
          api.ok(evaluations[-1])
      except Exception:
          # Recover, retry?
          # What do we do with the resources from the previous i iterations?
          raise
  ```
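One possible answer to the questions in the `except` branch: retry each iteration a bounded number of times, deleting whatever that iteration created before retrying. A minimal sketch against a hypothetical client exposing `create(kind, **args)` and `delete(resource_id)`; the `FlakyClient` class, its method names and the retry policy are illustrative assumptions, not part of the BigML bindings.

```python
import itertools

class FlakyClient:
    """Hypothetical in-memory stand-in for a remote ML service."""

    def __init__(self, fail_on):
        self.fail_on = set(fail_on)      # call numbers that raise an error
        self.calls = itertools.count(1)  # numbers every create() call
        self.live = {}                   # resource id -> kind

    def create(self, kind, **args):
        n = next(self.calls)
        if n in self.fail_on:
            raise RuntimeError(f"{kind} creation failed (call {n})")
        rid = f"{kind}/{n}"
        self.live[rid] = kind
        return rid

    def delete(self, rid):
        self.live.pop(rid, None)

def run_iteration(client, seed):
    """Split, model and evaluate once; delete partial results on failure."""
    created = []
    try:
        created.append(client.create("dataset", seed=seed))
        created.append(client.create("dataset", seed=seed, out_of_bag=True))
        created.append(client.create("model", dataset=created[0]))
        created.append(client.create("evaluation", model=created[2],
                                     dataset=created[1]))
        return created[-1]
    except RuntimeError:
        for rid in created:              # roll back this iteration only
            client.delete(rid)
        raise

def run_all(client, n, max_retries=3):
    """Run n iterations, retrying each one up to max_retries times."""
    evaluations = []
    for seed in range(n):
        for attempt in range(max_retries):
            try:
                evaluations.append(run_iteration(client, seed))
                break
            except RuntimeError:
                if attempt == max_retries - 1:
                    raise                # earlier iterations stay intact
    return evaluations

client = FlakyClient(fail_on={3, 10})
evaluations = run_all(client, 5)
print(evaluations, len(client.live))
```

Even this simple policy needs explicit bookkeeping of created resources, which is exactly the kind of detail outside the problem domain that the next slides complain about.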

- 29. Example workflow: Python bindings

  ```python
  # More efficient if we parallelize, but at what level?
  train, test, models, evaluations = [], [], [], []
  for i in range(100):
      train.append(api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i)}))
      test.append(api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i),
                                               "out_of_bag": True}))
      # Do we wait here?
      api.ok(train[i])
      api.ok(test[i])
  for i in range(100):
      models.append(api.create_model(train[i]))
      api.ok(models[i])
  for i in range(100):
      evaluations.append(api.create_evaluation(models[i], test[i]))
      api.ok(evaluations[i])
  ```

- 30. Example workflow: Python bindings

  ```python
  # More efficient if we parallelize, but at what level?
  train, test, models, evaluations = [], [], [], []
  for i in range(100):
      train.append(api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i)}))
      test.append(api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i),
                                               "out_of_bag": True}))
  for i in range(100):
      # Or do we wait here?
      api.ok(train[i])
      models.append(api.create_model(train[i]))
  for i in range(100):
      # and here?
      api.ok(models[i])
      api.ok(test[i])
      evaluations.append(api.create_evaluation(models[i], test[i]))
      api.ok(evaluations[i])
  ```

- 31. Example workflow: Python bindings

  ```python
  # More efficient if we parallelize, but how do we handle errors?
  train, test, models, evaluations = [], [], [], []
  for i in range(100):
      train.append(api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i)}))
      test.append(api.create_dataset(dataset, {"sample_rate": 0.8, "seed": str(i),
                                               "out_of_bag": True}))
  for i in range(100):
      api.ok(train[i])
      models.append(api.create_model(train[i]))
  for i in range(100):
      try:
          api.ok(models[i])
          api.ok(test[i])
          evaluations.append(api.create_evaluation(models[i], test[i]))
          api.ok(evaluations[-1])
      except Exception:
          # How do we recover if test[i] has failed? New datasets? Abort?
          raise
  ```
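One way to frame the "at what level?" question: treat each split → model → evaluation chain as one independent task and run the chains concurrently, so a failure stays confined to its own chain. A minimal sketch with `concurrent.futures`; `evaluate_split` is a hypothetical stand-in for the remote calls (it only sleeps, and fails on purpose for one seed), not a BigML binding.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def evaluate_split(seed):
    """Hypothetical chain: create split, train model, evaluate.

    The remote calls are simulated with a short sleep; seed 13 fails on
    purpose to show that one broken chain does not affect the others.
    """
    time.sleep(0.01)
    if seed == 13:
        raise RuntimeError(f"test dataset for seed {seed} failed")
    return {"seed": seed, "evaluation": f"evaluation/{seed}"}

def run_chains(seeds, max_workers=10):
    """Run one chain per seed concurrently; collect results and failures."""
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {seed: pool.submit(evaluate_split, seed) for seed in seeds}
        for seed, future in futures.items():
            try:
                results[seed] = future.result()   # re-raises worker errors
            except RuntimeError as err:
                failures[seed] = str(err)         # retry, skip or abort later
    return results, failures

results, failures = run_chains(range(100))
print(len(results), sorted(failures))
```

Waiting happens only in `future.result()`, once per chain, and failed chains are collected so the retry-or-abort decision is made after the fact rather than inside the loops. The client still owns all of this machinery, though.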

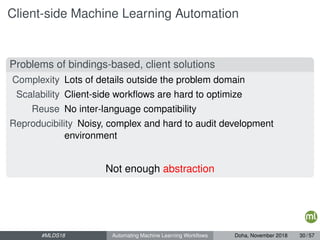

- 32. Client-side Machine Learning Automation

  Problems of bindings-based, client solutions:
  - Complexity: lots of details outside the problem domain
  - Scalability: client-side workflows are hard to optimize
  - Reuse: no inter-language compatibility
  - Reproducibility: noisy, complex and hard-to-audit development environment

  Not enough abstraction

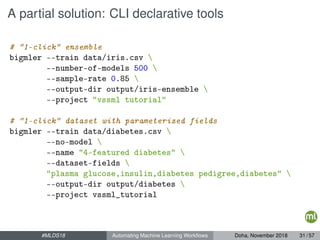

- 33. A partial solution: CLI declarative tools

  ```shell
  # "1-click" ensemble
  bigmler --train data/iris.csv \
          --number-of-models 500 \
          --sample-rate 0.85 \
          --output-dir output/iris-ensemble \
          --project "vssml tutorial"

  # "1-click" dataset with parameterized fields
  bigmler --train data/diabetes.csv \
          --no-model \
          --name "4-featured diabetes" \
          --dataset-fields "plasma glucose,insulin,diabetes pedigree,diabetes" \
          --output-dir output/diabetes \
          --project vssml_tutorial
  ```

- 34. Rich, parameterized workflows: cross-validation

  ```shell
  # --dataset: parameterized input (the dataset ID that the previous
  #            command stored in output/diabetes)
  # --k-folds: number of folds during validation
  bigmler analyze --cross-validation \
          --dataset $(cat output/diabetes/dataset) \
          --k-folds 3 \
          --output-dir output/diabetes-validation
  ```
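What `--k-folds 3` asks for, conceptually: partition the rows into k folds, then for each fold train on the other k - 1 folds and evaluate on the held-out one. A generic sketch of that bookkeeping in Python; it is not how bigmler implements cross-validation internally, and `k_fold_splits` is a hypothetical helper.

```python
import random

def k_fold_splits(n_rows, k, seed=0):
    """Yield (train_indices, test_indices) for each of k folds."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]     # k near-equal folds
    for i, test in enumerate(folds):
        # Training rows: every fold except the held-out one.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield sorted(train), sorted(test)

splits = list(k_fold_splits(10, 3))
for train, test in splits:
    print(len(train), len(test))
```

The declarative flag hides all of this, which is the strength of the CLI tool and also its limit: the loop cannot be reshaped from the outside.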

- 35. Client-side Machine Learning Automation

  Problems of client-side solutions:
  - Hard to generalize: declarative client tools hide complexity at the cost of flexibility
  - Hard to combine: black-box tools cannot be easily integrated as parts of bigger client-side workflows
  - Hard to audit: client-side development environments are complex and very hard to sandbox

  Not enough automation

- 36. Client-side Machine Learning Automation

  Problems of client-side solutions:
  - Complex: too fine-grained, leaky abstractions
  - Cumbersome: error handling, network issues
  - Hard to reuse: tied to a single programming language
  - Hard to scale: parallelization again a problem
  - Hard to generalize: declarative client tools hide complexity at the cost of flexibility
  - Hard to combine: black-box tools cannot be easily integrated as parts of bigger client-side workflows
  - Hard to audit: client-side development environments are complex and very hard to sandbox

  Not enough abstraction

- 37. Client-side Machine Learning Automation

  Problems of client-side solutions: as on slide 36 (complex, cumbersome, hard to reuse, hard to scale, hard to generalize, hard to combine, hard to audit).

  The algorithmic complexity and computing resource management problems that RESTful services had "mostly washed away" are back!

- 38. Outline: 5. Server-side Workflow Automation

- 39. Machine Learning Automation

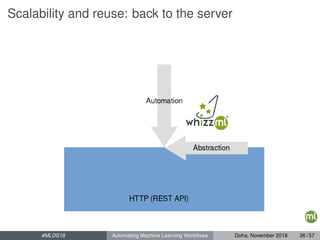

- 40. Scalability and reuse: back to the server

- 41. Complexity and reuse: domain-specific language

- 42. In a Nutshell
  1. Workflows reified as server-side, RESTful resources
  2. Domain-specific language for ML workflow automation

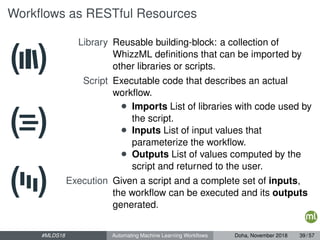

- 43. Workflows as RESTful Resources
  - Library: reusable building block; a collection of WhizzML definitions that can be imported by other libraries or scripts.
  - Script: executable code that describes an actual workflow.
    - Imports: list of libraries with code used by the script.
    - Inputs: list of input values that parameterize the workflow.
    - Outputs: list of values computed by the script and returned to the user.
  - Execution: given a script and a complete set of inputs, the workflow can be executed and its outputs generated.
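To make the script / inputs / outputs / execution vocabulary concrete, a minimal Python sketch of the rule that an execution needs a script plus a complete set of inputs. The `Script` and `execute` names and the plain-Python `body` callable are hypothetical; on the service, the body would be WhizzML code and the execution a server-side resource.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Script:
    """Hypothetical model of a workflow script resource."""
    inputs: tuple                # names of the required inputs
    outputs: tuple               # names of the values returned to the user
    body: Callable[..., dict]    # the workflow itself (WhizzML on the server)
    imports: tuple = ()          # library ids; unused in this sketch

def execute(script, given):
    """Run `script` only if every declared input has been given a value."""
    missing = [name for name in script.inputs if name not in given]
    if missing:
        raise ValueError(f"missing inputs: {missing}")
    result = script.body(**{name: given[name] for name in script.inputs})
    # Only the declared outputs are returned to the user.
    return {name: result[name] for name in script.outputs}

split = Script(
    inputs=("dataset", "rate"),
    outputs=("train", "test"),
    body=lambda dataset, rate: {
        "train": f"{dataset}:train@{rate}",
        "test": f"{dataset}:test@{rate}",
        "log": "internal detail, not an output",
    },
)
print(execute(split, {"dataset": "dataset/1", "rate": 0.8}))
```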

- 44. Metaprogramming in reflective DSLs: Scriptify

  Resources that create resources that create resources that create resources that create resources that create resources that create . . .

- 45. Different ways to create WhizzML Scripts and Libraries
  - GitHub
  - Script editor
  - Gallery
  - Other scripts
  - Scriptify

- 46. Syntactic Abstraction in WhizzML: Simple workflow

  ```
  ;; ML artifacts are first-class citizens,
  ;; we only need to talk about our domain
  (let ([train-id test-id] (create-dataset-split id 0.8)
        model-id (create-model train-id))
    (create-evaluation test-id model-id))
  ```

- 47. Language Interoperability in WhizzML

  ```python
  from bigml.api import BigML

  api = BigML()
  # choose workflow
  script = 'script/567b4b5be3f2a123a690ff56'
  # define parameters as [input name, value] pairs
  inputs = [['source', 'source/5643d345f43a234ff2310a3e']]
  # execute and wait for the execution to finish
  execution = api.create_execution(script, {'inputs': inputs})
  api.ok(execution)
  ```

- 48. Domain Specificity and Scalability: Trivial parallelization

  ```
  ;; Workflow for 1 resource
  (let ([train-id test-id] (create-dataset-split id 0.8)
        model-id (create-model train-id))
    (create-evaluation test-id model-id))
  ```

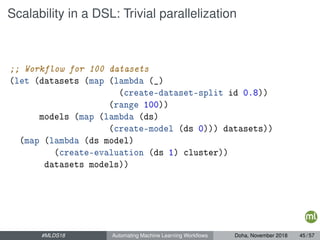

- 49. Scalability in a DSL: Trivial parallelization

  ```
  ;; Workflow for 100 datasets
  (let (datasets (map (lambda (_) (create-dataset-split id 0.8))
                      (range 100))
        models (map (lambda (ds) (create-model (ds 0)))
                    datasets))
    (map (lambda (ds model) (create-evaluation (ds 1) model))
         datasets
         models))
  ```

- 50. Outline: 6. ML Algorithms as Server-side Workflows

- 51. Advanced ML algorithms with WhizzML
  - Functional language, immutable data structures
  - Rich, very high-level libraries
  - Full tail call optimization

- 52. Advanced ML algorithms with WhizzML
  - Many ML algorithms can be thought of as workflows
  - In these algorithms, machine learning operations are the primitives:
    - Make a model
    - Make a prediction
    - Evaluate a model
  - Many such algorithms can be implemented in WhizzML:
    - Reap the advantages of BigML's infrastructure
    - Once implemented, an algorithm is language-agnostic

- 53. Examples: Best-first Feature Selection

  Objective: Select the n best features for modeling your data
  - Initialize a set S of used features as the empty set
  - Split your dataset into training and test sets
  - For i in 1, …, n:
    - For each feature f not in S, model and evaluate with feature set S + f
    - Greedily select f̂, the feature with the best performance, and set S ← S + f̂

  https://github.com/whizzml/examples/tree/master/best-first
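The same greedy loop in plain Python, to make the control flow explicit. `score(features)` is a hypothetical stand-in for "model and evaluate with this feature set" (for instance, the average phi of an evaluation on the test set). The toy scoring function below is invented for illustration.

```python
def best_first(features, n, score):
    """Greedily add the feature that most improves score(selected + [f])."""
    selected, remaining = [], list(features)
    while len(selected) < n and remaining:
        # Model and evaluate once per candidate feature.
        scores = {f: score(selected + [f]) for f in remaining}
        best = max(remaining, key=scores.__getitem__)  # first wins on ties
        selected.append(best)
        remaining.remove(best)
    return selected

# Toy score: each feature has a fixed usefulness; "c" is redundant with "a".
usefulness = {"a": 0.5, "b": 0.3, "c": 0.45, "d": 0.1}

def toy_score(feature_set):
    total = sum(usefulness[f] for f in feature_set)
    if "a" in feature_set and "c" in feature_set:
        total -= 0.45
    return total

print(best_first(usefulness, 3, toy_score))
```

Although "c" alone scores better than "b" or "d", the greedy search skips it once "a" is selected, because adding it brings no improvement on top of "a".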

- 54. Examples: Best-first Feature Selection

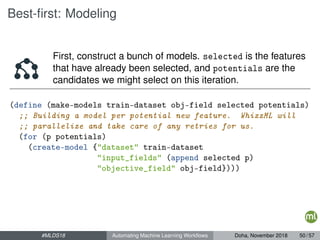

- 55. Best-first: Modeling

  First, construct a bunch of models, one per candidate feature. `selected` holds the features that have already been selected, and `potentials` holds the candidates we might select on this iteration.

  ```
  (define (make-models train-dataset obj-field selected potentials)
    ;; Building a model per potential new feature. WhizzML will
    ;; parallelize and take care of any retries for us.
    (for (p potentials)
      (create-model {"dataset" train-dataset
                     "input_fields" (append selected p)
                     "objective_field" obj-field})))
  ```

- 56. Best-first: Evaluation

  Now, conduct the evaluations. `potentials` is again the list of potential features to add, and `model-ids` is the list of corresponding model IDs created in the last step. The feature whose model has the highest average phi is selected.

  ```
  (define (get-average-phi ev-id)
    (get (fetch (wait ev-id)) ["result" "model" "average_phi"]))

  (define (select-feature test-dataset-id potentials model-ids)
    (let (ids (for (model-id model-ids)
                (create-evaluation test-dataset-id model-id))
          phis (for (ev-id ids) (get-average-phi ev-id))
          ;; e.g. {0.8 "000000" 0.7 "000001"}
          phi->field (make-map phis potentials))
      (get phi->field (apply max phis))))
  ```

- 57. Main Loop

  Set up your objective ID, inputs, and training and test datasets. Initialize the selected features to the empty set and iteratively call the previous two functions.

  ```
  (define (select-features dataset-id nfeatures)
    (let (obj-id (dataset-get-objective-id dataset-id)
          input-ids (default-inputs dataset-id obj-id)
          [train-id test-id] (create-dataset-split dataset-id 0.5))
      (loop (selected []
             potentials input-ids)
        (if (or (>= (count selected) nfeatures)
                (empty? potentials))
            selected
            (let (models (make-models train-id obj-id selected potentials)
                  feature (select-feature test-id potentials models))
              (recur (append selected feature)
                     (remove feature potentials)))))))
  ```

- 58. Example: Stacked Generalization

  Objective: Improve predictions by modeling the output scores of multiple trained models.
  - Create a training and a holdout set
  - Create n different models on the training set (with some difference among them; e.g., single-tree vs. ensemble vs. logistic regression)
  - Make predictions from those models on the holdout set
  - Train a model to predict the class based on the other models' predictions
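A compact sketch of these four steps on a toy binary problem, in plain Python. The base learners are single-feature threshold stumps and the meta-model is a lookup table from the tuple of base predictions to the majority holdout label; both are deliberately simple stand-ins chosen for illustration, not the model types listed above, and all data is synthetic.

```python
from collections import Counter, defaultdict

def fit_stump(rows, labels, feature):
    """Base learner: predict 1 when row[feature] exceeds a learned threshold."""
    best_acc, threshold = -1, None
    for t in sorted({r[feature] for r in rows}):
        acc = sum((r[feature] > t) == y for r, y in zip(rows, labels))
        if acc > best_acc:               # keep the first best threshold
            best_acc, threshold = acc, t
    return lambda r: int(r[feature] > threshold)

def fit_meta(base_preds, labels):
    """Meta-model: majority label for each tuple of base predictions."""
    votes = defaultdict(Counter)
    for preds, y in zip(base_preds, labels):
        votes[preds][y] += 1
    table = {preds: c.most_common(1)[0][0] for preds, c in votes.items()}
    fallback = Counter(labels).most_common(1)[0][0]
    return lambda preds: table.get(preds, fallback)

def stack(train, holdout, features):
    """Stacked generalization with one stump per feature as base models."""
    rows, labels = zip(*train)
    # n different models on the training set (here: one stump per feature).
    bases = [fit_stump(rows, labels, f) for f in features]
    # Their predictions on the holdout set...
    h_rows, h_labels = zip(*holdout)
    h_preds = [tuple(m(r) for m in bases) for r in h_rows]
    # ...become the inputs of a model that predicts the class.
    meta = fit_meta(h_preds, h_labels)
    return lambda r: meta(tuple(m(r) for m in bases))

def grid(values):
    """Synthetic data: all (x0, x1) pairs, labelled 1 iff both exceed 0.5."""
    return [((a, b), int(a > 0.5 and b > 0.5)) for a in values for b in values]

train = grid([0.1, 0.3, 0.7, 0.9])
holdout = grid([0.15, 0.25, 0.75, 0.85])
test = grid([0.05, 0.2, 0.8, 0.95])

model = stack(train, holdout, features=[0, 1])
print(sum(model(r) == y for r, y in test) / len(test))
```

On this toy data each stump alone is right on 75% of the test rows, while the stacked model learns that both stumps must agree on 1 and classifies every test row correctly.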

- 59. Impromptu example: eval by size

  ```
  (define (model-range dataset from to)
    (create-model dataset {"range" [from to]}))

  (define (eval-range dataset model from to)
    (let (ev-id (create-evaluation dataset model {"range" [from to]}))
      [ev-id ((fetch (wait ev-id)) ["result" "model" "average_phi"])]))

  (define (size-evaluations dataset-id steps)
    (let (ds (fetch dataset-id)
          rows (ds "rows")
          step-rows (div rows steps)
          ;; training sizes: step-rows, 2 * step-rows, ..., (steps - 2) * step-rows
          tos (for (step (range (- steps 2)))
                (* (+ 1 step) step-rows))
          ;; train on rows 1..to
          models (for (to tos) (model-range dataset-id 1 to))
          ;; evaluate on the remaining rows
          evals (map (lambda (to model)
                       (eval-range dataset-id model (+ to 1) rows))
                     tos models))
      (map list tos evals)))
  ```
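The row arithmetic behind the eval-by-size example, assuming the intent is a learning curve: train on the first `to` rows and evaluate on the remaining ones, with `to` growing in steps of `rows / steps`. A Python sketch of just the ranges (1-based and inclusive, like the `range` argument above); `size_ranges` is a hypothetical helper.

```python
def size_ranges(rows, steps):
    """Return (train_range, eval_range) pairs of 1-based inclusive row ranges."""
    step_rows = rows // steps                 # integer division, like div
    tos = [(step + 1) * step_rows for step in range(steps - 2)]
    return [((1, to), (to + 1, rows)) for to in tos]

for train_range, eval_range in size_ranges(1000, 5):
    print(train_range, eval_range)
```

Because `tos` stops at `(steps - 2) * step_rows`, the largest training prefix stays two steps short of the full dataset, so every evaluation keeps at least two steps' worth of rows.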

- 60. Example: Stacked Generalization

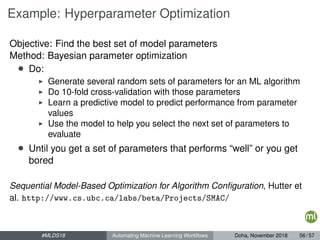

- 61. Example: Hyperparameter Optimization

  Objective: Find the best set of model parameters

  Method: Bayesian parameter optimization
  - Do:
    - Generate several random sets of parameters for an ML algorithm
    - Do 10-fold cross-validation with those parameters
    - Learn a predictive model to predict performance from parameter values
    - Use the model to help you select the next set of parameters to evaluate
  - Until you get a set of parameters that performs "well" or you get bored

  Sequential Model-Based Optimization for General Algorithm Configuration, Hutter et al. (http://www.cs.ubc.ca/labs/beta/Projects/SMAC/)
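A toy version of this loop: evaluate a few random configurations, then repeatedly let a cheap surrogate model rank fresh random candidates and spend a real evaluation only on the most promising one. This is a simplified sketch, not SMAC: the surrogate is a nearest-neighbour lookup (SMAC uses random forests), the two parameters are hypothetical, and `objective` is a made-up function standing in for 10-fold cross-validation.

```python
import random

def objective(params):
    """Hypothetical stand-in for a cross-validated score (higher is better)."""
    depth, rate = params
    return -((depth - 7) ** 2) / 50 - (rate - 0.3) ** 2

def sample(rng):
    """Draw a random configuration: (max depth, learning rate)."""
    return (rng.randint(1, 20), rng.uniform(0.01, 1.0))

def surrogate(history, params):
    """Predict a score as that of the nearest already-evaluated configuration."""
    def dist(a, b):
        return ((a[0] - b[0]) / 20) ** 2 + (a[1] - b[1]) ** 2
    nearest_params, nearest_score = min(history, key=lambda h: dist(h[0], params))
    return nearest_score

def smbo(n_init=5, n_iter=30, n_candidates=50, seed=0):
    rng = random.Random(seed)
    # Initial design: a few random configurations, truly evaluated.
    history = [(p, objective(p)) for p in (sample(rng) for _ in range(n_init))]
    for _ in range(n_iter):
        # Rank cheap random proposals with the surrogate...
        candidates = [sample(rng) for _ in range(n_candidates)]
        chosen = max(candidates, key=lambda c: surrogate(history, c))
        # ...and spend a real (expensive) evaluation only on the best one.
        history.append((chosen, objective(chosen)))
    return max(history, key=lambda h: h[1])

best_params, best_score = smbo()
print(best_params, round(best_score, 4))
```

This surrogate only exploits what it has already seen; practical SMBO balances exploitation and exploration through an acquisition function such as expected improvement.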

- 62. Thank you!
