MongoDB 3.2 - Analytics

- 1. Massimo Brignoli Principal Solutions Architect [email protected] @massimobrignoli Analytics in MongoDB

- 2. Agenda • Analytics in MongoDB? • Aggregation Framework • Aggregation Pipeline Stages • Aggregation Framework in Action • Joins in MongoDB 3.2 • Integrations • Analytical Architectures

- 3. Relational Expressive Query Language & Secondary Indexes Strong Consistency Enterprise Management & Integrations

- 4. The World Has Changed Volume Velocity Variety Iterative Agile Short Cycles Always On Secure Global Open-Source Cloud Commodity Data Time Risk Cost

- 5. Scalability & Performance Always On, Global Deployments FlexibilityExpressive Query Language & Secondary Indexes Strong Consistency Enterprise Management & Integrations NoSQL

- 6. Nexus Architecture Scalability & Performance Always On, Global Deployments FlexibilityExpressive Query Language & Secondary Indexes Strong Consistency Enterprise Management & Integrations

- 7. Some Common MongoDB Use Cases Single View Internet of Things Mobile Real-Time Analytics Catalog Personalization Content Management

- 10. Analytics in MongoDB? Create Read Update Delete Analytics ? Group Count Derive Values Filter Average Sort

- 11. Analytics on MongoDB Data • Extract data from MongoDB and perform complex analytics with Hadoop – Batch rather than real-time – Extra nodes to manage • Direct access to MongoDB from SPARK • MongoDB BI Connector – Direct SQL Access from BI Tools • MongoDB aggregation pipeline – Real-time – Live, operational data set – Narrower feature set Hadoop Connector MapReduce & HDFS SQL Connector

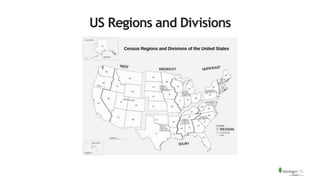

- 12. For Example: US Census Data • Census data from 1990, 2000, 2010 • Question: – Which US Division has the fastest growing population density? – We only want to include data states with more than 1M people – We only want to include divisions larger than 100K square miles – Division = a group of US States – Population density = Area of division/# of people – Data is provided at the state level

- 13. US Regions and Divisions

- 14. How would we solve this in SQL? • SELECT GROUP BY HAVING

- 17. What is an Aggregation Pipeline? • A Series of Document Transformations – Executed in stages – Original input is a collection – Output as a cursor or a collection • Rich Library of Functions – Filter, compute, group, and summarize data – Output of one stage sent to input of next – Operations executed in sequential order

- 18. Aggregation Pipeline {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds}

- 21. Aggregation Pipeline $match $project {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {} {★ds} {★ds} {★ds} {=d+s}

- 22. Aggregation Pipeline $match $project {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {} {★ds} {★ds} {★ds} {★} {★} {★} {=d+s}

- 23. Aggregation Pipeline $match $project $lookup {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {} {★ds} {★ds} {★ds} {★} {★} {★} {★} {★} {★} {★} {=d+s}

- 24. Aggregation Pipeline $match $project $lookup {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {} {★ds} {★ds} {★ds} {★} {★} {★} {★} {★} {★} {★} {=d+s} {★[]} {★[]} {★}

- 25. Aggregation Pipeline $match $project $lookup $group {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {★ds} {} {★ds} {★ds} {★ds} {★} {★} {★} {★} {★} {★} {★} {=d+s} { Σ λ σ} { Σ λ σ} { Σ λ σ} {★[]} {★[]} {★}

- 26. Aggregation Pipeline Stages • $match Filter documents • $geoNear Geospherical query • $project Reshape documents • $lookup New – Left-outer equi joins • $unwind Expand documents • $group Summarize documents • $sample New – Randomly selects a subset of documents • $sort Order documents • $skip Jump over a number of documents • $limit Limit number of documents • $redact Restrict documents • $out Sends results to a new collection

- 27. Aggregation Framework in Action (let’s play with the census data)

- 28. MongoDB State Collection • Document For Each State • Name • Region • Division • Census Data For 1990, 2000, 2010 – Population – Housing Units – Occupied Housing Units • Census Data is an array with three subdocuments

- 29. Document Model { "_id" : ObjectId("54e23c7b28099359f5661525"), "name" : "California", "region" : "West", "data" : [ { "totalPop" : 33871648, "totalHouse" : 12214549, "occHouse" : 11502870, "year" : 2000}, { "totalPop" : 37253956, "totalHouse" : 13680081, "occHouse" : 12577498, "year" : 2010}, { "totalPop" : 29760021, "totalHouse" : 11182882, "occHouse" : 29008161, "year" : 1990} ], … }

- 30. Total US Area db.cData.aggregate([ {"$group" : {"_id" : null, "totalArea" : {$sum : "$areaM"}, "avgArea" : {$avg : "$areaM"}}}])

- 31. $group • Group documents by value – Field reference, object, constant – Other output fields are computed • $max, $min, $avg, $sum • $addToSet, $push • $first, $last – Processes all data in memory by default

- 32. Area By Region db.cData.aggregate([{ "$group" : { "_id" : "$region", "totalArea" : {$sum : "$areaM"}, "avgArea" : {$avg : "$areaM"}, "numStates" : {$sum : 1}, "states" : {$push : "$name"}}}])

- 33. Calculating Average State Area By Region {state: ”New York", areaM: 218, region: “North East" } {state: ”New Jersey", areaM: 90, region: “North East” } {state: “California", area: 300, region: “West" } { $group: { _id: "$region", avgAreaM: {$avg: ”$areaM" } }} { _id: ”North East", avgAreaM: 154} {_id: “West", avgAreaM: 300}

- 34. Calculating Total Area and State Count {state: ”New York", areaM: 218, region: “North East" } {state: ”New Jersey", areaM: 90, region: “North East” } {state: “California", area: 300, region: “West" } { $group: { _id: "$region", totArea: {$sum: ”$areaM" }, sCount : {$sum : 1} }} { _id: ”North East", totArea: 308 sCount: 2} { _id: “West", totArea: 300, sCount: 1}

- 35. Total US Population By Year db.cData.aggregate([ {$unwind : "$data"}, {$group : { "_id" : "$data.year", "totalPop" : {$sum :"$data.totalPop"}}}, {$sort : {"totalPop" : 1}} ])

- 36. $unwind • Operate on an array field – Create documents from array elements • Array replaced by element value • Missing/empty fields → no output • Non-array fields → error – Pipe to $group to aggregate

- 37. $unwind { state: ”New York", census: [1990, 2000, 2010]} { state: ”New Jersey", census: [1990, 2000]} { state: “California", census: [1980, 1990, 2000, 2010]} { state: ”Delaware", census: [1990, 2000]} { $unwind: $census } { state: “New York”, census: 1990} { state: “New York”, census: 2000} { state: “New York”, census: 2010} { state: “New Jersey”, census: 1990} { state: “New Jersey”, census: 2000}

- 38. Southern State Population By Year db.cData.aggregate([ {$match : {"region" : "South"}}, {$unwind : "$data"}, {$group : { "_id" : "$data.year", "totalPop" : {"$sum" :"$data.totalPop"}}} ])

- 39. $match • Filter documents – Uses existing query syntax, same as .find()

- 40. $match {state: ”New York", areaM: 218, region: “North East" } {state: ”Oregon", areaM: 245, region: “West” } {state: “California", area: 300, region: “West" } {state: ”Oregon", areaM: 245, region: “West”} {state: “California", area: 300, region: “West"} { $match: { “region” : “West” } }

- 41. Population Delta By State from 1990 to 2010 db.cData.aggregate([ {$unwind : "$data"}, {$sort : {"data.year" : 1}}, {$group : { "_id" : "$name", "pop1990" : {"$first" : "$data.totalPop"}, "pop2010" : {"$last" : "$data.totalPop"}}}, {$project : { "_id" : 0, "name" : "$_id", "delta" : {"$subtract" : ["$pop2010", "$pop1990"]}, "pop1990" : 1, "pop2010" : 1} }])

- 42. $sort, $limit, $skip • Sort documents by one or more fields – Same order syntax as cursors – Waits for earlier pipeline operator to return – In-memory unless early and indexed • Limit and skip follow cursor behavior

- 43. $first, $last • Collection operations like $push and $addToSet • Must be used in $group • $first and $last determined by document order • Typically used with $sort to ensure ordering is known

- 44. $project • Reshape Documents – Include, exclude or rename fields – Inject computed fields – Create sub-document fields

- 45. Including and Excluding Fields { "_id" : "Virginia”, "pop1990" : 453588, "pop2010" : 3725789 } { "_id" : "South Dakota", "pop1990" : 453588, "pop2010" : 3725789 } { $project: { “_id” : 0, “pop1990” : 1, “pop2010” : 1} } {"pop1990" : 453588, "pop2010" : 3725789} {"pop1990" : 453588, "pop2010" : 3725789}

- 46. Renaming and Computing Fields { $project: { “_id” : 0, “pop1990” : 0, “pop2010” : 0, “name” : “$_id”, "delta" : {"$subtract" : ["$pop2010", "$pop1990"]}} } { "_id" : "Virginia”, "pop1990" : 6187358, "pop2010" : 8001024 } { "_id" : "South Dakota", "pop1990" : 696004, "pop2010" : 814180 } {”name" : “Virginia”, ”delta" : 1813666} {“name" : “South Dakota”, “delta" : 118176}

- 47. Compare number of people living within 500KM of Memphis, TN in 1990, 2000, 2010

- 48. Compare number of people living within 500KM of Memphis, TN in 1990, 2000, 2010 db.cData.aggregate([ {$geoNear : { "near" : {"type" : "Point", "coordinates" : [90, 35]}, "distanceField" : "dist.calculated", "maxDistance" : 500000, "includeLocs" : "dist.location", "spherical": true }}, {$unwind : "$data"}, {$group : { "_id" : "$data.year", "totalPop" : {"$sum" : "$data.totalPop"}, "states" : {"$addToSet" : "$name"}}}, {$sort : {"_id" : 1}} ])

- 49. $geoNear • Order/Filter Documents by Location – Requires a geospatial index – Output includes physical distance – Must be first aggregation stage

- 50. $geoNear {"_id" : "Virginia”, "pop1990" : 6187358, "pop2010" : 8001024, “center” : {“type” : “Point”, “coordinates” : [78.6, 37.5]}} { "_id" : ”Tennessee", "pop1990" : 4877185, "pop2010" : 6346105, “center” : {“type” : “Point”, “coordinates” : [86.6, 37.8]}} {"_id" : ”Tennessee", "pop1990" : 4877185, "pop2010" : 6346105, “center” : {“type” : “Point”, “coordinates” : [86.6, 37.8]}} {$geoNear : { "near”: {"type”: "Point", "coordinates”: [90, 35]}, maxDistance : 500000, spherical : true }}

- 51. What if I want to save the results to a collection? db.cData.aggregate([ {$geoNear : { "near" : {"type" : "Point", "coordinates" : [90, 35]}, “distanceField” : "dist.calculated", “maxDistance” : 500000, “includeLocs” : "dist.location", “spherical” : true }}, {$unwind : "$data"}, {$group : { "_id" : "$data.year", "totalPop" : {"$sum" : "$data.totalPop"}, "states" : {"$addToSet" : "$name"}}}, {$sort : {"_id" : 1}}, {$out : “peopleNearMemphis”} ])

- 52. $out db.cData.aggregate([<pipeline stages>, {“$out”:“resultsCollection”}]) • Save aggregation results to a new collection • New aggregation uses: • Transform documents - ETL

- 53. Back To The Original Question • Which US Division has the fastest growing population density? – We only want to include data states with more than 1M people – We only want to include divisions larger than 100K square miles

- 54. Division with Fastest Growing Pop Density db.cData.aggregate( [{$match : {"data.totalPop" : {"$gt" : 1000000}}}, {$unwind : "$data"}, {$sort : {"data.year" : 1}}, {$group : {"_id" : "$name", "pop1990" : {"$first" : "$data.totalPop"}, "pop2010" : {"$last" : "$data.totalPop"}, "areaM" : {"$first" : "$areaM"}, "division" : {"$first" : "$division"}}}, {$group : { "_id" : "$division", "totalPop1990" : {"$sum" : "$pop1990"}, "totalPop2010" : {"$sum" : "$pop2010"}, "totalAreaM" : {"$sum" : "$areaM"}}}, {$match : {"totalAreaM" : {"$gt" : 100000}}}, {$project : {"_id" : 0, "division" : "$_id", "density1990" : {"$divide" : ["$totalPop1990", "$totalAreaM"]}, "density2010" : {"$divide" : ["$totalPop2010", "$totalAreaM"]}, "denDelta" : {"$subtract" : [{"$divide" : ["$totalPop2010", "$totalAreaM"]}, {"$divide" : ["$totalPop1990","$totalAreaM"]}]}, "totalAreaM" : 1, "totalPop1990" : 1, "totalPop2010" : 1}}, {$sort : {"denDelta" : -1}}])

- 56. Aggregate options db.cData.aggregate([<pipeline stages>], {‘explain’ : false 'allowDiskUse' : true, 'cursor' : {'batchSize' : 5}}) • explain – similar to find().explain() • allowDiskUse – enable use of disk to store intermediate results • cursor – specify the size of the initial result

- 58. Sharding • Workload split between shards – Shards execute pipeline up to a point – Primary shard merges cursors and continues processing* – Use explain to analyze pipeline split – Early $match can exclude shards – Potential CPU and memory implications for primary shard host *Prior to v2.6 second stage pipeline processing was done by mongos

- 59. MongoDB 3.2: Joins and other improvements

- 60. Existing Alternatives to Joins { "_id": 10000, "items": [ { "productName": "laptop", "unitPrice": 1000, "weight": 1.2, "remainingStock": 23}, { "productName": "mouse", "unitPrice": 20, "weight": 0.2, "remainingStock": 276}], … } • Option 1: Include all data for an order in the same document – Fast reads • One find delivers all the required data – Captures full description at the time of the event – Consumes extra space • Details of each product stored in many order documents – Complex to maintain • A change to any product attribute must be propagated to all affected orders orders

- 61. The Winner? • In general, Option 1 wins – Performance and containment of everything in same place beats space efficiency of normalization – There are exceptions • e.g. Comments in a blog post -> unbounded size • However, analytics benefit from combining data from multiple collections – Keep listening...

- 62. Existing Alternatives to Joins { "_id": 10000, "items": [ 12345, 54321 ], ... } • Option 2: Order document references product documents – Slower reads • Multiple trips to the database – Space efficient • Product details stored once – Lose point-in-time snapshot of full record – Extra application logic • Must iterate over product IDs in the order document and find the product documents • RDBMS would automate through a JOIN orders { "_id": 12345, "productName": "laptop", "unitPrice": 1000, "weight": 1.2, "remainingStock": 23 } { "_id": 54321, "productName": "mouse", "unitPrice": 20, "weight": 0.2, "remainingStock": 276 } products

- 63. $lookup • Left-outer join – Includes all documents from the left collection – For each document in the left collection, find the matching documents from the right collection and embed them Left Collection Right Collection

- 64. $lookup db.leftCollection.aggregate([{ $lookup: { from: “rightCollection”, localField: “leftVal”, foreignField: “rightVal”, as: “embeddedData” } }]) Left Collection Right Collection

- 65. Worked Example – Data Set db.postcodes.findOne() { "_id":ObjectId("5600521e50fa77da54d fc0d2"), "postcode": "SL6 0AA", "location": { "type": "Point", "coordinates": [ 51.525605, -0.700974 ]}} db.homeSales.findOne() { "_id":ObjectId("56005dd980c3678b19792b7f"), "amount": 9000, "date": ISODate("1996-09-19T00:00:00Z"), "address": { "nameOrNumber": 25, "street": "NORFOLK PARK COTTAGES", "town": "MAIDENHEAD", "county": "WINDSOR AND MAIDENHEAD", "postcode": "SL6 7DR" } }

- 66. Reduce Data Set First db.homeSales.aggregate([ {$match: { amount: {$gte:3000000}} } ]) … { "_id": ObjectId("56005dda80c3678b19799e52"), "amount": 3000000, "date": ISODate("2012-04-19T00:00:00Z"), "address": { "nameOrNumber": "TEMPLE FERRY PLACE", "street": "MILL LANE", "town": "MAIDENHEAD", "county": "WINDSOR AND MAIDENHEAD", "postcode": "SL6 5ND" } },…

- 67. Join (left-outer-equi) Results With Second Collection db.homeSales.aggregate([ {$match: { amount: {$gte:3000000}} }, {$lookup: { from: "postcodes", localField: "address.postcode", foreignField: "postcode", as: "postcode_docs"} } ]) ... "county": "WINDSOR AND MAIDENHEAD", "postcode": "SL6 5ND" }, "postcode_docs": [ { "_id": ObjectId("560053e280c3678b1978b293"), "postcode": "SL6 5ND", "location": { "type": "Point", "coordinates": [ 51.549516, -0.80702 ] }}]}, ...

- 68. Refactor Each Resulting Document ...}, {$project: { _id: 0, saleDate: ”$date", price: "$amount", address: 1, location: {$arrayElemAt: ["$postcode_docs.location", 0]}} ]) { "address": { "nameOrNumber": "TEMPLE FERRY PLACE", "street": "MILL LANE", "town": "MAIDENHEAD", "county": "WINDSOR AND MAIDENHEAD", "postcode": "SL6 5ND" }, "saleDate": ISODate("2012-04-19T00:00:00Z"), "price": 3000000, "location": { "type": "Point", "coordinates": [ 51.549516, -0.80702 ]}},...

- 69. Sort on Sale Price & Write to Collection ...}, {$sort: {price: -1}}, {$out: "hotSpots"} ]) …{"address": { "nameOrNumber": "2 - 3", "street": "THE SWITCHBACK", "town": "MAIDENHEAD", "county": "WINDSOR AND MAIDENHEAD", "postcode": "SL6 7RJ" }, "saleDate": ISODate("1999-03-15T00:00:00Z"), "price": 5425000, "location": { "type": "Point", "coordinates": [ 51.536848, -0.735835 ]}},...

- 70. Aggregated Statistics db.homeSales.aggregate([ {$group: { _id: {$year: "$date"}, higestPrice: {$max: "$amount"}, lowestPrice: {$min: "$amount"}, averagePrice: {$avg: "$amount"}, amountStdDev: {$stdDevPop: "$amount"} }} ]) ... { "_id": 1995, "higestPrice": 1000000, "lowestPrice": 12000, "averagePrice": 114059.35206869633, "amountStdDev": 81540.50490801703 }, { "_id": 1996, "higestPrice": 975000, "lowestPrice": 9000, "averagePrice": 118862, "amountStdDev": 79871.07569783277 }, ...

- 71. Clean Up Output ..., {$project: { _id: 0, year: "$_id", higestPrice: 1, lowestPrice: 1, averagePrice: {$trunc: "$averagePrice"}, priceStdDev: {$trunc: "$amountStdDev"} } } ]) ... { "higestPrice": 1000000, "lowestPrice": 12000, "averagePrice": 114059, "year": 1995, "priceStdDev": 81540 }, { "higestPrice": 2200000, "lowestPrice": 10500, "averagePrice": 307372, "year": 2004, "priceStdDev": 199643 },...

- 72. Integrations

- 73. Hadoop Connector

- 74. Input data Hadoop Cluster -or- .BSON Mongo-Hadoop Connector • Turn MongoDB into a Hadoop-enabled filesystem: use as the input or output for Hadoop • Works with MongoDB backup files (.bson)

- 75. Benefits and Features • Takes advantage of full multi-core parallelism to process data in Mongo • Full integration with Hadoop and JVM ecosystems • Can be used with Amazon Elastic MapReduce • Can read and write backup files from local filesystem, HDFS, or S3

- 76. Benefits and Features • Vanilla Java MapReduce • If you don’t want to use Java, support for Hadoop Streaming. • Write MapReduce code in

- 77. Benefits and Features • Support for Pig – high-level scripting language for data analysis and building map/reduce workflows • Support for Hive – SQL-like language for ad-hoc queries + analysis of data sets on Hadoop- compatible file systems

- 78. How It Works • Adapter examines the MongoDB input collection and calculates a set of splits from the data • Each split gets assigned to a node in Hadoop cluster • In parallel, Hadoop nodes pull data for splits from MongoDB (or BSON) and process them locally • Hadoop merges results and streams output back to MongoDB or BSON

- 79. BI Connector

- 80. MongoDB Connector for BI Visualize and explore multi-dimensional documents using SQL-based BI tools. The connector does the following: • Provides the BI tool with the schema of the MongoDB collection to be visualized • Translates SQL statements issued by the BI tool into equivalent MongoDB queries that are sent to MongoDB for processing • Converts the results into the tabular format expected by the BI tool, which can then visualize the data based on user requirements

- 81. Location & Flow of Data MongoDB BI Connector Mapping meta-data Application data {name: “Andrew”, address: {street:… }} DocumentTableAnalytics & visualization

- 82. 82 Defining Data Mapping mongodrdl --host 192.168.1.94 --port 27017 -d myDbName -o myDrdlFile.drdl mongobischema import myCollectionName myDrdlFile.drdl DRDL mongodrdl mongobischema PostgreSQL MongoDB- specific Foreign Data Wrapper

- 83. 83 Optionally Manually Edit DRDL File • Redact attributes • Use more appropriate types (sampling can get it wrong) • Rename tables (v1.1+) • Rename columns (v1.1+) • Build new views using MongoDB Aggregation Framework • e.g., $lookup to join 2 tables - table: homesales collection: homeSales pipeline: [] columns: - name: _id mongotype: bson.ObjectId sqlname: _id sqltype: varchar - name: address.county mongotype: string sqlname: address_county sqltype: varchar - name: address.nameOrNumber mongotype: int sqlname: address_nameornumber sqltype: varchar

- 84. Summary

- 85. Analytics in MongoDB? Create Read Update Deletet Analytics ? Group Count Derive Values Filter Average Sort YES!

- 86. Framework Use Cases • Complex aggregation queries • Ad-hoc reporting • Real-time analytics • Visualizing and reshaping data

- 87. Questions?

![Aggregation Pipeline

$match $project $lookup

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds} {}

{★ds}

{★ds}

{★ds}

{★}

{★}

{★}

{★}

{★}

{★}

{★}

{=d+s}

{★[]}

{★[]}

{★}](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-24-320.jpg)

![Aggregation Pipeline

$match $project $lookup $group

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds}

{★ds} {}

{★ds}

{★ds}

{★ds}

{★}

{★}

{★}

{★}

{★}

{★}

{★}

{=d+s}

{

Σ λ σ}

{

Σ λ σ}

{

Σ λ σ}

{★[]}

{★[]}

{★}](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-25-320.jpg)

![Document Model

{ "_id" : ObjectId("54e23c7b28099359f5661525"),

"name" : "California",

"region" : "West",

"data" : [

{ "totalPop" : 33871648,

"totalHouse" : 12214549,

"occHouse" : 11502870,

"year" : 2000},

{ "totalPop" : 37253956,

"totalHouse" : 13680081,

"occHouse" : 12577498,

"year" : 2010},

{ "totalPop" : 29760021,

"totalHouse" : 11182882,

"occHouse" : 29008161,

"year" : 1990}

],

…

}](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-29-320.jpg)

![Total US Area

db.cData.aggregate([

{"$group" :

{"_id" : null,

"totalArea" : {$sum : "$areaM"},

"avgArea" : {$avg : "$areaM"}}}])](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-30-320.jpg)

![Area By Region

db.cData.aggregate([{

"$group" : {

"_id" : "$region",

"totalArea" : {$sum : "$areaM"},

"avgArea" : {$avg : "$areaM"},

"numStates" : {$sum : 1},

"states" : {$push : "$name"}}}])](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-32-320.jpg)

![Total US Population By Year

db.cData.aggregate([

{$unwind : "$data"},

{$group : {

"_id" : "$data.year",

"totalPop" : {$sum :"$data.totalPop"}}},

{$sort : {"totalPop" : 1}}

])](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-35-320.jpg)

![$unwind

{ state: ”New York",

census: [1990, 2000,

2010]}

{ state: ”New Jersey",

census: [1990, 2000]}

{ state: “California",

census: [1980, 1990, 2000,

2010]}

{ state: ”Delaware",

census: [1990, 2000]}

{ $unwind: $census }

{ state: “New York”, census: 1990}

{ state: “New York”, census: 2000}

{ state: “New York”, census: 2010}

{ state: “New Jersey”, census: 1990}

{ state: “New Jersey”, census: 2000}](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-37-320.jpg)

![Southern State Population By Year

db.cData.aggregate([

{$match : {"region" : "South"}},

{$unwind : "$data"},

{$group : { "_id" : "$data.year",

"totalPop" : {"$sum" :"$data.totalPop"}}}

])](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-38-320.jpg)

![Population Delta By State from 1990 to 2010

db.cData.aggregate([

{$unwind : "$data"},

{$sort : {"data.year" : 1}},

{$group : { "_id" : "$name",

"pop1990" : {"$first" : "$data.totalPop"},

"pop2010" : {"$last" : "$data.totalPop"}}},

{$project : { "_id" : 0,

"name" : "$_id",

"delta" : {"$subtract" : ["$pop2010", "$pop1990"]},

"pop1990" : 1,

"pop2010" : 1}

}])](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-41-320.jpg)

![Renaming and Computing Fields

{ $project:

{ “_id” : 0,

“pop1990” : 0,

“pop2010” : 0,

“name” : “$_id”,

"delta" :

{"$subtract" :

["$pop2010",

"$pop1990"]}}

}

{

"_id" : "Virginia”,

"pop1990" : 6187358,

"pop2010" : 8001024

}

{

"_id" : "South Dakota",

"pop1990" : 696004,

"pop2010" : 814180

} {”name" : “Virginia”,

”delta" : 1813666}

{“name" : “South Dakota”,

“delta" : 118176}](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-46-320.jpg)

![Compare number of people living within 500KM of

Memphis, TN in 1990, 2000, 2010

db.cData.aggregate([

{$geoNear : { "near" : {"type" : "Point", "coordinates" : [90, 35]},

"distanceField" : "dist.calculated",

"maxDistance" : 500000,

"includeLocs" : "dist.location",

"spherical": true }},

{$unwind : "$data"},

{$group : { "_id" : "$data.year",

"totalPop" : {"$sum" : "$data.totalPop"},

"states" : {"$addToSet" : "$name"}}},

{$sort : {"_id" : 1}}

])](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-48-320.jpg)

![$geoNear

{"_id" : "Virginia”,

"pop1990" : 6187358,

"pop2010" : 8001024,

“center” :

{“type” : “Point”,

“coordinates” :

[78.6, 37.5]}}

{ "_id" : ”Tennessee",

"pop1990" : 4877185,

"pop2010" : 6346105,

“center” :

{“type” : “Point”,

“coordinates” :

[86.6, 37.8]}}

{"_id" : ”Tennessee",

"pop1990" : 4877185,

"pop2010" : 6346105,

“center” :

{“type” : “Point”,

“coordinates” :

[86.6, 37.8]}}

{$geoNear : {

"near”: {"type”: "Point",

"coordinates”:

[90, 35]},

maxDistance : 500000,

spherical : true }}](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-50-320.jpg)

![What if I want to save the results to a collection?

db.cData.aggregate([

{$geoNear : { "near" : {"type" : "Point", "coordinates" : [90, 35]},

“distanceField” : "dist.calculated",

“maxDistance” : 500000,

“includeLocs” : "dist.location",

“spherical” : true }},

{$unwind : "$data"},

{$group : { "_id" : "$data.year",

"totalPop" : {"$sum" : "$data.totalPop"},

"states" : {"$addToSet" : "$name"}}},

{$sort : {"_id" : 1}},

{$out : “peopleNearMemphis”}

])](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-51-320.jpg)

![$out

db.cData.aggregate([<pipeline stages>,

{“$out”:“resultsCollection”}])

• Save aggregation results to a new collection

• New aggregation uses:

• Transform documents - ETL](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-52-320.jpg)

![Division with Fastest Growing Pop Density

db.cData.aggregate(

[{$match : {"data.totalPop" : {"$gt" : 1000000}}},

{$unwind : "$data"},

{$sort : {"data.year" : 1}},

{$group : {"_id" : "$name",

"pop1990" : {"$first" : "$data.totalPop"},

"pop2010" : {"$last" : "$data.totalPop"},

"areaM" : {"$first" : "$areaM"},

"division" : {"$first" : "$division"}}},

{$group : { "_id" : "$division",

"totalPop1990" : {"$sum" : "$pop1990"},

"totalPop2010" : {"$sum" : "$pop2010"},

"totalAreaM" : {"$sum" : "$areaM"}}},

{$match : {"totalAreaM" : {"$gt" : 100000}}},

{$project : {"_id" : 0,

"division" : "$_id",

"density1990" : {"$divide" : ["$totalPop1990", "$totalAreaM"]},

"density2010" : {"$divide" : ["$totalPop2010", "$totalAreaM"]},

"denDelta" : {"$subtract" : [{"$divide" : ["$totalPop2010", "$totalAreaM"]}, {"$divide" : ["$totalPop1990","$totalAreaM"]}]},

"totalAreaM" : 1,

"totalPop1990" : 1,

"totalPop2010" : 1}},

{$sort : {"denDelta" : -1}}])](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-54-320.jpg)

![Aggregate options

db.cData.aggregate([<pipeline stages>],

{‘explain’ : false

'allowDiskUse' : true,

'cursor' : {'batchSize' : 5}})

• explain – similar to find().explain()

• allowDiskUse – enable use of disk to store intermediate

results

• cursor – specify the size of the initial result](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-56-320.jpg)

![Existing Alternatives to Joins

{ "_id": 10000,

"items": [

{ "productName": "laptop",

"unitPrice": 1000,

"weight": 1.2,

"remainingStock": 23},

{ "productName": "mouse",

"unitPrice": 20,

"weight": 0.2,

"remainingStock": 276}],

…

}

• Option 1: Include all data for

an order in the same document

– Fast reads

• One find delivers all the required data

– Captures full description at the time of the

event

– Consumes extra space

• Details of each product stored in many

order documents

– Complex to maintain

• A change to any product attribute must be

propagated to all affected orders

orders](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-60-320.jpg)

![Existing Alternatives to Joins

{

"_id": 10000,

"items": [

12345,

54321

],

...

}

• Option 2: Order document

references product documents

– Slower reads

• Multiple trips to the database

– Space efficient

• Product details stored once

– Lose point-in-time snapshot of full record

– Extra application logic

• Must iterate over product IDs in the order

document and find the product documents

• RDBMS would automate through a JOIN

orders

{

"_id": 12345,

"productName": "laptop",

"unitPrice": 1000,

"weight": 1.2,

"remainingStock": 23

}

{

"_id": 54321,

"productName": "mouse",

"unitPrice": 20,

"weight": 0.2,

"remainingStock": 276

}

products](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-62-320.jpg)

![$lookup

db.leftCollection.aggregate([{

$lookup:

{

from: “rightCollection”,

localField: “leftVal”,

foreignField: “rightVal”,

as: “embeddedData”

}

}])

Left Collection Right Collection](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-64-320.jpg)

![Worked Example – Data Set

db.postcodes.findOne()

{

"_id":ObjectId("5600521e50fa77da54d

fc0d2"),

"postcode": "SL6 0AA",

"location": {

"type": "Point",

"coordinates": [

51.525605,

-0.700974

]}}

db.homeSales.findOne()

{

"_id":ObjectId("56005dd980c3678b19792b7f"),

"amount": 9000,

"date": ISODate("1996-09-19T00:00:00Z"),

"address": {

"nameOrNumber": 25,

"street": "NORFOLK PARK COTTAGES",

"town": "MAIDENHEAD",

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 7DR"

}

}](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-65-320.jpg)

![Reduce Data Set First

db.homeSales.aggregate([

{$match: {

amount: {$gte:3000000}}

}

])

…

{

"_id":

ObjectId("56005dda80c3678b19799e52"),

"amount": 3000000,

"date": ISODate("2012-04-19T00:00:00Z"),

"address": {

"nameOrNumber": "TEMPLE FERRY

PLACE",

"street": "MILL LANE",

"town": "MAIDENHEAD",

"county": "WINDSOR AND

MAIDENHEAD",

"postcode": "SL6 5ND"

}

},…](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-66-320.jpg)

![Join (left-outer-equi) Results With Second Collection

db.homeSales.aggregate([

{$match: {

amount: {$gte:3000000}}

},

{$lookup: {

from: "postcodes",

localField: "address.postcode",

foreignField: "postcode",

as: "postcode_docs"}

}

])

...

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 5ND"

},

"postcode_docs": [

{

"_id": ObjectId("560053e280c3678b1978b293"),

"postcode": "SL6 5ND",

"location": {

"type": "Point",

"coordinates": [

51.549516,

-0.80702

]

}}]}, ...](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-67-320.jpg)

![Refactor Each Resulting Document

...},

{$project: {

_id: 0,

saleDate: ”$date",

price: "$amount",

address: 1,

location:

{$arrayElemAt:

["$postcode_docs.location", 0]}}

])

{ "address": {

"nameOrNumber": "TEMPLE FERRY PLACE",

"street": "MILL LANE",

"town": "MAIDENHEAD",

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 5ND"

},

"saleDate": ISODate("2012-04-19T00:00:00Z"),

"price": 3000000,

"location": {

"type": "Point",

"coordinates": [

51.549516,

-0.80702

]}},...](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-68-320.jpg)

![Sort on Sale Price & Write to Collection

...},

{$sort:

{price: -1}},

{$out: "hotSpots"}

])

…{"address": {

"nameOrNumber": "2 - 3",

"street": "THE SWITCHBACK",

"town": "MAIDENHEAD",

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 7RJ"

},

"saleDate": ISODate("1999-03-15T00:00:00Z"),

"price": 5425000,

"location": {

"type": "Point",

"coordinates": [

51.536848,

-0.735835

]}},...](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-69-320.jpg)

![Aggregated Statistics

db.homeSales.aggregate([

{$group:

{ _id:

{$year: "$date"},

higestPrice:

{$max: "$amount"},

lowestPrice:

{$min: "$amount"},

averagePrice:

{$avg: "$amount"},

amountStdDev:

{$stdDevPop: "$amount"}

}}

])

...

{

"_id": 1995,

"higestPrice": 1000000,

"lowestPrice": 12000,

"averagePrice": 114059.35206869633,

"amountStdDev": 81540.50490801703

},

{

"_id": 1996,

"higestPrice": 975000,

"lowestPrice": 9000,

"averagePrice": 118862,

"amountStdDev": 79871.07569783277

}, ...](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-70-320.jpg)

![Clean Up Output

...,

{$project:

{

_id: 0,

year: "$_id",

higestPrice: 1,

lowestPrice: 1,

averagePrice:

{$trunc: "$averagePrice"},

priceStdDev:

{$trunc: "$amountStdDev"}

}

}

])

...

{

"higestPrice": 1000000,

"lowestPrice": 12000,

"averagePrice": 114059,

"year": 1995,

"priceStdDev": 81540

},

{

"higestPrice": 2200000,

"lowestPrice": 10500,

"averagePrice": 307372,

"year": 2004,

"priceStdDev": 199643

},...](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-71-320.jpg)

![83

Optionally Manually Edit DRDL File

• Redact attributes

• Use more appropriate types

(sampling can get it wrong)

• Rename tables (v1.1+)

• Rename columns (v1.1+)

• Build new views using

MongoDB Aggregation

Framework

• e.g., $lookup to join 2 tables

- table: homesales

collection: homeSales

pipeline: []

columns:

- name: _id

mongotype: bson.ObjectId

sqlname: _id

sqltype: varchar

- name: address.county

mongotype: string

sqlname: address_county

sqltype: varchar

- name:

address.nameOrNumber

mongotype: int

sqlname:

address_nameornumber

sqltype: varchar](https://image.slidesharecdn.com/mongodb3-180129220716/85/MongoDB-3-2-Analytics-83-320.jpg)