Multimodal Interaction in Distributed and Ubiquitous Computing - ICIW 2010

- 1. ICIW 2010: The Fifth International Conference on Internet and Web Applications and Services, May 9-15, 2010, Barcelona, Spain
  - Multimodal Interaction in Distributed and Ubiquitous Computing
  - Marc Pous and Luigi Ceccaroni, bdigital

- 2. SUMMARY
  - Why multimodality?
  - Multimodal distributed services deployment
  - User tests and conclusions

- 3. In our cities, urban services have barely changed in a century

- 9. Photo: http://www.flickr.com/photos/loquat73/3385335980/

- 10. MOTIVATIONS 2: Interactions and multimodality

- 19. INREDIS: 3G = ACCESSIBILITY + UBIQUITY + INTEROPERABILITY (http://www.inredis.es)

- 20. INREDIS ICD

- 21. Management of distributed services that can process and synthesize multiple interaction modalities

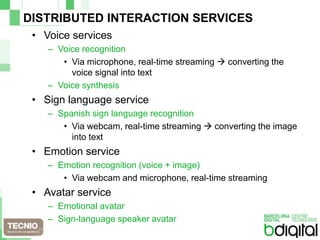

- 22. DISTRIBUTED INTERACTION SERVICES
  - Voice services
    - Voice recognition: via microphone, real-time streaming that converts the voice signal into text
    - Voice synthesis
  - Sign language service
    - Spanish sign language recognition: via webcam, real-time streaming that converts the image into text
  - Emotion service
    - Emotion recognition (voice + image): via webcam and microphone, real-time streaming
  - Avatar service
    - Emotional avatar
    - Sign-language speaker avatar

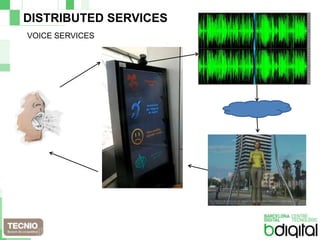
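To make the idea of "managing" these services more concrete, here is a minimal Python sketch of how an ICD might catalogue the services listed on slide 22 by the modalities each one consumes and produces, and then look them up. The class names, service identifiers, and modality labels (`audio`, `video`, `text`, `emotion`, `avatar`) are hypothetical; the slides do not describe the ICD's actual data model or discovery mechanism.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class InteractionService:
    """Hypothetical descriptor for one distributed interaction service."""
    name: str
    inputs: frozenset   # modalities the service consumes, e.g. {"audio"}
    outputs: frozenset  # modalities the service produces, e.g. {"text"}


@dataclass
class ServiceRegistry:
    """In-memory catalogue; a real deployment would resolve remote endpoints."""
    services: list = field(default_factory=list)

    def register(self, service: InteractionService) -> None:
        self.services.append(service)

    def find(self, input_modality: str, output_modality: str) -> list:
        # Names of services that accept one modality and emit another,
        # in registration order.
        return [
            s.name
            for s in self.services
            if input_modality in s.inputs and output_modality in s.outputs
        ]


registry = ServiceRegistry()
registry.register(InteractionService("voice_recognition", frozenset({"audio"}), frozenset({"text"})))
registry.register(InteractionService("voice_synthesis", frozenset({"text"}), frozenset({"audio"})))
registry.register(InteractionService("sign_language_recognition", frozenset({"video"}), frozenset({"text"})))
registry.register(InteractionService("emotion_recognition", frozenset({"audio", "video"}), frozenset({"emotion"})))
registry.register(InteractionService("emotional_avatar", frozenset({"text", "emotion"}), frozenset({"avatar"})))
registry.register(InteractionService("sign_language_avatar", frozenset({"text"}), frozenset({"avatar"})))

print(registry.find("video", "text"))  # ['sign_language_recognition']
```

Describing services by modality rather than by name lets a client ask for "something that turns video into text" without hard-coding which recognizer is deployed.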

- 23. DISTRIBUTED SERVICES: SIGN LANGUAGE SERVICES

- 25. How to consume multimodal distributed services offered by an ICD?

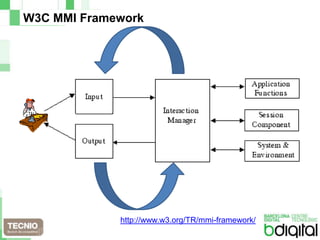

- 26. W3C MMI Architecture (http://www.w3.org/TR/mmi-arch/)

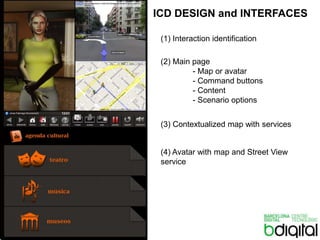
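In the W3C MMI Architecture, an Interaction Manager coordinates Modality Components by exchanging life-cycle events such as `StartRequest`, `StartResponse`, and `DoneNotification`. The sketch below simulates that exchange in-process for a single speech-recognition component. It is a simplification, not the specification's wire format: the field names only loosely mirror the event attributes (Source, Target, Context, RequestID, Status), the status strings are illustrative, and the component URIs and the stubbed recognition result are hypothetical.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class LifeCycleEvent:
    # Loosely modeled on MMI event attributes; "data" stands in for payloads.
    name: str
    source: str
    target: str
    context: str
    request_id: str
    status: str = ""
    data: dict = field(default_factory=dict)


class SpeechRecognitionMC:
    """Hypothetical modality component wrapping a remote voice recognizer."""

    def __init__(self, uri: str):
        self.uri = uri

    def handle(self, event: LifeCycleEvent) -> list:
        if event.name != "StartRequest":
            return []
        reply_fields = dict(source=self.uri, target=event.source,
                            context=event.context, request_id=event.request_id)
        # Acknowledge the start, then report a stubbed recognition result.
        return [
            LifeCycleEvent("StartResponse", status="success", **reply_fields),
            LifeCycleEvent("DoneNotification", status="success",
                           data={"text": "<recognized text>"}, **reply_fields),
        ]


class InteractionManager:
    """Sends StartRequest and collects the component's replies."""

    def __init__(self, uri: str):
        self.uri = uri
        self._ids = itertools.count(1)
        self.log = []

    def start(self, component: SpeechRecognitionMC, context: str) -> dict:
        request = LifeCycleEvent("StartRequest", self.uri, component.uri,
                                 context, f"req-{next(self._ids)}")
        self.log.append(request.name)
        result = {}
        for reply in component.handle(request):
            self.log.append(reply.name)
            if reply.name == "DoneNotification":
                result = reply.data
        return result


im = InteractionManager("im://icd")
mc = SpeechRecognitionMC("mc://voice-recognition")
print(im.start(mc, "ctx-1"))  # {'text': '<recognized text>'}
print(im.log)  # ['StartRequest', 'StartResponse', 'DoneNotification']
```

In the architecture itself these events travel asynchronously over a transport between separate components; the synchronous method calls here only show the order of the exchange.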

- 30. ICD DESIGN and INTERFACES
  - (1) Interaction identification
  - (2) Main page: map or avatar, command buttons, content, scenario options
  - (3) Contextualized map with services
  - (4) Avatar with map and Street View service

- 31. USER-CENTERED DESIGN
  - User profiles (personas): blind users, deaf users, people unable to read, people living in or visiting a city
  - Scenarios: context-aware informative scenario, emergency scenario
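One plausible way to connect these personas to the "Interaction identification" step is to derive the input and output services from the user's declared profiles. The Python sketch below does this with a hard-coded table. The mapping, the profile keys, and the `touch`, `text`, and `map` labels are assumptions made for illustration; the slides do not say how the ICD actually selects modalities.

```python
from typing import Dict, List

# Hypothetical fallback for people living in or visiting a city with no
# declared accessibility needs.
DEFAULT: Dict[str, List[str]] = {"input": ["touch"], "output": ["text", "map"]}

# Hypothetical persona -> preferred services table.
PROFILE_MODALITIES: Dict[str, Dict[str, List[str]]] = {
    "blind": {"input": ["voice_recognition"], "output": ["voice_synthesis"]},
    "deaf": {"input": ["sign_language_recognition", "touch"],
             "output": ["sign_language_avatar", "text", "map"]},
    "unable_to_read": {"input": ["voice_recognition", "touch"],
                       "output": ["voice_synthesis", "emotional_avatar", "map"]},
}


def select_modalities(profiles: List[str]) -> Dict[str, List[str]]:
    """Merge the preferences of every declared profile, preserving order
    and dropping duplicates; unknown or missing profiles use DEFAULT."""
    selected: Dict[str, List[str]] = {"input": [], "output": []}
    for profile in profiles or ["city_user"]:
        prefs = PROFILE_MODALITIES.get(profile, DEFAULT)
        for direction in ("input", "output"):
            for modality in prefs[direction]:
                if modality not in selected[direction]:
                    selected[direction].append(modality)
    return selected


print(select_modalities(["deaf"]))
```

Merging rather than picking a single profile keeps the sketch usable for people who match more than one persona.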

- 32. IMPLEMENTATION

- 33. USABILITY TESTING
  - PLI (People Led Innovation) methodology
  - Preliminary usability tests with a limited number of users
  - But the ICD implementation received good feedback!

- 34. CONCLUSIONS and FUTURE WORK
  - Implementation based on the W3C MMI Architecture idea
  - Platform able to integrate multimodal interactive distributed services and offer real-time interaction
  - Web-based applications and device-independent implementation
  - Improved user experience and accessibility for people with special needs
  - What are we working on now?
    - Real-time interaction: reducing the delay between interactions
    - Browser-based apps cannot easily access device hardware
    - Synchronous interaction vs. asynchronous technologies
    - Improving the orchestration of distributed services
    - Enhancing mobile usability
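One common technique for reducing the delay between interactions is to call independent services concurrently instead of one after another. The Python `asyncio` sketch below illustrates this for the voice and image halves of emotion recognition. The service names and latencies are made up, and the remote calls are simulated with `asyncio.sleep`; the slides do not describe how the ICD orchestrates its services.

```python
import asyncio
import time


async def call_service(name: str, latency_s: float) -> str:
    """Stand-in for a remote recognition call; the latency is simulated."""
    await asyncio.sleep(latency_s)
    return f"{name}: done"


async def sequential() -> list:
    # The second request starts only after the first has finished.
    voice = await call_service("voice_emotion", 0.2)
    face = await call_service("image_emotion", 0.3)
    return [voice, face]


async def concurrent() -> list:
    # Both requests are in flight at once, so the total wait is roughly
    # the slowest call rather than the sum of both.
    return list(await asyncio.gather(
        call_service("voice_emotion", 0.2),
        call_service("image_emotion", 0.3),
    ))


def timed(coro_fn):
    """Run a coroutine function to completion and measure wall-clock time."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn())
    return result, time.perf_counter() - start


if __name__ == "__main__":
    for fn in (sequential, concurrent):
        result, elapsed = timed(fn)
        print(f"{fn.__name__}: {result} in {elapsed:.2f} s")
```

`asyncio.gather` returns results in the order the awaitables were passed, so both versions yield the same list; only the elapsed time differs.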

- 36. Thank you! (also given in Catalan, "Moltes gràcies!", and Spanish, "¡Muchas gracias!")
  - Marc Pous and Luigi Ceccaroni: [email protected] and [email protected]