Neural Networks in the Wild: Handwriting Recognition

- 1. Neural Networks in the Wild: Handwriting Recognition ! By John Liu

- 2. Motivation ! US Post Office (700 million pieces of mail per day) ! HWAI (Lockheed-Martin 1997) ! Letter Recognition Improvement Program ! Electronic Health Records (Medical scribble) ! Banking: check processing ! Legal: signature verification ! Education: autograding

- 3. OCR is not HWR ! Optical Character Recognition vs. Handwriting Recognition ! Fixed fonts vs. free-flowing writing ! No character overlap vs. overlapping characters ! Easy alignment vs. flowing alignment ! Fixed aspect ratios vs. variable aspect ratios ! Low noise vs. can be noisy

- 4. HWR: Online vs Offline ! Online recognition = conversion of text as it is written ! Palm PDA, Google Handwrite ! Low noise = easier classification ! Features: stroke pressure, velocity, trajectory ! Offline recognition = image conversion of text ! Computer-vision based methods to extract glyphs ! Much more difficult than normal OCR

- 5. Offline HWR ! Preprocessing ! Discard irrelevant artifacts and remove noise ! Smoothing, thresholding, morphological filtering ! Segmentation and Extraction ! Find contours and bounding boxes ! Glyph extraction ! Classification ! Linear and ensemble methods ! Neural Networks

- 6. HWR Examples Scanned handwritten note • noisy background • varying character size • biased ground truth License Plate image • goal: plate ID • similar problem to HWR • registration renewal soon

- 7. Kernel Smoothing ! Blurring helps eliminate noise after thresholding ! Local kernel defines averaging area ! Used 8x8 kernel for example images ! OpenCV: cv2.blur()

- 8. Thresholding ! Thresholding converts to b/w image ! Colors are inverted so (black,white) = (0,1) ! Some noise is present, but greatly reduced due to smoothing in previous step ! OpenCV: cv2.threshold()
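
The smoothing and thresholding steps can be sketched in plain NumPy. This is an illustration only: it stands in for `cv2.blur()` and `cv2.threshold()` rather than calling them, and the 3x3 kernel, the cutoff of 128, and the synthetic page are assumptions, not the settings used for the example images.

```python
import numpy as np

def box_blur(img, k):
    """Average each pixel over a k x k neighbourhood (edges are replicated)."""
    pad_before = k // 2
    pad_after = k - 1 - pad_before
    padded = np.pad(img.astype(float), ((pad_before, pad_after),) * 2, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def threshold_inverted(img, cutoff):
    """Inverted binary threshold: dark ink becomes 1, light paper becomes 0."""
    return (img < cutoff).astype(np.uint8)

# Synthetic 12x12 "scan": light paper, one 6x6 ink blob, one dark noise pixel.
page = np.full((12, 12), 200.0)
page[3:9, 3:9] = 20.0
page[10, 1] = 20.0

raw_mask = threshold_inverted(page, 128)                  # noise pixel survives
smooth_mask = threshold_inverted(box_blur(page, 3), 128)  # noise pixel is gone
```

Blurring first spreads the isolated dark pixel over its light neighbours, so it no longer crosses the cutoff, while the larger ink blob does.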

- 9. Morphological Filtering ! Erosion: eat away at the boundaries of objects ! Removes white noise and small artifacts ! OpenCV: cv2.erode() with 4x4 kernel ! Dilation: increases thickness and white region ! opposite of erosion ! Useful in joining broken parts of an object ! OpenCV: cv2.dilate() with 2x2 kernel

- 10. Opening vs Closing ! Opening = erosion followed by dilation ! Eliminates noise outside objects ! Closing = dilation followed by erosion ! Eliminates noise (small holes) within objects
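
A small NumPy sketch of opening and closing on a binary mask. It is a stand-in for `cv2.erode()` and `cv2.dilate()`, not a call to them. The odd 3x3 square kernel and zero padding at the border are assumptions chosen to keep the example short.

```python
import numpy as np

def erode(mask, k=3):
    """A pixel stays 1 only if every pixel in its k x k window is 1 (k odd)."""
    padded = np.pad(mask, k // 2, mode="constant", constant_values=0)
    out = np.ones_like(mask)
    for dy in range(k):
        for dx in range(k):
            out &= padded[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out

def dilate(mask, k=3):
    """A pixel becomes 1 if any pixel in its k x k window is 1 (k odd)."""
    padded = np.pad(mask, k // 2, mode="constant", constant_values=0)
    out = np.zeros_like(mask)
    for dy in range(k):
        for dx in range(k):
            out |= padded[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out

def opening(mask, k=3):
    return dilate(erode(mask, k), k)

def closing(mask, k=3):
    return erode(dilate(mask, k), k)

block = np.zeros((9, 9), dtype=np.uint8)
block[2:7, 2:7] = 1

speckled = block.copy()
speckled[0, 8] = 1      # stray pixel outside the object

holed = block.copy()
holed[4, 4] = 0         # pinhole inside the object
```

Here `opening(speckled)` removes the stray pixel and `closing(holed)` fills the pinhole. Both return the clean block.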

- 11. Contour/Bounding Box ! Contours = set of all outer contiguous points ! Approximate contours as a reduced polygon ! Calculate the bounding rectangle ! OpenCV: cv2.findContours(), cv2.approxPolyDP(), cv2.boundingRect()
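
To show what the bounding-box step produces, here is a pure-Python sketch that uses a swapped-in technique: 8-connected flood fill instead of contour tracing with `cv2.findContours()`. The function name `bounding_boxes` and the toy mask are hypothetical. The `(x, y, w, h)` tuple order follows `cv2.boundingRect()`.

```python
from collections import deque

def bounding_boxes(mask):
    """Return (x, y, w, h) for each 8-connected blob of 1s, sorted left to right."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill from this unvisited ink pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            top, bottom, left, right = r, r, c, c
            while queue:
                y, x = queue.popleft()
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            boxes.append((left, top, right - left + 1, bottom - top + 1))
    return sorted(boxes)

mask = [
    [0, 1, 1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 1, 0],
    [0, 1, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 1, 0],
]
boxes = bounding_boxes(mask)  # one box per glyph-like blob
```

Cropping the image to each box gives the candidate glyphs passed to the classifier.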

- 12. Glyph Extraction ! Results from bounding boxes

- 13. NIST Special DB 19 ! Contains 814,255 segmented handwritten characters ! Superset of MNIST that includes alphabetic characters ! 62 character classes [A-Z], [a-z], [0-9], 128x128 pixels ! We down-sample to 32x32 and use only a subsample of 90,000 characters (train = 70,000; test and validation = 10,000 each)
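
The slides do not say how the 128x128 images were down-sampled. One simple option, shown here as an assumption, is to average each 4x4 block of pixels.

```python
import numpy as np

def downsample(img, factor):
    """Shrink an image by averaging non-overlapping factor x factor blocks."""
    h, w = img.shape
    blocks = img.reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

# Hypothetical 128x128 glyph: left half ink (1.0), right half blank (0.0).
glyph = np.zeros((128, 128))
glyph[:, :64] = 1.0

small = downsample(glyph, 4)   # 32x32 image
features = small.reshape(-1)   # flattened 1024-element input vector

# Split sizes from the slide: 70,000 train + 10,000 validation + 10,000 test.
split_total = 70_000 + 10_000 + 10_000
```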

- 14. MNIST vs SD19 ! MNIST (LeCun) vs. SD19 ! 10 classes vs. 62 classes ! Digits vs. upper & lower case + digits ! 28x28 pixels vs. 128x128 pixels ! 60,000 samples vs. 814,255 samples ! boring

- 15. Classification ! Goal: correctly classify character with highest: ! Accuracy ! F1 = harmonic mean of precision & recall ! Typical Methods ! Linear ! SVM ! Ensemble ! Neural Networks
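
A short pure-Python sketch of the two metrics. F1 is computed per class as the harmonic mean of precision and recall. The unweighted (macro) average over classes is an assumption about what "avg F1" means in the later slides. The labels below are made up.

```python
def precision_recall_f1(y_true, y_pred, label):
    """Per-class precision, recall and F1 (harmonic mean of the two)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)

def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(precision_recall_f1(y_true, y_pred, l)[2] for l in labels) / len(labels)

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = ["a", "a", "a", "b", "b", "0"]
y_pred = ["a", "a", "b", "b", "0", "0"]
```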

- 16. Logistic Regression ! a.k.a. Softmax, MaxEnt, logit regression ! We use multinomial LR with classes = 62 ! Implemented with scikit-learn ! Accuracy score = 59%, avg F1 score = 0.56 (baseline)

- 17. SVM - RBF ! We use a Gaussian kernel (a.k.a. Radial Basis Function) ! Implemented with scikit-learn (slow!) ! Accuracy score = 65%, avg F1 score = 0.61

- 18. Random Forest ! Ensemble estimator that builds a random forest of decision trees and combines their estimations ! Crowd Intelligence: “the wisdom of Crowds” ! Implemented with scikit-learn (fast!) ! Accuracy score = 69%, avg F1 score = 0.66

- 19. Extra Trees ! a.k.a. Extremely Randomized Trees, similar to Random Forest except that the split points are also randomized ! RF picks the best split from a random subset of features ! Extra Trees picks a random split from a random subset of features ! Accuracy score = 73%, avg F1 score = 0.71

- 20. Neural Networks ! HWR inherently a computer vision problem, apt for neural networks given recent advances ! Inputs: image reshaped as a binary vector ! Outputs: one-hot representation = 62 bit vector ! Question: How do you actually go about building a (deep) neural network model? WARNING: don’t try this in Excel
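
A minimal sketch of the 62-way one-hot output encoding. The class ordering (upper case, then lower case, then digits) is an assumption. The slides only give the class count.

```python
import string

# Assumed class order: A-Z, a-z, 0-9 (62 classes in total).
CLASSES = string.ascii_uppercase + string.ascii_lowercase + string.digits

def one_hot(char):
    """Encode a character as a 62-element vector with a single 1."""
    vec = [0] * len(CLASSES)
    vec[CLASSES.index(char)] = 1
    return vec

def decode(scores):
    """Map a 62-element score vector back to the highest-scoring character."""
    best = max(range(len(scores)), key=scores.__getitem__)
    return CLASSES[best]
```

At prediction time the network's softmax output plays the role of `scores`, and `decode` picks the most probable class.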

- 21. Theano ! Python library that implements Tensor objects that leverage GPUs for calculation ! Plays well with numpy.ndarray datatypes ! Transparent use of GPU (float32) = 140X faster ! Dynamic C code generation ! Installation is not fun: ! Update GPU driver ! Install CUDA 6.5 + Toolkit ! Install CUDAMat

- 22. Pylearn2 ! Python machine learning library toolbox built upon Theano (GPU speed) ! Provides flexibility to build customized neural network models with full access to hyper-params (nodes per layer, learning rate, etc.) ! Uses pickle for file I/O ! Two methods to implement models: ! YAML ! ipython notebook

- 23. Pylearn2 Code Structure ! Dataset specification ! Uses DenseDesignMatrix class ! Model configuration ! LR, Kmeans, Softmax, RectLinear, Autoencoder, RBM, DBM, Cuda-convnet, more… ! Training Algorithm choice ! SGD, BGD, Dropout, Corruption ! Training execution ! Train class ! Prediction and results

- 24. #1 Feedforward NN ! Hidden layer = sigmoid, Output layer = softmax ! Input = 1024 (=32x32) bit vector ! Prediction = 62 bit vector (one-hot rep)

- 25. Hyperparams ! Hidden layer = 400 neurons ! Output layer = 62 neurons ! Random initialization (symmetry breaking) ! SGD algorithm (mini-batch stochastic gradient descent) ! Fixed learning rate = 0.05 ! Batch size = 100 ! Termination = after 100 epochs
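
The architecture and hyperparameters above can be sketched in plain NumPy. This is not the pylearn2 code from the talk. The layer sizes, batch size and learning rate come from the slide, while the uniform initialization range, the random data and the cross-entropy loss are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
N_IN, N_HIDDEN, N_OUT, BATCH, LR = 1024, 400, 62, 100, 0.05

# Small random weights break the symmetry between hidden units.
params = {
    "W1": rng.uniform(-0.05, 0.05, (N_IN, N_HIDDEN)),
    "b1": np.zeros(N_HIDDEN),
    "W2": rng.uniform(-0.05, 0.05, (N_HIDDEN, N_OUT)),
    "b2": np.zeros(N_OUT),
}

def forward(x):
    """Sigmoid hidden layer followed by a softmax output layer."""
    hidden = 1.0 / (1.0 + np.exp(-(x @ params["W1"] + params["b1"])))
    logits = hidden @ params["W2"] + params["b2"]
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    return hidden, probs

def sgd_step(x, y):
    """One mini-batch SGD update; returns the loss before the update."""
    hidden, probs = forward(x)
    loss = -np.mean(np.sum(y * np.log(probs), axis=1))
    d_logits = (probs - y) / len(x)
    d_hidden = (d_logits @ params["W2"].T) * hidden * (1.0 - hidden)
    params["W2"] -= LR * hidden.T @ d_logits
    params["b2"] -= LR * d_logits.sum(axis=0)
    params["W1"] -= LR * x.T @ d_hidden
    params["b1"] -= LR * d_hidden.sum(axis=0)
    return loss

# One made-up mini-batch of binary inputs and one-hot targets.
x = rng.integers(0, 2, (BATCH, N_IN)).astype(float)
y = np.eye(N_OUT)[rng.integers(0, N_OUT, BATCH)]
losses = [sgd_step(x, y) for _ in range(20)]
```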

- 26. #1 pylearn2 code

- 27. #1 Running in ipython Epochs seen: 100 Batches seen: 70000 Examples seen: 7000000 learning_rate: 0.0500000119209 objective: 0.623726010323 y0_col_norms_max: 11.5188903809 y0_col_norms_mean: 6.90935611725 y0_col_norms_min: 4.80359125137 y0_max_max_class: 0.996758043766 y0_mean_max_class: 0.759563267231 y0_min_max_class: 0.221193775535 y0_misclass: 0.173800006509 y0_nll: 0.623726010323 y0_row_norms_max: 6.41655635834 y0_row_norms_mean: 2.55642676353 y0_row_norms_min: 0.48346811533 Accuracy = 83%
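
The counters in this log can be checked against the hyperparameters with a few lines of arithmetic. The last step assumes accuracy is reported as 1 minus `y0_misclass`.

```python
train_examples = 70_000
batch_size = 100
epochs = 100

batches_per_epoch = train_examples // batch_size   # 700
batches_seen = batches_per_epoch * epochs          # matches "Batches seen"
examples_seen = train_examples * epochs            # matches "Examples seen"

misclass = 0.173800006509                          # y0_misclass from the log
accuracy_pct = round(100 * (1 - misclass))         # rounds to the reported 83
```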

- 28. Problem: Overfitting ! It’s easy to overfit using neural networks ! How many neurons per layer? 400? 800? ! Methods to deal with it include: ! L1, L2, ElasticNet regularization ! Early stopping ! Model averaging ! DROPOUT

- 29. Remedy: Dropout ! Dropout was introduced by G. Hinton and colleagues to address overfitting ! Randomly drops units during training, which approximates averaging an ensemble of many thinned networks ! Prevents neurons from co-adapting
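
A minimal NumPy sketch of the dropout idea at training time. The "inverted" scaling by `1 / keep_prob` and the keep probability of 0.5 are assumptions for illustration. The slides do not state the settings used.

```python
import numpy as np

def dropout(activations, keep_prob, rng):
    """Zero each unit with probability 1 - keep_prob and rescale the rest."""
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
hidden = np.ones((1000, 400))            # made-up hidden-layer activations
dropped = dropout(hidden, 0.5, rng)      # about half the units are zeroed
```

Because a different random mask is drawn for every batch, no unit can rely on a specific partner unit being present, which is what discourages co-adaptation. At test time all units are kept and, with this scaling, no further correction is needed.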

- 30. #2 NN w/Dropout ! 2 Softplus hidden layers, Softmax output layer ! Input = 1024 (=32x32) bit vector ! Prediction = 62 bit vector (one-hot rep) ! Softplus: $f(x) = \log(1 + e^x)$
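
Softplus, log(1 + e^x), overflows if evaluated literally for large x. The rearrangement below is a common numerically stable form, shown as a sketch rather than as what pylearn2 does internally.

```python
import math

def softplus(x):
    """Stable log(1 + exp(x)): max(x, 0) + log1p(exp(-|x|))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def softplus_naive(x):
    """Direct formula; fine for moderate x, overflows for large x."""
    return math.log(1.0 + math.exp(x))
```

For large positive x softplus approaches x, and for large negative x it approaches 0, so it behaves like a smooth version of max(x, 0).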

- 31. #2 pylearn2 code Termination Condition = stop if no improvement after N=10 epochs
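
The termination rule can be sketched as a simple patience counter. The function name and the loss values are hypothetical, and pylearn2's own criterion may define "improvement" differently (for example, as a minimum proportional decrease).

```python
def epochs_until_stop(valid_losses, patience=10):
    """Return (stop_epoch, best_epoch) using 1-based epoch numbers.

    Training stops once `patience` epochs have passed without a new
    best validation loss.
    """
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(valid_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(valid_losses), best_epoch

# Made-up validation curve: improves for 3 epochs, then plateaus.
history = [1.0, 0.9, 0.8] + [0.85] * 20
stop_epoch, best_epoch = epochs_until_stop(history, patience=10)
```

In practice the weights saved at `best_epoch` are the ones kept for prediction.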

- 32. #2 running in ipython Epochs seen: 93 Batches seen: 65100 Examples seen: 6510000 learning_rate: 0.100000023842 total_seconds_last_epoch: 10.1289653778 training_seconds_this_epoch: 6.20919704437 valid_objective: 1.4562972784 valid_y0_col_norms_max: 5.99511814117 valid_y0_col_norms_mean: 2.90585327148 valid_y0_col_norms_min: 2.15357899666 valid_y0_max_max_class: 0.967477440834 valid_y0_mean_max_class: 0.583315730095 valid_y0_min_max_class: 0.12644392252 valid_y0_misclass: 0.253300011158 valid_y0_nll: 0.946564733982 valid_y0_row_norms_max: 2.06650829315 valid_y0_row_norms_mean: 0.773801326752 valid_y0_row_norms_min: 0.347277522087 Accuracy = 76%

- 33. Problem: SGD speed ! SGD learning tends to be slow when curvature differs among features ! Errors change trajectory of gradient, but always perpendicular to feature surface ! Update rule: $w_t = w_{t-1} - \epsilon \, dE/dw$

- 34. Remedy: Momentum ! Errors change the velocity of the weights, not the weights directly ! Update rule: $v_t = \alpha v_{t-1} - \epsilon \, dE/dw$, then $w_t = w_{t-1} + v_t$ ! Start with small momentum to dampen oscillations ! Fully implemented in Pylearn2 ! Other methods (conjugate gradient, Hessian-free)
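
A toy comparison of plain gradient descent and momentum on a quadratic error whose curvature differs by a factor of 50 between two weights. In the momentum variant the velocity is carried over from the previous step and decayed by α. The error surface, the learning rate of 0.02, and the fixed α = 0.9 are assumptions for illustration only (the talk ramps momentum up from a small value).

```python
CURVATURE = (1.0, 50.0)   # E(w) = 0.5 * (1 * w0^2 + 50 * w1^2)

def error(w):
    return 0.5 * sum(c * wi * wi for c, wi in zip(CURVATURE, w))

def grad(w):
    return [c * wi for c, wi in zip(CURVATURE, w)]

def run_sgd(steps, lr):
    """w_t = w_{t-1} - lr * dE/dw"""
    w = [1.0, 1.0]
    for _ in range(steps):
        w = [wi - lr * gi for wi, gi in zip(w, grad(w))]
    return w

def run_momentum(steps, lr, alpha):
    """v_t = alpha * v_{t-1} - lr * dE/dw, then w_t = w_{t-1} + v_t"""
    w = [1.0, 1.0]
    v = [0.0, 0.0]
    for _ in range(steps):
        v = [alpha * vi - lr * gi for vi, gi in zip(v, grad(w))]
        w = [wi + vi for wi, vi in zip(w, v)]
    return w

error_sgd = error(run_sgd(100, lr=0.02))
error_momentum = error(run_momentum(100, lr=0.02, alpha=0.9))
```

With these settings plain gradient descent crawls along the low-curvature direction, while momentum accumulates speed there and reaches a lower error in the same number of steps.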

- 35. #3 NN w/Momentum ! Rectified Linear hidden layer, Softmax output layer ! Input = 1024 (=32x32) bit vector ! Prediction = 62 bit vector (one-hot rep) ! Hidden = 400 neurons ! Initial Momentum = 0.5 ! Final Momentum = 0.99 ! Rectified Linear: $f(x) = \max(x, 0)$

- 36. #3 pylearn2 code

- 37. #3 running in ipython Accuracy = 76% Epochs seen: 27 Batches seen: 18900 Examples seen: 1890000 learning_rate: 0.100000023842 momentum: 0.760000526905 total_seconds_last_epoch: 4.66804122925 training_seconds_this_epoch: 2.96171355247 valid_objective: 1.42965459824 valid_y0_col_norms_max: 4.5757818222 valid_y0_col_norms_mean: 3.47835850716 valid_y0_col_norms_min: 2.6680624485 valid_y0_max_max_class: 0.962530076504 valid_y0_mean_max_class: 0.555216372013 valid_y0_min_max_class: 0.12590457499 valid_y0_misclass: 0.249799996614 valid_y0_nll: 0.971761405468 valid_y0_row_norms_max: 2.2137401104 valid_y0_row_norms_mean: 1.3540699482 valid_y0_row_norms_min: 0.666570603848

- 38. Autoencoders ! Learns efficient codings (dimensionality reduction) ! Linear units = similar to PCA (+rotation) ! Nonlinear units = manifold learning ! Autoencoders are deterministic (not good at predicting)

- 39. Denoising Autoencoders ! Corrupt input with noise (randomly set to 0), then force hidden layer to predict input source: neuraltip
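
The corruption step can be sketched as masking noise in NumPy. The corruption level of 0.3 and the random input are assumptions. The slides do not give the value used.

```python
import numpy as np

def corrupt(x, corruption_level, rng):
    """Set each input to 0 independently with probability corruption_level."""
    keep = rng.random(x.shape) >= corruption_level
    return x * keep

rng = np.random.default_rng(0)
clean = np.ones((100, 1024))              # made-up batch of flattened images
noisy = corrupt(clean, 0.3, rng)          # roughly 30% of pixels zeroed
```

The autoencoder is fed `noisy` but its reconstruction error is measured against `clean`, so the hidden layer has to learn structure that lets it fill in the missing pixels.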

- 40. #4 Stacked DAE ! Autoencoders for hidden layers 1 & 2, softmax output layer ! Unsupervised training for autoencoder hidden layers ! Pre-train hidden layers 1 & 2 sequentially, stack a softmax output layer on top and fine-tune ! Supervised training to fine-tune the stacked DAE

- 41. #4 training layer 1 ! Pre-train Layer 1, save weights Epochs seen: 10 Batches seen: 7000 Examples seen: 700000 learning_rate: 0.0010000000475 objective: 9.47059440613 total_seconds_last_epoch: 5.54894971848 training_seconds_this_epoch: 3.88439941406

- 42. #4 training layer 2 ! Pre-train layer 2, using outputs of layer 1 as inputs Epochs seen: 10 Batches seen: 7000 Examples seen: 700000 learning_rate: 0.0010000000475 objective: 2.91170930862 total_seconds_last_epoch: 4.94157600403 training_seconds_this_epoch: 3.63193702698

- 43. #4 Fine-tuning ! SGD with momentum for 50 Epochs

- 44. Classification Results (Model: Accuracy) ! Logistic Regression: 59% ! SVM w/RBF: 65% ! Random Forest: 69% ! Extremely Random Forest (Extra Trees): 73% ! 2-layer Sigmoid: 83% ! 3-layer Softplus w/dropout: 76% ! 2-layer RectLin w/momentum: 76% ! Stacked DAE w/momentum: 89%

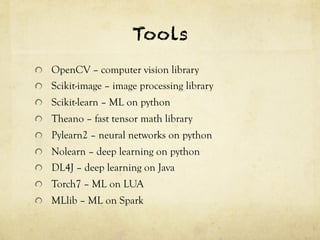

- 45. Tools ! OpenCV – computer vision library ! Scikit-image – image processing library ! Scikit-learn – ML on python ! Theano – fast tensor math library ! Pylearn2 – neural networks on python ! Nolearn – deep learning on python ! DL4J – deep learning on Java ! Torch7 – ML on LUA ! MLlib – ML on Spark

- 46. LAST WORDS I LIKE CHINESE FOOD - STACKED DAE
