NGINX as a Content Cache

- 1. Content Caching with NGINX Plus: How to cache content for your applications to ensure enhanced performance for your users

- 2. About Me: Sean Maritz, Technical Solutions Architect | ©2020 F5 | CONFIDENTIAL
  - Working in technology for 20 years.
  - Background: system integration; telecommunications and unified communications; WAN optimisation and CDN; video streaming.
  - 14 years in pre-sales.
  - 2 years at NGINX.
  - Former NGINX customer.
  - LinkedIn: https://www.linkedin.com/in/sean-maritz-489917/

- 3. Agenda
  - What is NGINX Plus?
  - Configuration Files
  - Server & Location Blocks
  - Directives & Variables
  - Regex
  - Example
  - Advanced Cache Deployments

- 4. What is NGINX Plus? A brief history of NGINX and NGINX Plus

- 5. 450M sites run NGINX. We have tremendous insight into application patterns.

- 6. About NGINX
  - NGINX OSS released in 2003.
  - NGINX Inc. founded in 2011; NGINX Plus first released in 2013.
  - Acquired by F5 in May 2019.
  - 1,500+ commercial customers.
  - Use cases: web server, reverse proxy, load balancer, cache, web application firewall, internal DDoS protection, API gateway, K8s IC (Kubernetes Ingress Controller), sidecar proxy.

- 7. NGINX Plus works in all environments

- 8. What is a Cache?
  - Collins Dictionary: "A cache or cache memory is an area of computer memory that is used for temporary storage of data and can be accessed more quickly than the main memory."

- 9. Configuration Files

- 10. Default Directory of NGINX Configuration Files: /etc/nginx

- 11. HTML Directory: /usr/share/nginx/html
  - 50x error page
  - Dashboard
  - Index page
  - NGINX module reference
  - swagger-ui

- 12. Logs: /var/log/nginx. Default logs:
  - access.log
  - error.log

- 13. Server & Location Blocks

- 14. Structure of a Configuration File
  - Main
  - Events
  - Http
    - Server
      - Location
        - Location (nested)
  - Stream
    - Server
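The context hierarchy on this slide can be sketched as a skeleton configuration file. This is only an illustration: the ports, paths, and the stream backend address are placeholder assumptions, not values from the talk.

```nginx
# Main context: directives outside any block
user  nginx;
worker_processes  auto;

events {
    worker_connections  1024;
}

http {
    server {
        listen 80;

        location / {
            root /usr/share/nginx/html;

            # Nested location (must fall under the parent prefix)
            location /images/ {
                expires 1h;
            }
        }
    }
}

stream {
    server {
        listen 12345;                 # hypothetical TCP port
        proxy_pass 127.0.0.1:8080;    # hypothetical backend
    }
}
```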

- 15. Key-Value Store. The NGINX Plus API can be used to maintain a set of key-value pairs which NGINX Plus can access at runtime. To configure this, follow these steps:
  - Set up a shared-memory zone to store the key-value pairs (the keyval_zone directive).
  - Give the zone a name.
  - Specify the maximum amount of memory to allocate for it.
  - Optionally, specify a state file to store the entries so they persist across NGINX Plus restarts.
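The steps above can be sketched as follows. This is a minimal sketch for NGINX Plus; the zone name `one`, its 1 MB size, the state-file path, and the `$target` variable are illustrative assumptions. The `keyval` directive and the `api` location are not mentioned on the slide; they are included only to show how the zone would be read and written.

```nginx
http {
    # Shared-memory zone "one" (1 MB) with an optional state file
    # so entries persist across NGINX Plus restarts
    keyval_zone zone=one:1m state=/var/lib/nginx/state/one.keyval;

    # Look up $remote_addr in the zone; the result lands in $target
    keyval $remote_addr $target zone=one;

    server {
        listen 80;

        # Read-write NGINX Plus API endpoint used to manage the pairs
        location /api {
            api write=on;
        }
    }
}
```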

- 16. Defaults: the include directive
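As a rough illustration of the include directive, a packaged nginx.conf typically pulls in additional files from /etc/nginx like this (the exact defaults vary by distribution and version, so treat the paths as assumptions):

```nginx
http {
    # MIME type mappings shipped with the package
    include /etc/nginx/mime.types;

    # Every *.conf file in conf.d is read into the http context
    include /etc/nginx/conf.d/*.conf;
}
```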

- 17. Directives & Variables

- 18. Directives: configuration directives for caching (http, server & location)
  - proxy_cache - Defines a shared memory zone used for caching.
  - proxy_cache_background_update - Allows starting a background subrequest to update an expired cache item, while a stale cached response is returned to the client.
  - proxy_cache_bypass - Defines conditions under which the response will not be taken from a cache.
  - proxy_cache_convert_head - Enables or disables the conversion of the "HEAD" method to "GET" for caching. When the conversion is disabled, the cache key should be configured to include the $request_method.
  - proxy_cache_key - Defines a key for caching.
  - proxy_cache_lock - When enabled, only one request at a time will be allowed to populate a new cache element identified according to the proxy_cache_key directive by passing a request to a proxied server.
  - proxy_cache_lock_age - If the last request passed to the proxied server for populating a new cache element has not completed for the specified time, one more request may be passed to the proxied server.
  - proxy_cache_lock_timeout - Sets a timeout for proxy_cache_lock. When the time expires, the request will be passed to the proxied server; however, the response will not be cached.
  - proxy_cache_methods - If the client request method is listed in this directive then the response will be cached. "GET" and "HEAD" methods are always added to the list, though it is recommended to specify them explicitly.
  - proxy_cache_min_uses - Sets the number of requests after which the response will be cached.
  - proxy_cache_path - Sets the path and other parameters of a cache.
  - proxy_cache_purge - Defines conditions under which the request will be considered a cache purge request.
  - proxy_cache_revalidate - Enables revalidation of expired cache items using conditional requests with the "If-Modified-Since" and "If-None-Match" header fields.
  - proxy_cache_use_stale - Determines in which cases a stale cached response can be used during communication with the proxied server.
  - proxy_cache_valid - Sets caching time for different response codes.
  - Alphabetical index of directives: http://nginx.org/en/docs/dirindex.html

- 19. Variables: configuration variables for caching (http, server & location)
  - $cookie_nocache - the cookie named nocache
  - $arg_nocache - the argument named nocache in the request line
  - $http_pragma - the Pragma request header field; in $http_name variables, the last part of the variable name is the header field name
  - $http_authorization - the Authorization request header field, following the same naming rule
  - $host - in this order of precedence: host name from the request line, or host name from the "Host" request header field, or the server name matching a request
  - $request_uri - full original request URI (with arguments)
  - $cookie_user - the cookie named user
  - Alphabetical index of variables: http://nginx.org/en/docs/varindex.html

- 20. Regular Expressions (Regex): using PCRE to extract the information you need.

- 21. Regular Expressions. NGINX regex locations are of the form: For example, a location block with the following regex handles all PHP requests with a URI ending with myapp/filename.php, such as /test/myapp/hello.php and /myapp/hello.php. The asterisk after the tilde (~*) makes the match case insensitive. NGINX and the regex tester support positional capture groups in location blocks. In the following example, the first group captures everything before the PHP file name and the second captures the PHP filename: For the URI /myapp/hello.php, the variable $1 is set to /myapp and $2 is set to hello.php. NGINX also supports named capture groups (but note that the regex tester does not): In this case the variable $begin is set to /myapp and $end is set
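The code for this slide did not survive in the transcript. The three location blocks below are a reconstruction that matches the behaviour described in the text; the exact patterns used in the talk may have differed. They belong inside a server {} block.

```nginx
# General form: location regex-operator regex { ... }

# Case-insensitive match for URIs ending in myapp/<filename>.php
location ~* /myapp/.+\.php$ {
    # ...
}

# Positional capture groups: for /myapp/hello.php,
# $1 is /myapp and $2 is hello.php
location ~* (.*/myapp)/(.+\.php)$ {
    # ...
}

# Named capture groups: the same captures are exposed
# as $begin and $end
location ~* (?<begin>.*myapp)/(?<end>.+\.php)$ {
    # ...
}
```

NGINX checks regex locations in the order they appear and uses the first match, so in a real configuration only one of these would normally be present.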

- 22. Maps. NGINX maps that use regular expressions are of the form: For example, this map block sets the variable $isphp to 1 if the URI (as recorded in the $uri variable) ends in .php, and 0 if it does not (the match is case sensitive):

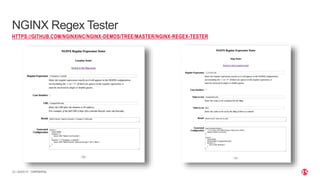
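In text form, a map block matching the description on this slide might look like the sketch below. It goes in the http {} context; the general shape is a source variable, a variable to set, and one regex-to-value pair per line.

```nginx
# map <source variable> <variable to set> { <regex> <value>; ... }
map $uri $isphp {
    ~\.php$   1;    # "~" marks a case-sensitive regex
    default   0;    # used when no pattern matches
}
```

Using `~*` instead of `~` would make the match case insensitive.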

- 23. NGINX Regex Tester: https://github.com/nginxinc/NGINX-Demos/tree/master/nginx-regex-tester

- 24. Example & Demonstration

- 25. Enable the Caching of Responses. To enable caching, include the proxy_cache_path directive in the top-level http {} context. The mandatory first parameter is the local filesystem path for cached content, and the mandatory keys_zone parameter defines the name and size of the shared memory zone that is used to store metadata about cached items. Then include the proxy_cache directive in the context (protocol type, virtual server, or location) for which you want to cache server responses, specifying the zone name defined by the keys_zone parameter to the proxy_cache_path directive (in this case, one).
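A minimal sketch of the two steps follows. The zone name `one` comes from the slide; the cache path, the 10 MB zone size, and the backend address are assumptions for illustration.

```nginx
http {
    # Step 1: where cached files live, plus a 10 MB shared memory
    # zone named "one" for cache metadata
    proxy_cache_path /data/nginx/cache keys_zone=one:10m;

    server {
        # Step 2: cache responses for this virtual server in zone "one"
        proxy_cache one;

        location / {
            proxy_pass http://localhost:8000;   # hypothetical backend
        }
    }
}
```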

- 26. NGINX Processes Involved in Caching. There are two additional NGINX processes involved in caching:
  - The cache manager is activated periodically to check the state of the cache. If the cache size exceeds the limit set by the max_size parameter to the proxy_cache_path directive, the cache manager removes the data that was accessed least recently. The amount of cached data can temporarily exceed the limit during the time between cache manager activations.
  - The cache loader runs only once, right after NGINX starts. It loads metadata about previously cached data into the shared memory zone. Loading the whole cache at once could consume enough resources to slow NGINX performance during the first few minutes after startup.

  To avoid this, configure iterative loading of the cache by including the following parameters to the proxy_cache_path directive:
  - loader_threshold - Duration of an iteration, in milliseconds (by default, 200)
  - loader_files - Maximum number of items loaded during one iteration (by default, 100)
  - loader_sleep - Delay between iterations, in milliseconds (by default, 50)

  In the following example, iterations last 300 milliseconds or until 200 items have been loaded:
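The example described on this slide (300 ms iterations, 200 items per iteration) could be written as below; the cache path and zone name are assumptions. Since loader_sleep is not set, its default applies.

```nginx
proxy_cache_path /data/nginx/cache keys_zone=one:10m
                 loader_threshold=300 loader_files=200;
```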

- 27. Levels: directory hierarchy. The levels parameter sets up a two-level directory hierarchy under /path/to/cache/. Having a large number of files in a single directory can slow down file access, so we recommend a two-level directory hierarchy for most deployments. If the levels parameter is not included, NGINX puts all files in the same directory. For example, in the following configuration: proxy_cache_path /data/nginx/cache levels=1:2 keys_zone=one:10m; file names in a cache will look like this: /data/nginx/cache/c/29/b7f54b2df7773722d382f4809d65029c

- 28. Specifying Which Requests to Cache: proxy_cache_key. By default, NGINX Plus caches all responses to requests made with the HTTP GET and HEAD methods the first time such responses are received from a proxied server. As the key (identifier) for a request, NGINX Plus uses the request string. If a request has the same key as a cached response, NGINX Plus sends the cached response to the client. You can include various directives in the http {}, server {}, or location {} context to control which responses are cached. To change the request characteristics used in calculating the key, include the proxy_cache_key directive:
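As an illustration, the key below combines three variables from the earlier variables slide, so that users with different `user` cookies get separate cache entries. The particular combination is an assumption, not necessarily the one shown in the talk.

```nginx
# Default, if the directive is omitted:
#   proxy_cache_key $scheme$proxy_host$request_uri;
proxy_cache_key "$host$request_uri$cookie_user";
```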

- 29. Specifying Which Requests to Cache: proxy_cache_min_uses. To define the minimum number of times that a request with the same key must be made before the response is cached, include the proxy_cache_min_uses directive:
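For instance, with the setting below (the value 5 is an arbitrary choice for illustration), a response is stored only once the same key has been requested five times:

```nginx
proxy_cache_min_uses 5;
```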

- 30. Specifying Which Requests to Cache: proxy_cache_methods. To cache responses to requests with methods other than GET and HEAD, list them along with GET and HEAD as parameters to the proxy_cache_methods directive:
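A short sketch that additionally caches responses to POST requests (whether caching POST responses is appropriate depends on the application):

```nginx
proxy_cache_methods GET HEAD POST;
```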

- 31. Limiting or Disabling Caching: proxy_cache_valid. By default, responses remain in the cache indefinitely. They are removed only when the cache exceeds the maximum configured size, and then in order by length of time since they were last requested. You can set how long cached responses are considered valid, or even whether they are used at all, by including directives in the http {}, server {}, or location {} context. To limit how long cached responses with specific status codes are considered valid, include the proxy_cache_valid directive. In this example, responses with the code 200 or 302 are considered valid for 10 minutes, and responses with code 404 are valid for 1 minute. To define the validity time for responses with all status codes, specify any as the first parameter:
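The example described above would look roughly like this. The 5-minute value for `any` is an assumption, since the slide text does not give one.

```nginx
# 200 and 302 responses are valid for 10 minutes, 404 for 1 minute
proxy_cache_valid 200 302 10m;
proxy_cache_valid 404      1m;

# Alternatively, one validity time for every status code
proxy_cache_valid any 5m;
```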

- 32. Limiting or Disabling Caching: proxy_cache_bypass. To define conditions under which NGINX Plus does not send cached responses to clients, include the proxy_cache_bypass directive. Each parameter defines a condition and consists of a number of variables. If at least one parameter is not empty and does not equal "0" (zero), NGINX Plus does not look up the response in the cache, but instead forwards the request to the backend server immediately.
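A sketch using two variables from the earlier variables slide: a request carrying a non-empty, non-zero `nocache` cookie or `nocache` query argument skips the cache lookup. The choice of variables is illustrative.

```nginx
proxy_cache_bypass $cookie_nocache $arg_nocache;
```

With this in place, a request such as `/index.html?nocache=1` would be sent straight to the backend.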

- 33. Limiting or Disabling Caching: proxy_no_cache. To define conditions under which NGINX Plus does not cache a response at all, include the proxy_no_cache directive, defining parameters in the same way as for the proxy_cache_bypass directive.
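For example, the line below (again using variables from the earlier slide, chosen for illustration) prevents a response from being stored when the request carries a Pragma or Authorization header:

```nginx
proxy_no_cache $http_pragma $http_authorization;
```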

- 34. Purging Content From The Cache: configuring cache purge. NGINX Plus makes it possible to remove outdated cached files from the cache, so that clients are not served old and new versions of web pages at the same time. The cache is purged upon receiving a special "purge" request that contains either a custom HTTP header, or the HTTP PURGE method. Let's set up a configuration that identifies requests that use the HTTP PURGE method and deletes matching URLs.
  1. In the http {} context, create a new variable, for example, $purge_method, that depends on the $request_method variable.
  2. In the location {} block where caching is configured, include the proxy_cache_purge directive to specify a condition for cache-purge requests. In our example, it is the $purge_method configured in the previous step.
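The two steps can be sketched as below. proxy_cache_purge is an NGINX Plus directive; the zone name `mycache`, the cache path, the server name, and the backend address are assumptions for illustration.

```nginx
http {
    proxy_cache_path /data/nginx/cache keys_zone=mycache:10m;

    # Step 1: $purge_method is 1 for PURGE requests, 0 otherwise
    map $request_method $purge_method {
        PURGE   1;
        default 0;
    }

    server {
        listen      80;
        server_name www.example.com;

        location / {
            proxy_pass  http://localhost:8002;   # hypothetical backend
            proxy_cache mycache;

            # Step 2: treat the request as a purge when $purge_method is 1
            proxy_cache_purge $purge_method;
        }
    }
}
```

In practice you would usually also restrict which clients may send PURGE requests.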

- 35. Sending the Purge Command: proxy_cache_purge. When the proxy_cache_purge directive is configured, you need to send a special cache-purge request to purge the cache. You can issue purge requests using a range of tools, including the curl command as in this example: $ curl -X PURGE -D - "https://www.example.com/*" HTTP/1.1 204 No Content Server: nginx/1.15.0 Date: Sat, 19 May 2018 16:33:04 GMT Connection: keep-alive

- 36. Cache Purge Configuration Example

- 37. Dashboard: live activity monitoring

- 39. Advanced Cache Architectures

- 40. Configuration: splitting the cache across multiple hard drives. The two proxy_cache_path directives define two caches (my_cache_hdd1 and my_cache_hdd2) on two different hard drives. The split_clients configuration block specifies that the results from half the requests (50%) are cached in my_cache_hdd1 and the other half in my_cache_hdd2. The hash based on the $request_uri variable (the request URI) determines which cache is used for each request, the result being that requests for a given URI are always cached in the same cache. Please note this approach is not a replacement for a RAID hard drive setup. If there is a hard drive failure this could lead to unpredictable behavior on the system, including users seeing 500 response codes for requests that were directed to the failed hard drive. A proper RAID hard drive setup can handle hard drive failures.
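The configuration this slide describes was not preserved in the transcript. A sketch consistent with the description follows; the zone names come from the slide, while the mount paths, sizes, the `$my_cache` variable name, and the upstream name are assumptions.

```nginx
# One cache per physical drive (paths are hypothetical mount points)
proxy_cache_path /path/to/hdd1 levels=1:2 keys_zone=my_cache_hdd1:10m
                 max_size=10g inactive=60m use_temp_path=off;
proxy_cache_path /path/to/hdd2 levels=1:2 keys_zone=my_cache_hdd2:10m
                 max_size=10g inactive=60m use_temp_path=off;

# Hash the request URI; each half of the hash space selects one zone
split_clients $request_uri $my_cache {
    50%   "my_cache_hdd1";
    50%   "my_cache_hdd2";
}

server {
    listen 80;

    location / {
        proxy_cache $my_cache;            # zone chosen per request
        proxy_pass  http://my_upstream;   # hypothetical upstream group
    }
}
```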

- 41. Shared Caches with NGINX Plus Cache Clusters. Although sharing a filesystem is not a good approach for caching, there are still good reasons to cache content across multiple NGINX Plus servers, each with a corresponding technique:
  - If your primary goal is to create a very high-capacity cache, shard (partition) your cache across multiple servers.
  - If your primary goal is to achieve high availability while minimizing load on the origin servers, use a highly available shared cache.

- 42. Cache Sharding: distributing cache entries across multiple web cache servers. Sharding a cache is the process of distributing cache entries across multiple web cache servers. NGINX Plus cache sharding uses a consistent hashing algorithm to select the one cache server for each cache entry. The figures show what happens to a cache sharded across three servers (left figure) when either one server goes down (middle figure) or another server is added (right figure).

- 43. Cache Sharding, continued. The total cache capacity is the sum of the cache capacity of each server. You minimize trips to the origin server because only one server attempts to cache each resource (you don't have multiple independent copies of the same resource).
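On the load-balancing tier, consistent-hash sharding can be sketched with the hash directive and its consistent parameter. The three cache server host names below are hypothetical.

```nginx
upstream cache_servers {
    # The same URL always hashes to the same cache server; with
    # "consistent", adding or removing a server remaps only a
    # fraction of the keys
    hash $scheme$proxy_host$request_uri consistent;

    server red.cache.example.com;
    server green.cache.example.com;
    server blue.cache.example.com;
}

server {
    listen 80;

    location / {
        proxy_pass http://cache_servers;
    }
}
```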

- 44. Optimizing Your Sharded Cache Configuration: combining the load balancer and cache tiers. You can combine the LB and Cache tiers. In this configuration, two virtual servers run on each NGINX Plus instance. The load-balancing virtual server ("LB VS" in the figure) accepts requests from external clients and uses a consistent hash to distribute them across all NGINX Plus instances in the cluster, which are connected by an internal network. The caching virtual server ("Cache VS") on each NGINX Plus instance listens on its internal IP address for its share of requests, forwarding them to the origin server and caching the responses. This allows all NGINX Plus instances to act as caching servers, maximizing your cache capacity.
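A rough sketch of one instance in such a combined cluster is shown below. All addresses, the port 8080, the zone name, and the origin host name are assumptions; each instance would use its own internal IP in the Cache VS listen directive. The fragment goes in the http {} context.

```nginx
upstream cache_servers {
    hash $scheme$proxy_host$request_uri consistent;
    server 10.0.0.11:8080;   # internal address of instance 1 (this one)
    server 10.0.0.12:8080;   # internal address of instance 2
    server 10.0.0.13:8080;   # internal address of instance 3
}

# "LB VS": accepts external client requests and shards them
server {
    listen 80;

    location / {
        proxy_pass http://cache_servers;
    }
}

# "Cache VS": handles this instance's share on the internal network
proxy_cache_path /data/nginx/cache levels=1:2 keys_zone=shard:10m;

server {
    listen 10.0.0.11:8080;

    location / {
        proxy_cache shard;
        proxy_pass  http://origin.example.com;   # hypothetical origin
    }
}
```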

- 45. Configuring a First-Level "Hot" Cache. You can configure a first-level cache on the frontend LB tier for very hot content, using the large shared cache as a second-level cache. This can improve performance and reduce the impact on the origin server if a second-level cache tier fails, because content only needs to be refreshed as the first-tier cache content gradually expires.
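One way to sketch the first-level cache is a small, short-lived cache on the LB virtual server in front of the sharded tier. The zone name, sizes, and the 15-second validity are assumptions, and `cache_servers` stands for an upstream group of second-level cache servers defined elsewhere in the http {} context.

```nginx
# Small first-level cache for very hot content
proxy_cache_path /data/nginx/hot keys_zone=hot:10m max_size=1g;

server {
    listen 80;

    location / {
        proxy_cache       hot;
        proxy_cache_valid 200 15s;               # keep hot items briefly
        proxy_pass        http://cache_servers;  # second-level cache tier
    }
}
```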

- 46. High Availability Cache Cluster: creating a highly available cache cluster. If minimizing the number of requests to your origin servers at all costs is your primary goal, then the cache sharding solution is not the best option. Instead, a solution with careful configuration of primary and secondary NGINX Plus instances can meet your requirements:
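The slide does not spell out the configuration. One common arrangement, assumed here, is that the primary caches responses from the origin, while the secondary caches responses from the primary and falls back to the origin only if the primary is unavailable. Addresses, zone names, and the origin host name are hypothetical, and each fragment goes in the http {} context of its own instance.

On the primary instance:

```nginx
proxy_cache_path /data/nginx/cache keys_zone=mycache:10m;

server {
    listen 80;
    proxy_cache       mycache;
    proxy_cache_valid 200 15s;

    location / {
        proxy_pass http://origin.example.com;   # hypothetical origin
    }
}
```

On the secondary instance:

```nginx
proxy_cache_path /data/nginx/cache keys_zone=mycache:10m;

upstream primary_then_origin {
    server 192.168.56.11;               # primary cache (hypothetical IP)
    server origin.example.com backup;   # used only if the primary fails
}

server {
    listen 80;
    proxy_cache       mycache;
    proxy_cache_valid 200 15s;

    location / {
        proxy_pass http://primary_then_origin;
    }
}
```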

- 47. HA Cache: high availability caching cluster.

Editor's Notes

- #6: NGINX is one of the world's most widely deployed open source software (OSS) projects. There are more than 400 million websites and applications that run on NGINX.

- #7: NGINX was first released as open source back in 2003. NGINX as an entity was founded in 2011, and our first commercial product, NGINX Plus, was released in 2013. To date we have over 1,500 commercial customers globally. Many of you will be aware that NGINX was acquired by F5 Networks in May of last year, and many of you on this call will know NGINX as a web server and proxy server. Something we have noticed over the past few years is that as more and more features are added to NGINX, its use cases are expanding, particularly as our customers start to modernize their applications and move towards APIs, microservices and more agile development practices. In fact, over 40% of our commercial customers today use NGINX Plus as an API gateway.

- #8: NGINX can run in almost any environment, whether bare metal or cloud, and more recently we are seeing a huge adoption of NGINX in containerised environments, for use with technologies such as Docker and Kubernetes (K8s). I'm now going to hand over to Sean, who will dive straight into using NGINX as an API gateway. Thank you.