Openbar Leuven // Less is more. Working with less data in NLP by Yves Peirsman

- 1. Less is More. Working with Less Data in Natural Language Processing Yves Peirsman

- 2. Artificial Intelligence › Natural Language Processing: Machine translation, Sentiment analysis, Information retrieval, Information extraction, Text classification

- 3. We provide consultancy for companies that need guidance in the NLP domain and/or would like to develop their AI software in-house. We develop software and train custom NLP models for challenging or domain-specific applications.

- 4. Example projects ● Sentiment analysis from Tweets ● NER and text classification for personalization

- 5. Example projects ● Document parsing ● Text generation: {“type”: “dress”, “color”: “red”, “length”: “knee-length”, “sleeve-length”: “short”, “style”: “60s-style”} ⇒ “We are selling this knee-length dress. Its 60s-style look and red color will completely win you over. With its short sleeves, it is perfect for long summer evenings. With one click, this fantastic dress can be yours.”

- 6. Age of Big Data We live in the age of big data. ● Enormous amounts of texts are created every day: e-mails, tweets, text messages, blogs, research papers, news articles, legislation, books, etc., etc. ● This holds great promise for NLP: ○ We need NLP to uncover information in these texts. ○ We can use this data as training data

- 7. Age of Big Data

- 8. Transfer Learning The problem ● Machine Learning is data-hungry. ● Labelling training data is difficult, time-consuming and expensive. ● This limits the application of NLP in low-resource domains or languages. ⇒ How can we train accurate Machine Learning models with little data? The solution: Transfer Learning Re-use knowledge gained while solving one problem and apply it to a new problem

- 9. Pretrained task-specific models Benefit from pretrained models. ● For many tasks, pretrained models are available that were trained on data that differs from yours. ● These models can often be finetuned on your data. ● Example: spaCy’s generic Dutch NER finetuned on a limited set of financial news articles.

- 10. From task-specific to generic models Pretrained task-specific models ● are only useful for classic NLP tasks, ● are not available for custom tasks and smaller languages, ● still require lots of labelled training data.

- 11. From task-specific to generic models Pretrained task-specific models ● are only useful for classic NLP tasks, ● are not available for custom tasks and smaller languages, ● still require lots of labelled training data. Pretrained generic models ● are useful for virtually any NLP task, ● are easy to obtain for smaller languages, ● should require unlabelled data only.

- 12. From task-specific to generic models Solution: language models predict a word on the basis of its context. ● Texts are self-labelled for language modelling tasks. ● Language models need knowledge of word meaning, syntax, co-reference, etc. ● This generic knowledge can be reused for specific NLP tasks. Examples: ○ “This movie won her an Oscar for best actress.” ○ “The keys to the house are on the table.”
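
The idea that texts are "self-labelled" can be made concrete with a minimal sketch. It assumes whitespace tokenization and a `[MASK]` placeholder; the function name `masked_lm_examples` is hypothetical and is not taken from the talk or from any library. Every word in an unlabelled sentence becomes a training target whose "label" is the word itself.

```python
def masked_lm_examples(sentence, mask_token="[MASK]"):
    """Turn one unlabelled sentence into (masked context, target word) pairs."""
    tokens = sentence.split()  # assumption: naive whitespace tokenization
    examples = []
    for i, target in enumerate(tokens):
        # Hide the i-th word; the hidden word is the label to predict.
        context = tokens[:i] + [mask_token] + tokens[i + 1:]
        examples.append((" ".join(context), target))
    return examples


pairs = masked_lm_examples("The keys to the house are on the table.")
for context, target in pairs:
    print(f"{context!r} -> {target!r}")
```

To fill the gap in "The keys to the house [MASK] on the table." a model has to link the verb to the plural "keys" rather than to the nearer singular "house", which is the kind of syntactic knowledge the slide refers to.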

- 13. From task-specific to generic models Pre-trained language models can be finetuned for new NLP tasks. ULMFit, Howard and Ruder 2018

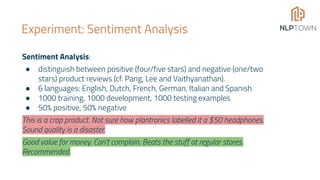

- 14. Experiment: Sentiment Analysis Sentiment Analysis: ● distinguish between positive (four/five stars) and negative (one/two stars) product reviews (cf. Pang, Lee and Vaithyanathan). ● 6 languages: English, Dutch, French, German, Italian and Spanish ● 1000 training, 1000 development, 1000 testing examples ● 50% positive, 50% negative Examples: ○ Negative: “This is a crap product. Not sure how plantronics labelled it a $50 headphones. Sound quality is a disaster.” ○ Positive: “Good value for money. Can't complain. Beats the stuff at regular stores. Recommended.”

- 15. Experiment: Models Baseline: spaCy ● One of the most popular open-source NLP libraries ● Pre-trained parsing, part-of-speech tagging, NER models ● Allows user to train text classification models based on a convolutional neural network State of the art: BERT ● Popular transfer learning model, developed by Google ● Pre-trained (mostly) by predicting masked words

- 16. First results ● spaCy: accuracy between 79.5% (Italian) and 83.4% (French) ● BERT: accuracy +8.4%, 45% error reduction
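
The two BERT figures can be related with a small worked calculation. This is a sketch under two assumptions that the slide does not state: that "+8.4%" means percentage points of accuracy, and that both figures are averages over the same six languages. The function names are hypothetical.

```python
def relative_error_reduction(acc_before, acc_after):
    """Fraction of the original errors that the new model no longer makes."""
    err_before = 1.0 - acc_before
    err_after = 1.0 - acc_after
    return (err_before - err_after) / err_before


def implied_baseline_accuracy(gain, reduction):
    """Baseline accuracy implied by an absolute gain and a relative error reduction."""
    # reduction = gain / baseline_error  =>  baseline_error = gain / reduction
    return 1.0 - gain / reduction


baseline = implied_baseline_accuracy(gain=0.084, reduction=0.45)
bert = baseline + 0.084
print(f"implied baseline accuracy: {baseline:.3f}")
print(f"implied BERT accuracy:     {bert:.3f}")
print(f"error reduction check:     {relative_error_reduction(baseline, bert):.2f}")
```

Under these assumptions the implied baseline accuracy is roughly 81%, which falls inside the 79.5% to 83.4% range reported for spaCy.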

- 17. Disadvantages Transfer-learning models typically have hundreds of millions of parameters. This makes them heavy, slow and challenging to deploy. (source: Huggingface)

- 18. Distillation Options for shrinking these models: ● quantization: reduce the precision of the weights in a model by encoding them in fewer bits ● pruning: remove certain parts of a model completely (connection weights, neurons or even full weight matrices) ● distillation: train a small model to mimic the behaviour of a larger one Experiment: can we use model distillation to train small spaCy models that rival BERT?
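
The three options can be illustrated on toy numbers. The sketch below is plain Python and rests on assumptions: uniform quantization, magnitude-based pruning, and a mean-squared-error distillation loss on logits are common choices, not necessarily the ones used in the talk, and all function names are hypothetical.

```python
def quantize(weights, bits=8):
    """Uniform quantization: snap each weight to one of 2**bits levels."""
    lo, hi = min(weights), max(weights)
    levels = 2 ** bits - 1
    scale = (hi - lo) / levels or 1.0  # fall back to 1.0 if all weights are equal
    codes = [round((w - lo) / scale) for w in weights]  # small integers to store
    return [lo + c * scale for c in codes]              # values seen at inference


def prune(weights, fraction=0.5):
    """Magnitude pruning: zero the smallest-magnitude weights (ties may prune more)."""
    k = int(len(weights) * fraction)
    if k == 0:
        return list(weights)
    cutoff = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= cutoff else w for w in weights]


def distillation_loss(student_logits, teacher_logits):
    """Mean squared error between student and teacher outputs."""
    pairs = list(zip(student_logits, teacher_logits))
    return sum((s - t) ** 2 for s, t in pairs) / len(pairs)


weights = [0.91, -0.02, 0.37, -0.68, 0.05, 0.001]
quantized = quantize(weights, bits=2)   # at most 4 distinct values remain
pruned = prune(weights, fraction=0.5)   # the 3 smallest weights become 0.0
loss = distillation_loss([2.0, -1.0], [2.5, -1.5])
print(quantized, pruned, loss)
```

Quantization and pruning shrink an existing model, whereas distillation trains a separate, smaller model: the student never sees the gold labels in this loss, only the teacher's outputs.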

- 19. Augmented data Challenge: distillation requires more than 1000 labelled examples Solution: Augmented data (Tang et al. 2019) ● mask random words in the training data ○ I like this book ⇒ I [MASK] this book ● replace random words in the training data by another word with the same part of speech. ○ I like this book ⇒ I like this screen ● sample a random n-gram of length 1 to 5 from the training example ● sample a random sentence from the training example Use BERT’s output for 60,000 such examples as spaCy’s training input.
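
The first three augmentation operations can be sketched as follows. This is a minimal illustration, not the implementation used in the experiment: the `SAME_POS` lexicon and the hand-written part-of-speech tags are hypothetical stand-ins for a real tagger and vocabulary, and sentence sampling is omitted because the example is a single sentence.

```python
import random

# Hypothetical toy lexicon: replacement candidates grouped by part of speech.
SAME_POS = {
    "NOUN": ["book", "screen", "phone"],
    "VERB": ["like", "love", "hate"],
}


def mask_word(tokens, rng):
    """Replace one randomly chosen word with [MASK]."""
    i = rng.randrange(len(tokens))
    return tokens[:i] + ["[MASK]"] + tokens[i + 1:]


def replace_same_pos(tokens, tags, rng):
    """Swap one word for a different word with the same part-of-speech tag."""
    candidates = [i for i, tag in enumerate(tags) if tag in SAME_POS]
    if not candidates:
        return list(tokens)
    i = rng.choice(candidates)
    alternatives = [w for w in SAME_POS[tags[i]] if w != tokens[i]]
    return tokens[:i] + [rng.choice(alternatives)] + tokens[i + 1:]


def sample_ngram(tokens, rng, max_len=5):
    """Return a random contiguous n-gram of length 1 to max_len."""
    n = rng.randint(1, min(max_len, len(tokens)))
    start = rng.randrange(len(tokens) - n + 1)
    return tokens[start:start + n]


rng = random.Random(0)  # fixed seed so runs are repeatable
tokens = "I like this book".split()
tags = ["PRON", "VERB", "DET", "NOUN"]  # assumption: tags supplied by hand

masked = mask_word(tokens, rng)
replaced = replace_same_pos(tokens, tags, rng)
ngram = sample_ngram(tokens, rng)
print(masked, replaced, ngram)
```

The augmented examples carry no gold labels. In the setup described on the slide, BERT's output for each of them serves as the training signal for the smaller model.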

- 20. Distillation

- 21. spaCy distilled The distilled spaCy models perform almost as well as the BERT models: compared with the original spaCy models, accuracy improves by 7.3% and the error rate drops by 39%.

- 22. Conclusions ● Transfer learning allows us to train better NLP models with less data. ● Many transfer-learning models are huge and slow. ● For many tasks you don't need hundreds of millions of parameters to achieve high accuracies. ● Approaches like model distillation allow us to train simpler models that rival more complex ones.
