OPERATING SYSTEM - INTRODUCTORY COURSE PPT

- 2. WHAT IS AN OPERATING SYSTEM? • An OS is a program that acts as an intermediary between the user of a computer and the computer hardware. • The major cost of general-purpose computing is software. • The OS simplifies and manages the complexity of running application programs efficiently. • It also provides a basis for application programs. • It is software that carries out all the fundamental tasks, such as file management, memory management, process management, handling input and output, and controlling peripheral devices like disk drives and printers.

- 3. Operating System Overview • A computer system can be divided into four components: the hardware, the operating system, the application programs, and the users.

- 4. Computer System Components • Hardware • Provides basic computing resources (CPU, memory, I/O devices). • Operating System • Controls and coordinates the use of hardware among application programs. • Application Programs • Solve computing problems of users (compilers, database systems, video games, business programs such as banking software). • Users • People, machines, other computers

- 5. Four Components of a Computer System

- 6. OS From Two Viewpoints • User View (Ease of use, performance, resource utilization) • PC • Mainframe/Minicomputer terminals • Networks of Workstations and Servers • Handheld computers • System View • OS as a resource allocator • OS as a control program

- 7. Goals of an Operating System • Simplify the execution of user programs and make solving user problems easier. • Use computer hardware efficiently. • Allow sharing of hardware and software resources. • Make application software portable and versatile. • Provide isolation, security and protection among user programs. • Improve overall system reliability • error confinement, fault tolerance, reconfiguration

- 8. Why should I study Operating Systems? • Need to understand the interaction between hardware and applications • New applications, new hardware. • Inherent aspect of society today • Need to understand basic principles in the design of computer systems • Efficient resource management, security, flexibility • Increasing need for specialized operating systems • e.g. embedded operating systems for devices - cell phones, sensors and controllers • real-time operating systems - aircraft control, multimedia services

- 9. The Basic Elements of an OS • User Interface – The part of the OS that you interact with. • Command Interpreter: allows users to directly enter commands that are to be performed by the operating system. • Some operating systems include the command interpreter in the kernel. Others, such as Windows XP and UNIX, treat the command interpreter as a special program that is running when a job is initiated or when a user first logs on (on interactive systems). • Many of the commands given at this level manipulate files: create, delete, list, print, copy, execute, and so on. The MS-DOS and UNIX shells operate in this way. • Graphical User Interfaces • A GUI provides a mouse-based window-and-menu system as an interface. A GUI provides a desktop metaphor where the mouse is moved to position its pointer on images, or icons, on the screen (the desktop) that represent programs, files, directories, and system functions.

- 10. Basic Elements of an Operating System • Kernel – The core of the OS. It interacts with the BIOS (at one end) and the UI (at the other end). • The kernel is the central module of an OS. It is the part of the operating system that loads first (into a protected area of memory to prevent it from being overwritten by programs or other parts of the operating system), and it remains in main memory. • It provides all the essential services required by other parts of the operating system and applications. • Typically, the kernel is responsible for memory management, process/task management, and disk management, and it connects the system hardware to the application software. • File Management System – Organizes and manages files.

- 11. Types of OS • Operating systems have existed since the very first computer generation, and they keep evolving over time. • Batch operating system • The users of a batch operating system do not interact with the computer directly. • Each user prepares a job on an off-line device such as punch cards and submits it to the computer operator. • To speed up processing, jobs with similar needs are batched together and run as a group. • The problems with batch systems are as follows: • Lack of interaction between the user and the job. • The CPU is often idle, because mechanical I/O devices are much slower than the CPU. • Difficult to provide the desired priority.

- 12. Time-sharing operating systems • Time-sharing is a technique that enables many people, located at various terminals, to use a particular computer system at the same time. • Time-sharing, or multitasking, is a logical extension of multiprogramming. Sharing the processor's time among multiple users simultaneously is termed time-sharing. • The operating system uses CPU scheduling and multiprogramming to provide each user with a small portion of time. Computer systems that were designed primarily as batch systems have been modified into time-sharing systems. • The CPU executes multiple jobs by switching between them, and the switches occur so frequently that each user receives an immediate response. • Advantages of time-sharing operating systems are as follows: • Provides the advantage of quick response. • Avoids duplication of software. • Reduces CPU idle time. • Disadvantages of time-sharing operating systems are as follows: • Problem of reliability. • Question of security and integrity of user programs and data. • Problem of data communication.

- 13. Distributed Operating System • Distributed systems use multiple central processors to serve multiple real-time applications and multiple users. Data processing jobs are distributed among the processors accordingly. • The processors communicate with one another through various communication lines (such as high-speed buses or telephone lines). • Processors in a distributed system may vary in size and function. These processors are referred to as sites, nodes, computers, and so on. • The advantages of distributed systems are as follows: • With a resource-sharing facility, a user at one site may be able to use the resources available at another. • Speeds up the exchange of data with one another, e.g. via electronic mail. • If one site fails in a distributed system, the remaining sites can potentially continue operating. • Better service to the customers. • Reduction of the load on the host computer. • Reduction of delays in data processing.

- 14. Network Operating System • A network operating system runs on a server and provides the server with the capability to manage data, users, groups, security, applications, and other networking functions. • The primary purpose of a network operating system is to allow shared file and printer access among multiple computers in a network, typically a local area network (LAN), a private network, or other networks. Examples of network operating systems include Microsoft Windows Server 2003, Microsoft Windows Server 2008, UNIX, Linux, Mac OS X, Novell NetWare, and BSD. • The advantages of network operating systems are as follows: • Centralized servers are highly stable. • Security is server managed. • Upgrades to new technologies and hardware can be easily integrated into the system. • Remote access to servers is possible from different locations and types of systems. • The disadvantages of network operating systems are as follows: • High cost of buying and running a server. • Dependency on a central location for most operations. • Regular maintenance and updates are required.

- 16. Computer System Organization • Computer-system operation • One or more CPUs, device controllers connect through common bus providing access to shared memory • Bus Architecture: All devices in a computer are connected through a complicated bus system. • Concurrent execution of CPUs and devices competing for memory cycles

- 17. Main Components of a Computer System • Processor (CPU) • Runs program instructions • Main Memory • Storage for running programs and current data • Secondary Storage • Long-term program & data storage (hard disk, CD, etc.) • Input Devices • Communication from the user to the computer (e.g. keyboard, mouse) • Output Devices • Communication from the computer to the user (e.g. monitor, printer, speakers)

- 18. Main Memory • Each memory cell stores a set number of bits (some computers use 8 bits/one byte, others use words), e.g. the byte 10011010. • Each memory cell has a numeric address, which uniquely identifies its location (e.g. consecutive addresses 5248 to 5256). • A word is stored in consecutive memory bytes.
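
To make byte addressing concrete, here is a minimal sketch that models a tiny byte-addressable memory as a Python `bytearray` and stores one word in consecutive cells. The base address 5248 and the byte 10011010 come from the slide; the 4-byte word size, the little-endian byte order, and the helper names `store_word`/`load_word` are illustrative assumptions, since real machines differ.

```python
import struct

BASE_ADDRESS = 5248        # first address in the slide's figure (illustrative)
memory = bytearray(9)      # nine one-byte cells: addresses 5248 to 5256

def store_word(address: int, value: int) -> None:
    """Store a 32-bit word in 4 consecutive bytes (little-endian assumed)."""
    offset = address - BASE_ADDRESS
    memory[offset:offset + 4] = struct.pack("<I", value)

def load_word(address: int) -> int:
    """Read the 32-bit word back from the same 4 consecutive bytes."""
    offset = address - BASE_ADDRESS
    return struct.unpack("<I", bytes(memory[offset:offset + 4]))[0]

# The lowest byte of this word is 0b10011010, the bit pattern on the slide.
store_word(5250, 0x0403029A)
for address in range(5250, 5254):
    print(address, format(memory[address - BASE_ADDRESS], "08b"))
print(hex(load_word(5250)))   # 0x403029a
```

The loop shows the one word occupying addresses 5250 to 5253, one byte per address.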

- 19. The Internal Memory of the Computer • RAM (Random Access Memory) • Located on the motherboard. • Used for temporary storage of data while the PC is running. • Supports both reading and storing (writing) information. • ROM (Read Only Memory) • Used for permanent storage of data that does not require user intervention (programs to start and stop the computer, device testing, control of the processor, display, keyboard, printer, and external memory). • Intended for reading information only. • Cache memory (buffer memory unit) • Internal cache memory is located inside the processor. • External cache memory is located on the motherboard. • It is used to increase the performance of your computer by matching the operation of devices with different speeds, e.g. in the exchange of data between the processor and memory.

- 20. Device Controller • Device drivers are software modules that are embedded in an OS to handle a particular device. The operating system relies on device drivers to handle all I/O devices. • Every I/O device has a controller that acts as the middle-man between the OS (through the device driver) and the device. • From a hardware point of view, the controller plays an important role in operating the device by acting as a bridge between the hardware device and the operating system.

- 21. Central Processing Unit (CPU) • Arithmetic/Logic Unit (ALU) • Performs calculations and decisions • Registers • Small, fast storage areas for instructions and data • Control Unit • Coordinates processing steps

- 22. Program Instructions • A program instruction is a sequence of bits. • Instructions simply tell the computer’s CPU what to do. • A simple instruction format may consist of an operation code (op code) and an address or operands: [ Op Code | Operands / Address ]. • Program instructions are stored in secondary storage (hard disks, CD-ROM, DVD). • To process data, the CPU requires a working area • It uses main memory • Also called RAM (random access memory), primary storage, and internal memory.

- 23. Instructions • The operation code specifies the operation the computer is to carry out (add, compare, etc) • The operand/address area can store an operand or an address • An operand is a specific value or a register number • An address allows the instruction to refer to a location in main memory • The CPU runs each instruction in the program, starting with instruction 0, using the fetch-decode-execute cycle.
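
The fetch-decode-execute cycle can be sketched with a toy CPU. Everything machine-specific here is a hypothetical simplification for illustration: the four op codes (LOAD, ADD, STORE, HALT), the single accumulator register, and encoding each instruction as an (op code, address) tuple instead of a bit pattern.

```python
# Hypothetical op codes for a toy machine with one accumulator register.
LOAD, ADD, STORE, HALT = 0, 1, 2, 3

def run(program, data):
    """Execute (opcode, address) instructions with a fetch-decode-execute loop."""
    memory = dict(data)          # data memory: address -> value
    acc = 0                      # accumulator register
    pc = 0                       # program counter: starts at instruction 0
    while True:
        opcode, address = program[pc]   # fetch the instruction at pc
        pc += 1                         # point at the next instruction
        if opcode == LOAD:              # decode the op code, then execute it
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == STORE:
            memory[address] = acc
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown op code {opcode}")

# Add the values at addresses 100 and 101 and store the sum at 102.
program = [(LOAD, 100), (ADD, 101), (STORE, 102), (HALT, 0)]
result = run(program, {100: 7, 101: 35})
print(result[102])   # 42
```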

- 24. Operating-System Operations Operating System Structures

- 25. A View of Operating System Services

- 26. OS Services • Program execution – OS capability to load a program into memory, run it, end execution, either normally or abnormally (indicating error). • I/O operations – since user programs cannot execute I/O operations directly, the OS must provide some means to perform I/O, which may involve a file or I/O device. • File systems – program capability to read, write, create, and delete files and directories. • Communication – Processes may exchange information, on the same computer or between computers over a network – Implemented via shared memory or message passing. • Error detection – ensure correct computing by detecting errors in the CPU and memory hardware, in I/O devices, or in user programs

- 27. OS Services • Resource allocation – When multiple users or multiple jobs are running concurrently, resources must be allocated to each of them. • Accounting – To keep track of which users use how much and what kinds of computer resources. • Protection and security – The owners of information stored in a multi-user or networked computer system may want to control use of that information, while concurrent processes should not interfere with each other – If a system is to be protected and secure, precautions must be instituted throughout it; a chain is only as strong as its weakest link.

- 28. Program execution • Operating systems handle many kinds of activities, from user programs to system programs such as the printer spooler, name servers, file servers, etc. • Each of these activities is encapsulated as a PROCESS. • The operating system's most important job is managing the CPU and process execution. • The following are the major activities of an operating system with respect to program management: • Loads a program into memory. • Executes the program. • Handles the program's execution. • Provides a mechanism for process synchronization. • Provides a mechanism for process communication. • Provides a mechanism for deadlock handling.

- 29. I/O Operation • An I/O subsystem comprises I/O devices and their corresponding driver software. Drivers hide the peculiarities of specific hardware devices from the users. • An operating system manages the communication between the user and the device drivers. • An I/O operation means a read or write operation on a file or on a specific I/O device. • The operating system provides access to the required I/O device when required.
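
A user program does not drive a disk controller itself; it asks the OS to do the I/O. As a minimal sketch, Python's `os.open`/`os.write`/`os.read`/`os.close` family are thin wrappers over the corresponding OS system calls on file descriptors. The function name `write_then_read` and the use of a temporary file are illustrative choices, not part of the slide.

```python
import os
import tempfile

def write_then_read(text: str) -> str:
    """Ask the OS to perform file I/O on our behalf via low-level calls."""
    payload = text.encode()
    fd, path = tempfile.mkstemp()        # OS creates a file and returns a descriptor
    try:
        os.write(fd, payload)            # write request handed to the OS
        os.lseek(fd, 0, os.SEEK_SET)     # rewind to the start of the file
        data = os.read(fd, len(payload)) # read request handed to the OS
    finally:
        os.close(fd)                     # release the descriptor
        os.remove(path)                  # delete the temporary file
    return data.decode()

print(write_then_read("hello, device"))
```

The program only ever sees an integer descriptor; which driver and controller actually serve the request is hidden by the OS.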

- 30. File System Manipulation • A file system is normally organized into directories for easy navigation and usage. • The file-handling portion of the operating system also allows users to create and delete files by a specific name along with an extension, search for a given file, and/or list file information. • The following are the major activities of an operating system with respect to file management: • A program needs to read a file or write a file. • The operating system gives the program permission to operate on the file. • Permissions vary: read-only, read-write, denied, and so on. • The operating system provides an interface to the user to create/delete files. • The operating system provides an interface to the user to create/delete directories. • The operating system provides an interface to create a backup of the file system.
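
The file-management activities above (create and delete directories and files, set a read-only permission, list contents) can be sketched with Python's standard `pathlib`, `os`, and `stat` modules, which request these operations from the OS. The directory and file names are hypothetical, and the permission check reads the mode bits rather than testing access, because a superuser can write even to read-only files.

```python
import os
import stat
import tempfile
from pathlib import Path

def demo_file_operations():
    base = Path(tempfile.mkdtemp())          # OS creates a scratch directory
    folder = base / "reports"                # hypothetical directory name
    folder.mkdir()                           # create a directory
    report = folder / "summary.txt"          # hypothetical file name
    report.write_text("quarterly totals\n")  # create and write a file
    os.chmod(report, stat.S_IRUSR)           # permission: owner read-only
    read_only = not (report.stat().st_mode & stat.S_IWUSR)
    listing = sorted(p.name for p in folder.iterdir())   # list directory contents
    os.chmod(report, stat.S_IRUSR | stat.S_IWUSR)        # restore read-write
    report.unlink()                          # delete the file
    folder.rmdir()                           # delete the (now empty) directory
    base.rmdir()
    return read_only, listing

print(demo_file_operations())
```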

- 31. Error Detection • The operating system also deals with hardware problems. • To cope with hardware problems, the operating system constantly monitors the system to detect errors and fix them (if found). • Errors can occur anytime and anywhere: in the CPU, in I/O devices, or in the memory hardware. • The following are the major activities of an operating system with respect to error handling: • The OS constantly checks for possible errors. • The OS takes appropriate action to ensure correct and consistent computing.

- 32. Process Management • A program does nothing unless its instructions are executed by a CPU. • A program in execution is called a process (it can also be considered a job). • The operating system is responsible for the following activities in connection with process management: • Scheduling processes and threads on the CPUs • Creating and deleting both user and system processes • Suspending and resuming processes • Providing mechanisms for process synchronization • Providing mechanisms for process communication

- 33. Resource Allocation Management • In a multitasking environment, when multiple jobs/processes are running at a time, the OS is responsible for allocating the required resources (CPU, main memory, tape drives, secondary storage, etc.) to each process for better utilization. • The following are the major activities of an operating system with respect to resource management: • The OS manages all kinds of resources using schedulers. • CPU scheduling algorithms are used for better utilization of the CPU.

- 34. Assignment • What is a BIOS? • Briefly discuss the functions of a computer BIOS.

- 36. Process • A process is an instance of a program/instruction in execution. • A process is defined as an entity that represents the basic unit of work to be implemented in the system. • A process is an 'active' entity, as opposed to a program, which is considered a 'passive' entity. Attributes held by a process include hardware state, memory, CPU, etc. • A process in memory is divided into four sections: • Heap • Stack • Data • Text

- 37. Program vs. Process • A program is a piece of code, which may be a single line or millions of lines of instructions to the computer. • A program and a process are related terms, but the major difference is that a program is a group of instructions to carry out a specified task, whereas a process is a program in execution. • Program and process are related but dissimilar. A program is just a file stored on disk and can be seen as the stage before the process. A process, on the contrary, is an instance of a program in execution.

- 39. Process Life Cycle • Processes in the operating system can be in any of the following states: • New: The process is being created. • Ready: The process is waiting to be assigned to a processor. • Running: Instructions are being executed. • Waiting: The process is waiting for some event to occur (such as an I/O completion or reception of a signal). • Terminated: The process has finished execution.
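
The life cycle can be sketched as a small state machine. The five states are the ones listed above; the set of legal transitions and the event labels ("admitted", "scheduler dispatch", and so on) follow the common textbook five-state model and are an assumption here, since the slide does not list them.

```python
from enum import Enum

class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"

# Legal transitions in the classic five-state model, with the triggering event.
TRANSITIONS = {
    (State.NEW, State.READY): "admitted",
    (State.READY, State.RUNNING): "scheduler dispatch",
    (State.RUNNING, State.READY): "interrupt",
    (State.RUNNING, State.WAITING): "I/O or event wait",
    (State.WAITING, State.READY): "I/O or event completion",
    (State.RUNNING, State.TERMINATED): "exit",
}

def move(current: State, target: State) -> State:
    """Return the new state, rejecting transitions the model does not allow."""
    if (current, target) not in TRANSITIONS:
        raise ValueError(f"illegal transition {current.name} -> {target.name}")
    return target

# One possible life: run, block on I/O, become ready again, run, exit.
state = State.NEW
for nxt in (State.READY, State.RUNNING, State.WAITING,
            State.READY, State.RUNNING, State.TERMINATED):
    print(f"{state.name} -> {nxt.name}: {TRANSITIONS[(state, nxt)]}")
    state = move(state, nxt)
```

Note that a waiting process cannot go straight back to running; it must first return to the ready queue and be dispatched again.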

- 40. Process Control Block (PCB) • The PCB is a central store of information that allows the OS to locate all the key information about a process. • It is a data structure that is maintained by the operating system for every process. • Every process is represented in the operating system by a process control block, which is also called a task control block. • The process control block is important in a multiprogramming environment because it captures the information pertaining to each of the processes running simultaneously.

- 41. Components of PCB • Process state: the current state of the process, i.e., whether it is ready, running, waiting, etc. • Program counter: the address of the next instruction that should be executed for that process. • CPU registers: the values of the CPU registers, which must be saved for the process so that it can later resume execution. • CPU-scheduling information: priority information and pointers to scheduling queues, used to set the priority of different processes. • Memory-management information: page tables, memory limits, and segment tables. • Accounting information: the amount of CPU and real time used, job or process numbers, etc. • NB: In a computer system various processes run simultaneously, and each process has its unique ID (PID). This ID helps the system in scheduling the processes, and it is stored in the process control block.
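
As a rough sketch, a PCB can be modeled as a record holding the components listed above, with the OS keeping one record per PID in a process table. The class names `PCB` and `ProcessTable` and all field names are hypothetical; real kernels (for example Linux's task structure) hold far more.

```python
from dataclasses import dataclass, field

@dataclass
class PCB:
    """A simplified process control block; field names are illustrative."""
    pid: int                                       # unique process ID
    state: str = "new"                             # new/ready/running/waiting/terminated
    program_counter: int = 0                       # address of the next instruction
    registers: dict = field(default_factory=dict)  # saved CPU register values
    priority: int = 0                              # CPU-scheduling information
    memory_limits: tuple = (0, 0)                  # memory-management info (base, limit)
    cpu_time_used: float = 0.0                     # accounting information

class ProcessTable:
    """The OS keeps one PCB per process, looked up by PID."""
    def __init__(self):
        self._next_pid = 1
        self._table = {}

    def create(self, priority: int = 0) -> PCB:
        pcb = PCB(pid=self._next_pid, priority=priority)
        self._table[pcb.pid] = pcb
        self._next_pid += 1          # PIDs are unique within this table
        return pcb

    def lookup(self, pid: int) -> PCB:
        return self._table[pid]

table = ProcessTable()
a = table.create(priority=2)
b = table.create()
a.state = "ready"
a.registers["acc"] = 42              # e.g. saved during a context switch
print(table.lookup(1))
```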

- 42. Process Scheduling • Process scheduling is the act of determining which process in the ready state should be moved to the running state. • The main goal of the process scheduling system is to keep the CPU busy all the time and to deliver minimum response time for all programs. • To achieve this, the scheduler must apply appropriate rules for swapping processes in and out of the CPU. • Process Scheduling Queues (next slide) • Scheduling is categorized into two types: • Pre-emptive: The CPU can be taken away from a running process. CPU time is divided into small time slices, which are allocated to processes according to some rules, so a running process may be interrupted before it finishes. • Non-pre-emptive: Once a process has the CPU, it keeps it until it finishes (or blocks, e.g. waiting for I/O); only then does the CPU become free, so jobs are executed one by one.

- 43. Scheduling Algorithms: We have various techniques/algorithms • First Come First Serve (FCFS): As the name suggests, the process that arrives first is executed first by the CPU. • Processes run in their order of arrival: after one process finishes, the scheduler automatically picks the next process in the queue. FCFS is non-pre-emptive. • Its implementation is based on a FIFO (First-In-First-Out) queue. • Shortest Job First (SJF): Processes are arranged by how much CPU time each requires to execute. • The scheduler prepares a queue in which the processes are ordered by the number of time units they require, and the CPU executes the shortest job first. • SJF can be non-pre-emptive or pre-emptive (the pre-emptive version is known as Shortest Remaining Time First).
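
A quick way to compare FCFS and SJF is to compute the average waiting time for the same workload. This sketch assumes all jobs arrive at time 0 and uses the non-pre-emptive variants; the job names and burst times are illustrative numbers, not measurements.

```python
def average_waiting_time(order):
    """Average waiting time when jobs run back-to-back in the given order."""
    waiting, elapsed = [], 0
    for _, burst in order:
        waiting.append(elapsed)   # each job waits for every job ahead of it
        elapsed += burst
    return sum(waiting) / len(order)

def fcfs(jobs):
    """First Come First Serve: keep arrival order, like a FIFO queue."""
    return list(jobs)

def sjf(jobs):
    """Non-pre-emptive Shortest Job First: shortest CPU burst runs first."""
    return sorted(jobs, key=lambda job: job[1])

# (name, CPU burst in time units), listed in arrival order; all arrive at time 0.
jobs = [("P1", 24), ("P2", 3), ("P3", 3)]
print("FCFS:", average_waiting_time(fcfs(jobs)))   # 17.0
print("SJF: ", average_waiting_time(sjf(jobs)))    # 3.0
```

With FCFS the two short jobs wait behind the long one (waits 0, 24, 27); SJF runs them first (waits 0, 3, 6), which is why SJF minimizes average waiting time when burst lengths are known.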

- 44. Cont. • Priority Scheduling: Each process is given a priority, and the scheduler examines the priorities to decide which process gets the CPU first. • Processes are arranged by priority to determine the order in which they are executed by the CPU. • Round Robin: CPU time is divided into equal parts, each known as a time quantum, which are assigned to processes in turn; a process that does not finish within its quantum is moved to the back of the ready queue. • Multilevel Queue Scheduling: Processes are divided into categories, each with its own queue, and CPU time is divided among the categories. For example, processes that run in the foreground (interactive, on the screen) have a higher priority than processes that run in the background.
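
Round Robin can be sketched with a FIFO ready queue: each process runs for at most one quantum and, if unfinished, rejoins the back of the queue. The sketch assumes all jobs arrive at time 0 and ignores context-switch overhead; the workload and the quantum of 4 are illustrative.

```python
from collections import deque

def round_robin(jobs, quantum):
    """Return (timeline, completion times) for round-robin scheduling.

    jobs: list of (name, burst) pairs, all assumed to arrive at time 0.
    """
    ready = deque(jobs)                # ready queue in arrival order
    time, timeline, finished = 0, [], {}
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # run for at most one time quantum
        timeline.append((name, time, time + run))
        time += run
        if remaining > run:
            ready.append((name, remaining - run))  # pre-empted: back of the queue
        else:
            finished[name] = time                  # job completed
    return timeline, finished

timeline, finished = round_robin([("P1", 24), ("P2", 3), ("P3", 3)], quantum=4)
for name, start, end in timeline:
    print(f"{name}: {start}-{end}")
print(finished)
```

Compared with FCFS on the same workload, the short jobs P2 and P3 finish at times 7 and 10 instead of 27 and 30, at the cost of P1 being interrupted several times.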

- 45. Assignment • Compare any two process scheduling techniques/algorithms.

- 46. Process Deadlock • A deadlock is a situation in which a set of blocked processes each hold a resource and wait to acquire a resource held by another process in the set. • In this situation, none of the processes can execute, since the resource each one needs is held by some other process that is also waiting for another resource to be released.

- 47. (Process) Starvation Vs. Deadlock

- 48. Conditions for Deadlocks • Mutual Exclusion • A resource can only be shared in a mutually exclusive manner; that is, two processes cannot use the same resource at the same time. • From the resource point of view, mutual exclusion means that a resource can never be used by more than one process simultaneously. • Hold and Wait • A process waits for some resources while holding another resource at the same time. • No Pre-emption • A resource cannot be forcibly taken away from the process holding it; the process releases it only voluntarily, once it has finished with it. • Circular Wait • All the processes must be waiting for the resources in a cyclic manner, so that the last process is waiting for the resource held by the first process.
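
Circular wait can be checked mechanically with a wait-for graph, where an edge P -> Q means "P is waiting for a resource that Q holds". The sketch below finds a cycle with depth-first search. It assumes each resource has a single instance, in which case a cycle in this graph means the processes on it are deadlocked; the graph encoding and the name `find_cycle` are illustrative.

```python
def find_cycle(wait_for):
    """Return the processes forming a cycle in a wait-for graph, or None.

    wait_for maps each process to the processes it is waiting on.
    """
    WHITE, GREY, BLACK = 0, 1, 2        # unvisited / on current path / done
    color = {p: WHITE for p in wait_for}
    path = []

    def visit(p):
        color[p] = GREY
        path.append(p)
        for q in wait_for.get(p, ()):
            if color.get(q, WHITE) == GREY:       # back edge: circular wait
                return path[path.index(q):]
            if color.get(q, WHITE) == WHITE:
                cycle = visit(q)
                if cycle:
                    return cycle
        color[p] = BLACK                          # no cycle through p
        path.pop()
        return None

    for p in list(wait_for):
        if color[p] == WHITE:
            cycle = visit(p)
            if cycle:
                return cycle
    return None

deadlocked = {"P1": ["P2"], "P2": ["P3"], "P3": ["P1"]}
print(find_cycle(deadlocked))                                # ['P1', 'P2', 'P3']
print(find_cycle({"P1": ["P2"], "P2": ["P3"], "P3": []}))    # None
```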

- 49. Handling of Deadlocks • Deadlock Ignorance • Deadlock ignorance is the most widely used approach among all the mechanisms; in this approach, the operating system assumes that deadlock never occurs. • Operating systems like Windows and Linux mainly focus on performance, and performance would decrease if the system always had to run a deadlock-handling mechanism. • Deadlock Prevention • Deadlocks can be prevented by ruling out at least one of the four conditions, because all four conditions are required simultaneously to cause deadlock. • If one of the four conditions is violated at all times, deadlock can never occur in the system.
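
One common way to prevent deadlock is to rule out circular wait by imposing a global order on resources and making every thread acquire them in that order. In this sketch the two "resources" are hypothetical and are represented by Python `threading.Lock` objects; the two threads ask for the locks in opposite orders, but `acquire_in_order` always takes them in the global order, so no cycle of waiting threads can form.

```python
import threading

# Hypothetical shared resources, each protected by a lock.
printer = threading.Lock()
scanner = threading.Lock()
LOCK_ORDER = [printer, scanner]      # global order: printer before scanner

def acquire_in_order(*locks):
    """Acquire locks in the global order, which rules out circular wait."""
    ordered = sorted(locks, key=LOCK_ORDER.index)
    for lock in ordered:
        lock.acquire()
    return ordered

def release_all(ordered):
    for lock in reversed(ordered):
        lock.release()

log = []

def job(name, *wanted):
    for _ in range(1000):
        held = acquire_in_order(*wanted)
        try:
            log.append(name)         # use both resources
        finally:
            release_all(held)

# The threads request the locks in opposite orders; ordering prevents deadlock.
t1 = threading.Thread(target=job, args=("A", printer, scanner))
t2 = threading.Thread(target=job, args=("B", scanner, printer))
t1.start()
t2.start()
t1.join()
t2.join()
print(len(log))   # 2000
```

If each thread instead locked its own first-listed resource and then waited for the other, thread A could hold the printer while B holds the scanner, which is exactly the circular wait described on slide 48.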

- 50. Cont. • Deadlock Avoidance • In deadlock avoidance, the operating system checks whether the system is in a safe state or an unsafe state at every step it performs. • Processes continue as long as the system stays in a safe state. If a step would move the system to an unsafe state, the OS does not take it (it backs off that step). • The OS reviews each allocation so that the allocation doesn't cause deadlock in the system. • Deadlock Detection and Recovery • This approach lets processes fall into deadlock and then periodically checks whether a deadlock has occurred in the system. • If one has occurred, the OS applies recovery methods (for example, terminating processes or pre-empting resources) to get rid of the deadlock.
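
The safe-state check used in deadlock avoidance is classically done with the safety part of the Banker's algorithm, which is not named on the slide but is the standard technique. A state is safe if the processes can finish one after another, each using the currently free resources plus whatever earlier finishers released. The matrices below are illustrative numbers.

```python
def is_safe(available, allocation, maximum):
    """Banker's safety check: return (safe?, safe sequence of process indices).

    available:  free instances of each resource type
    allocation: allocation[i][j] = instances of resource j held by process i
    maximum:    maximum[i][j] = maximum demand of process i for resource j
    """
    n = len(allocation)
    need = [[m - a for m, a in zip(maximum[i], allocation[i])] for i in range(n)]
    work = list(available)
    finished = [False] * n
    sequence = []
    progress = True
    while progress:
        progress = False
        for i in range(n):
            if not finished[i] and all(nd <= w for nd, w in zip(need[i], work)):
                # Process i can run to completion, then return its resources.
                work = [w + a for w, a in zip(work, allocation[i])]
                finished[i] = True
                sequence.append(i)
                progress = True
    return all(finished), sequence

allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]
maximum = [[7, 5, 3], [3, 2, 2], [9, 0, 2], [2, 2, 2], [4, 3, 3]]
print(is_safe([3, 3, 2], allocation, maximum))   # (True, [1, 3, 4, 0, 2])
print(is_safe([0, 0, 0], allocation, maximum))   # (False, [])
```

An avoiding OS would tentatively grant a request, run this check on the resulting state, and keep the grant only if the state is still safe; otherwise the requesting process waits.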

- 52. Thread • A thread is a basic unit of execution or CPU utilization. • A thread is a sequential execution stream within a process. • Because threads have some of the properties of processes, they are sometimes called lightweight processes. • Threads are a popular way to improve application performance through parallelism. • On a single CPU, the CPU switches rapidly back and forth among the threads, giving the illusion that the threads are running in parallel. • A thread consists of a thread ID, a program counter (PC), a register set, and a stack space.

- 53. Thread Vs Process • Similarities • Like processes, threads share the CPU, and only one thread is active (running) at a time on a given CPU. • Like processes, threads within a process execute sequentially. • Like processes, threads can create children. • Like processes, if one thread is blocked, another thread can run. • Differences • Unlike processes, threads are not independent of one another. • Unlike processes, all threads can access every address in the task. • Unlike processes, threads are designed to assist one another. Note that processes might or might not assist one another, because processes may originate from different users.
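
The point that all threads of a task see the same addresses can be shown with Python's standard `threading` module: four threads update one global counter. Because they share it, the updates must be synchronized, here with a lock. The thread count and increment count are arbitrary illustrative values. In CPython the global interpreter lock means these threads do not execute Python bytecode truly in parallel, but they still share the process's memory.

```python
import threading

counter = 0                      # lives in the process's shared data section
lock = threading.Lock()          # protects counter from concurrent updates

def worker(increments: int) -> None:
    global counter
    for _ in range(increments):
        with lock:               # only one thread updates counter at a time
            counter += 1

threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()                     # wait for every thread to finish
print(counter)                   # 40000: all threads updated the same variable
```

Separate processes started from the same program would each get their own copy of `counter`, which is why processes need IPC mechanisms to share data.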

- 54. Types of Threads • There are two types of threads: • User Threads: user-managed threads • User-level threads are implemented by users, and the kernel is not aware of the existence of these threads. • The kernel handles them as if they were single-threaded processes. • User-level threading is also called many-to-one mapping, because the operating system maps all threads in a multithreaded process to a single execution context. • Kernel Threads: operating-system-managed threads acting on the kernel, the operating system core. • Kernel-level threads are handled by the operating system directly, and thread management is done by the kernel. • The kernel performs scheduling on a thread basis, and it supports and manages thread creation in kernel space. • Thread management code is not included in the application code; the application uses only the kernel's thread API. The Windows operating system uses this facility.

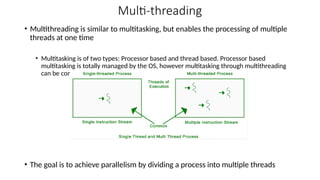

- 55. Multi-threading • Multithreading is similar to multitasking, but enables the processing of multiple threads at one time. • Multitasking is of two types: process-based and thread-based. Process-based multitasking is totally managed by the OS; however, multitasking through multithreading can be controlled by the programmer to some extent. • The goal is to achieve parallelism by dividing a process into multiple threads.

- 56. Importance of Threads in Operating System • The advantages of multithreaded programming can be categorized into four major headings: • Responsiveness: Multithreading an interactive application may allow a program to continue running even when part of it is blocked or is carrying out a lengthy operation, which increases responsiveness to the user. • Resource sharing: Threads share the memory and the resources of the process to which they belong. The advantage of sharing code is that it allows an application to have multiple different threads of activity inside the same address space. • Economy: Allocating memory and resources for process creation is costly. Because threads share the resources of the process to which they belong, it is more economical to create and context-switch threads. • Utilization of multiprocessor architectures: The advantages of multithreading can be greatly amplified in a multiprocessor architecture, where threads may run in parallel on different processors.

- 57. Inter-process communication • Inter-Process Communication (IPC) refers to the mechanisms the operating system provides to allow various processes to communicate with each other. • This involves synchronizing their activities and managing shared data resources. • Inter-process communication is used for exchanging data between multiple threads in one or more processes or programs. • Processes executing concurrently in the operating system may be either independent processes or cooperating processes. • A process is independent if it cannot be affected by the other processes executing in the system; a process is cooperating if it can affect or be affected by them.

- 59. Cont. • Pipes: A pipe is widely used for communication between two related processes. It is a half-duplex method: data flows in one direction only, from the first process to the second. To achieve full-duplex communication, another pipe is needed. • Message Passing: A mechanism for processes to communicate and synchronize. Using message passing, processes communicate with each other without resorting to shared variables. • Message Queues: A message queue is a linked list of messages stored within the kernel. It is identified by a message queue identifier. This method offers communication between single or multiple processes with full-duplex capacity. • Shared Memory: • Shared memory is memory shared between two or more processes. Access to it must be protected by synchronizing all the processes that use it. • Direct Communication: In this type of inter-process communication, processes must name each other explicitly. A link is established between exactly one pair of communicating processes, and between each pair only one link exists. • Indirect Communication: • Indirect communication establishes a link only when processes share a common mailbox; each pair of processes may share several communication links, and a link can be associated with many processes. The link may be bi-directional or unidirectional. • FIFO: Communication between two unrelated processes through a named pipe (FIFO). Like an ordinary pipe, a FIFO carries data in one direction, so two FIFOs are typically used when each process must both send and receive.
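
A pipe between two related processes can be sketched with Python's `os.pipe` and `os.fork`, thin wrappers over the POSIX calls of the same names; this sketch therefore assumes a Unix-like system and will not run on Windows. The forked child writes into one end and the parent reads from the other, illustrating the one-way (half-duplex) data flow. The function name `send_through_pipe` is hypothetical.

```python
import os

def send_through_pipe(message: str) -> str:
    """Parent reads what a forked child writes into a pipe (POSIX only)."""
    read_end, write_end = os.pipe()      # one-way channel: write_end -> read_end
    pid = os.fork()
    if pid == 0:                         # child process: the writer
        os.close(read_end)
        os.write(write_end, message.encode())
        os.close(write_end)
        os._exit(0)                      # leave the child without parent cleanup
    os.close(write_end)                  # parent process: the reader
    chunks = []
    while True:
        chunk = os.read(read_end, 1024)
        if not chunk:                    # empty read: child closed its end (EOF)
            break
        chunks.append(chunk)
    os.close(read_end)
    os.waitpid(pid, 0)                   # reap the finished child
    return b"".join(chunks).decode()

print(send_through_pipe("hello from the child"))
```

Each side closes the end it does not use; the parent closing its copy of `write_end` is what lets it see end-of-file once the child is done. For replies in the other direction, a second pipe would be needed.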

Editor's Notes

- #3: The hardware (the CPU, the memory, and the I/O devices) provides the basic computing resources for the computer system. The application programs (such as word processors, spreadsheets, compilers, and Web browsers) define the ways in which these resources are used to solve users' computing problems. The operating system controls the hardware and coordinates its use among the various application programs for the various users. The users are the external entities that provide and consume the end result of a computer process. Performance is, of course, the most important goal for the user.

- #10: The kernel is responsible for: process management for application execution; memory management, allocation, and I/O; device management through the use of device drivers; and system call control, which is essential for the execution of kernel services.

- #12: The main difference between Multiprogram Batch Systems and Time-Sharing Systems is that in case of Multiprogram batch systems, the objective is to maximize processor use, whereas in Time-Sharing Systems, the objective is to minimize response time.

- #14: Real-Time Operating System. A real-time system is defined as a data processing system in which the time interval required to process and respond to inputs is so small that it controls the environment. The time taken by the system to respond to an input and display the required updated information is termed the response time. In this method, the response time is much shorter than in online processing. There are two types of real-time operating systems. Hard real-time systems: Hard real-time systems guarantee that critical tasks complete on time. In hard real-time systems, secondary storage is limited or missing, and the data is stored in ROM. In these systems, virtual memory is almost never found. Soft real-time systems: Soft real-time systems are less restrictive. A critical real-time task gets priority over other tasks and retains the priority until it completes. Soft real-time systems have more limited utility than hard real-time systems. Examples include multimedia, virtual reality, and advanced scientific projects like undersea exploration and planetary rovers.

- #18: The main memory in a computer is called Random Access Memory (RAM). This is the part of the computer that stores the operating system, software applications and other information needed by the central processing unit (CPU). Characteristics of main memory: very closely connected to the CPU; contents are quickly and easily changed; holds the programs and data that the processor is actively working with; interacts with the processor millions of times per second; nothing permanent is kept in main memory, because its contents are lost when the power is switched off.

- #19: When an instruction or data item is accessed from main memory, it is placed in the cache. Subsequent accesses to the same instruction or data are then faster, as long as it is still in the cache, because they are served directly from the cache instead of from main memory.
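
The caching behaviour above can be sketched as a small Python model. This is a minimal illustration under stated assumptions: the class name `LRUCache`, the dictionary standing in for main memory, and the least-recently-used eviction policy are choices made for this example; real hardware caches work on fixed-size cache lines and may use other replacement policies.

```python
from collections import OrderedDict


class LRUCache:
    """Toy cache model (hypothetical): holds up to `capacity` address -> value
    entries and evicts the least recently used entry when it is full."""

    def __init__(self, capacity, main_memory):
        self.capacity = capacity
        self.main_memory = main_memory   # dict standing in for slow main memory
        self.lines = OrderedDict()       # cached entries, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.lines:
            # Hit: served directly from the cache; mark as most recently used.
            self.hits += 1
            self.lines.move_to_end(address)
            return self.lines[address]
        # Miss: fetch from main memory and place the value in the cache.
        self.misses += 1
        value = self.main_memory[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used entry
        return value


ram = {addr: addr * 10 for addr in range(8)}
cache = LRUCache(capacity=2, main_memory=ram)
for addr in [0, 1, 0, 0, 2, 1]:
    cache.read(addr)
print(cache.hits, cache.misses)  # 2 4
```

With a capacity of two entries, the repeated reads of address 0 are hits, while address 1 is evicted when address 2 arrives and has to be fetched from main memory again.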

- #21: Registers are small, fast storage locations within the CPU. Different registers hold different things: instructions, addresses of instructions, data (operands) and the results of operations.

- #22: Before a program is run, its instructions must first be copied from slow secondary storage into fast main memory. This gives the CPU fast access to the instructions it executes.

- #28: A process may be made up of multiple threads of execution that execute instructions concurrently. The operating system must be able to load a program into memory and execute it, and the program must be able to end its execution, either normally or abnormally (forcefully).
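
The three ways a program can end (normally, with an error, or forcefully) can be observed from a parent process with Python's standard `subprocess` module. This is a small sketch that launches the Python interpreter itself as the child program; the exact exit status reported for a killed child is platform dependent.

```python
import subprocess
import sys

# Normal termination: the child runs to completion and exits with status 0.
normal = subprocess.run([sys.executable, "-c", "print('done')"],
                        capture_output=True, text=True)

# Abnormal termination: the child reports an error through a non-zero exit status.
error = subprocess.run([sys.executable, "-c", "import sys; sys.exit(3)"])

# Forced termination: the parent kills a child that would otherwise keep running.
child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
child.kill()
forced_status = child.wait()

print(normal.returncode, normal.stdout.strip())  # 0 done
print(error.returncode)                          # 3
print(forced_status)  # on POSIX a negative signal number, e.g. -9 for SIGKILL
```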

- #29: Each program requires input, and after processing the input submitted by the user it produces output. This involves the use of I/O devices. The operating system hides the details of the underlying I/O hardware from the user, and makes it easier for users to run programs conveniently by providing I/O functions.

- #30: While working on a computer, a user generally needs to manipulate various types of files, for example opening a file, saving a file and deleting a file from the storage disk. This important task is also performed by the operating system, which makes it easier for user programs to accomplish their tasks by providing the file system manipulation service. This service is performed by 'Secondary Storage Management', a part of the operating system.

- #31: Errors may occur in the CPU, memory hardware, I/O devices and user programs. For each type of error, the OS takes appropriate action to ensure correct and consistent computing.

- #32: A process needs certain resources including CPU time, memory, files and I/O devices to accomplish its task. These resources are either given to the process when it is created or allocated to it while it is running.

- #36: Stack: an area of main memory reserved for temporary data such as return addresses, procedure arguments and local variables. Heap: memory dynamically allocated to a process during its run time. Data: this section holds the global and static variables, allocated and initialized before execution begins. Text: the compiled program code, read in from non-volatile storage when the program is launched.

- #37: Key differences between a program and a process: a program is a definite group of ordered operations that are to be performed, whereas a process is an instance of a program being executed. A program is passive in nature, since it does nothing until it is executed, whereas a process is dynamic or active, since it is an executing instance of a program that performs specific actions. A program has a longer lifespan, because it stays in storage until it is deliberately deleted, while a process has a shorter, limited lifespan, because it terminates once its task is complete. A process has much higher resource requirements: it may need processing time, memory and I/O resources to execute successfully. In contrast, a program only requires storage space.

- #39: These states may differ between operating systems, and their names are not standardized. In general, a process can be in one of the following five states at a time. Start: the initial state when a process is first started or created. Ready: the process is waiting to be assigned to a processor; ready processes wait for the operating system to allocate the processor to them so that they can run. A process may enter this state after the Start state, or from the Running state when the scheduler interrupts it to assign the CPU to another process. Running: once the OS scheduler has assigned the process to a processor, its state is set to running and the processor executes its instructions. Waiting: a process moves into the waiting state if it needs to wait for a resource, such as user input or a file becoming available. Terminated or Exit: once the process finishes its execution, or is terminated by the operating system, it moves to the terminated state, where it waits to be removed from main memory.
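
One common version of the five-state model can be sketched as a transition table that rejects illegal moves. The names `State`, `TRANSITIONS` and `Process` are hypothetical, and real operating systems use different state names and may allow extra transitions (for example, terminating a process that is still waiting).

```python
from enum import Enum


class State(Enum):
    START = "start"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Allowed transitions in this simplified five-state model.
TRANSITIONS = {
    State.START: {State.READY},                   # admitted by the OS
    State.READY: {State.RUNNING},                 # dispatched by the scheduler
    State.RUNNING: {State.READY,                  # preempted by the scheduler
                    State.WAITING,                # waits for I/O or another resource
                    State.TERMINATED},            # finishes or is terminated
    State.WAITING: {State.READY},                 # the awaited resource is available
    State.TERMINATED: set(),                      # no way out of the final state
}


class Process:
    def __init__(self, pid):
        self.pid = pid
        self.state = State.START
        self.history = [self.state]

    def move_to(self, new_state):
        # Refuse any transition the model does not allow.
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {new_state.name}")
        self.state = new_state
        self.history.append(new_state)


p = Process(pid=1)
for s in [State.READY, State.RUNNING, State.WAITING,
          State.READY, State.RUNNING, State.TERMINATED]:
    p.move_to(s)
print([s.name for s in p.history])
```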

- #40: Role of the process control block: in process management, the process control block (PCB) can be accessed or modified by most OS utilities, including those involved with memory, scheduling, and input/output resource access.

- #41: Process state: a process can be new, ready, running, waiting, etc. Program counter: the program counter holds the address of the next instruction to be executed for that process. CPU registers: this component includes accumulators, index registers, general-purpose registers and condition-code information. CPU scheduling information: this component includes the process priority, pointers to scheduling queues, and various other scheduling parameters; because many processes run concurrently in a computer system, each process has its own priority. Accounting information: includes the amount of CPU time and real time used, job or process numbers, etc. Memory-management information: includes the values of the base and limit registers and the page or segment tables, depending on the memory system used by the operating system. I/O status information: includes the list of open files, the list of I/O devices allocated to the process, etc.
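
The PCB fields listed above can be pictured as a record kept for each process. This is an illustrative Python sketch: the field names and the `in_bounds` helper are assumptions for this example, not the layout used by any particular kernel. The helper shows how base and limit values can be used to check that an address belongs to the process.

```python
from dataclasses import dataclass, field


@dataclass
class PCB:
    """Hypothetical process control block; field names are illustrative only."""
    pid: int
    state: str = "new"                                # process state
    program_counter: int = 0                          # address of the next instruction
    registers: dict = field(default_factory=dict)     # saved CPU registers
    priority: int = 0                                 # CPU scheduling information
    cpu_time_used: float = 0.0                        # accounting information
    base: int = 0                                     # memory-management information
    limit: int = 0
    open_files: list = field(default_factory=list)    # I/O status information


def in_bounds(pcb, address):
    """Base/limit check: a legal address lies in [base, base + limit)."""
    return pcb.base <= address < pcb.base + pcb.limit


pcb = PCB(pid=42, priority=5, base=0x4000, limit=0x1000)
pcb.state = "ready"
pcb.open_files.append("report.txt")
print(in_bounds(pcb, 0x4000), in_bounds(pcb, 0x5000))  # True False
```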

- #42: The operating system maintains the following important process scheduling queues. Job queue: keeps all the processes in the system. Ready queue: keeps the set of all processes residing in main memory, ready and waiting to execute; a new process is always put in this queue. Device queues: processes that are blocked because an I/O device is unavailable make up these queues.
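
The movement of processes between these queues can be sketched with FIFO queues. This is a simplified model under stated assumptions: the function names, the device names and the FIFO ordering of the ready queue are hypothetical choices for illustration.

```python
from collections import deque

job_queue = deque()                                     # all processes in the system
ready_queue = deque()                                   # in main memory, waiting for the CPU
device_queues = {"disk": deque(), "keyboard": deque()}  # blocked on an I/O device


def admit(pid):
    """A new process enters the system and becomes ready to run."""
    job_queue.append(pid)
    ready_queue.append(pid)


def dispatch():
    """The scheduler picks the next ready process (FIFO order assumed)."""
    return ready_queue.popleft()


def block_on(device, pid):
    """A running process waits for an I/O device."""
    device_queues[device].append(pid)


def io_complete(device):
    """The device finishes; the first waiting process becomes ready again."""
    pid = device_queues[device].popleft()
    ready_queue.append(pid)
    return pid


for pid in (1, 2, 3):
    admit(pid)
running = dispatch()        # process 1 gets the CPU
block_on("disk", running)   # process 1 requests disk I/O
running = dispatch()        # process 2 gets the CPU
io_complete("disk")         # process 1 returns to the ready queue
print(list(ready_queue))    # [3, 1]
```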

- #43: The OS can use a different policy to manage each queue (FIFO, Round Robin, Priority, etc.). The OS scheduler determines how to move processes between the ready queue and the run queue, which can hold only one entry per processor core on the system; in the above diagram, the run queue has been merged with the CPU.

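- #44: First Come First Serve (FCFS): jobs are executed on a first come, first served basis. Performance is often poor because the average waiting time can be high, for example when short jobs queue behind a long one. Shortest Job Next (SJN): a non-preemptive scheduling algorithm that runs the waiting job with the shortest CPU burst next (its preemptive variant is usually called Shortest Remaining Time First). It is the best approach for minimizing average waiting time, and it is easy to implement in batch systems where the required CPU time is known in advance.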
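
The difference in average waiting time between FCFS and SJN can be worked through with a short Python sketch. It assumes that all jobs arrive at time 0 and that their CPU burst times are known in advance; the burst values 24, 3 and 3 are made-up example data.

```python
def average_waiting_time(burst_times):
    """Average waiting time when jobs run to completion in the given order.
    Assumes every job arrives at time 0."""
    waiting, clock = 0, 0
    for burst in burst_times:
        waiting += clock   # this job waited until every earlier job finished
        clock += burst
    return waiting / len(burst_times)


def fcfs(burst_times):
    return average_waiting_time(burst_times)          # run in arrival order


def sjn(burst_times):
    return average_waiting_time(sorted(burst_times))  # shortest burst first


bursts = [24, 3, 3]
print(fcfs(bursts), sjn(bursts))  # 17.0 3.0
```

Running the long job first makes both short jobs wait behind it (waiting times 0, 24 and 27, an average of 17), whereas SJN runs the short jobs first (waiting times 0, 3 and 6, an average of 3).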

- #45: Priority Based Scheduling: each process is assigned a priority, which can be decided based on memory requirements, time requirements or any other resource requirement. The process with the highest priority is executed first, and so on; processes with the same priority are executed on a first come, first served basis. Round Robin Scheduling: each process is given a fixed time slice (quantum) to execute. Once a process has run for that time period, it is preempted and another process executes for its time period. Context switching is used to save the states of preempted processes. Context Switch: a context switch is the mechanism for storing and restoring the state, or context, of a CPU in the Process Control Block so that a process's execution can be resumed from the same point at a later time. Using this technique, a context switcher enables multiple processes to share a single CPU, which makes context switching an essential feature of a multitasking operating system. When the scheduler switches the CPU from executing one process to executing another, the state of the currently running process is stored in its process control block.
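
Round robin scheduling and the context switches it causes can be simulated in a few lines. This sketch assumes all jobs arrive at time 0, ignores the time a context switch itself takes, and counts a switch only when the CPU moves to a different job; the function name `round_robin` is hypothetical.

```python
from collections import deque


def round_robin(bursts, quantum):
    """Simulate round robin for jobs that all arrive at time 0.
    Returns (completion time of each job, number of context switches)."""
    remaining = dict(enumerate(bursts))
    ready = deque(remaining)            # job indices in arrival order
    clock, switches, finish = 0, 0, {}
    previous = None
    while ready:
        job = ready.popleft()
        if previous is not None and previous != job:
            switches += 1               # save the previous job's context, restore this one
        run = min(quantum, remaining[job])
        clock += run
        remaining[job] -= run
        if remaining[job] == 0:
            finish[job] = clock         # job completes
        else:
            ready.append(job)           # quantum expired: preempt and requeue
        previous = job
    return [finish[i] for i in range(len(bursts))], switches


print(round_robin([24, 3, 3], quantum=4))  # ([30, 7, 10], 3)
```

With bursts of 24, 3 and 3 and a quantum of 4, the short jobs finish at times 7 and 10 instead of waiting for the long job, at the cost of three context switches.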

- #47: Every process needs some resources to complete its execution, and a resource is used in the following sequence: the process requests the resource; the OS grants the resource if it is available, otherwise the process waits; the process uses the resource and releases it on completion. A deadlock is a situation in which each process in a set waits for a resource that is held by another process in the same set. In this situation, none of the processes can execute, since the resource each one needs is held by another process that is itself waiting for some other resource to be released. In this scenario, a cycle is formed among the three processes. None of the processes makes progress; they are all waiting, and the computer can become unresponsive because all of these processes are blocked.
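
The cycle described above can be detected mechanically with a wait-for graph, in which an edge from P to Q means that process P is waiting for a resource held by Q. This depth-first search is a swapped-in detection technique, not something the slide prescribes; it assumes each resource has a single instance, and the process names are made up.

```python
def find_deadlock(wait_for):
    """Return a list of processes forming a cycle in the wait-for graph, or None.
    `wait_for` maps each process to the processes it is waiting on."""
    WHITE, GREY, BLACK = 0, 1, 2        # unvisited, on the current path, finished
    color = {p: WHITE for p in wait_for}
    path = []

    def visit(p):
        color[p] = GREY
        path.append(p)
        for q in wait_for.get(p, ()):
            if color.get(q, WHITE) == GREY:       # back edge: q is on the path, so a cycle
                return path[path.index(q):]
            if color.get(q, WHITE) == WHITE:
                cycle = visit(q)
                if cycle:
                    return cycle
        path.pop()
        color[p] = BLACK
        return None

    for p in list(wait_for):
        if color[p] == WHITE:
            cycle = visit(p)
            if cycle:
                return cycle
    return None


# P1 waits for P2, P2 waits for P3, and P3 waits for P1: a circular wait.
print(find_deadlock({"P1": ["P2"], "P2": ["P3"], "P3": ["P1"]}))  # ['P1', 'P2', 'P3']
print(find_deadlock({"P1": ["P2"], "P2": ["P3"], "P3": []}))      # None
```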

- #48: Conditions for deadlocks. Mutual Exclusion: a resource can only be used in a mutually exclusive manner, meaning two processes cannot use the same resource at the same time. Hold and Wait: a process waits for some resources while holding another resource at the same time. No Pre-emption: a resource cannot be forcibly taken away from a process; it is released only voluntarily by the process holding it, after that process has finished using it. Circular Wait: the processes wait for resources in a cyclic manner, so that the last process is waiting for the resource held by the first process.

- #50: How to prevent deadlocks by managing the four conditions (Prevention). Deadlocks can be prevented by ensuring that at least one of the four conditions cannot hold, because all four conditions must hold simultaneously for a deadlock to occur. Mutual Exclusion: from the resource point of view, mutual exclusion means that a resource can never be used by more than one process simultaneously; if resources can be made sharable instead of behaving in a mutually exclusive manner, deadlock can be prevented, although some resources, such as printers, are inherently non-sharable. Hold and Wait: processes must be prevented from holding one or more resources while simultaneously waiting for one or more others, for example by requesting all of their resources at once. No Pre-emption: allowing the resources held by a process to be pre-empted, wherever possible, prevents this condition. Circular Wait: circular wait can be avoided if all resources are numbered and processes are required to request resources only in strictly increasing (or decreasing) order.
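
Circular wait prevention by resource ordering can be sketched with Python threads and locks. The numbered resources and the helper names are hypothetical; the key point is that every worker sorts its requests by resource number before acquiring them, so no cycle of waiting threads can form even when two workers ask for the same resources in opposite orders.

```python
import threading

# Hypothetical resources, each identified by its position in a global order.
resources = {1: threading.Lock(), 2: threading.Lock(), 3: threading.Lock()}


def acquire_in_order(numbers):
    """Acquire the requested resources in strictly increasing number order."""
    ordered = sorted(set(numbers))
    for n in ordered:
        resources[n].acquire()
    return ordered


def release(ordered):
    """Release the held resources in reverse order of acquisition."""
    for n in reversed(ordered):
        resources[n].release()


completed = []


def worker(name, needs):
    held = acquire_in_order(needs)   # a request for [3, 1] is taken as 1, then 3
    try:
        completed.append(name)       # critical section using all held resources
    finally:
        release(held)


threads = [
    threading.Thread(target=worker, args=("A", [1, 3])),
    threading.Thread(target=worker, args=("B", [3, 1])),  # opposite request order
    threading.Thread(target=worker, args=("C", [2, 3])),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sorted(completed))  # ['A', 'B', 'C']
```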

- #53: Each thread has: a thread ID that uniquely identifies it; a program counter that keeps track of which instruction to execute next; registers that hold its current working variables; and a stack that contains its execution history. Threads belonging to the same process share the same OS resources.

- #57: Responsiveness: if a process is divided into multiple threads, the output of one thread can be returned as soon as that thread completes, without waiting for the others. Resource sharing: resources such as code, data and files can be shared among all threads within a process. Note: the stack and registers cannot be shared among threads; each thread has its own stack and registers. Faster context switch: context switching between threads takes less time than switching between processes, because a process context switch requires more overhead from the CPU. Effective utilization of multiprocessor systems: if a single process has multiple threads, they can be scheduled on multiple processors, which can make the process execute faster. Enhanced throughput of the system: if a process is divided into multiple threads, and each thread's function is considered as one job, then the number of jobs completed per unit of time increases, which increases the throughput of the system.
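
The split between shared process data and per-thread stacks can be illustrated with Python's `threading` module. This sketch is only about sharing: in CPython the global interpreter lock usually prevents CPU-bound threads from running truly in parallel, so it should not be read as a demonstration of multiprocessor speed-up. The function and variable names are hypothetical.

```python
import threading

shared_results = {}          # process data: visible to every thread
lock = threading.Lock()      # protects the shared dictionary


def sum_range(name, start, stop):
    total = 0                # local variable: lives in this thread's own stack frame
    for i in range(start, stop):
        total += i
    with lock:
        shared_results[name] = total


threads = [threading.Thread(target=sum_range, args=(f"part{k}", k * 250, (k + 1) * 250))
           for k in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sum(shared_results.values()))  # 499500, the same as sum(range(1000))
```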

- #58: IPC also refers to a set of programming interfaces that allow a programmer to coordinate activities among program processes that can run concurrently in an operating system. This allows a specific program to handle many user requests at the same time.

- #59: A pipe is a technique for passing information from one program process to another. An ordinary pipe provides one-way communication only: it passes data, such as the output of one process, to another process that accepts it as input. Message queueing is a method by which processes can exchange or pass data using an interface to a system-managed queue of messages; the message queue is managed by the operating system (or kernel). Shared memory is a method by which program processes can exchange data more quickly than by reading and writing through the regular operating system services, because once the shared region is set up, processes access it directly as ordinary memory. Direct communication: each process that wants to communicate must explicitly name the recipient or sender of the communication. In this scheme, the send and receive primitives are defined as follows: Send (P, message) sends a message to process P; Receive (Q, message) receives a message from process Q. Indirect communication: messages are sent to and received from mailboxes. A mailbox can be viewed abstractly as an object into which processes can place messages and from which messages can be removed. The send and receive primitives are defined as follows: Send (A, message) sends a message to mailbox A; Receive (A, message) receives a message from mailbox A.
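
An ordinary pipe and indirect communication through a mailbox can both be sketched in Python. To keep the example runnable in one file, both ends of the pipe stay in the same process (normally they would be held by two different processes, for example after a `fork`), and the mailbox is a hypothetical dictionary of thread-safe `queue.Queue` objects with threads standing in for processes; `send` and `receive` are illustrative names modelled on the Send (A, message) and Receive (A, message) primitives.

```python
import os
import queue
import threading

# Ordinary pipe: bytes written to the write end are read from the read end (one way only).
read_fd, write_fd = os.pipe()
os.write(write_fd, b"output of producer")
os.close(write_fd)                      # closing the write end signals end of data
piped = os.read(read_fd, 1024)
os.close(read_fd)

# Indirect communication: hypothetical mailboxes backed by thread-safe queues.
mailboxes = {"A": queue.Queue()}


def send(mailbox, message):
    """Place a message into the named mailbox."""
    mailboxes[mailbox].put(message)


def receive(mailbox):
    """Remove a message from the named mailbox, blocking until one is available."""
    return mailboxes[mailbox].get()


received = []
consumer = threading.Thread(target=lambda: received.append(receive("A")))
consumer.start()
send("A", "hello via mailbox A")
consumer.join()

print(piped)      # b'output of producer'
print(received)   # ['hello via mailbox A']
```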