Packet walks in_kubernetes-v4

- 1. Packet Walk(s) In Kubernetes Don Jayakody, Big Switch Networks @techjaya linkedin.com/in/jayakody

- 2. • We are in the business of “Abstracting Networks” (As one Big Switch) using Open Networking Hardware About Big Switch About • Spent 4 years in Engineering building our products. Now in Technical Product Management About Me Kubernetes: Abstracted Compute Domain Legacy Next-Gen http://www.youtube.com/watch?v=HlAXp0-M6SY&t=0m43s Why Not Abstract the Network?

- 3. • Namespace/Pods/CNIs? • What does that “Pause” container really do? • Flannel: Intro / Packet Flows • Exposing Services Intro: K8S Networking Agenda • Architecture • IP-IP Mode (Route formation / Pod-to-pod communication / ARP Resolution / Packet Flow) • BGP Mode (Peering requirements / Packet Flow) Calico: Networking • Architecture • Overlay Network Mode (Configuration / Pod-to-pod communication / Datapath / ARP Resolution / Packet Flow) • Direct Routing Mode Cilium: Networking

- 4. • Linux kernel namespaces come in several types, including pid, net, mnt, uts, ipc and user (newer kernels add cgroup and time) • Network namespaces provide a brand-new network stack for all the processes within the namespace • That includes network interfaces, routing tables and iptables rules Namespaces K8S Networking: Basics eth0 namespace-1 eth0 namespace-2 veth1veth0 bridge eth0root namespace Node1

- 5. • The smallest deployable unit in K8S. A pod comprises one or more containers along with a “pause” container • The pause container acts as the “parent” container for the other containers inside the pod. One of its primary responsibilities is to bring up the network namespace • Great for redundancy: termination of the other containers does not result in termination of the network namespace Pods K8S Networking: Basics

- 6. • Multiple ways to access pod namespaces • ‘kubectl exec -it’ • ‘docker exec -it’ • nsenter (“namespace enter”, lets you run commands that are installed on the host but not in the container) Accessing Pod Namespaces K8S Networking: Basics Both containers belong to the same pod => same network namespace => same ‘ip a’ output
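
The nsenter approach can be sketched as a small helper that builds the command line. This is a minimal sketch, not part of the original slides: the PID `12345` is a hypothetical container process ID (one common way to find it is `docker inspect`), and the helper only builds the argument list rather than running it.

```python
def nsenter_net_command(pid, command):
    """Build an nsenter invocation that runs `command` inside the
    network namespace of the process `pid`."""
    # -t selects the target process; -n enters only its network namespace,
    # so the binary itself (e.g. `ip`) is still taken from the host.
    return ["nsenter", "-t", str(pid), "-n"] + list(command)


# 12345 is a hypothetical PID of a container inside the pod.
argv = nsenter_net_command(12345, ["ip", "a"])
print(" ".join(argv))
```

Because every container in a pod shares the pause container's network namespace, pointing the helper at the PID of any container in the pod should show the same interfaces.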

- 7. • Interface between the container runtime and the network implementation • A network plugin implements the CNI spec. It is called by the container runtime to attach a container to, or detach it from, the network • A CNI plugin is an executable (in: /opt/cni/bin) • When invoked, it reads a JSON config and environment variables to get all the parameters required to configure the container's networking Container Network Interface: CNI K8S Networking: Basics Container Runtime CNI Plugin Container Network Interface Configures Networking Credit:https://www.slideshare.net/weaveworks/introduction-to-the-container-network-interface-cni
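
To make the "JSON config plus environment variables" contract concrete, here is a rough sketch of what a runtime might prepare before executing a plugin. The `CNI_*` variable names come from the CNI specification; the network name `example-net`, the container ID, the namespace path and the choice of the reference `bridge` plugin are illustrative assumptions, and nothing is actually executed.

```python
import json


def build_cni_invocation(container_id, netns_path, ifname="eth0"):
    """Return (environment, stdin_json) for a hypothetical CNI ADD call."""
    env = {
        "CNI_COMMAND": "ADD",             # ADD attaches, DEL detaches
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,          # path to the pod's network namespace
        "CNI_IFNAME": ifname,             # interface to create inside the pod
        "CNI_PATH": "/opt/cni/bin",       # where plugin executables live
    }
    # Illustrative network config; real configs carry plugin-specific keys.
    config = {"cniVersion": "0.3.1", "name": "example-net", "type": "bridge"}
    return env, json.dumps(config)


env, stdin_json = build_cni_invocation("abc123", "/var/run/netns/example")
print(env["CNI_COMMAND"], stdin_json)
```

The runtime would then execute the plugin named by `type` from `CNI_PATH`, passing `env` as the process environment and the JSON on standard input.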

- 8. • Node IPs belong to 2 different subnets: 25.25.25.0/24 & 35.35.35.0/24 • The gateway is configured as .254 in each network segment • The bond0 interface is created by bonding two 10G interfaces Topology Info Topology bond0root Node-121 25.25.25.121 bond0root Node-122 35.35.35.122

- 9. • To make networking easier, Kubernetes does away with port-mapping and assigns a unique IP address to each pod • If a host cannot get an entire subnet to itself things get pretty complicated • Flannel aims to solve this problem by creating an overlay mesh network that provisions a subnet to each server Intro Flannel K8S Flannel CNI Plugin FlannelD (VXLAN/UDP..) Network
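
The "one subnet per server" idea can be illustrated with the standard `ipaddress` module. This is a sketch under assumptions: the cluster range `10.244.0.0/16` and the `/24` per-node size are not stated on the slide (they are consistent with the pod addresses 10.244.1.2 and 10.244.2.2 used later in the deck).

```python
import ipaddress

# Assumed cluster-wide pod range, carved into one /24 per node.
cluster_network = ipaddress.ip_network("10.244.0.0/16")
node_subnets = list(cluster_network.subnets(new_prefix=24))


def subnet_for_pod(pod_ip):
    """Return the per-node subnet that contains the given pod IP."""
    ip = ipaddress.ip_address(pod_ip)
    return next(s for s in node_subnets if ip in s)


print(subnet_for_pod("10.244.1.2"))  # subnet that would belong to one node
print(subnet_for_pod("10.244.2.2"))  # subnet that would belong to another node
```

Since each node owns a whole subnet, the destination node for any pod IP follows from the address alone, which is what lets the overlay route without per-pod state in the underlay.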

- 10. • “flannel.1” is the VXLAN interface • “cni0” is the bridge Default Config Flannel bond0root Node-121 25.25.25.121 bond0root Node-122 35.35.35.122 cni0 flannel.1 cni0 flannel.1

- 11. Flannel bond0root Node-121 25.25.25.121 bond0root Node-122 35.35.35.122 cni0 flannel.1 cni0 flannel.1 • Brought up 2 pods Pod-to-Pod Communication eth0pod1 10.244.1.2 eth0pod2 10.244.2.2 veth-x veth-y

- 12. Flannel bond0root Node-121 25.25.25.121 bond0root Node-122 35.35.35.122 cni0 flannel.1 cni0 flannel.1• VETH Interface with ”veth-” got created on the root namespace • Other end is attached to the pod namespace Pod-to-Pod Communication eth0pod1 10.244.1.2 eth0pod2 10.244.2.2 veth-x veth-y notice the index on veth. eg: “eth0@if12” on pod1 ns corresponds to 12th interface on the root ns
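
The interface-index hint can be turned into a tiny parser. This is an illustrative sketch: it takes an interface name as printed by `ip a` inside the pod and extracts the index of the peer interface, which you would then look for in the root namespace's `ip a` output.

```python
import re


def peer_ifindex(name):
    """Extract the peer interface index from a name like 'eth0@if12'.

    Returns None when the name carries no '@ifN' suffix.
    """
    match = re.fullmatch(r"[^@]+@if(\d+)", name)
    return int(match.group(1)) if match else None


print(peer_ifindex("eth0@if12"))
```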

- 13. Flannel bond0root Node-121 25.25.25.121 bond0root Node-122 35.35.35.122 cni0 flannel.1 cni0 flannel.1 • ’cni0’ bridge is replying to ARP requests from the pod ARP Handling eth0pod1 10.244.1.2 eth0pod2 10.244.2.2 veth-x veth-y 1 2 ARP Reply

- 14. Flannel bond0root Node-121 25.25.25.121 bond0root Node-122 35.35.35.122 cni0 flannel.1 cni0 flannel.1 • Routing table lookup to figure out where to send the packet Packet Flow eth0pod1 10.244.1.2 eth0pod2 10.244.2.2 veth-x veth-y 1 2

- 15. Flannel bond0root Node-121 25.25.25.121 bond0root Node-122 35.35.35.122 cni0 flannel.1 cni0 flannel.1 • Flannel.1 device does VXLAN encap/decap • Traffic from pod1 is going through “flannel.1” device before exiting Packet Flow eth0pod1 10.244.1.2 eth0pod2 10.244.2.2 veth-x veth-y 1 2 3 4 5 6 7 12 10 9 11 8
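
What the encap step adds to each packet can be sketched by building the 8-byte VXLAN header by hand. This is a minimal sketch, not Flannel's code: the VNI value `1` is an assumption (suggested by the `flannel.1` device name), and the overhead figure assumes an IPv4 underlay without VLAN tags.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni):
    """Pack the 8-byte VXLAN header: flags, 24 reserved bits, VNI, 8 reserved bits."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header):
    """Recover the VNI from the first 8 bytes of a VXLAN header."""
    _, second_word = struct.unpack("!II", header[:8])
    return second_word >> 8


# Outer Ethernet (14) + outer IPv4 (20) + UDP (8) + VXLAN (8) bytes.
VXLAN_OVERHEAD = 14 + 20 + 8 + 8

header = vxlan_header(1)  # assumed VNI
print(header.hex(), parse_vni(header), VXLAN_OVERHEAD)
```

The overhead is why overlay deployments typically run the pod-facing interfaces with a smaller MTU than the physical interface.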

- 16. • The primary Calico agent that runs on each machine that hosts endpoints • Responsible for programming routes and ACLs, and anything else required on the host Felix Calico • BGP client: responsible for route distribution • When Felix inserts routes into the Linux kernel FIB, Bird picks them up and distributes them to the other nodes in the deployment Bird

- 17. • Felix's primary responsibility is to program the host's iptables and routes to provide the connectivity to pods on that host. • Bird is a BGP agent for Linux that is used to exchange routing information between the hosts. The routes that are programmed by Felix are picked up by bird and distributed among the cluster hosts Architecture Calico eth0 pod1 eth1 pod2 veth1 cbr0 eth0 flannel 0 Node1 iptables route table Bird Felix eth0 pod3 eth1 pod4 veth1 cbr0 eth0 flannel 0 Node2 iptables route table Bird Felix *etcd/confd components are not shown for clarity

- 18. bond0 tunl0 root Node-121 25.25.25.121 iptables route table bond0 tunl0 root Node-122 35.35.35.122 iptables route table • Node-to-node mesh • IP-IP encapsulation Default Configuration Calico

- 19. bond0 tunl0 192.168.83.64 root Node-121 25.25.25.121 iptables route table bond0 tunl0 192.168.243.0 root Node-122 35.35.35.122 iptables route table • Default: Node Mesh with IP-IP tunnel • Route table has entries to all the other tunl0 interfaces through other node IPs Default Configuration Route to the other node’s tunnel Calico “ipip” tunnel

- 20. • Default: Node Mesh with IP-IP tunnel • Route table has entries to all the other tunl0 interfaces through other node IPs Default Configuration bond0 tunl0 root Node-121 25.25.25.121 iptables route table bond0 tunl0 192.168.243.0 root Node-122 35.35.35.122 iptables route table “ipip” tunnel Route to the other node’s tunnel Calico

- 21. bond0 tunl0 192.168.83.64 root cali-x eth0pod1 192.168.83.67 Node-121 25.25.25.121 iptables route table bond0 tunl0 192.168.243.0 root cali-y eth0pod2 192.168.243.2 Node-122 35.35.35.122 iptables route table • Brought up 2 pods • “calicoctl get wep” (“workloadendpoints”) shows the endpoints on the Calico end Pod-to-Pod Communication veth interfaces Calico

- 22. eth0pod1 cali-x bond0 tunl0 192.168.83.64 root 192.168.83.67 Node-121 25.25.25.121 iptables route table eth0pod2 cali-y bond0 tunl0 192.168.243.0 root 192.168.243.2 Node-122 35.35.35.122 iptables route table • VETH Interface with “cali-xxx” got created on the root namespace • Other end is attached to the pod namespace Pod-to-Pod Communication Calico

- 23. eth0pod1 cali-x bond0 tunl0 192.168.83.64 root 192.168.83.67 Node-121 25.25.25.121 iptables route table eth0pod2 cali-y bond0 tunl0 192.168.243.0 root 192.168.243.2 Node-122 35.35.35.122 iptables route table • How does ARP get resolved? • The default route of pod1 & pod2 points to the link-local IPv4 address “169.254.1.1” ARP Resolution Calico

- 24. eth0pod1 cali-x bond0 tunl0 192.168.83.64 root 192.168.83.67 Node-121 25.25.25.121 iptables route table eth0pod2 cali-y bond0 tunl0 192.168.243.0 root 192.168.243.2 Node-122 35.35.35.122 iptables route table • Initiate a ping from pod1 to pod2 • The ARP request is sent to the default GW 169.254.1.1 • The cali interface replies to the ARP request with its own MAC ARP Resolution Calico tcpdump on root veth: root veth is replying with its own MAC Pinging from pod1 to pod2 1 2 3 1 ARP Reply

- 25. eth0pod1 cali-x bond0 tunl0 192.168.83.64 root 192.168.83.67 Node-121 25.25.25.121 iptables route table eth0pod2 cali-y bond0 tunl0 192.168.243.0 root 192.168.243.2 Node-122 35.35.35.122 iptables route table • Why do the “cali-” VETH interfaces reply to the ARP request? • Reason: Proxy ARP is enabled on the veth interface in the root namespace • The network only learns the node IPs/MACs, not the pod MACs ARP Resolution Calico No pod MACs/IPs will be learned on the network. Only node IPs Proxy ARP is enabled on the cali veth:
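
One way to check the proxy ARP setting is to read the per-interface sysctl file under `/proc`. The sketch below is illustrative only: the interface name passed in is hypothetical, and the function returns `None` when the interface (or `/proc` itself) is not present on the machine running it.

```python
import os


def proxy_arp_path(iface):
    """Path of the per-interface proxy ARP sysctl on Linux."""
    return "/proc/sys/net/ipv4/conf/{}/proxy_arp".format(iface)


def proxy_arp_enabled(iface):
    """True/False if the setting can be read, None if the file is missing."""
    path = proxy_arp_path(iface)
    if not os.path.exists(path):
        return None
    with open(path) as handle:
        return handle.read().strip() == "1"


# "cali-x" stands in for a real cali* interface name on the node.
print(proxy_arp_path("cali-x"), proxy_arp_enabled("cali-x"))
```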

- 26. eth0pod1 cali-x bond0 tunl0 192.168.83.64 root 192.168.83.67 Node-121 25.25.25.121 iptables route table eth0pod2 cali-y bond0 tunl0 192.168.243.0 root 192.168.243.2 Node-122 35.35.35.122 iptables route table • After ARP resolution, the packet gets forwarded according to the routing table entries • Packets get encapsulated via IP-IP Packet Forwarding Calico Notice the 2 IP headers: IP-IP protocol Once ARP is resolved, the routing table rules kick in
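
The "two IP headers" observation can be reproduced with a hand-built packet. This is a simplified sketch rather than what the kernel's `tunl0` device literally does: it builds a bare inner IPv4 header (pod1 to pod2, ICMP, no payload) and wraps it in an outer header between the node IPs with protocol number 4, which is the IP-in-IP protocol number. The TTL and zeroed ID/fragment fields are arbitrary choices.

```python
import socket
import struct

PROTO_ICMP = 1
PROTO_IPIP = 4  # IP-in-IP encapsulation


def checksum(data):
    """Standard 16-bit ones' complement Internet checksum."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack("!%dH" % (len(data) // 2), data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def ipv4_header(src, dst, protocol, payload_len, ttl=64):
    """Build a 20-byte IPv4 header (no options) with a valid checksum."""
    fields = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20 + payload_len, 0, 0, ttl, protocol, 0,
        socket.inet_aton(src), socket.inet_aton(dst),
    )
    return fields[:10] + struct.pack("!H", checksum(fields)) + fields[12:]


def ipip_encapsulate(inner_packet, outer_src, outer_dst):
    """Prepend an outer IPv4 header whose protocol field says 'IP-in-IP'."""
    outer = ipv4_header(outer_src, outer_dst, PROTO_IPIP, len(inner_packet))
    return outer + inner_packet


inner = ipv4_header("192.168.83.67", "192.168.243.2", PROTO_ICMP, 0)
outer = ipip_encapsulate(inner, "25.25.25.121", "35.35.35.122")
print(len(outer), outer[9], outer[29])  # total length, outer proto, inner proto
```

The physical network only ever sees the outer header, so it forwards on node addresses and never needs a route to the pod ranges.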

- 27. eth0pod1 cali-x bond0 tunl0 192.168.83.64 root 192.168.83.67 Node-121 25.25.25.121 iptables route table eth0pod2 cali-y bond0 tunl0 192.168.243.0 root 192.168.243.2 Node-122 35.35.35.122 iptables route table • After ARP Resolution packet gets forwarded according to the routing table entries • Packets will get encapsulated via IP-IP Packet Forwarding 1 2 3 Calico 8 9 10 4 5 6 7

- 28. eth0pod1 cali-x bond0 tunl0 192.168.83.64 root 192.168.83.67 Node-121 25.25.25.121 iptables route table eth0pod2 cali-y bond0 tunl0 192.168.243.0 root 192.168.243.2 Node-122 35.35.35.122 iptables route table Packet Forwarding in the same host cali-z pod3 192.168.83.68 eth0 eth0 1 3 2 Calico • /32 routes are present in the routing table for all the containers • Packets get forwarded to the appropriate calico veth interface based on the routing rules
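
How the /32 routes win over the broader entries can be shown with a toy longest-prefix-match lookup. The table below is a hand-written approximation of Node-121's routes, assembled from the addresses on these slides (including the .254 gateway from the topology slide); it is not copied from real `ip route` output.

```python
import ipaddress

# (prefix, next hop / device) pairs approximating Node-121's routing table.
ROUTES = [
    ("192.168.83.67/32", "cali-x"),                  # local pod1
    ("192.168.83.68/32", "cali-z"),                  # local pod3
    ("192.168.243.0/26", "tunl0 via 35.35.35.122"),  # pods on Node-122
    ("0.0.0.0/0", "bond0 via 25.25.25.254"),         # default route
]


def lookup(destination):
    """Return the next hop of the most specific route that matches."""
    ip = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), next_hop)
        for prefix, next_hop in ROUTES
        if ip in ipaddress.ip_network(prefix)
    ]
    return max(matches, key=lambda match: match[0].prefixlen)[1]


print(lookup("192.168.83.68"))   # same-host pod: straight to its veth
print(lookup("192.168.243.2"))   # remote pod: via the IP-IP tunnel
```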

- 29. bond0 tunl0 root Node-121 25.25.25.121 iptables route table bond0 tunl0 root Node-122 35.35.35.122 iptables route table • Disable IP-IP Mode Non IP-IP Calico

- 30. bond0 tunl0 root Node-121 25.25.25.121 iptables route table bond0 tunl0 root Node-122 35.35.35.122 iptables route table • Disable IP-IP Mode. tunl0 interface is not present anymore • Routes are pointing to the bond0 interface • Bring up 2 pods as before Non IP-IP ❌ ❌ eth0pod1 192.168.83.69 cali-x eth0pod2 cali-y 192.168.243.4 No more tunl0. Pod routes are directly pointing to bond0 Calico

- 31. bond0 tunl0 root Node-121 25.25.25.121 iptables route table bond0 tunl0 root Node-122 35.35.35.122 iptables route table • Ping from pod1 to pod2 is unsuccessful • Reason: Routes are not advertised to the Network Non IP-IP ❌ ❌ eth0pod1 192.168.83.69 cali-x eth0pod2 cali-y 192.168.243.4 2 1 Calico 3 ❓ Packets won’t go Reason: No routes to the pod networks

- 32. • Need the Calico nodes to peer with the network fabric BGP mode Calico bond0 tunl0 root Node-121 25.25.25.121 iptables route table bond0 tunl0 root Node-122 35.35.35.122 iptables route table ❌ ❌ eth0pod1 192.168.83.69 cali-x eth0pod2 cali-y 192.168.243.4 Bird Bird

- 33. • Create a global BGP configuration • Create the network as a BGP Peer (**Assuming an abstracted cloud network. Config will vary depending on vendor) BGP Mode Calico bond0 tunl0 root Node-121 25.25.25.121 iptables route table bond0 tunl0 root Node-122 35.35.35.122 iptables route table ❌ ❌ eth0pod1 192.168.83.69 cali-x eth0pod2 cali-y 192.168.243.4 Create a BGP configuration with AS:63400 Create a ”global” BGP Peer Both Nodes are peering with the global BGP Peer
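
The two resources might look roughly like the following, written here as Python dictionaries dumped to JSON. This is a sketch under assumptions: it uses the `projectcalico.org/v3` resource kinds, the AS number 63400 comes from the slide, but the peer name, the peer IP `192.0.2.1` and the peer AS `64512` are placeholders, since the slide does not show them.

```python
import json

# Cluster-wide BGP settings; 63400 is the AS number shown on the slide.
bgp_configuration = {
    "apiVersion": "projectcalico.org/v3",
    "kind": "BGPConfiguration",
    "metadata": {"name": "default"},
    "spec": {"asNumber": 63400},
}

# A "global" peer: no node selector, so every Calico node peers with it.
# Name, peerIP and asNumber are hypothetical placeholders.
bgp_peer = {
    "apiVersion": "projectcalico.org/v3",
    "kind": "BGPPeer",
    "metadata": {"name": "fabric-peer"},
    "spec": {"peerIP": "192.0.2.1", "asNumber": 64512},
}

print(json.dumps([bgp_configuration, bgp_peer], indent=2))
```

Leaving out a `node` field in the peer spec is what makes the peering apply to all nodes rather than to a single one.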

- 34. • Configure the Calico nodes as BGP Neighbors on the network • As a result network will get to learn about 192.168.83.64/26 & 192.168.243.0/26 routes BGP Mode Calico bond0 tunl0 root Node-121 25.25.25.121 iptables route table bond0 tunl0 root Node-122 35.35.35.122 iptables route table ❌ ❌ eth0pod1 192.168.83.69 cali-x eth0pod2 cali-y 192.168.243.4 Network is peering with both the Calico Nodes Network is learning pod network routes via BGP

- 35. bond0root Node-121 25.25.25.121 iptables route table bond0root Node-122 35.35.35.122 iptables route table • Packets go across the network without any encapsulation BGP Mode eth0pod1 192.168.83.69 cali-x eth0pod2 cali-y 192.168.243.4 1 2 Calico 7 8 3 4 5 6

- 36. • The Cilium agent, Cilium CLI client and CNI plugin run on every node • The Cilium agent compiles BPF programs and has the kernel run them at key points in the network stack, giving visibility and control over all network traffic in and out of all containers • Cilium interacts with the Linux kernel to install BPF programs, which then perform networking tasks and implement security rules Architecture Cilium eth0 pod1 eth1 pod2 veth1 cbr0 eth0 flannel 0 Node1 *etcd/monitor components are not shown for clarity Cilium Agent BPF Program BPF Program

- 37. • All nodes form a mesh of tunnels using UDP-based encapsulation protocols: VXLAN (default) or Geneve • Simple: the only requirement is that cluster nodes can reach each other using IP/UDP • Auto-configured: when Kubernetes is run with the “--allocate-node-cidrs” option, Cilium can form an overlay network automatically without any configuration by the user Overlay Network Mode Cilium: Networking • In direct routing mode, Cilium hands all packets that are not addressed to another local endpoint to the routing subsystem of the Linux kernel • Packets are routed as if a local process had emitted them • Admins can use a routing daemon such as Zebra, Bird or BGPD. The routing protocol announces the node allocation prefix via the node’s IP to all other nodes Direct/Native Routing Mode

- 38. bond0 cilium_vxlan root Node-121 25.25.25.121 bond0 cilium_vxlan root Node-122 35.35.35.122 • Overlay Networking Mode • VXLAN encapsulation • Both VETH and IPVLAN are supported (higher performance gains with IPVLAN) Default Configuration Cilium cilium_host 192.168.1.1 cilium_health cilium_net cilium_host 192.168.2.1 cilium_health cilium_net

- 39. bond0 cilium_vxlan root Node-121 25.25.25.121 bond0 cilium_vxlan root Node-122 35.35.35.122 • The “cilium_vxlan” interface is in metadata mode. It can send & receive on multiple addresses • Cilium’s addressing model allows the node address to be derived from each container address • This is also used to derive the VTEP address, so you don’t need to run a control plane protocol to distribute these addresses. All you need are routes that make the node addresses routable Default Configuration Cilium cilium_host cilium_health cilium_net cilium_host cilium_health cilium_net ‘cilium_vxlan’ interface is in metadata mode. No IP is assigned to this interface cilium_host 192.168.1.1 cilium_host 192.168.2.1
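
The idea of deriving the tunnel endpoint from the container address can be modelled as a prefix-to-node lookup. This is a conceptual sketch, not Cilium's datapath: the `/24` per-node ranges are an assumption inferred from the `cilium_host` and pod addresses in this deck, and a plain dictionary stands in for whatever structure the BPF programs consult.

```python
import ipaddress

# Assumed per-node pod ranges mapped to the node (VTEP) addresses.
TUNNEL_ENDPOINTS = {
    ipaddress.ip_network("192.168.1.0/24"): "25.25.25.121",  # Node-121
    ipaddress.ip_network("192.168.2.0/24"): "35.35.35.122",  # Node-122
}


def tunnel_endpoint(pod_ip):
    """Return the node address to use as the outer VXLAN destination."""
    ip = ipaddress.ip_address(pod_ip)
    for prefix, node_ip in TUNNEL_ENDPOINTS.items():
        if ip in prefix:
            return node_ip
    return None  # not a pod address known to this sketch


print(tunnel_endpoint("192.168.2.251"))
```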

- 40. bond0 cilium_vxlan root lxc-x eth0pod1 192.168.1.231 Node-121 25.25.25.121 bond0 cilium_vxlan root lxc-y eth0pod2 192.168.2.251 Node-122 35.35.35.122 • VETH Interface with “lxc- xxx” got created on the root namespace • Other end is attached to the pod namespace Pod-to-Pod Communication Cilium cilium_host cilium_health cilium_net cilium_host cilium_health cilium_net

- 41. eth0pod1 lxc-x bond0 cilium_vxlan root 192.168.1.231 Node-121 25.25.25.121 eth0pod2 lxc-y bond0 cilium_vxlan root 192.168.2.251 Node-122 35.35.35.122 • Cilium datapath uses eBPF hooks to load BPF programs • XDP BPF hook is at the earliest point possible in the networking driver and triggers a run of the BPF program upon packet reception • Traffic Control (TC) Hooks: BPF programs are attached to the TC Ingress hook of host side of the VETH pair for monitoring & enforcement Datapath Cilium cilium_host cilium_health cilium_net cilium_host cilium_health cilium_net XDP Hooks TC Ingress Hooks

- 42. eth0pod1 lxc-x bond0 cilium_vxlan root 192.168.1.231 Node-121 25.25.25.121 eth0pod2 lxc-y bond0 cilium_vxlan root 192.168.2.251 Node-122 35.35.35.122 • The default GW of the container points to the IP of “cilium_host” • A BPF program is installed to reply to the ARP request • The LXC interface MAC is used for the ARP reply ARP Resolution Cilium cilium_host 192.168.1.1 cilium_health cilium_net cilium_host cilium_health cilium_net Default GW of the pod is pointing to the ‘cilium_host’ IP BPF program is responding to the ARP request with the VETH’s (lxc-x) MAC 1 ARP Reply

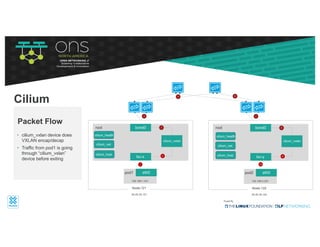

- 43. eth0pod1 lxc-x bond0 cilium_vxlan root 192.168.1.231 Node-121 25.25.25.121 eth0pod2 lxc-y bond0 cilium_vxlan root 192.168.2.251 Node-122 35.35.35.122 • cilium_vxlan device does VXLAN encap/decap • Traffic from pod1 is going through “cilium_vxlan” device before exiting Packet Flow Cilium cilium_health cilium_net cilium_host cilium_health cilium_net 1 2 3 8 9 10 4 5 6 7 cilium_host

- 44. eth0pod1 lxc-x bond0root 192.168.1.231 Node-121 25.25.25.121 eth0pod2 lxc-y bond0root 192.168.2.251 Node-122 35.35.35.122 • No VXLAN/GENEVE overlays • As an admin you are able to run your own flavor of routing daemon (Bird/BGPD/Zebra etc.) to distribute routes • You can make the pod IPs routable as a result Direct Routing Cilium cilium_host cilium_health cilium_net cilium_host cilium_health cilium_net Routing Daemon Routing Daemon

- 45. Service IP Pod1 Pod2 Pod3 Kube Proxy • Pods are mortal • Need a higher-level abstraction: Services • A “Service” in Kubernetes is an abstract concept, not a process/daemon. Outside networks don’t learn Service IP addresses • Implemented through Kube Proxy with iptables rules Services K8S Networking: Basics Yes! Back to the Basics…
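
One detail of the iptables implementation is worth a worked example: rules are evaluated in order, so to spread traffic evenly over n backends, the i-th rule has to match with probability 1/(n-i) rather than 1/n. The sketch below only does the arithmetic; it assumes kube-proxy's iptables mode with random-probability rule matching and does not generate real rules.

```python
from fractions import Fraction


def rule_probabilities(backend_count):
    """Per-rule match probability for sequentially evaluated rules."""
    return [Fraction(1, backend_count - i) for i in range(backend_count)]


def effective_shares(probabilities):
    """Share of traffic each rule actually receives."""
    shares, remaining = [], Fraction(1)
    for probability in probabilities:
        shares.append(remaining * probability)
        remaining *= 1 - probability  # traffic that falls through to later rules
    return shares


probabilities = rule_probabilities(3)   # e.g. Pod1, Pod2, Pod3
print(probabilities, effective_shares(probabilities))
```

With three pods the rules match with probabilities 1/3, 1/2 and 1, and each pod ends up with one third of the connections.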

- 46. • If Services are an abstract concept without any meaning outside of the K8S cluster, how do we access them? Exposing Services K8S Networking: Basics • NodePort / LoadBalancer / Ingress etc. NodePort: Service is accessed via ‘NodeIP:port’ Credit: https://medium.com/google-cloud/kubernetes-nodeport-vs-loadbalancer-vs-ingress-when-should-i-use-what-922f010849e0

- 47. • Load Balancer: Spins up a load balancer and binds service IPs to Load Balancer VIP • Very common in public cloud environments • For baremetal workloads: ‘MetalLB’ (Up & coming project, load-balancer implementation for bare metal K8S clusters, using standard routing protocols) Exposing Services K8S Networking: Basics LoadBalancer: Service is accessed via Loadbalancer Credit: https://medium.com/google-cloud/kubernetes-nodeport-vs-loadbalancer-vs-ingress-when-should-i-use-what-922f010849e0

- 48. • Ingress: K8S concept that lets you decide how to let traffic into the cluster • Sits in front of multiple services and acts as a “router” • Implemented through an ingress controller (NGINX/HAProxy) Exposing Services K8S Networking: Basics Credit: https://medium.com/google-cloud/kubernetes-nodeport-vs-loadbalancer-vs-ingress-when-should-i-use-what-922f010849e0

- 49. • Ingress: Network (“Abstracted Network”) can really help you out here • Ingress controllers are deployed in some of your “public” nodes in your cluster • Eg: Big Cloud Fabric (by Big Switch), can expose a Virtual IP in front of the Ingress Controllers and perform Load Balancing/Health Checks/Analytics Exposing Services K8S Networking: Basics Virtual-IP Kube-1862 Kube-1863

- 50. Thanks! • Repo for all the command outputs/PDF slides: https://github.com/jayakody/ons-2019 • Credits: • Inspired by Life of a Packet- Michael Rubin, Google (https://www.youtube.com/watch?v=0Omvgd7Hg1I) • Sarath Kumar, Prashanth Padubidry : Big Switch Engineering • Thomas Graf, Dan Wendlandt: Isovalent (Cilium Project) • Project Calico Slack Channel: special shout out to: Casey Davenport, Tigera • And so many other folks who took time to share knowledge around this emerging space through different mediums (Blogs/YouTube videos etc)