Rapid Prototyping for XR: Lecture 6 - AI for Prototyping and Research Directions.

- 1. RAPID PROTOTYPING FOR XR Lecture 6: Using AI for Prototyping, Research Directions Mark Billinghurst June 13th 2025

- 2. Friday Schedule • 10:00am Present work • Progress to date, feedback • 10:30am Lecture 6: AI for Rapid Prototyping/Research Directions • 11:30am Research Paper Presentations • Uswah, Sebastien, Chubo • 12:30pm Lunch • 1:30pm Project work • Focus on demo/slide presentation • 5:00pm Research Paper Presentations • Jere, Manato, Vy, Nirasha • 6:30pm Finish

- 4. Piggyback Prototyping • Create prototype on top of existing application • Many applications have creator tools that are geared towards novices • Benefit from existing social platform and user base • Examples • Snapchat Lens Studio & Snapchat platform • Minecraft VR/AR & Minecraft platform • https://www.minecraft.net/en-us/vr • Roblox Creator Hub/Roblox Studio • https://create.roblox.com/landing • Rec Room Creator Kit & Rec Room platform • https://recroom.com/kit

- 5. Web-based AR/VR • WebXR • W3C standards • Open source for AR/VR • Strong developer community • https://www.w3.org/TR/webxr/ • https://immersiveweb.dev/ • A-Frame • JavaScript/HTML editing • WebGL, rich interactivity • https://aframe.io/

- 6. Web vs. A-Frame • Web • Structure & Content: HTML - organization of page content & hierarchy • Presentation: CSS - definition of page content presentation • Behavior: JavaScript - specification of interactive behavior • A-Frame • Structure: Entities - organization of 3D scene hierarchy • Content & Presentation: Components - definition of 3D scene content & presentation • Behavior: Systems & Scripts - specification of interactive behavior

- 7. Unity • Started out as game engine • Has integrated support for many types of XR apps • Powerful scene editor • Asset management & store • Basically all XR device vendors provide Unity SDKs

- 8. Unity vs. A-Frame Unity is a game engine and XR dev platform ● De facto standard for XR apps ● Increasingly built-in support ● Most “XR people” will ask you about your Unity skills :-) Support for all XR devices ● Basically all AR and VR device vendors provide Unity SDKs A-Frame is a declarative WebXR framework ● Emerging XR app development framework on top of THREE.js ● Good for novice XR designers with web dev background Support for most XR devices ● Full WebXR support in Firefox, Chrome, & Oculus Browser

- 9. XR Platforms and Toolkits • VR: A-Frame, SteamVR, MRTK, XR Interaction, Oculus, VIVE • Marker-based AR: AR.js, Vuforia • Marker-less AR: A-Frame, ARKit/ARCore, AR Foundation, MRTK, XR Interaction

- 10. XR Toolkits • Devices: Cardboard, ARKit, ARCore, Oculus, VIVE, HoloLens, WMR, Webcam • Toolkits: A-Frame, AR.js, SteamVR, MRTK, Vuforia, AR Foundation, XR Interaction, WebXR

- 11. Unity XR Interaction Toolkit • High-level, component-based interaction system for XR experiences. • Enables 3D and UI interaction using Unity input events. • Uses Unity’s ‘Input System’ package. • Building upon a sample is the quickest way to start prototyping. • Prefabs are provided for pre-configured objects/sets of objects.

- 12. Meta XR SDK/Building Blocks • Add support for Quest-specific features: • Hand / Eye / Body / Face Tracking • Video passthrough for Mixed Reality • Building store-compatible apps. • New Building Blocks system to drag and drop functionality. • Physics-based hand interactions • Asset store link: https://assetstore.unity.com/packages/tools/integration/meta-xr-all-in-one-sdk-269657

- 13. Design Guidelines • By Vendors: platform driven • By Designers: user oriented • By Practitioners: experience based • By Researchers: empirically derived

- 14. ARCore Elements • Mobile app showing best practice AR UX guidelines • Android .apk file • Live demo of UX guidelines

- 15. The Trouble with XR Design Guidelines • 1) Rapidly evolving best practices • Still a moving target, lots to learn about XR design • Slowly emerging design patterns, but they often change with OS updates • Already major differences between device platforms • 2) Challenges with scoping guidelines • Often too high level, like “keep the user safe and comfortable” • Or too application/device/vendor-specific • 3) Best guidelines come from learning by doing • Test your designs early and often, learn from your own “mistakes” • Mind differences between VR and AR, but less so between devices

- 16. USING AI FOR XR PROTOTYPING

- 17. Using AI for XR Prototyping • Accelerated Content Creation • Gen. AI for 3D Assets, Procedural Content Generation, Optimization • Intelligent Prototyping Tools and Workflows • AI-Assisted Interface Design, User simulation, Real-time Feedback • Enhanced User Experience and Personalization • Adaptive and Context-Aware XR, Natural User Interfaces, Avatars • Data Analysis and Insights • User Analytics, Emotion detection

- 18. CONTENT CREATION

- 19. Generative AI and Content Creation • Many content creation tools • 2D images • Midjourney, DALL-E 3, Imagen 3, Stable Diffusion • 360 images • Blockade Labs, Stable Diffusion, PromeAI • 3D models • Text to 3D - Meshy, Tripo AI, Hunyuan3D • Image to 3D - Alpha3D, Rodin by Hyper 3D • Audio • Suno AI, Udio, ElevenLabs, LoudMe • Video • Luma Dream Machine, Runway ML, Sora

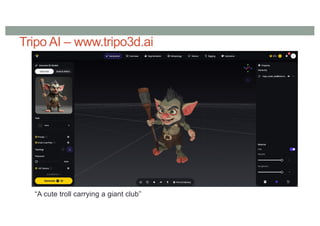

- 21. Tripo AI – www.tripo3d.ai “A cute troll carrying a giant club”

- 22. Rodin – Hyper3D - https://hyper3d.ai/ • 2D image to 3D model

- 23. Procedural Content Generation • Automatically generate virtual content • Landscapes, buildings, objects, and textures generated dynamically • Enables creation of very large environments/experiences • E.g. Minecraft, No Man’s Sky • Automatically generate the world at run time
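As a minimal sketch of run-time procedural generation, the Python below builds a small terrain heightmap from a seed by smoothing random values. The names `generate_heightmap` and `to_blocks`, their parameters, and the neighbour-averaging approach are illustrative assumptions; this is not how Minecraft or No Man's Sky generate their worlds.

```python
import random


def generate_heightmap(width, depth, seed, smooth_passes=2):
    """Return a depth x width grid of heights in [0, 1], deterministic per seed."""
    rng = random.Random(seed)  # local generator: the same seed gives the same world
    grid = [[rng.random() for _ in range(width)] for _ in range(depth)]
    for _ in range(smooth_passes):
        smoothed = []
        for z in range(depth):
            row = []
            for x in range(width):
                # Average each cell with its in-bounds neighbours to soften the noise.
                neighbours = [
                    grid[nz][nx]
                    for nz in range(max(0, z - 1), min(depth, z + 2))
                    for nx in range(max(0, x - 1), min(width, x + 2))
                ]
                row.append(sum(neighbours) / len(neighbours))
            smoothed.append(row)
        grid = smoothed
    return grid


def to_blocks(heightmap, max_height=8):
    """Quantise heights into integer column heights, as a voxel world might."""
    return [[int(h * max_height) for h in row] for row in heightmap]


if __name__ == "__main__":
    world = to_blocks(generate_heightmap(width=8, depth=4, seed=42))
    for row in world:
        print(" ".join(str(h) for h in row))
```

Because the generator is seeded, only the seed needs to be stored or shared to rebuild the same terrain, which is one reason very large worlds can be created at run time.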

- 25. Automatic Asset Optimization • AI optimization of 3D models and textures • Improve real-time performance • Creating a smooth user experience • Example (convrse.ai): 48,000 polygons reduced to 2,800 polygons

- 28. Intelligent Prototyping Tools and Workflows • AI-Assisted Interface Design • Use AI to automate repetitive design tasks • Provide intelligent design suggestions • Automated User Interaction and Behaviour • Simulate user behaviour within a prototype • Test interaction patterns and identify potential usability issues • Real-time Feedback and Iteration • Provide instant feedback on design choices • Enable rapid iteration and refinement of prototypes
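To make "simulate user behaviour within a prototype" concrete, here is a small sketch in which simulated users randomly walk through a prototype modelled as a graph of screens. The `PROTOTYPE` graph, the screen and action names, and `simulate_users` are all hypothetical; a real tool would likely use a learned or scripted user model rather than uniform random choices.

```python
import random

# Hypothetical prototype: each screen maps an action name to the next screen.
PROTOTYPE = {
    "home": {"open_menu": "menu", "start": "scene"},
    "menu": {"back": "home", "settings": "settings"},
    "settings": {},                       # no way out: a usability issue
    "scene": {"grab": "scene", "exit": "home"},
    "credits": {"back": "home"},          # no other screen links here
}


def simulate_users(prototype, start, n_users=200, max_steps=20, seed=0):
    """Random-walk simulated users; return (visit counts, dead-end screens)."""
    rng = random.Random(seed)
    visits = {screen: 0 for screen in prototype}
    dead_ends = set()
    for _ in range(n_users):
        screen = start
        for _ in range(max_steps):
            visits[screen] += 1
            actions = prototype[screen]
            if not actions:
                dead_ends.add(screen)     # the simulated user is stuck here
                break
            # Pick uniformly among the available actions (sorted for determinism).
            screen = actions[rng.choice(sorted(actions))]
    return visits, dead_ends


if __name__ == "__main__":
    visits, dead_ends = simulate_users(PROTOTYPE, "home")
    never_visited = [s for s, n in visits.items() if n == 0]
    print("dead ends:", sorted(dead_ends))
    print("never visited:", never_visited)
```

Even this crude simulation surfaces two kinds of issue: screens users cannot leave and screens users can never reach.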

- 29. Google Gemini Assistance with Prototyping

- 33. Automated User Interaction and Behaviour • User interaction in a VR application can be automated in several ways: • 1. Intelligent Non-Player Characters (NPCs) and Agents • 2. Dynamic Environment and Content Adaptation: changing world • 3. Automated Usability & Accessibility Enhancements: simplify interactions • 4. Advanced Simulation & Optimization: crowds, testing

- 34. Example: Virtual Crowd Testbed https://www.youtube.com/watch?v=qRygOPmcHDQ

- 36. Enhanced User Experiences • Adaptive and Context-Aware XR: • Analyze user behaviour, biometric signals, and environmental factors • Dynamically adjust the virtual world - creates personalized experiences. • Natural User Interfaces (NUIs): • AI-powered gesture recognition, voice commands (NLP), and eye-tracking • More intuitive and natural XR interactions, mimicking real-world actions. • Intelligent NPCs and Avatars: • Drive more realistic non-player characters and avatars • Enabling dynamic conversations, and human-like expressions and movements. • Personalized Learning Paths and Training: • AI can analyze a trainee's performance and adapt the material • Provide targeted feedback, making learning more effective and personalized

- 37. Natural User Interfaces • Using AI to understand user intent • Gesture, gaze understanding • Using AI/ML for recognition • Multimodal understanding • Fusing gesture + speech, gaze + gesture, etc.

- 38. Example: Gaze + Hand Alignment Interaction • Use alignment between gaze and hand for selection in AR • Use AI to recognize alignment of input streams M. Lystbæk, P. Rosenberg, K. Pfeuffer, J. Grønbæk, and H. Gellersen. 2022. Gaze-Hand Alignment: Combining Eye Gaze and Mid-Air Pointing for Interacting with Menus in Augmented Reality. Proc. ACM Hum.-Comput. Interact. 6, ETRA, Article 145
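The alignment idea can be illustrated geometrically: measure the angle between the gaze direction and the ray from the eye to the fingertip, and treat a small angle as a selection. This is a simplified sketch with an assumed fixed threshold (`threshold_deg`) and hypothetical function names; it is not the recognition method used in the cited paper.

```python
import math


def angle_between(u, v):
    """Angle in degrees between two 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("zero-length vector")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


def is_aligned(eye_pos, gaze_dir, fingertip_pos, threshold_deg=3.0):
    """True when the fingertip lies within threshold_deg of the gaze ray."""
    eye_to_hand = tuple(f - e for f, e in zip(fingertip_pos, eye_pos))
    return angle_between(gaze_dir, eye_to_hand) <= threshold_deg


if __name__ == "__main__":
    eye = (0.0, 0.0, 0.0)
    gaze = (0.0, 0.0, 1.0)                         # looking straight ahead
    print(is_aligned(eye, gaze, (0.01, 0.0, 0.5)))  # fingertip almost on the gaze ray
    print(is_aligned(eye, gaze, (0.20, 0.0, 0.5)))  # fingertip well off to the side
```

In practice the threshold would need tuning against tracking noise, and a learned recogniser could replace the fixed cutoff.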

- 40. Intelligent NPCs and Avatars • Using AI to add intelligent conversational characters to XR • Users can have free conversation with them • Speech recognition, dialogue understanding • Characters can interact with one another • Many options • conv.ai, inworld.ai, custom build, etc

- 41. conv.ai - https://convai.com/ • AI driven conversational characters • Actions: Characters can understand the environment and perform actions • Knowledge Bank: Customized domain knowledge for characters • Intelligent Animations: Integrated with Lip-Sync and custom facial/body animations • Unity Integration: Easily add into AR/VR/MR environments, Unity asset plug-in

- 43. Adaptive XR: CAEVR System • AR glasses capable of adding a color filter based on the participant’s emotional state • Low-saturation colors evoke a sense of depression, while high-saturation ones lead people to feel cheerful and pleasant
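A color filter driven by emotional state could be sketched as a saturation adjustment. The policy below (boost saturation as estimated valence drops, leave neutral or positive states unchanged) and the names `saturation_gain` and `apply_filter` are assumptions for illustration only; the slide does not describe how CAEVR maps emotion to a filter.

```python
import colorsys


def saturation_gain(valence, max_boost=0.5):
    """Map valence in [-1, 1] to a saturation multiplier.

    Assumed policy: the lower the valence, the stronger the boost;
    neutral or positive valence leaves colours unchanged (gain 1.0).
    """
    valence = max(-1.0, min(1.0, valence))
    return 1.0 + max_boost * max(0.0, -valence)


def apply_filter(rgb, gain):
    """Scale the saturation of an (r, g, b) colour with channels in [0, 1]."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb(h, min(1.0, s * gain), v)


if __name__ == "__main__":
    pixel = (0.8, 0.4, 0.4)
    for valence in (0.5, -0.5, -1.0):
        print(valence, apply_filter(pixel, saturation_gain(valence)))
```

Working in HSV keeps hue and brightness fixed, so only the saturation described on the slide changes.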

- 44. Enhancing User Experience in VR

- 47. Data Analytics and Insights • User Analytics: • AI can analyze data collected during user testing of XR prototypes • e.g., gaze tracking, dwell times, interaction patterns • Identify popular features, bottlenecks, and areas for improvement • Data-driven approach helps optimize the user experience • Emotion Detection: • Analyze facial microexpressions or voice tone to understand user sentiment • Provides deeper insights into user engagement and satisfaction
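As one example of the user analytics listed above, dwell time per object can be computed from timestamped gaze samples. The sample format `(timestamp_s, target)` and the function name `dwell_times` are assumptions made for this sketch, not the format of any particular analytics SDK.

```python
from collections import defaultdict


def dwell_times(samples):
    """Sum seconds of gaze per target from (timestamp_s, target) samples.

    Each interval between consecutive samples is credited to the target of
    the earlier sample; a target of None means nothing tracked was looked at.
    """
    totals = defaultdict(float)
    ordered = sorted(samples, key=lambda s: s[0])
    for (t0, target), (t1, _) in zip(ordered, ordered[1:]):
        if target is not None:
            totals[target] += t1 - t0
    return dict(totals)


if __name__ == "__main__":
    gaze_log = [
        (0.0, "menu"), (0.5, "menu"), (1.0, None),
        (1.5, "button"), (2.0, "button"), (3.0, "menu"),
    ]
    for target, seconds in sorted(dwell_times(gaze_log).items()):
        print(f"{target}: {seconds:.1f} s")
```

Ranking targets by total dwell time is a simple first step toward spotting popular features and bottlenecks.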

- 48. Cognitive3D - https://cognitive3d.com/ • Capture and analytics for XR • Multiple sensory inputs (eye tracking, HR, EEG, body movement, etc.) • Use AI for data processing • Integrates with Unity/Unreal • Capture user performance during prototyping

- 51. Sensor Enhanced HMDs • Project Galea • EEG, EMG, EDA, PPG, EOG, eye gaze, etc.

- 54. NeuralDrum • Using brain synchronicity to increase connection • Collaborative VR drumming experience • Measure brain activity using 3 EEG electrodes • Use PLV to calculate synchronization • More synchronization increases graphics effects/immersion Pai, Y. S., Hajika, R., Gupta, K., Sasikumar, P., & Billinghurst, M. (2020). NeuralDrum: Perceiving Brain Synchronicity in XR Drumming. In SIGGRAPH Asia 2020 Technical Communications (pp. 1-4).
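Assuming PLV here refers to the phase locking value commonly used in EEG analysis, it can be computed as the magnitude of the mean unit vector of the phase differences between two signals. The sketch below takes instantaneous phases (in radians) as input; extracting those phases from raw EEG, for example via band-pass filtering and a Hilbert transform, is assumed to happen upstream and is not shown. The function name is illustrative and not taken from the NeuralDrum implementation.

```python
import cmath
import math


def phase_locking_value(phases_a, phases_b):
    """PLV = |mean(exp(i * (phase_a - phase_b)))|, a value in [0, 1].

    1 means the phase difference is constant (fully locked);
    values near 0 mean the phase difference is spread around the circle.
    """
    if len(phases_a) != len(phases_b) or not phases_a:
        raise ValueError("phase series must be non-empty and of equal length")
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(total) / len(phases_a)


if __name__ == "__main__":
    n = 100
    player_1 = [0.1 * k for k in range(n)]
    locked = [p - 0.7 for p in player_1]                  # constant phase lag
    drifting = [p - 2 * math.pi * k / n for k, p in enumerate(player_1)]
    print("locked:", round(phase_locking_value(player_1, locked), 3))
    print("drifting:", round(phase_locking_value(player_1, drifting), 3))
```

A value like this, computed over a sliding window, is the kind of signal that could drive the strength of shared visual effects.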

- 55. Set Up • HTC Vive HMD • OpenBCI • 3 EEG electrodes

- 57. NeuralDrum – Brain Synchronisation in XR • Poor Player • Good Player • "It’s quite interesting, I actually felt like my body was exchanged with my partner."

- 59. The Trouble with XR Prototyping Tools • Axes: skill & resources required vs. level of fidelity in AR/VR • Class 1: InVision, Sketch, XD, ... • Class 2: DART, Proto.io, Montage, ... • Class 3: ARToolKit, Vuforia/Lens/Spark AR Studio, ... • Class 4: SketchUp, Blender, ... • Class 5: A-Frame, Unity, Unreal Engine, ... • Immersive Authoring: Tilt Brush, Blocks, Maquette, Pronto, ... • ? • My Research: ProtoAR, 360proto, XRDirector, ...

- 60. Research Directions • Tangible Authoring • Rapid physical-digital authoring • Interactive prototyping using Wizard of Oz • On-device / cross-device / immersive authoring • Visual scripting & asset/code generation • Collaborative authoring

- 61. Tangible AR Interfaces • AR overcomes limitations of TUIs • enhance display possibilities • merge task/display space • provide public and private views • TUI + AR = Tangible AR • Apply TUI methods to AR interface design

- 62. Space vs. Time - Multiplexed • Space-multiplexed • Many devices each with one function • Quicker to use, more intuitive, but adds clutter • E.g. a real toolbox • Time-multiplexed • One device with many functions • Space efficient • E.g. a mouse

- 63. Tangible AR: Tiles (Space Multiplexed) • Tiles semantics • data tiles • operation tiles • Operation on tiles • proximity • spatial arrangements • space-multiplexed Poupyrev, I., Tan, D. S., Billinghurst, M., Kato, H., Regenbrecht, H., & Tetsutani, N. (2001, July). Tiles: A Mixed Reality Authoring Interface. In Interact (Vol. 1, pp. 334-341).

- 64. Space-multiplexed Interface Data authoring in Tiles

- 65. Tiles Demo

- 67. Tangible AR: Time-multiplexed Interaction • Use of natural physical object manipulations to control virtual objects • VOMAR Demo • Catalog book: • Turn over the page • Paddle operation: • Push, shake, incline, hit, scoop Kato, H., Billinghurst, M., Poupyrev, I., Tetsutani, N., & Tachibana, K. (2001, November). Tangible augmented reality for human computer interaction. In Proceedings of NICOGRAPH (Vol. 2001).

- 68. VOMAR Interface

- 70. Immersive Authoring ● Using tangible AR to author simple logic ● Physical blocks represent logic functions Lee, G. A., Nelles, C., Billinghurst, M., & Kim, G. J. (2004, November). Immersive authoring of tangible augmented reality applications. In Third IEEE and ACM International Symposium on Mixed and Augmented Reality (pp. 172-181). IEEE.

- 72. Rapid Physical-Digital Transition ProtoAR by Nebeling et al. (CHI 2018)

- 74. Interactive prototyping using Wizard of Oz https://www.youtube.com/watch?v=k9kNEqPxUME

- 75. Immersive Authoring ● Tablet based video prototyping ○ Sketching in real world ○ Tracking user position changes ○ Direct manipulation of content Leiva, G., Nguyen, C., Kazi, R. H., & Asente, P. (2020, April). Pronto: Rapid augmented reality video prototyping using sketches and enaction. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-13).

- 77. Visual Scripting & Asset/Code Generation https://www.youtube.com/watch?v=-m1QVWlLNDs

- 78. Collaborative Authoring ● Multi-user, cross-device, immersive authoring ○ role-based collaborative authoring ● Uses a Wizard of Oz approach Nebeling, M., Lewis, K., Chang, Y. C., Zhu, L., Chung, M., Wang, P., & Nebeling, J. (2020, April). XRDirector: A role-based collaborative immersive authoring system. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-12).

- 80. Case Study: The Lion King (2019) Nebeling et al.: XRDirector: A Role-Based Collaborative Immersive Authoring System (CHI 2020) Director Actor (VR) Camera (AR) Rafiki Camera

- 82. QUESTIONS

- 83. RESOURCES

- 84. XR Prototyping Web Site • XR Prototyping resources (http://xr-prototyping.org/)

- 85. XR MOOC • XR MOOC on Coursera (http://xrmooc.com) - free audit possible

- 87. Other Books • 3D User Interfaces: Theory and Practice - Joseph J. LaViola Jr., Ernst Kruijff, Ryan P. McMahan, Doug Bowman, Ivan P. Poupyrev • Augmented Reality: Principles and Practice - Dieter Schmalstieg, Tobias Höllerer

- 88. Interaction Design: Beyond Human-Computer Interaction - Helen Sharp, Jennifer Preece, Yvonne Rogers • Note: There is a 6th Edition now

- 89. Prototyping for Designers: Developing the Best Digital and Physical Products - Kathryn McElroy • The VR Book: Human-centered Design for Virtual Reality - Jason Jerald

- 90. Tools and Guidelines • The UX of VR • Low-fi prototyping tools for VR • 3D design patterns for VR • Virtual Reality Human Interface Guidelines