RAPIDS: Speeding Up Pandas and scikit-learn on GPU. Pavel Klemenkov, NVIDIA

- 1. Pavel Klemenkov, Chief Data Scientist @ NVIDIA. RAPIDS: SPEEDING UP PANDAS AND SCIKIT-LEARN

- 2. TYPICAL DS PIPELINE. Diagram labels: All Data, ETL, Manage Data, Structured Data Store, Data Preparation, Training, Model Training, Visualization, Evaluate, Inference, Deploy. Can we test more hypotheses per unit of time?

- 3. TYPICAL DS PIPELINE. Diagram labels: All Data, ETL, Manage Data, Structured Data Store, Data Preparation, Training, Model Training, Visualization, Evaluate, Inference, Deploy. Can we test more hypotheses per unit of time? Hyperparameter optimization.

- 4. RAPIDS: OPEN GPU DATA SCIENCE. Software Stack: Python, CUDA, PYTHON, APACHE ARROW on GPU Memory, DASK, DEEP LEARNING FRAMEWORKS, CUDNN, RAPIDS, CUML, CUDF, CUGRAPH

- 5. GETTING STARTED: rapids.ai getting started; 10 minutes to cuDF

- 7. GroupBy benchmark data:

         import numpy as np
         import pandas as pd
         import cudf

         def randChar(f, numGrp, N):
             # Draw N labels from numGrp formatted group keys
             things = [f.format(x) for x in range(numGrp)]
             return [things[x] for x in np.random.choice(numGrp, N)]

         def randFloat(numGrp, N):
             # Draw N values from numGrp random floats rounded to 4 decimals
             things = [round(100 * np.random.random(), 4) for x in range(numGrp)]
             return [things[x] for x in np.random.choice(numGrp, N)]

         N = int(1e7)
         K = 100
         pdf = pd.DataFrame({
             'id1': randChar("id{0:0=3d}", K, N),      # large groups (char)
             'id2': randChar("id{0:0=3d}", K, N),      # large groups (char)
             'id3': randChar("id{0:0=3d}", N//K, N),   # small groups (char)
             'id4': np.random.choice(K, N),            # large groups (int)
             'id5': np.random.choice(K, N),            # large groups (int)
             'id6': np.random.choice(N//K, N),         # small groups (int)
             'v1': np.random.choice(5, N),             # int in range [0, 4]
             'v2': np.random.choice(5, N),             # int in range [0, 4]
             'v3': randFloat(100, N)                   # numeric e.g. 23.5749
         })
         cdf = cudf.DataFrame.from_pandas(pdf)         # copy the frame to GPU memory
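
To make the group-key format concrete, here is a small CPU-only sketch of what the `randChar` helper produces. It is an illustration, not the benchmark itself: `rand_char` is a renamed stand-in, it uses the standard `random` module instead of NumPy, and the tiny sample size and the `seed` parameter are assumptions added so the snippet runs anywhere.

```python
import random

def rand_char(fmt, num_groups, n, seed=0):
    """Return n labels drawn uniformly from num_groups formatted keys."""
    rng = random.Random(seed)  # seed is an assumption, added for repeatability
    labels = [fmt.format(x) for x in range(num_groups)]
    return [labels[rng.randrange(num_groups)] for _ in range(n)]

# "id{0:0=3d}" zero-pads the group index to at least three digits.
first_label = "id{0:0=3d}".format(7)
# Wider indices (as in the N // K = 100000 group case) are not truncated.
wide_label = "id{0:0=3d}".format(99999)

sample = rand_char("id{0:0=3d}", 100, 10)
print(first_label, wide_label, sample[:3])
```

With `K = 100` this gives keys `id000` to `id099`, which is why `id1` and `id2` form a small number of large groups, while `id3` spreads the same rows over 1e5 keys.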

- 8. BENCHMARK #1: small number of large groups

         %%timeit -r 3 -n 3
         pdf.groupby(['id1']).agg({'v1':'sum'})
         # 776 ms ± 4.8 ms per loop (mean ± std. dev. of 3 runs, 3 loops each)

         %%timeit -r 3 -n 3
         cdf.groupby(['id1']).agg({'v1':'sum'})
         # 21.5 ms ± 1.3 ms per loop (mean ± std. dev. of 3 runs, 3 loops each)

- 9. BENCHMARK #2: multiple groups

         %%timeit -r 3 -n 3
         pdf.groupby(['id1','id2']).agg({'v1':'sum'})
         # 1.79 s ± 10.7 ms per loop (mean ± std. dev. of 3 runs, 3 loops each)

         %%timeit -r 3 -n 3
         cdf.groupby(['id1','id2']).agg({'v1':'sum'})
         # 37.5 ms ± 14.8 ms per loop (mean ± std. dev. of 3 runs, 3 loops each)

- 10. BENCHMARK #3: large number (1e5) of small groups, multiple aggregates

          %%timeit -r 3 -n 3
          pdf.groupby(['id3']).agg({'v1':'sum', 'v3':'mean'})
          # 1.36 s ± 21.9 ms per loop (mean ± std. dev. of 3 runs, 3 loops each)

          %%timeit -r 3 -n 3
          cdf.groupby(['id3']).agg({'v1':'sum', 'v3':'mean'})
          # 53 ms ± 2.42 ms per loop (mean ± std. dev. of 3 runs, 3 loops each)

      Source: GroupBy benchmark notebook

- 11. WAIT A MINUTE…
  - Pandas is single-threaded, but there is Dask
  - cuDF is a single-GPU solution

- 12. WAIT A MINUTE… Dask DataFrame execution

          import dask.dataframe

          ddf = dask.dataframe.from_pandas(pdf, npartitions=8)

          %%timeit -r 3 -n 3
          pdf.groupby(['id1','id2']).agg({'v1':'sum'})
          # 1.79 s ± 10.7 ms per loop (mean ± std. dev. of 3 runs, 3 loops each)

          %%timeit -r 3 -n 3
          ddf.groupby(["id1", "id2"]).agg({'v1': 'sum'}).compute()
          # 1.34 s ± 33.8 ms per loop (mean ± std. dev. of 3 runs, 3 loops each)

          %%timeit -r 3 -n 3
          cdf.groupby(['id1','id2']).agg({'v1':'sum'})
          # 37.5 ms ± 14.8 ms per loop (mean ± std. dev. of 3 runs, 3 loops each)
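
The timings on the benchmark slides can be turned into speedup factors with a few lines of arithmetic. The sketch below only divides the mean times reported on the slides; the dictionary layout and the helper name `speedups_vs_pandas` are illustrative choices, not part of the talk.

```python
# Mean wall-clock times in seconds, copied from the benchmark slides.
timings = {
    "benchmark 1": {"pandas": 0.776, "cudf": 0.0215},
    "benchmark 2": {"pandas": 1.79, "dask": 1.34, "cudf": 0.0375},
    "benchmark 3": {"pandas": 1.36, "cudf": 0.053},
}

def speedups_vs_pandas(run):
    """Return {engine: pandas_time / engine_time} for every non-pandas engine."""
    base = run["pandas"]
    return {engine: base / t for engine, t in run.items() if engine != "pandas"}

results = {name: speedups_vs_pandas(run) for name, run in timings.items()}

for name, ratios in results.items():
    for engine, ratio in ratios.items():
        print(f"{name}: {engine} is {ratio:.1f}x faster than pandas")
```

On these numbers cuDF is roughly 26x to 48x faster than pandas, while Dask with 8 partitions gains only about 1.3x on the two-key groupby.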

- 13. +

- 14. CUML

- 15. cuML algorithms:

      | Category | Algorithm | Notes |
      | --- | --- | --- |
      | Clustering | Density-Based Spatial Clustering of Applications with Noise (DBSCAN) | |
      | | K-Means | Multi-node multi-GPU via Dask |
      | Dimensionality Reduction | Principal Components Analysis (PCA) | Multi-node multi-GPU via Dask |
      | | Truncated Singular Value Decomposition (tSVD) | Multi-node multi-GPU via Dask |
      | | Uniform Manifold Approximation and Projection (UMAP) | |
      | | Random Projection | |
      | | t-Distributed Stochastic Neighbor Embedding (TSNE) | |
      | Linear Models for Regression or Classification | Linear Regression (OLS) | |
      | | Linear Regression with Lasso or Ridge Regularization | |
      | | ElasticNet Regression | |
      | | Logistic Regression | |
      | | Stochastic Gradient Descent (SGD), Coordinate Descent (CD), and Quasi-Newton (QN) (including L-BFGS and OWL-QN) solvers for linear models | |

- 16. cuML algorithms (continued):

      | Category | Algorithm | Notes |
      | --- | --- | --- |
      | Nonlinear Models for Regression or Classification | Random Forest (RF) Classification | Experimental multi-node multi-GPU via Dask |
      | | Random Forest (RF) Regression | Experimental multi-node multi-GPU via Dask |
      | | Inference for decision tree-based models | Forest Inference Library (FIL) |
      | | K-Nearest Neighbors (KNN) | Multi-node multi-GPU via Dask, uses Faiss for Nearest Neighbors Query |
      | | K-Nearest Neighbors (KNN) Classification | |
      | | K-Nearest Neighbors (KNN) Regression | |
      | | Support Vector Machine Classifier (SVC) | |
      | | Epsilon-Support Vector Regression (SVR) | |
      | Time Series | Linear Kalman Filter | |
      | | Holt-Winters Exponential Smoothing | |
      | | Auto-regressive Integrated Moving Average (ARIMA) | |

- 18. START DASK CLUSTER

          from dask.distributed import Client
          from dask_cuda import LocalCUDACluster

          # This will use all GPUs on the local host by default
          cluster = LocalCUDACluster(threads_per_worker=1)
          c = Client(cluster)

          # Query the client for all connected workers
          workers = c.has_what().keys()
          n_workers = len(workers)
          n_streams = 8  # Performance optimization

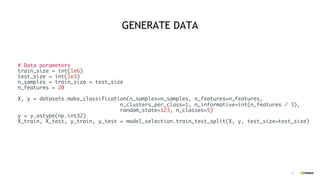

- 19. GENERATE DATA

          import numpy as np
          from sklearn import datasets, model_selection

          # Data parameters
          train_size = int(1e6)
          test_size = int(1e3)
          n_samples = train_size + test_size
          n_features = 20

          X, y = datasets.make_classification(n_samples=n_samples,
                                              n_features=n_features,
                                              n_clusters_per_class=1,
                                              n_informative=int(n_features / 3),
                                              random_state=123,
                                              n_classes=5)
          y = y.astype(np.int32)
          X_train, X_test, y_train, y_test = model_selection.train_test_split(
              X, y, test_size=test_size)

- 20. DISTRIBUTE DATA TO GPUS

          import pandas as pd
          import cudf
          import dask_cudf
          from cuml.dask.common import utils as dask_utils

          n_partitions = n_workers

          # First convert to cudf (with real data, you would likely load in cuDF format to start)
          X_train_cudf = cudf.DataFrame.from_pandas(pd.DataFrame(X_train))
          y_train_cudf = cudf.Series(y_train)

          # Partition with Dask
          # In this case, each worker will train on 1/n_partitions fraction of the data
          X_train_dask = dask_cudf.from_cudf(X_train_cudf, npartitions=n_partitions)
          y_train_dask = dask_cudf.from_cudf(y_train_cudf, npartitions=n_partitions)

          # Persist to cache the data in active memory
          X_train_dask, y_train_dask = dask_utils.persist_across_workers(
              c, [X_train_dask, y_train_dask], workers=workers)

- 21.

- 22. BUILD A SCIKIT-LEARN MODEL

          from sklearn.ensemble import RandomForestClassifier as sklRF

          # Random Forest building parameters
          max_depth = 12
          n_bins = 16
          n_trees = 1000

          %%time
          # Use all available CPU cores
          skl_model = sklRF(max_depth=max_depth, n_estimators=n_trees, n_jobs=-1)
          skl_model.fit(X_train, y_train)
          # CPU times: user 3h 3min 18s, sys: 32.3 s, total: 3h 3min 51s
          # Wall time: 2min 27s

- 23.

- 24. BUILD DISTRIBUTED CUML MODEL

          from dask.distributed import wait
          from cuml.dask.ensemble import RandomForestClassifier as cumlDaskRF

          # Random Forest building parameters
          max_depth = 12
          n_bins = 16
          n_trees = 1000

          %%time
          cuml_model = cumlDaskRF(max_depth=max_depth, n_estimators=n_trees,
                                  n_bins=n_bins, n_streams=n_streams)
          cuml_model.fit(X_train_dask, y_train_dask)
          wait(cuml_model.rfs)  # Allow asynchronous training tasks to finish
          # CPU times: user 133 ms, sys: 24.4 ms, total: 157 ms
          # Wall time: 1.93 s
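
The two `%%time` outputs can be compared directly. The sketch below converts the reported scikit-learn and cuML timings to seconds and derives the wall-clock speedup; the helper `to_seconds` is an illustrative name. The ratio of CPU time to wall time is only a rough indicator of how many cores were busy on average, since the slides do not state the core count.

```python
def to_seconds(hours=0, minutes=0, seconds=0.0):
    """Convert an h/min/s reading from %%time output to seconds."""
    return hours * 3600 + minutes * 60 + seconds

# Values copied from the scikit-learn and cuML training slides.
skl_wall = to_seconds(minutes=2, seconds=27)
skl_cpu_total = to_seconds(hours=3, minutes=3, seconds=51)
cuml_wall = 1.93

wall_speedup = skl_wall / cuml_wall        # how much sooner training finishes
avg_busy_cores = skl_cpu_total / skl_wall  # rough average CPU parallelism

print(f"wall-clock speedup: {wall_speedup:.1f}x")
print(f"average busy CPU cores (estimate): {avg_busy_cores:.0f}")
```

So the distributed cuML fit finishes about 76x sooner than a scikit-learn fit that already kept roughly 75 CPU cores busy.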

- 25. PREDICT AND CHECK ACCURACY

          from sklearn.metrics import accuracy_score

          skl_y_pred = skl_model.predict(X_test)
          cuml_y_pred = cuml_model.predict(X_test)

          # Due to randomness in the algorithm, you may see slight variation in accuracies
          print("SKLearn accuracy: ", accuracy_score(y_test, skl_y_pred))
          print("CuML accuracy: ", accuracy_score(y_test, cuml_y_pred))
          # SKLearn accuracy: 0.899
          # CuML accuracy: 0.886

      Source: Random Forest SNMG demo
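
To put the two accuracy figures in perspective, the sketch below re-implements accuracy as the fraction of matching labels and converts the reported scores back to counts on the 1,000-row test set from the data generation slide. The `accuracy` function and the toy labels are illustrative, not the library implementation.

```python
def accuracy(y_true, y_pred):
    """Fraction of positions where the predicted label equals the true label."""
    matches = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return matches / len(y_true)

# Toy check: four of five labels match.
toy_score = accuracy([0, 1, 2, 2, 4], [0, 1, 2, 3, 4])

test_size = 1000                         # test_size from the data slide
skl_correct = round(0.899 * test_size)   # correct scikit-learn predictions
cuml_correct = round(0.886 * test_size)  # correct cuML predictions
gap = skl_correct - cuml_correct

print(toy_score, skl_correct, cuml_correct, gap)
```

The difference between the two models amounts to 13 test rows out of 1,000.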

- 26. ANY PROBLEMS?

- 27. YES!
  - Still pretty immature and not ready for production
  - Especially Dask
  - Porting UDFs is hard [1, 2]
  - No CPU version (even for inference)
  - No automatic memory management
  - Due to obvious reasons

  References: [1] Apply Operations in cuDF; [2] Numba cuDF integration

- 28. GPU 101

- 29. Hardware trends:

      | | 2010 | 2016 | 2019 | Scale factor |
      | --- | --- | --- | --- | --- |
      | Storage | 50 MB/s (HDD) | 500 MB/s (SATA-SSD) | 2 GB/s (NVMe-SSD) | 40x |
      | Network | 1 Gbit/s | 10 Gbit/s | 40 Gbit/s | 40x |
      | CPU | 500 GFLOPS | 1,200 GFLOPS | 3,000 GFLOPS (18 cores/avx512) | 6x |
      | CPU mem | 40 GB/s | 80 GB/s | 125 GB/s | 3x |
      | GPU | 1,300 GFLOPS | 6,000 GFLOPS | 15,000 GFLOPS | 12x |
      | GPU mem | 150 GB/s | 480 GB/s | 900 GB/s | 6x |
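
The "Scale factor" column is the 2019 value divided by the 2010 value. A short sketch that recomputes it from the figures on the slide (units normalized per row; the dictionary layout is an illustrative choice):

```python
# (2010 value, 2019 value, scale factor stated on the slide), same unit per row.
rows = {
    "Storage, MB/s": (50, 2000, 40),
    "Network, Gbit/s": (1, 40, 40),
    "CPU, GFLOPS": (500, 3000, 6),
    "CPU mem, GB/s": (40, 125, 3),
    "GPU, GFLOPS": (1300, 15000, 12),
    "GPU mem, GB/s": (150, 900, 6),
}

ratios = {name: v2019 / v2010 for name, (v2010, v2019, _) in rows.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}x (slide: {rows[name][2]}x)")
```

The recomputed ratios match the slide after rounding: CPU memory is about 3.1x and GPU compute about 11.5x.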

- 30. GPU vs CPU:

      | | Performance, GFLOPS | Memory bandwidth, GB/s | TDP, W | Price, $ |
      | --- | --- | --- | --- | --- |
      | Nvidia Tesla T4 | 8,100 | 320 | 75 | 3,000 |
      | Intel® Xeon® Gold 6140 | 2,500 | 120 | 140 | 3,000 |
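
Since both devices are listed at the same price, the comparison is easier to read as performance per watt and per dollar. The sketch below derives those ratios from the table values only; the field names and the `efficiency` helper are illustrative.

```python
# Figures copied from the comparison table above.
devices = {
    "Tesla T4": {"gflops": 8100, "mem_gbps": 320, "tdp_w": 75, "price_usd": 3000},
    "Xeon Gold 6140": {"gflops": 2500, "mem_gbps": 120, "tdp_w": 140, "price_usd": 3000},
}

def efficiency(spec):
    """Return GFLOPS per watt and GFLOPS per dollar for one device."""
    return {
        "gflops_per_watt": spec["gflops"] / spec["tdp_w"],
        "gflops_per_usd": spec["gflops"] / spec["price_usd"],
    }

eff = {name: efficiency(spec) for name, spec in devices.items()}
watt_advantage = eff["Tesla T4"]["gflops_per_watt"] / eff["Xeon Gold 6140"]["gflops_per_watt"]
usd_advantage = eff["Tesla T4"]["gflops_per_usd"] / eff["Xeon Gold 6140"]["gflops_per_usd"]

print(f"per watt: {watt_advantage:.1f}x, per dollar: {usd_advantage:.2f}x")
```

On these figures the T4 delivers about 6x more GFLOPS per watt and 3.24x more GFLOPS per dollar.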

- 31. GPU VS CPU ARCHITECTURE

- 32. GPU TAKEAWAYS
  1. GPU memory bandwidth is ~7x higher than CPU memory bandwidth
  2. GPU has thousands of “simple” ALUs
  3. GPU is a peripheral device
     1. The CPU has to launch CUDA kernels on the GPU
     2. The GPU connects to the CPU via PCI Express

- 33. CPU TO GPU IS SLOW! Diagram: CPU to DRAM over DDR4 4ch at 60 GB/s; CPU to GPU over PCIe v4 x16 at 32 GB/s; GPU (Tesla V100) to GPU DRAM over HBM2 at 900 GB/s. 30x performance drop.
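
The "30x performance drop" follows from the bandwidth figures on the slide. A minimal sketch of the arithmetic, using only those three numbers (the dictionary keys are illustrative labels):

```python
# Link bandwidths in GB/s, as given on the slide.
bandwidth = {
    "cpu_dram_ddr4": 60.0,
    "cpu_gpu_pcie": 32.0,
    "gpu_dram_hbm2": 900.0,
}

# How much slower the PCIe link is than each memory system.
hbm_vs_pcie = bandwidth["gpu_dram_hbm2"] / bandwidth["cpu_gpu_pcie"]
ddr_vs_pcie = bandwidth["cpu_dram_ddr4"] / bandwidth["cpu_gpu_pcie"]

print(f"HBM2 vs PCIe: {hbm_vs_pcie:.1f}x, DDR4 vs PCIe: {ddr_vs_pcie:.2f}x")
```

Data that has to cross PCIe moves about 28x slower than data already in GPU memory, which the slide rounds to 30x.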

- 34. GPU BEST PRACTICE
  1. Data must not leave GPU memory!
  2. You will get a performance boost if your dataset is big enough to keep the GPU busy
  3. Use Apache Arrow compatible formats (e.g. Parquet)
  4. Keep an eye on GPUDirect Storage and similar
  5. CUDA is different from what you’re used to. Accept it and make use of it!
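
The first rule can be illustrated with a simple cost model: a GPU step only pays off if its compute time plus the PCIe copies still beats the CPU time. The sketch below is a back-of-the-envelope model, not a measurement. It assumes the 32 GB/s PCIe figure from the earlier slide, reuses the Benchmark #1 timings, and uses a hypothetical 1 GB data size; the function names are illustrative.

```python
PCIE_GBPS = 32.0  # CPU-to-GPU bandwidth from the slide, GB/s

def transfer_seconds(size_gb, bandwidth_gbps=PCIE_GBPS):
    """Time to copy size_gb gigabytes across the link once."""
    return size_gb / bandwidth_gbps

def gpu_pays_off(cpu_s, gpu_s, size_gb, copies=1):
    """True if GPU compute plus `copies` PCIe transfers beats the CPU time."""
    return gpu_s + copies * transfer_seconds(size_gb) < cpu_s

def break_even_gb(cpu_s, gpu_s):
    """Total data volume over PCIe at which the GPU advantage is used up."""
    return (cpu_s - gpu_s) * PCIE_GBPS

cpu_s, gpu_s = 0.776, 0.0215  # Benchmark #1: pandas vs cuDF, seconds
size_gb = 1.0                 # hypothetical data size, not from the talk

one_copy = gpu_pays_off(cpu_s, gpu_s, size_gb, copies=1)
many_copies = gpu_pays_off(cpu_s, gpu_s, size_gb, copies=30)
limit_gb = break_even_gb(cpu_s, gpu_s)

print(one_copy, many_copies, round(limit_gb, 1))
```

Under these assumptions a single copy is cheap, but shuttling the same gigabyte back and forth 30 times costs more than the GPU saves, which is the point of keeping data in GPU memory.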

- 35. USEFUL LINKS
  - RAPIDS
  - RAPIDS DOCS
  - rapids-nightly dockerhub (use it except for production)
  - RAPIDS Notebooks
  - RAPIDS Contributed Notebooks
  - kNN 600x speedup on MNIST (Kaggle notebook)
  - Multi-GPU XGBoost with RAPIDS
  - Dmitry Ursegov presentation for Moscow Spark #7
  - Numba for CUDA GPUs
  - PyCUDA






