Reason To Switch to DNN: DNNs excel in handling huge volumes of data (e.g., images, speech, text).

- 1. Machine Learning (ML) • Supervised Learning • Unsupervised Learning • Reinforcement Learning: learning by trial and error • Overfitting: The model memorizes the training data too closely and fails on new data. • Underfitting: The model is too simple to capture the underlying patterns. • Example (overfitting): A student who memorizes answers without understanding the concepts will struggle with new questions.

- 2. Bias-Variance Tradeoff • Bias: The model makes consistent but possibly incorrect predictions (too simple). • Variance: The model is too sensitive to the training data and doesn’t generalize well. Feature Engineering • Picking the right input variables (features) is crucial. • Example: When predicting car prices, relevant features might be age, mileage, and brand. Gradient Descent • An optimization algorithm that helps ML models learn by minimizing errors. • Example: Imagine you're blindfolded and trying to find the lowest point in a valley by taking small steps downward.

- 3. Evaluation Metrics • How do we know a model is good? • Common metrics: Accuracy, Precision, Recall, F1 Score. • Example: In spam detection, a good model should catch spam emails (high recall) while avoiding flagging real emails as spam (high precision).
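
The four metrics named above can be computed directly from counts of true/false positives and negatives. The following is a minimal sketch for binary labels; the function name `classification_metrics` and the sample labels are hypothetical and chosen only for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels: 1 = spam, 0 = real email.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
```

In this made-up sample, one spam email is missed (which lowers recall) and one real email is flagged (which lowers precision), while accuracy alone would hide which kind of mistake occurred.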

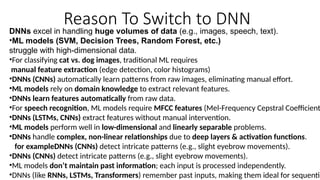

- 4. Reason To Switch to DNN • DNNs excel in handling huge volumes of data (e.g., images, speech, text). • Traditional ML models (SVM, Decision Trees, Random Forest, etc.) struggle with high-dimensional data. • For classifying cat vs. dog images, traditional ML requires manual feature extraction (edge detection, color histograms). • DNNs (CNNs) automatically learn patterns from raw images, eliminating manual effort. • ML models rely on domain knowledge to extract relevant features. • DNNs learn features automatically from raw data. • For speech recognition, ML models require MFCC (Mel-Frequency Cepstral Coefficient) features. • DNNs (LSTMs, CNNs) extract features without manual intervention. • ML models perform well in low-dimensional and linearly separable problems. • DNNs handle complex, non-linear relationships due to deep layers and activation functions. • For example, DNNs (CNNs) detect intricate patterns (e.g., slight eyebrow movements). • Traditional ML models typically don’t maintain past information; each input is processed independently. • DNNs (like RNNs, LSTMs, Transformers) take past inputs into account, making them ideal for sequential data.

- 5. Introduction to Neural Networks Neural networks are capable of learning and identifying patterns directly from data without pre-defined rules.

- 6. Network Structure • Neurons: The basic units that receive inputs. Each neuron is governed by a threshold and an activation function, which together determine how it responds to its input. • Connections: Links between neurons that carry information, regulated by weights and biases. • Weights: Numerical values that represent the strength of the connections between neurons. • Biases: A bias is an additional parameter added to the weighted sum of inputs before applying the activation function. It shifts the activation function, making the model more flexible, and lets the neuron adjust its output independently of the input values. The bias acts like an "offset" that makes the neuron fire more easily (or less easily) relative to the threshold, even when the weighted sum of inputs is zero.

- 7. Threshold and Activation Function • Threshold: A value that determines whether a neuron should "fire", i.e., whether it should activate and pass the signal along. If the sum of the weighted inputs to a neuron exceeds this threshold, the neuron activates (fires). If the sum is below the threshold, the neuron does not activate. In simple terms, the threshold acts as a decision boundary for whether the neuron produces an output. • Activation function: A mathematical function applied to the weighted sum of inputs (after considering the threshold) to decide the output of the neuron. It introduces non-linearity into the model, allowing neural networks to learn complex patterns and make decisions based on the inputs.
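
As an illustration of the threshold rule described above, here is a minimal sketch of a step-activated neuron. The function names, the weights, and the threshold of 1.5 are hypothetical values picked so that the neuron behaves like a logical AND; the second function shows the common convention of folding the threshold into a bias (bias = -threshold) and comparing against zero.

```python
def step_neuron(inputs, weights, threshold):
    """Fire (return 1) only if the weighted sum exceeds the threshold."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0


def step_neuron_with_bias(inputs, weights, bias):
    """Same decision, with the threshold expressed as a bias term."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0


# Hypothetical parameters: with these values the neuron acts as an AND gate.
weights = [1.0, 1.0]
threshold = 1.5

input_pairs = [[a, b] for a in (0, 1) for b in (0, 1)]
and_outputs = [step_neuron(p, weights, threshold) for p in input_pairs]
bias_outputs = [step_neuron_with_bias(p, weights, -threshold) for p in input_pairs]
```

Both versions make the same decision for every input pair, which is why many descriptions of neural networks talk only about biases and leave the threshold implicit.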

- 8. Activation Functions • Sigmoid: Outputs values between 0 and 1, often used in binary classification. $\sigma(x) = \frac{1}{1 + e^{-x}}$ • ReLU (Rectified Linear Unit): Outputs 0 if the input is negative and the input itself if positive, i.e. $\mathrm{ReLU}(x) = \max(0, x)$. It helps mitigate the vanishing gradient problem. • Tanh (Hyperbolic Tangent): Outputs values between -1 and 1. $\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$ • Softmax: Used in multi-class classification, where it outputs a probability distribution over multiple classes.
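
The four activation functions listed above can be written in a few lines of plain Python. This is a sketch for scalar inputs (and a list for softmax), not a production implementation; subtracting the maximum inside `softmax` is an added numerical-stability trick that does not change the result.

```python
import math


def sigmoid(x):
    """Squash x into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    """Return 0 for negative inputs and x itself otherwise."""
    return max(0.0, x)


def tanh(x):
    """Squash x into the range (-1, 1)."""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))


def softmax(scores):
    """Turn a list of scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracted for numerical stability only
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probabilities = softmax([1.0, 2.0, 3.0])
```

The largest score receives the largest probability, and the ordering of the scores is preserved.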

- 9. Propagation • Propagation functions are the mechanisms that process and transfer data across layers of neurons, from the input layer to the output layer. This process is crucial for the network to make predictions or decisions based on the data it's given. There are two main types of propagation in neural networks: forward propagation and backpropagation.

- 10. Forward Propagation • Forward propagation is the process by which input data is passed through the network to compute the output. It involves the following steps: • Input Layer: The raw input data is provided to the input layer of the network. • Weighted Sum Calculation: Each neuron in the hidden layers (and output layer) receives inputs from the previous layer, each input multiplied by its corresponding weight. Then, a bias term is added to the weighted sum of inputs: $z = \sum_{i} w_i x_i + b$, where $x_i$ are the inputs, $w_i$ are the weights, and $b$ is the bias. • Activation Function: After computing the weighted sum, an activation function (like ReLU, sigmoid, etc.) is applied to the result, determining the neuron's output. This output is passed to the next layer. • Layer by Layer Processing: The process of weighted sum calculation followed by activation is repeated layer by layer from the input layer to the hidden layers and eventually to the output layer. • Output Layer: After propagating through all the layers, the final output is generated. This output could represent a prediction, a classification label, or other results depending on the task.
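
The weighted-sum and activation steps above can be sketched as a small forward pass. The 2-2-1 network below, including its weights and biases, is entirely hypothetical; `dense_layer` and `forward` are illustrative helper names, not part of any library.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def dense_layer(inputs, weights, biases, activation):
    """Compute one layer; weights[j] holds the weights of neuron j."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs plus the bias.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def forward(inputs, layers):
    """Pass the inputs through every layer in order."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden neurons (ReLU) -> 1 output (sigmoid).
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0], relu),
    ([[1.0, -1.0]], [0.0], sigmoid),
]
output = forward([1.0, 2.0], layers)
```

For the input `[1.0, 2.0]`, the hidden layer produces `[0.0, 2.0]` (the first neuron's weighted sum is negative, so ReLU outputs 0), and the output neuron applies the sigmoid to a weighted sum of -2.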

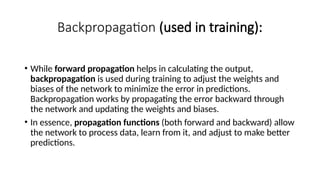

- 11. Backpropagation (used in training): • While forward propagation helps in calculating the output, backpropagation is used during training to adjust the weights and biases of the network to minimize the error in predictions. Backpropagation works by propagating the error backward through the network and updating the weights and biases. • In essence, propagation functions (both forward and backward) allow the network to process data, learn from it, and adjust to make better predictions.

- 12. Key Elements in Propagation: • Weights and Biases: The weighted connections between neurons and the bias terms that influence the neuron’s activation. • Activation Function: Determines the output of each neuron after receiving the weighted sum. • Data Transfer Across Layers: The output of one layer becomes the input to the next, allowing the network to learn complex patterns by processing data layer by layer.

- 13. Learning Rule • The learning rule refers to the method used to adjust the weights and biases over time in order to improve the accuracy of the model. The goal is to minimize the difference between the predicted output and the actual target, which is typically measured by a loss function or error function. The learning rule determines how the parameters (weights and biases) should be updated during the training process. • Key Concepts Involved in the Learning Rule: • Loss Function (or Cost Function): The loss function quantifies how far the network's predictions are from the actual target values. Common examples include Mean Squared Error (MSE) for regression tasks and Cross-Entropy Loss for classification tasks. The loss function provides a numerical value that we aim to minimize during training. A lower loss indicates a better fit to the data. • Gradient Descent: Gradient descent is the most widely used learning rule in neural networks. It is an optimization algorithm used to minimize the loss function by adjusting the weights and biases. The core idea is to take small steps in the opposite direction of the gradient (or derivative) of the loss function with respect to the weights and biases: each parameter $w$ is updated as $w \leftarrow w - \eta \, \frac{\partial L}{\partial w}$, where $L$ is the loss and $\eta$ is the step size (learning rate). This process is repeated iteratively until the loss converges to a minimum.
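
To make the gradient descent idea concrete, here is a minimal sketch that fits a line `y = w*x + b` to four hypothetical data points by repeatedly stepping against the gradient of the MSE loss. The data, the step size of 0.05, and the iteration count are assumptions chosen for illustration only.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the line w*x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_step(w, b, xs, ys, lr):
    """One gradient descent update of w and b."""
    n = len(xs)
    # Partial derivatives of the MSE with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Step in the direction opposite to the gradient.
    return w - lr * grad_w, b - lr * grad_b


# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0
initial_loss = mse(w, b, xs, ys)
for _ in range(2000):
    w, b = gradient_step(w, b, xs, ys, lr=0.05)
final_loss = mse(w, b, xs, ys)
```

After training, `w` and `b` end up close to 2 and 1, the values used to generate the data, and the loss is far below its starting value.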

- 14. Artificial Neural Networks (ANNs) • Structure of Artificial Neurons • An artificial neuron (also called a perceptron) is a mathematical model of a biological neuron. It consists of: • Inputs ($x_1, x_2, \ldots, x_n$): Represent data (e.g., pixel values, numerical features). • Weights ($w_1, w_2, \ldots, w_n$): Each input is assigned a weight, determining its importance. • Summation function: Computes a weighted sum of inputs, $\sum_{i=1}^{n} w_i x_i$.

- 15. How Artificial Neural Networks Work • Feedforward processing: Input data passes through multiple layers (input → hidden → output). • Weight adjustments: Learning occurs through backpropagation, where errors are minimized using optimization techniques like gradient descent. • Activation functions: Sigmoid, ReLU, and softmax functions control neuron output. • Training with large datasets: Unlike biological neurons, ANNs require extensive data and computing power.

- 16. Applications of Neural Networks • 1. Image and Video Processing • Neural networks, especially Convolutional Neural Networks (CNNs), are extensively used in image-related tasks. • 1.1 Facial Recognition • Used in security systems, smartphones (Face ID), and law enforcement. • Example: Facebook's DeepFace and Apple's Face ID. • 1.2 Object Detection and Image Classification • Used in autonomous vehicles, medical imaging, and surveillance. • Example: Google Lens, Tesla’s self-driving technology. • 1.3 Image Generation (GANs, Generative Adversarial Networks) • Creates realistic fake images (deepfakes), artwork, and synthetic human faces. • Example: NVIDIA’s StyleGAN for AI-generated art. • 2. Natural Language Processing (NLP) • Neural networks play a crucial role in understanding and generating human language.

- 17. 2.1 Sentiment Analysis • Analyzes emotions in text (e.g., customer reviews, social media posts). • 2.2 Machine Translation • Converts text between languages. • Example: Google Translate using Transformer models. • 2.3 Chatbots and Virtual Assistants • AI-based assistants that respond to human queries. • Example: Siri, Alexa, ChatGPT.

- 18. Key Components of a Neural Network • Neurons • Layers • Weights • Biases • Activation Function • Loss Function (Cost Function) • Optimization Algorithm • Forward Propagation • Backpropagation • Learning Rate • Epochs and Batch Size

- 19. Neurons • Neurons are the fundamental units of a neural network. They are inspired by biological neurons and are responsible for performing computations. Each neuron receives inputs, processes them, and produces an output. • A neuron performs a weighted sum of its inputs, adds a bias, and then passes the result through an activation function to produce its output. • The steps involved are: 1) Weighted Sum of Inputs: Each neuron receives multiple inputs, multiplies them by their corresponding weights, adds a bias, and computes a weighted sum $z$. 2) Activation: After computing $z$, the neuron applies an activation function to introduce non-linearity. Common activation functions are Sigmoid, ReLU, Tanh, and Softmax. 3) Output Transmission: The activated value is passed as input to the next layer of neurons, or used as the final prediction.

- 20. Layers • Neural networks are organized into layers, each containing multiple neurons. There are typically three types of layers: • Input Layer: This is the first layer of the network, where the raw input data is fed into the network. Each neuron in the input layer represents one feature of the input data. • Hidden Layers: These layers are where most of the computation happens. They are called "hidden" because they are not exposed to the outside world, but they play a crucial role in learning complex patterns. A neural network can have one or more hidden layers. • Output Layer: This layer produces the final output of the network, which could be a prediction, classification, or some other result depending on the task (e.g., regression, classification).

- 21. Weights, Biases, Activation Function • Weights are the parameters that connect neurons between layers. Each connection between two neurons has an associated weight, which determines the strength of the connection. • During training, weights are adjusted to minimize the error between the predicted and actual output. The learning process involves finding the optimal weights for the network. • Biases are additional parameters added to the weighted sum of inputs before applying the activation function. • Bias allows the model to shift the activation function to the left or right, providing more flexibility for the network to fit the data and improve accuracy. • Every neuron, except those in the input layer, typically has a bias. • The activation function is applied to the weighted sum of inputs (including the bias) to introduce non-linearity into the model. Without non-linearity, stacked layers collapse into a single linear transformation, so the network would be no more expressive than a linear regression model, regardless of how many layers it has.
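
The claim that layers without an activation function add no expressive power can be checked on a tiny case. The sketch below uses scalar "layers" with hypothetical parameter values: two stacked linear layers give exactly the same outputs as one linear layer with merged parameters, whereas inserting a ReLU between them breaks that equivalence.

```python
def two_linear_layers(x, w1, b1, w2, b2):
    """Two layers with no activation function in between."""
    return w2 * (w1 * x + b1) + b2


def collapsed_layer(x, w1, b1, w2, b2):
    """A single linear layer with the merged weight and bias."""
    return (w2 * w1) * x + (w2 * b1 + b2)


def two_layers_with_relu(x, w1, b1, w2, b2):
    """The same two layers with a ReLU applied to the hidden value."""
    hidden = max(0.0, w1 * x + b1)
    return w2 * hidden + b2


# Hypothetical parameter values.
params = (2.0, -1.0, 3.0, 0.5)
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]

stacked = [two_linear_layers(x, *params) for x in xs]
merged = [collapsed_layer(x, *params) for x in xs]
with_relu = [two_layers_with_relu(x, *params) for x in xs]
```

`stacked` and `merged` agree at every point, while `with_relu` is flat for the negative inputs and rises afterwards, a shape no single straight line can reproduce.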

- 22. Loss Function (Cost Function) • The loss function measures the difference between the predicted output and the actual target value. The goal during training is to minimize this loss to improve the accuracy of the network. • Common loss functions include: • Mean Squared Error (MSE) for regression tasks. • Cross-Entropy Loss for classification tasks.
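
The two loss functions named above can be sketched in plain Python as follows. The cross-entropy version assumes a single example with a known true class index and a list of predicted class probabilities; the `eps` clamp is an added safeguard against `log(0)`, and the sample values are hypothetical.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_entropy(true_class, probabilities, eps=1e-12):
    """Negative log of the probability assigned to the correct class."""
    return -math.log(max(probabilities[true_class], eps))


# Regression example: only the last prediction is off, by 2.
regression_loss = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])

# Classification example: the true class is index 0 in both cases.
confident_right = cross_entropy(0, [0.9, 0.05, 0.05])
confident_wrong = cross_entropy(0, [0.05, 0.9, 0.05])
```

Cross-entropy penalizes a confident wrong prediction much more heavily than a confident correct one, which is the behavior a classifier's training signal needs.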

- 23. Optimization Algorithm • An optimization algorithm is used to adjust the weights and biases to minimize the loss function. The most common optimization algorithm is Gradient Descent, which iteratively adjusts the weights based on the gradient (derivative) of the loss function. • Variants of gradient descent, such as Stochastic Gradient Descent (SGD), Mini-batch Gradient Descent, and advanced optimizers like Adam, are used to improve the efficiency of training.

- 24. Forward Propagation, Backpropagation • Forward propagation refers to the process of passing input data through the network to calculate the output. • In forward propagation, data is passed from the input layer through the hidden layers, where each neuron applies the weighted sum, adds the bias, and applies the activation function, until it reaches the output layer. • Backpropagation is the process used to train the neural network by adjusting the weights and biases. It involves calculating the gradient of the loss function with respect to each weight and bias in the network (using the chain rule of calculus) and then updating them accordingly to minimize the error. • Backpropagation is combined with the optimization algorithm (like gradient descent) to update the weights in a way that reduces the loss over time.
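
The chain-rule computation behind backpropagation can be sketched on a deliberately tiny network: one input, one sigmoid hidden neuron, one linear output, and a squared-error loss. All parameter values and helper names below are hypothetical. The analytic gradients are compared against finite-difference estimates as a sanity check, and one gradient descent step is then applied.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: returns the hidden activation and the prediction."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y_hat = w2 * h + b2
    return h, y_hat


def loss(params, x, y):
    _, y_hat = forward(params, x)
    return (y_hat - y) ** 2


def backward(params, x, y):
    """Gradients of the loss w.r.t. [w1, b1, w2, b2] via the chain rule."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_y_hat = 2.0 * (y_hat - y)   # dL/dy_hat
    d_w2 = d_y_hat * h            # y_hat = w2*h + b2
    d_b2 = d_y_hat
    d_h = d_y_hat * w2            # error propagated back to the hidden neuron
    d_z = d_h * h * (1.0 - h)     # derivative of the sigmoid is h*(1-h)
    d_w1 = d_z * x                # z = w1*x + b1
    d_b1 = d_z
    return [d_w1, d_b1, d_w2, d_b2]


def numerical_gradient(params, x, y, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grads = []
    for i in range(len(params)):
        plus, minus = list(params), list(params)
        plus[i] += eps
        minus[i] -= eps
        grads.append((loss(plus, x, y) - loss(minus, x, y)) / (2 * eps))
    return grads


# Hypothetical parameters and a single training example.
params = [0.5, -0.3, 0.8, 0.1]
x, y = 1.5, 1.0

analytic = backward(params, x, y)
numeric = numerical_gradient(params, x, y)

# One gradient descent update using the backpropagated gradients.
updated = [p - 0.1 * g for p, g in zip(params, analytic)]
```

The analytic and numerical gradients agree closely, and the loss on this example is lower after the update than before it.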

- 25. Learning Rate, Epochs and Batch Size • The learning rate is a hyperparameter that controls the size of the steps taken during the weight update process in gradient descent. A learning rate that is too large can cause the model to overshoot the optimal solution, while a learning rate that is too small can make training very slow. • Epochs: One epoch refers to a complete pass through the entire training dataset. Multiple epochs are used to train a neural network and improve its accuracy. • Batch Size: In batch training, the dataset is divided into smaller subsets (called batches). The batch size determines the number of training examples used to compute the gradient during each update step.
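
The effect of these three hyperparameters can be illustrated with two small sketches. The first runs gradient descent on the toy loss `L(w) = w**2` with three hypothetical learning rates; the second counts update steps for a made-up dataset of 10 examples, a batch size of 4, and 3 epochs. No real model is trained here; the comment marks where a gradient update would occur.

```python
import random


def minimize_quadratic(lr, steps=20, w=1.0):
    """Gradient descent on L(w) = w**2, whose gradient is 2*w."""
    for _ in range(steps):
        w = w - lr * 2 * w
    return w


w_good = minimize_quadratic(lr=0.1)         # shrinks steadily toward 0
w_too_large = minimize_quadratic(lr=1.1)    # overshoots and moves away from 0
w_too_small = minimize_quadratic(lr=0.001)  # barely moves in 20 steps


def iterate_minibatches(dataset, batch_size, rng):
    """Yield shuffled batches that together cover the dataset once."""
    indices = list(range(len(dataset)))
    rng.shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [dataset[i] for i in indices[start:start + batch_size]]


dataset = list(range(10))  # hypothetical dataset of 10 examples
batch_size = 4
epochs = 3
rng = random.Random(0)

update_steps = 0
seen_per_epoch = []
for epoch in range(epochs):
    seen = []
    for batch in iterate_minibatches(dataset, batch_size, rng):
        # A real training loop would compute the gradient on `batch` here
        # and update the weights once.
        update_steps += 1
        seen.extend(batch)
    seen_per_epoch.append(sorted(seen))
```

With 10 examples and a batch size of 4, each epoch consists of three batches (sizes 4, 4, and 2), so three epochs perform nine updates, and every example is seen exactly once per epoch.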

- 26. Neural Network Representations • A Neural Network Representation refers to the way a neural network is structured mathematically and computationally. It is commonly represented as a weighted graph where: • Components • Nodes (Neurons)