Recommender Systems Content and Collaborative Filtering

- 1. CS246: Mining Massive Datasets. Jure Leskovec, Stanford University. http://cs246.stanford.edu Note to other teachers and users of these slides: We would be delighted if you found our material useful for giving your own lectures. Feel free to use these slides verbatim, or to modify them to fit your own needs. If you make use of a significant portion of these slides in your own lecture, please include this message, or a link to our website: http://www.mmds.org

- 2. High dim. data: Locality sensitive hashing, Clustering, Dimensionality reduction ▪ Graph data: PageRank, SimRank; Community Detection; Spam Detection ▪ Infinite data: Filtering data streams, Web advertising, Queries on streams ▪ Machine learning: SVM, Decision Trees, Perceptron, kNN ▪ Apps: Recommender systems, Association Rules, Duplicate document detection ▪ 1/25/22 Jure Leskovec, Stanford CS246: Mining Massive Datasets

- 3. Customer X ▪ Buys Metallica CD ▪ Buys Megadeth CD Customer Y ▪ Clicks on Metallica album ▪ Recommender system suggests Megadeth from data collected about customer X

- 4. Items reach users through Retrieval or Recommendations. Examples: Products, web sites, blogs, news items, …

- 5. Shelf space is a scarce commodity for traditional retailers ▪ Also: TV networks, movie theaters,… Web enables near-zero-cost dissemination of information about products ▪ From scarcity to abundance More choice necessitates better filters: ▪ Recommendation engines ▪ Association rules: How Into Thin Air made Touching the Void a bestseller: http://www.wired.com/wired/archive/12.10/tail.html

- 6. Source: Chris Anderson (2004)

- 7. Non-personalized recommendations: ▪ Editorial and hand curated ▪ List of favorites ▪ List of “essential” items ▪ Simple aggregates ▪ Top 10, Most Popular, Recent Uploads Personalized recommendations (today’s class): ▪ Tailored to individual users ▪ Examples: Amazon, Netflix, YouTube, …

- 8. X = set of Customers S = set of Items Utility function u: X × S → R ▪ R = set of ratings ▪ R is a totally ordered set ▪ e.g., 1-5 stars, real number in [0,1]

- 9. Example utility matrix (figure): users Alice, Bob, Carol, David; movies Avatar, LOTR, Matrix, Pirates. Only some entries are known (values shown: 0.4, 1, 0.2, 0.3, 0.5, 0.2, 1); the remaining cells are empty.

- 10. (1) Gathering “known” ratings for matrix ▪ How to collect the data in the utility matrix (2) Extrapolating unknown ratings from the known ones ▪ Mainly interested in high unknown ratings ▪ We are not interested in knowing what you don’t like but what you like (3) Evaluating extrapolation methods ▪ How to measure success/performance of recommendation methods

- 11. Explicit ▪ Ask people to rate items ▪ Doesn’t work well in practice – people don’t like being bothered ▪ Crowdsourcing: Pay people to label items Implicit ▪ Learn ratings from user actions ▪ E.g., purchase implies high rating ▪ What about low ratings?

- 12. Key problem: Utility matrix U is sparse ▪ Most people have not rated most items ▪ Cold start: ▪ New items have no ratings ▪ New users have no history Three approaches to recommender systems: ▪ 1) Content-based (today) ▪ 2) Collaborative filtering (today) ▪ 3) Latent factor based

- 14. Main idea: ▪ Items have profiles: ▪ Video -> [genre, director, actors, plot, release year] ▪ News -> [set of keywords] ▪ Recommend items to customer x similar to previous items rated highly by x Example: Movie recommendations ▪ Recommend movies with same actor(s), director, genre, … Websites, blogs, news ▪ Recommend other sites with “similar” content

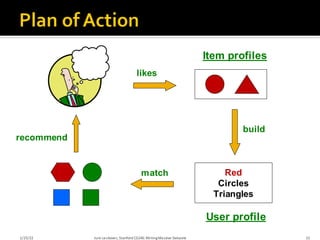

- 15. Figure: from the items a user likes, build item profiles (here: red, circles, triangles) and from them a user profile; match the user profile against item profiles to recommend new items.

- 16. For each item, create an item profile. Profile is a set (vector) of features ▪ Movies: author, title, actor, director,… ▪ Text: Set of “important” words in document How to pick important features? ▪ Usual heuristic from text mining is TF-IDF (Term frequency * Inverse Doc Frequency) ▪ Term corresponds to Feature ▪ Document corresponds to Item

- 17. $f_{ij}$ = frequency of term (feature) $i$ in doc (item) $j$ ▪ $n_i$ = number of docs that mention term $i$ ▪ $N$ = total number of docs ▪ TF-IDF score: $w_{ij} = TF_{ij} \times IDF_i$ ▪ Note: we normalize TF to discount for “longer” documents ▪ Doc profile = set of words with highest TF-IDF scores, together with their scores
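The TF and IDF formulas themselves are not spelled out in the text of this slide. The following is a minimal sketch that assumes the common textbook definitions $TF_{ij} = f_{ij} / \max_k f_{kj}$ and $IDF_i = \log_2(N / n_i)$; the toy documents and the function name `tf_idf` are made up for illustration.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: score} dict per document.

    Assumed definitions (not stated in the slide text):
      TF_ij = f_ij / max_k f_kj   (normalizes for document length)
      IDF_i = log2(N / n_i)
    """
    n_docs = len(docs)
    counts = [Counter(doc) for doc in docs]
    # n_i: number of documents that mention term i
    doc_freq = Counter(term for c in counts for term in c)
    profiles = []
    for c in counts:
        max_f = max(c.values())
        profiles.append({
            term: (f / max_f) * math.log2(n_docs / doc_freq[term])
            for term, f in c.items()
        })
    return profiles

# Hypothetical toy corpus: each document is a list of terms.
docs = [
    ["space", "alien", "space", "war"],
    ["love", "war", "drama"],
    ["space", "robot", "love"],
    ["drama", "court"],
]
profiles = tf_idf(docs)

# Doc profile: the highest-scoring words together with their scores.
top_words = sorted(profiles[0].items(), key=lambda kv: -kv[1])[:2]
```

In this toy corpus, a rare term that appears once ("alien") scores as high as a more common term that appears twice ("space"), which is the effect the IDF factor is meant to produce.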

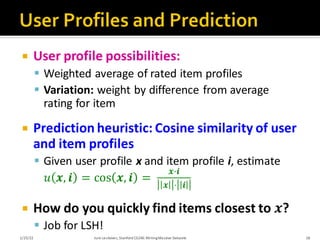

- 18. User profile possibilities: ▪ Weighted average of rated item profiles ▪ Variation: weight by difference from average rating for item Prediction heuristic: Cosine similarity of user and item profiles ▪ Given user profile $\mathbf{x}$ and item profile $\mathbf{i}$, estimate $u(\mathbf{x}, \mathbf{i}) = \cos(\mathbf{x}, \mathbf{i}) = \frac{\mathbf{x} \cdot \mathbf{i}}{\|\mathbf{x}\| \cdot \|\mathbf{i}\|}$ How do you quickly find items closest to $\mathbf{x}$? ▪ Job for LSH!
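A small sketch of the profile-matching heuristic above. The feature vectors and ratings are hypothetical, and the weighting used here (deviation from the user's own mean rating) is only one assumed reading of the "variation" bullet.

```python
import math

def cosine(a, b):
    """cos(a, b) = a.b / (||a|| * ||b||); returns 0.0 if either vector is all zeros."""
    dot = sum(p * q for p, q in zip(a, b))
    norm = math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b))
    return dot / norm if norm else 0.0

# Hypothetical item profiles over three features: [action, romance, sci-fi]
item_profiles = [
    [1.0, 0.0, 1.0],  # item 0
    [0.0, 1.0, 0.0],  # item 1
    [1.0, 0.0, 0.0],  # item 2
    [0.0, 1.0, 1.0],  # item 3 (not yet rated)
    [1.0, 0.0, 0.9],  # item 4 (not yet rated)
]
rated = {0: 5.0, 1: 1.0, 2: 4.0}  # item index -> rating given by user x

# User profile: average of the rated item profiles, each weighted by
# (rating - user's mean rating), so disliked items count negatively.
mean_rating = sum(rated.values()) / len(rated)
n_features = len(item_profiles[0])
user_profile = [
    sum((r - mean_rating) * item_profiles[i][f] for i, r in rated.items()) / len(rated)
    for f in range(n_features)
]

# Score the unrated items by cosine similarity to the user profile.
scores = {i: cosine(user_profile, item_profiles[i]) for i in (3, 4)}
best = max(scores, key=scores.get)
```

Here the user liked the action items and disliked the romance item, so the unrated action/sci-fi item scores higher than the romance/sci-fi one.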

- 19. +: No need for data on other users ▪ No item cold-start problem, no sparsity problem +: Able to recommend to users with unique tastes +: Able to recommend new & unpopular items ▪ No first-rater problem +: Able to provide explanations ▪ Can provide explanations of recommended items by listing content-features that caused an item to be recommended

- 20. –: Finding the appropriate features is hard ▪ E.g., images, movies, music –: Recommendations for new users ▪ How to build a user profile? –: Overspecialization ▪ Never recommends items outside user’s content profile ▪ People might have multiple interests ▪ Unable to exploit quality judgments of other users

- 21. Harnessing quality judgments of other users

- 22. Does not build item profile or user profile. In place of item-profile (user-profile) we use its row (column) in the utility matrix. Comes in two flavors: ▪ User-user collaborative filtering ▪ Item-Item collaborative filtering

- 23. Consider user x ▪ Find set N of other users whose ratings are “similar” to x’s ratings ▪ Estimate x’s ratings based on ratings of users in N

- 24. Let $r_x$ be the vector of user x’s ratings. Example: $r_x = [1, \_, \_, 1, 3]$, $r_y = [1, \_, 2, 2, \_]$ ▪ Jaccard similarity measure: $r_x$, $r_y$ as sets, $r_x = \{1, 4, 5\}$, $r_y = \{1, 3, 4\}$ ▪ Problem: Ignores the value of the rating ▪ Cosine similarity measure: $r_x$, $r_y$ as points, $r_x = [1, 0, 0, 1, 3]$, $r_y = [1, 0, 2, 2, 0]$ ▪ $sim(x, y) = \cos(r_x, r_y) = \frac{r_x \cdot r_y}{\|r_x\| \cdot \|r_y\|}$ ▪ Problem: Treats some missing ratings as “negative” ▪ Pearson correlation coefficient: $S_{xy}$ = items rated by both users x and y; $\bar{r}_x$, $\bar{r}_y$ = avg. rating of x, y ▪ $sim(x, y) = \frac{\sum_{s \in S_{xy}} (r_{xs} - \bar{r}_x)(r_{ys} - \bar{r}_y)}{\sqrt{\sum_{s \in S_{xy}} (r_{xs} - \bar{r}_x)^2} \, \sqrt{\sum_{s \in S_{xy}} (r_{ys} - \bar{r}_y)^2}}$

- 25. Intuitively we want: sim(A, B) > sim(A, C) ▪ Jaccard similarity: 1/5 < 2/4 ▪ Cosine similarity: 0.380 > 0.322, with $sim(x, y) = \frac{\sum_i r_{xi} \cdot r_{yi}}{\sqrt{\sum_i r_{xi}^2} \cdot \sqrt{\sum_i r_{yi}^2}}$ ▪ Considers missing ratings as “negative” ▪ Solution: subtract the (row) mean; then sim(A, B) vs. sim(A, C): 0.092 > -0.559 ▪ Notice that cosine similarity is correlation when the data is centered at 0
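The ratings of users A, B, and C are not included in this transcript. The sketch below assumes the small utility matrix from the MMDS textbook example (item names `HP1`, `TW`, `SW1`, and so on are part of that assumption); with these ratings the three measures reproduce the numbers quoted on the slide.

```python
import math

# Assumed ratings (textbook example; not shown in this transcript).
ratings = {
    "A": {"HP1": 4, "TW": 5, "SW1": 1},
    "B": {"HP1": 5, "HP2": 5, "HP3": 4},
    "C": {"TW": 2, "SW1": 4, "SW2": 5},
}

def jaccard(rx, ry):
    """Overlap of the sets of rated items; ignores the rating values."""
    sx, sy = set(rx), set(ry)
    return len(sx & sy) / len(sx | sy)

def cosine(rx, ry):
    """Cosine of the rating vectors, with missing ratings treated as 0."""
    dot = sum(rx[i] * ry[i] for i in rx.keys() & ry.keys())
    nx = math.sqrt(sum(v * v for v in rx.values()))
    ny = math.sqrt(sum(v * v for v in ry.values()))
    return dot / (nx * ny)

def centered(rx):
    """Subtract the user's mean rating from each of the user's known ratings."""
    mean = sum(rx.values()) / len(rx)
    return {i: v - mean for i, v in rx.items()}

def centered_cosine(rx, ry):
    """Cosine after mean-centering each user's row (missing entries stay 0)."""
    return cosine(centered(rx), centered(ry))

A, B, C = ratings["A"], ratings["B"], ratings["C"]
jac = (jaccard(A, B), jaccard(A, C))                   # 1/5 vs. 2/4
cos = (cosine(A, B), cosine(A, C))                     # about 0.380 vs. 0.322
cen = (centered_cosine(A, B), centered_cosine(A, C))   # about 0.092 vs. -0.559
```

Only the centered version separates the two pairs clearly: A and C rate the same items in opposite ways, and centering turns that disagreement into a negative similarity.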

- 26. From similarity metric to recommendations: ▪ Let $r_x$ be the vector of user x’s ratings ▪ Let N be the set of k users most similar to x who have rated item i ▪ Prediction for item i of user x: ▪ $r_{xi} = \frac{1}{k} \sum_{y \in N} r_{yi}$ ▪ Or even better: $r_{xi} = \frac{\sum_{y \in N} s_{xy} \cdot r_{yi}}{\sum_{y \in N} s_{xy}}$, with shorthand $s_{xy} = sim(x, y)$ ▪ Many other tricks possible…
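Both prediction rules on this slide can be sketched in a few lines. The function name `predict_user_user`, the data layout (nested dicts), and all numbers are illustrative assumptions; similarities are taken as given rather than computed.

```python
def predict_user_user(x, item, ratings, sim, k=2, weighted=True):
    """Predict r_xi from the k users most similar to x who have rated `item`.

    ratings: {user: {item: rating}}, sim: {user: {other_user: similarity}}.
    Returns None when no prediction can be made.
    """
    candidates = [y for y in ratings if y != x and item in ratings[y]]
    neighbors = sorted(candidates, key=lambda y: sim[x][y], reverse=True)[:k]
    if not neighbors:
        return None
    if not weighted:
        # Plain average: r_xi = (1/k) * sum of r_yi over the neighbors
        return sum(ratings[y][item] for y in neighbors) / len(neighbors)
    den = sum(sim[x][y] for y in neighbors)
    if den == 0:
        return None
    # Similarity-weighted average: sum(s_xy * r_yi) / sum(s_xy)
    return sum(sim[x][y] * ratings[y][item] for y in neighbors) / den

# Hypothetical data: x has not rated "m2"; three other users have.
ratings = {"x": {"m1": 4}, "y1": {"m2": 5}, "y2": {"m2": 3}, "y3": {"m2": 1}}
sim = {"x": {"y1": 0.8, "y2": 0.2, "y3": -0.5}}

plain = predict_user_user("x", "m2", ratings, sim, weighted=False)
weighted = predict_user_user("x", "m2", ratings, sim)
```

With these numbers the plain average is 4.0, while the weighted average is pulled towards the rating of the most similar user (about 4.6). The sketch simply keeps the top k by similarity; a real system would also need a policy for neighbors with negative similarity.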

- 27. So far: User-user collaborative filtering. Another view: Item-item ▪ For item i, find other similar items ▪ Estimate rating for item i based on ratings for similar items ▪ Can use same similarity metrics and prediction functions as in user-user model ▪ $r_{xi} = \frac{\sum_{j \in N(i;x)} s_{ij} \cdot r_{xj}}{\sum_{j \in N(i;x)} s_{ij}}$ ▪ $s_{ij}$ … similarity of items i and j ▪ $r_{xj}$ … rating of user x on item j ▪ $N(i;x)$ … set of items which were rated by x and similar to i

- 28. Utility matrix (figure): 6 movies (rows) × 12 users (columns). Filled cells hold a rating between 1 and 5; empty cells are unknown ratings.

- 29. Utility matrix (figure): 6 movies × 12 users, with the cell for movie 1 and user 5 marked “?”. Goal: estimate rating of movie 1 by user 5.

- 30. Neighbor selection: Identify movies similar to movie 1, rated by user 5. Here we use Pearson correlation as similarity: 1) Subtract mean rating $m_i$ from each movie i: $m_1 = (1+3+5+5+4)/5 = 3.6$, row 1: [-2.6, 0, -0.6, 0, 0, 1.4, 0, 0, 1.4, 0, 0.4, 0] 2) Compute dot products between the centered rows, normalized by the row norms (i.e., cosine similarity). Resulting similarities $s_{1,m}$ for movies m = 1, …, 6: 1.00, -0.18, 0.41, -0.10, -0.31, 0.59

- 31. Compute similarity weights: $s_{1,3} = 0.41$, $s_{1,6} = 0.59$ (taken from $s_{1,m}$ = 1.00, -0.18, 0.41, -0.10, -0.31, 0.59 for movies m = 1, …, 6)

- 32. Predict by taking weighted average: $r_{1,5} = (0.41 \cdot 2 + 0.59 \cdot 3) / (0.41 + 0.59) = 2.6$, where 2 and 3 are user 5’s ratings of movies 3 and 6 ▪ $r_{ix} = \frac{\sum_{j \in N(i;x)} s_{ij} \cdot r_{jx}}{\sum_{j \in N(i;x)} s_{ij}}$
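The arithmetic of the worked example can be checked with a short script. It uses only numbers quoted on the slides (movie 1's known ratings, the two similarity weights, and user 5's ratings of movies 3 and 6); the variable names are illustrative.

```python
# Movie 1's row of the utility matrix; None marks an unknown rating.
row1 = [1, None, 3, None, None, 5, None, None, 5, None, 4, None]

# Step 1: subtract the movie's mean rating; unknown entries become 0.
known = [r for r in row1 if r is not None]
m1 = sum(known) / len(known)
centered_row1 = [round(r - m1, 1) if r is not None else 0 for r in row1]

# Step 3: weighted average over the neighbors of movie 1 rated by user 5.
# movie j -> (similarity s_1j, rating of movie j by user 5)
neighbors = {3: (0.41, 2), 6: (0.59, 3)}
num = sum(s * r for s, r in neighbors.values())
den = sum(s for s, _ in neighbors.values())
prediction = num / den  # about 2.59, shown as 2.6 on the slide
```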

- 33. Define similarity $s_{ij}$ of items i and j ▪ Select k nearest neighbors $N(i; x)$ ▪ Items most similar to i, that were rated by x ▪ Estimate rating $r_{xi}$ as the weighted average. Before: $r_{xi} = \frac{\sum_{j \in N(i;x)} s_{ij} \cdot r_{xj}}{\sum_{j \in N(i;x)} s_{ij}}$ ▪ With a baseline: $r_{xi} = b_{xi} + \frac{\sum_{j \in N(i;x)} s_{ij} \cdot (r_{xj} - b_{xj})}{\sum_{j \in N(i;x)} s_{ij}}$ ▪ $b_{xi} = \mu + b_x + b_i$ = baseline estimate for $r_{xi}$ ▪ $\mu$ = overall mean movie rating ▪ $b_x$ = rating deviation of user x = (avg. rating of user x) – $\mu$ ▪ $b_i$ = rating deviation of movie i
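A minimal sketch of the baseline-corrected estimate. All numbers and the function names `baseline` and `predict_with_baseline` are hypothetical; the neighbor similarities and baselines are supplied by hand rather than computed from data.

```python
def baseline(mu, b_user, b_item):
    """b_xi = mu + b_x + b_i."""
    return mu + b_user + b_item

def predict_with_baseline(b_xi, neighbors):
    """r_xi = b_xi + sum(s_ij * (r_xj - b_xj)) / sum(s_ij).

    neighbors: list of (s_ij, r_xj, b_xj) for each item j in N(i; x).
    """
    num = sum(s * (r - b) for s, r, b in neighbors)
    den = sum(s for s, _, _ in neighbors)
    return b_xi + num / den

# Hypothetical values: overall mean 3.7, a slightly critical user (-0.2),
# and a movie rated above average (+0.5).
b_xi = baseline(mu=3.7, b_user=-0.2, b_item=0.5)

# Two neighbor items: the user rated one above and one below its baseline.
neighbors = [(0.6, 4.5, 3.5), (0.4, 3.0, 3.5)]
estimate = predict_with_baseline(b_xi, neighbors)
```

The neighbors only contribute their deviations from their own baselines (here +1.0 and -0.5), so the estimate moves from the baseline 4.0 to about 4.4.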

- 34. Example utility matrix (figure): users Alice, Bob, Carol, David; movies Avatar, LOTR, Matrix, Pirates (values shown: 0.4, 1, 0.8, 1, 0.9, 0.3, 0.5, 0.8, 1). In practice, it has been observed that item-item often works better than user-user. Why? Items are “simpler”, users have multiple tastes

- 35. + Works for any kind of item ▪ No feature selection needed - Cold Start: ▪ Need enough users in the system to find a match - Sparsity: ▪ The user/ratings matrix is sparse ▪ Hard to find users that have rated the same items - First rater: ▪ Cannot recommend an item that has not been previously rated ▪ New items, Esoteric items - Popularity bias: ▪ Cannot recommend items to someone with unique taste ▪ Tends to recommend popular items

- 36. Implement two or more different recommenders and combine predictions ▪ Perhaps using a linear model Add content-based methods to collaborative filtering ▪ Item profiles for new item problem ▪ Demographics to deal with new user problem
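One simple way to combine two recommenders with a linear model is a convex blend whose weight is tuned on held-out ratings. This is only a sketch of that idea: the grid search, the `blend` helper, and the validation triples are assumptions, not something the slide prescribes.

```python
def blend(p_cf, p_content, w):
    """Linear combination of two predictions with weight w in [0, 1]."""
    return w * p_cf + (1 - w) * p_content

# Hypothetical held-out data:
# (collaborative-filtering prediction, content-based prediction, true rating)
validation = [(4.0, 3.0, 3.5), (2.0, 4.0, 3.0), (5.0, 4.0, 4.5)]

def squared_error(w):
    return sum((blend(a, b, w) - t) ** 2 for a, b, t in validation)

# Pick the weight on a coarse grid 0.0, 0.1, ..., 1.0 that minimizes the error.
best_w = min((i / 10 for i in range(11)), key=squared_error)
```

For a new item with no ratings, a system could fall back to the content-based prediction alone, which corresponds to `w = 0` in this blend.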

- 37. Evaluation ▪ Error metrics ▪ Complexity / Speed

- 38. Figure: utility matrix of users and movies with known ratings (1 to 5).

- 39. Figure: utility matrix of users and movies in which some known ratings are withheld (shown as “?”) to form the Test Data Set.

- 40. Compare predictions with known ratings ▪ Root-mean-square error (RMSE) ▪ $\sqrt{\frac{1}{N} \sum_{xi} (r_{xi} - r_{xi}^*)^2}$ where $r_{xi}$ is predicted, $r_{xi}^*$ is the true rating of x on i ▪ N is the number of points we are making comparisons on ▪ Precision at top 10: ▪ % of relevant items in top 10 Another approach: 0/1 model ▪ Coverage: ▪ Number of items/users for which the system can make predictions ▪ Precision: ▪ Accuracy of predictions ▪ Receiver operating characteristic (ROC) ▪ Tradeoff curve between false positives and false negatives
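The two headline metrics can be sketched as follows. The data layout (dicts keyed by `(user, item)` pairs, a ranked list of item ids) and the sample values are assumptions made for the example.

```python
import math

def rmse(predicted, actual):
    """Root-mean-square error over the (user, item) pairs present in both dicts."""
    pairs = predicted.keys() & actual.keys()
    return math.sqrt(sum((predicted[p] - actual[p]) ** 2 for p in pairs) / len(pairs))

def precision_at_k(ranked_items, relevant, k=10):
    """Fraction of the top-k recommended items that are relevant."""
    top = ranked_items[:k]
    return sum(1 for item in top if item in relevant) / len(top)

# Hypothetical predictions and held-out true ratings.
predicted = {("u1", "m1"): 4.0, ("u1", "m2"): 2.0, ("u2", "m1"): 5.0}
actual = {("u1", "m1"): 5.0, ("u1", "m2"): 2.0, ("u2", "m1"): 3.0}
error = rmse(predicted, actual)  # sqrt((1 + 0 + 4) / 3)

# Hypothetical ranked recommendation list and set of relevant items.
p_at_2 = precision_at_k(["a", "b", "c", "d"], relevant={"a", "c"}, k=2)
```

RMSE weights every test rating equally, whereas precision at top k looks only at the head of the ranked list.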

- 41. Narrow focus on accuracy sometimes misses the point ▪ Prediction Diversity ▪ Prediction Context ▪ Order of predictions In practice, we care only to predict high ratings: ▪ RMSE might penalize a method that does well for high ratings and badly for others

- 42. Expensive step is finding k most similar customers: O(|X|) ▪ Too expensive to do at runtime ▪ Could pre-compute ▪ Naïve pre-computation takes time O(k ·|X|) ▪ X … set of customers We already know how to do this! ▪ Near-neighbor search in high dimensions (LSH) ▪ Clustering ▪ Dimensionality reduction

- 43. Leverage all the data ▪ Don’t try to reduce data size in an effort to make fancy algorithms work ▪ Simple methods on large data do best Add more data ▪ e.g., add IMDB data on genres More data beats better algorithms http://anand.typepad.com/datawocky/2008/03/more-data-usual.html

- 45. Training data ▪ 100 million ratings, 480,000 users, 17,770 movies ▪ 6 years of data: 2000-2005 Test data ▪ Last few ratings of each user (2.8 million) ▪ Evaluation criterion: root mean squared error (RMSE) ▪ Netflix Cinematch RMSE: 0.9514 Competition ▪ 2,700+ teams ▪ $1 million prize for 10% improvement on Cinematch

- 46. Next topic: Recommendations via Latent Factor models ▪ Figure: “Overview of Coffee Varieties”, a map of products (groups: Flavored, Exotic, Popular Roasts and Blends) with axes Complexity of Flavor and Exoticness / Price. The bubbles represent products sized by sales volume. Products close to each other are recommended to each other.

- 47. Figure [Bellkor Team]: movies and two users (Gus, Dave) placed along two dimensions: geared towards females vs. geared towards males, and serious vs. escapist. Movies shown: The Princess Diaries, The Lion King, Braveheart, Independence Day, Amadeus, The Color Purple, Ocean’s 11, Sense and Sensibility, Lethal Weapon, Dumb and Dumber

- 48. Koren, Bell, Volinsky, IEEE Computer, 2009




