Recurrent Neural Network (RNN) | RNN LSTM Tutorial | Deep Learning Course | Simplilearn

- 2. What’s in it for you? Topics covered: what is a neural network; popular neural networks; why recurrent neural networks; what is a recurrent neural network; how an RNN works; the vanishing and exploding gradient problem; Long Short-Term Memory (LSTM); a use case implementation of LSTM.

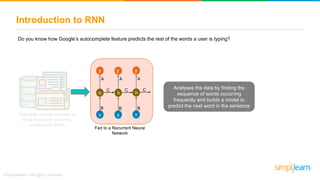

- 5. Introduction to RNN: Do you know how Google’s autocomplete feature predicts the rest of the words a user is typing? A large collection of the most frequently occurring consecutive words is fed to a recurrent neural network. The network analyses the data by finding the sequences of words that occur frequently and builds a model to predict the next word in the sentence. Example: for the Google search “What is the best food to eat in Las Vegas”, the search is autocompleted.

- 9. What is a Neural Network? Neural networks used in deep learning consist of different layers connected to each other and are modeled on the structure and functions of the human brain. A network learns from huge volumes of data and is trained using complex algorithms. Example: image pixels of two different breeds of dog (German Shepherd and Labrador) enter the input layer, pass through the hidden layers, and the output layer identifies the dog's breed. Such networks do not need to remember past outputs.

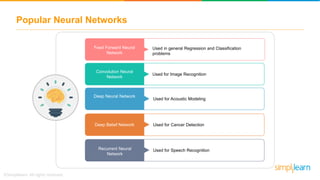

- 10. Popular Neural Networks: feed-forward neural network, used in general regression and classification problems; convolutional neural network, used for image recognition; deep neural network, used for acoustic modeling; deep belief network, used for cancer detection; recurrent neural network, used for speech recognition.

- 11. Feed Forward Neural Network: In a feed-forward network, information flows only in the forward direction, from the input nodes, through the hidden layers (if any), to the output nodes. There are no cycles or loops in the network. In simplified form, an input x is mapped to a predicted output ŷ. Decisions are based on the current input only; there is no memory of the past and no future scope.

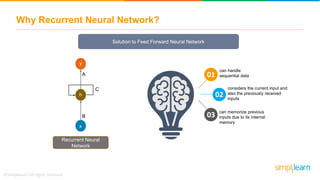

- 12. Why Recurrent Neural Network? Issues in a feed-forward neural network: (1) it cannot handle sequential data; (2) it considers only the current input; (3) it cannot memorize previous inputs.

- 13. Why Recurrent Neural Network? The recurrent neural network addresses these issues: (1) it can handle sequential data; (2) it considers the current input and also the previously received inputs; (3) it can memorize previous inputs due to its internal memory.

- 14. Applications of RNN: Image captioning. An RNN is used to caption an image by analyzing the activities present in it, for example “A dog catching a ball in mid air”.

- 15. Applications of RNN: Time series prediction. Any time series problem, like predicting the prices of stocks in a particular month, can be modelled using an RNN.

- 16. Applications of RNN: Natural language processing. Text mining and sentiment analysis can be carried out using an RNN. For example, “When it rains, look for rainbows. When it’s dark, look for stars.” is classified as positive sentiment.

- 17. Applications of RNN: Machine translation. Given an input in one language, an RNN can be used to translate the input into different languages as output. In the illustrated example, a person speaking in English is translated into Chinese, Italian, French, German and Spanish.

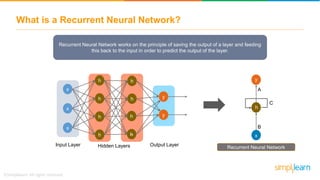

- 18. What is a Recurrent Neural Network? A recurrent neural network works on the principle of saving the output of a layer and feeding it back to the input in order to predict the output of the layer. The diagram shows the input layer (x), hidden layers (h) and output layer (y), alongside the recurrent unit with input x, state h, output y and parameters A, B and C.

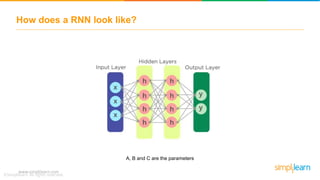

- 19. What does an RNN look like? A, B and C are the parameters.

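- 21. How does an RNN work? The state is updated as $h(t) = f_c(h(t-1), x(t))$, where $h(t)$ is the new state, $f_c$ is a function with parameter $c$, $h(t-1)$ is the old state, and $x(t)$ is the input vector at time step $t$. Unrolled over time, the same parameters A, B and C are applied at steps t-1, t and t+1: inputs x(t-1), x(t), x(t+1) update the states h(t-1), h(t), h(t+1), which produce the outputs y(t-1), y(t), y(t+1).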
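
The recurrence on this slide can be sketched in a few lines of plain Python. This is a minimal illustration, not the deck's implementation: the choice of tanh as the function, the weight names `W_xh`, `W_hh`, `b_h`, and the toy sizes are all assumptions made for the example.

```python
import math

def rnn_step(h_prev, x_t, W_xh, W_hh, b_h):
    """One recurrence step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    h_t = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x_t[j] for j in range(len(x_t)))
        total += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t

# Hypothetical toy sizes: 2 input features, 3 hidden units.
W_xh = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
W_hh = [[0.1, 0.0, 0.2], [0.0, 0.3, -0.1], [0.2, -0.2, 0.1]]
b_h = [0.0, 0.0, 0.0]

h = [0.0, 0.0, 0.0]  # initial state
for x_t in [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]:
    # The same weights are reused at every time step.
    h = rnn_step(h, x_t, W_xh, W_hh, b_h)
```

Because `h` is carried from one step to the next, the final state depends on the whole input sequence and not only on the last input.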

- 22. Types of Recurrent Neural Network: one to one (single input, single output). A one-to-one network is known as the vanilla neural network and is used for regular machine learning problems.

- 23. Types of Recurrent Neural Network: one to many (single input, multiple outputs). A one-to-many network generates a sequence of outputs. Example: image captioning.

- 24. Types of Recurrent Neural Network: many to one (multiple inputs, single output). A many-to-one network takes in a sequence of inputs. Example: sentiment analysis, where a given sentence is classified as expressing positive or negative sentiment.

- 25. Types of Recurrent Neural Network: many to many (multiple inputs, multiple outputs). A many-to-many network takes in a sequence of inputs and generates a sequence of outputs. Example: machine translation.
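
The difference between the many-to-one and many-to-many layouts comes down to which outputs are kept. The sketch below uses a scalar toy RNN with made-up weights (`w_x`, `w_h`, `w_y` are hypothetical) and the simplest many-to-many form, one output per input step; real translation systems are more involved.

```python
import math

def run_rnn(inputs, w_x=0.8, w_h=0.5, w_y=1.0):
    """Scalar toy RNN; returns the output produced at every time step."""
    h = 0.0
    outputs = []
    for x_t in inputs:
        h = math.tanh(w_x * x_t + w_h * h)  # state update
        outputs.append(w_y * h)             # per-step output
    return outputs

sequence = [0.2, -0.1, 0.7, 0.4]

many_to_many = run_rnn(sequence)      # one output per input step
many_to_one = run_rnn(sequence)[-1]   # keep only the final output
```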

- 26. Vanishing Gradient Problem: While training an RNN, the slope (the gradient, that is, the change in Y over the change in X) can be either too small or very large, and this makes training difficult. When the slope is too small, the problem is known as the vanishing gradient. As the error is backpropagated through time, from the later errors (E3) back through the states s3, s2, s1 and s0, information is lost.

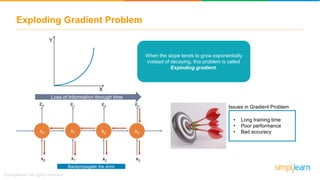

- 27. Exploding Gradient Problem: When the slope tends to grow exponentially instead of decaying, the problem is called the exploding gradient. Issues caused by gradient problems: long training time, poor performance, and bad accuracy.
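
A rough way to see why gradients vanish or explode is to look at a deliberately simplified linear recurrence, h_t = w * h_{t-1}. This is an assumption made for illustration (a real RNN also has an activation function and matrix weights), but it shows the repeated multiplication that backpropagation through time performs.

```python
def gradient_through_time(w, steps):
    """d h_T / d h_0 for the linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule: one factor of w per time step
    return grad

vanishing = gradient_through_time(0.5, 50)  # shrinks towards zero
exploding = gradient_through_time(1.5, 50)  # grows very large
```

With a factor below 1 the gradient decays exponentially with the number of steps; with a factor above 1 it grows exponentially.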

- 30. Explaining Gradient Problem: Consider the following two examples, where the task is to predict the next word in the sequence: “The person who took my bike and …, ____ a thief.” (was) and “The students who got into Engineering with …, ____ from Asia.” (were). To predict the next word, the RNN must remember the earlier context: whether the subject was a singular or a plural noun. It can sometimes be difficult for the error to backpropagate to the beginning of the sequence (from state st back to s0) to predict what the output should be.

- 31. Solution to Gradient Problem: For the exploding gradient: identity initialization, truncated backpropagation, and gradient clipping. For the vanishing gradient: weight initialization, choosing the right activation function, and Long Short-Term Memory networks (LSTMs).
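
Of the remedies listed, gradient clipping is the easiest to show in isolation. The sketch below clips by global norm, which is one common variant; the slide does not say which variant is meant, and the function name `clip_by_norm` and the threshold are hypothetical.

```python
import math

def clip_by_norm(grads, max_norm):
    """Rescale the gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)       # small gradients pass through unchanged
    scale = max_norm / norm
    return [g * scale for g in grads]

clipped = clip_by_norm([30.0, -40.0], max_norm=5.0)  # norm 50, rescaled to norm 5
small = clip_by_norm([0.3, 0.4], max_norm=5.0)       # norm 0.5, left as is
```

Clipping keeps the direction of the gradient and only limits its size, so a single exploding step cannot throw the weights far off.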

- 32. Long-Term Dependencies: Suppose we try to predict the last word in the text “The clouds are in the ___”. Here we do not need any further context; it is pretty clear the last word is going to be “sky”.

- 33. Long-Term Dependencies: Suppose we try to predict the last word in the text “I have been staying in Spain for the last 10 years… I can speak fluent ______.” The word we predict depends on the previous words in context: here we need the context of Spain to predict the last word, “Spanish”. The gap between the relevant information and the point where it is needed can become very large. LSTMs help us solve this problem.

- 34. Long Short-Term Memory Networks: LSTMs are a special kind of recurrent neural network, capable of learning long-term dependencies. Remembering information for long periods of time is their default behavior. All recurrent neural networks have the form of a chain of repeating neural network modules. In standard RNNs, this repeating module has a very simple structure, such as a single tanh layer.

- 35. Long Short-Term Memory Networks: LSTMs also have a chain-like structure, but the repeating module is different. Instead of a single neural network layer, there are four interacting layers communicating in a very special way.

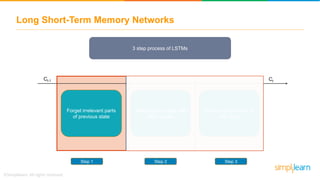

- 36. Long Short-Term Memory Networks: the three-step process of LSTMs, which takes the previous cell state $C_{t-1}$ to the new cell state $C_t$. Step 1: forget irrelevant parts of the previous state. Step 2: selectively update cell state values. Step 3: output certain parts of the cell state.

- 39. Working of LSTMs, Step 1: The first step in the LSTM is to decide which information should be omitted from the cell in that particular time step. This is decided by a sigmoid function, which looks at the previous state ($h_{t-1}$) and the current input ($x_t$). The result is the forget gate $f_t$, which decides which information from the previous time step is not important and should be deleted, that is, how much of the past the cell should remember.

- 40. Working of LSTMs, Step 1 example: Consider an LSTM fed with the following inputs from the previous and present time steps. Previous output ($h_{t-1}$): “Alice is good in Physics. John on the other hand is good in Chemistry.” Current input ($x_t$): “John plays football well. He told me yesterday over phone that he had served as the captain of his college football team.” The forget gate realizes there might be a change in context after encountering the first full stop and compares with the current input sentence at $x_t$. The next sentence talks about John, so the information on Alice is deleted: the position of the subject is vacated and assigned to John.
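
Written as a formula, the forget gate is usually given in the following form. This is the standard textbook expression rather than something stated on the slide; the weight matrix $W_f$ and bias $b_f$ are assumed names, and $[h_{t-1}, x_t]$ denotes the previous state concatenated with the current input.

```latex
f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)
```

Each entry of $f_t$ lies between 0 and 1: values near 0 erase the matching entry of the previous cell state $C_{t-1}$, and values near 1 keep it.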

- 45. Working of LSTMs, Step 2: The second layer has two parts, a sigmoid function and a tanh function. The sigmoid function decides which values to let through (its outputs lie between 0 and 1). The tanh function gives a weight to the values that are passed, deciding their level of importance (-1 to 1). The sigmoid part is the input gate $i_t$, which determines which information to let through based on its significance in the current time step; together with the tanh candidate $\tilde{C}_t$, it decides how much this unit should add to the current state.
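
In formula form, the second step is commonly written as below. As with any textbook rendering, the weight and bias names ($W_i$, $b_i$, $W_C$, $b_C$) are assumptions and do not appear on the slide; $f_t$ is the forget gate from Step 1 and $*$ is element-wise multiplication.

```latex
i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)
\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right)
C_t = f_t * C_{t-1} + i_t * \tilde{C}_t
```

The last line combines both steps: the old cell state is scaled by the forget gate, and the candidate values are added in proportion to the input gate.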

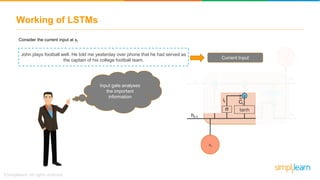

- 46. Working of LSTMs, Step 2 example: Consider the current input at $x_t$: “John plays football well. He told me yesterday over phone that he had served as the captain of his college football team.” The input gate analyses the important information.

- 47. Working of LSTMs: “John plays football” and “he was the captain of his college team” are important.

- 48. Working of LSTMs: “He told me over phone yesterday” is less important, hence it is forgotten.

- 49. Working of LSTMs: This process of adding new information is done via the input gate.

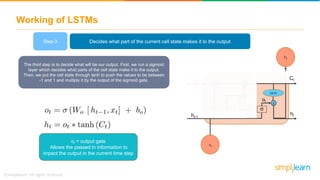

- 50. Working of LSTMs, Step 3: The third step is to decide what the output will be. First, we run a sigmoid layer, which decides what parts of the cell state make it to the output. Then we put the cell state through tanh to push the values to between -1 and 1 and multiply it by the output of the sigmoid gate. This sigmoid layer is the output gate $o_t$, which allows the passed-in information to impact the output in the current time step.
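
Putting the three steps together, a single LSTM time step can be sketched as follows. To stay readable the example uses scalar states and hand-picked weights, both of which are assumptions made for illustration; a real layer uses vectors and learned weight matrices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One scalar LSTM step; p maps a gate name to (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x_t + w_h * h_prev + b

    f_t = sigmoid(pre("forget"))        # Step 1: how much of c_prev to keep
    i_t = sigmoid(pre("input"))         # Step 2: how much new information to admit
    c_tilde = math.tanh(pre("cand"))    # Step 2: candidate values in (-1, 1)
    c_t = f_t * c_prev + i_t * c_tilde  # updated cell state
    o_t = sigmoid(pre("output"))        # Step 3: which part of the cell state to expose
    h_t = o_t * math.tanh(c_t)          # new hidden state, also the step's output
    return h_t, c_t

# Hypothetical hand-picked weights, for illustration only.
params = {
    "forget": (0.5, 0.1, 0.0),
    "input": (0.6, -0.2, 0.0),
    "cand": (1.0, 0.3, 0.0),
    "output": (0.4, 0.2, 0.0),
}

h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.3]:
    h, c = lstm_step(x, h, c, params)
```

The cell state `c` is only ever scaled and added to, which is what lets information survive across many time steps when the forget gate stays close to 1.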

- 51. Working of LSTMs, Step 3 example: Let’s consider this example of predicting the next word in a sentence: “John played tremendously well against the opponent and won for his team. For his contributions, brave ____ was awarded player of the match.” There could be a lot of choices for the empty space.

- 52. Working of LSTMs: The current input, “brave”, is an adjective.

- 53. Working of LSTMs: Adjectives describe a noun.

- 54. Working of LSTMs: “John” could be the best output after “brave”.

- 55. Use case implementation of LSTM: Let’s predict stock prices using an LSTM network. Based on the stock price data from 2012 to 2016, predict the stock prices of 2017.

- 56. Use case implementation of LSTM: Step 1: Import the libraries. Step 2: Import the training dataset. Step 3: Feature scaling.
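
The slide does not say which scaling method is used. A common choice for LSTM inputs is min-max scaling to the range 0 to 1, sketched here in plain Python under that assumption; the price values are made-up placeholders, not the tutorial's data, and the helper names are hypothetical.

```python
def min_max_scale(values):
    """Scale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant series")
    return [(v - lo) / (hi - lo) for v in values], lo, hi

def inverse_scale(scaled, lo, hi):
    """Map scaled values back to the original price range."""
    return [s * (hi - lo) + lo for s in scaled]

# Hypothetical prices standing in for the training column (not real data).
prices = [100.0, 110.0, 105.0, 120.0, 115.0]
scaled, lo, hi = min_max_scale(prices)
restored = inverse_scale(scaled, lo, hi)
```

Keeping `lo` and `hi` from the training data matters later: the same values are needed to scale the test inputs and to convert predictions back to prices.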

- 57. Use case implementation of LSTM: Step 4: Create a data structure with 60 timesteps and 1 output. Step 5: Import the Keras libraries and packages.
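
A data structure with 60 timesteps and 1 output can be read as a sliding window: each training sample holds the previous 60 values, and its target is the value that follows. The sketch below builds such windows from a placeholder series; the function name `make_windows` and the synthetic data are assumptions for illustration.

```python
def make_windows(series, timesteps=60):
    """Return (X, y): X[i] holds `timesteps` consecutive values, y[i] the next one."""
    X, y = [], []
    for i in range(timesteps, len(series)):
        X.append(series[i - timesteps:i])  # the previous `timesteps` values
        y.append(series[i])                # the value to predict
    return X, y

# Placeholder for the scaled training prices (synthetic, not real data).
series = [i / 100 for i in range(100)]
X_train, y_train = make_windows(series, timesteps=60)
```

A series of length N yields N - 60 samples, so the first 60 values only ever appear as inputs and never as targets.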

- 58. Use case implementation of LSTM: Step 6: Initialize the RNN. Step 7: Add the LSTM layers and some Dropout regularization.

- 59. Use case implementation of LSTM: Step 8: Add the output layer. Step 9: Compile the RNN. Step 10: Fit the RNN to the training set.

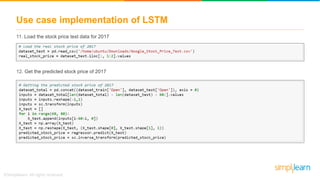

- 60. Use case implementation of LSTM: Step 11: Load the stock price test data for 2017. Step 12: Get the predicted stock price of 2017.

- 61. Use case implementation of LSTM: Step 13: Visualize the results of the predicted and real stock prices.

- 62. Key Takeaways
