Editor's Notes

- #10-#15: Think of it like a big hash table.

- #16-#18: X = throughput, compute power for MapReduce, storage, lower latency

- #33-#37: Consistent hashing means: 1) a large, fixed-size key-space; 2) no rehashing of keys. A key always hashes the same way.
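The two properties in the consistent hashing note can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the SHA-1 key-space (2^160), the 64-partition ring, the `bucket/key` hash input, and the round-robin ownership in `assign_partitions` are all hypothetical choices made for the example, not details taken from the slides.

```python
import hashlib

RING_SIZE = 2 ** 160                 # assumed: size of the SHA-1 output space
NUM_PARTITIONS = 64                  # assumed: fixed number of equal partitions
PARTITION_WIDTH = RING_SIZE // NUM_PARTITIONS


def key_hash(bucket: str, key: str) -> int:
    """Hash a bucket/key pair onto the fixed-size key-space."""
    digest = hashlib.sha1(f"{bucket}/{key}".encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def partition_for(bucket: str, key: str) -> int:
    """Index of the partition that the key falls into."""
    return key_hash(bucket, key) // PARTITION_WIDTH


def assign_partitions(nodes: list) -> dict:
    """Hypothetical round-robin ownership: partition index -> node name."""
    return {p: nodes[p % len(nodes)] for p in range(NUM_PARTITIONS)}


if __name__ == "__main__":
    p = partition_for("users", "alice")
    before = assign_partitions(["node1", "node2", "node3"])
    after = assign_partitions(["node1", "node2", "node3", "node4"])
    # The key's partition never changes; only the partition's owner may.
    print(p, before[p], after[p])
```

Adding a node changes which node owns a partition, but `partition_for` gives the same answer before and after, which is the "no rehashing of keys" property.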

- #103-#129: 1) Client requests a key. 2) A get handler (FSM) starts up to service the request. 3) It hashes the key to its owner partitions (N=3). 4) It sends a similar “get” request to those partitions. 5) It waits for R replies that concur (R=2). 6) It resolves the object and replies to the client. 7) The third reply may come back at any time, but the FSM replies as soon as the quorum is satisfied or violated.
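Steps 5 to 7 of the get walkthrough can be sketched as a small function that consumes replies one at a time and stops as soon as the outcome is decided. This is a simplification, not the real get FSM: the name `await_read_quorum` is hypothetical, and "concur" is modelled here as plain value equality.

```python
from collections import Counter
from typing import Hashable, Iterable, Optional, Tuple


def await_read_quorum(
    replies: Iterable[Hashable], n: int = 3, r: int = 2
) -> Tuple[str, Optional[Hashable], int]:
    """Return (status, value, replies_consumed).

    Stops as soon as r replies concur (quorum satisfied) or as soon as
    r concurring replies can no longer be reached (quorum violated).
    """
    counts = Counter()
    seen = 0
    for reply in replies:
        seen += 1
        counts[reply] += 1
        value, votes = counts.most_common(1)[0]
        if votes >= r:
            return ("ok", value, seen)
        if votes + (n - seen) < r:
            # Even if every outstanding reply agreed, r is out of reach.
            return ("error", None, seen)
    return ("error", None, seen)


if __name__ == "__main__":
    # Two concurring replies satisfy R=2; the third is never waited for.
    print(await_read_quorum(iter(["v1", "v1", "v2"])))
    # With R=3, two differing replies already violate the quorum.
    print(await_read_quorum(iter(["v1", "v2", "v1"]), n=3, r=3))
```

In both calls the function returns after two replies, matching the note that the third reply may arrive at any time without holding up the answer to the client.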

- #159: *** make sure to talk about LWW, and commit hooks -- tell them to ignore the vclock business ***

- #181: “Quorums”? When I say “quora” I mean the constraints (or lack thereof) your application puts on request consistency.

- #182-#185: Remember that requests contact all participant partitions/vnodes. No computer system is 100% reliable, so there will be times when increased latency or hardware failure makes a node unavailable. By unavailable, I mean requests time out, the network partitions, or there’s an actual physical outage. FT = fault-tolerance, C = consistency. Strong consistency (as opposed to strict) means that every read quorum overlaps every write quorum, i.e. R + W > N. The typical example is N=3, R=2, W=2. In every successful read request, at least one of the read partitions will be one that accepted the latest write.
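The overlap claim for N=3, R=2, W=2 can be checked mechanically. The sketch below compares the standard rule of thumb, R + W > N, with a brute-force enumeration of every possible read set and write set; the function names are hypothetical and exist only for this example.

```python
from itertools import combinations


def quorums_overlap(n: int, r: int, w: int) -> bool:
    """Rule of thumb: read and write quorums must intersect if R + W > N."""
    return r + w > n


def overlap_by_enumeration(n: int, r: int, w: int) -> bool:
    """Check every read set of size r against every write set of size w."""
    replicas = range(n)
    return all(
        set(read_set) & set(write_set)
        for read_set in combinations(replicas, r)
        for write_set in combinations(replicas, w)
    )


if __name__ == "__main__":
    for n, r, w in [(3, 2, 2), (3, 1, 1), (3, 1, 3)]:
        print((n, r, w), quorums_overlap(n, r, w), overlap_by_enumeration(n, r, w))
```

For N=3, R=2, W=2 both checks agree that any two replicas that answer a read must include at least one of the two that accepted the write; for R=1, W=1 they agree that no such guarantee exists.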

- #186-#189: However, writes are a little more complicated to track than reads. When there’s a detectable node outage or partition, writes are sent to fallbacks (hinted handoff), which means that Riak is HIGHLY write-available. Also, there’s an implied R quorum because the internal Erlang client has to fetch the object in order to update it and its vclock.

- #190-#192: Why don’t we outright reclaim the space? Ordering is hard to determine since deletes require no vclock. We prefer not to lose data when there is contention.

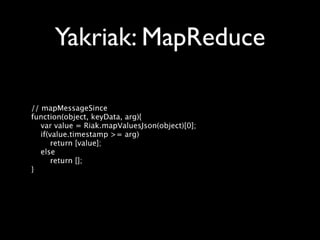

- #270: This is probably one of the easiest Map-Reduce queries/jobs you can submit. It simply returns the values of all the keys in the bucket, including their bucket/key/vclock and metadata.

- #271: Instead of specifying the function inline, you can also store it under a bucket/key, and have Riak retrieve and execute it automatically.

- #272-#273: A query that makes use of the “arg” in the map phase, named functions, and a reduce phase. Finally, here’s how you can submit all queries. Use the @- to signify that the request body will be read from standard input, starting on the next line and terminated by Ctrl-D.
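The slides' actual queries are not reproduced in these notes, so the following is only a sketch of what the simplest job (the one described for #270) might look like when built in Python. The JSON field names (`inputs`, `query`, `map`, `language`, `source`) follow Riak's HTTP MapReduce format as best recalled and should be checked against the slides; the bucket name and the helper `map_all_values_job` are hypothetical.

```python
import json


def map_all_values_job(bucket: str) -> dict:
    """Build a one-phase job whose map function returns each whole object."""
    return {
        # Using a bucket name as the input means every key in that bucket.
        "inputs": bucket,
        "query": [
            {
                "map": {
                    "language": "javascript",
                    # Returning [v] passes the object through unchanged,
                    # including its bucket, key, vclock and metadata.
                    "source": "function(v) { return [v]; }",
                }
            }
        ],
    }


if __name__ == "__main__":
    # The printed JSON is the request body to submit.
    print(json.dumps(map_all_values_job("example_bucket"), indent=2))
```

The printed body could then be piped or pasted into something like `curl -X POST -H "content-type: application/json" http://localhost:8098/mapred --data @-`, where the host, port, and path are assumptions about a default local node.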