S3, Cassandra or Outer Space? Dumping Time Series Data using Spark - Demi Ben-Ari, Panorays

- 1. S3, Cassandra or Outer Space? Dumping Time Series Data using Spark Demi Ben-Ari - VP R&D @ Tel-Aviv 30 MARCH 2017

- 2. About Me Demi Ben-Ari, Co-Founder & VP R&D @ Panorays ● BS’c Computer Science – Academic College Tel-Aviv Yaffo ● Co-Founder ○ “Big Things” Big Data Community ○ Google Developer Group Cloud In the Past: ● Sr. Data Engineer - Windward ● Team Leader & Sr. Software Engineer Missile defense and Alert System - “Ofek” – IAF Interested in almost every kind of technology – A True Geek

- 3. Agenda ● Apache Spark brief overview and Catch Up ● Data flow and Environment ● What’s our time series data like? ● Where we started from - where we got to ○ Problems and our decisions ○ Evolution of the solution ● Conclusions

- 4. Spark Brief Overview & Catchup

- 5. Scala & Spark (Architecture) Scala REPL Scala Compiler Spark Runtime Scala Runtime JVM File System (eg. HDFS, Cassandra, S3..) Cluster Manager (eg. Yarn, Mesos)

- 6. What kind of DSL is Apache Spark ● Centered around Collections ● Immutable data sets equipped with functional transformations ● These are exactly the Scala collection operations map flatMap filter ... reduce fold aggregate ... union intersection ...

- 7. Spark is A Multi-Language Platform ● Why to use Scala instead of Python? ○ Native to Spark, Can use everything without translation ○ Types help

- 8. So Bottom Line… What’s Spark???

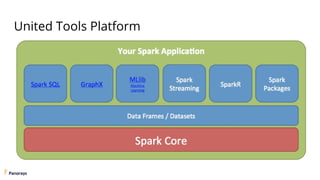

- 10. United Tools Platform - Single Framework Batch InteractiveStreaming Single Framework

- 11. Data flow and Environment (Our Use Case)

- 12. Structure of the Data ● Maritime Analytics Platform ● Geo Locations + Metadata ● Arriving over time ● Different types of messages being reported by satellites ● Encoded (For Compression purposes) ● Might arrive later than actually transmitted

- 13. Data Flow Diagram External Data Source Analytics Layers Data Pipeline Parsed Raw Entity Resolution Process Building insights on top of the entities Data Output Layer Anomaly Detection Trends

- 14. Environment Description Cluster Dev Testing Live Staging ProductionEnv OB1K RESTful Java Services

- 15. Basic Terms ● Missing Parts in Time Series Data ◦ Data arriving from the satellites ● Might be causing delays because of bad transmission ◦ Data vendors delaying the data stream ◦ Calculation in Layers may cause Holes in the Data ● Calculating the Data layers by time slices

- 16. Basic Terms ● Idempotence is the property of certain operations in mathematics and computer science, that can be applied multiple times without changing the result beyond the initial application. ● Function: Same input => Same output

- 17. Basic Terms ● Partitions == Parallelism ◦ Physical / Logical partitioning ● Resilient Distributed Datasets (RDDs) == Collections ◦ fault-tolerant collection of elements that can be operated on in parallel. ◦ Applying immutable transformations and actions over RDDs

- 18. What RDD’s really are?

- 19. So…..

- 20. The Problem - Receiving DATA Beginning state, no data, and the timeline begins T = 0 Level 3 Entity Level 2 Entity Level 1 Entity

- 21. The Problem - Receiving DATA T = 10 Level 3 Entity Level 2 Entity Level 1 Entity Computation sliding window size Level 1 entities data arrives and gets stored

- 22. The Problem - Receiving DATA T = 10 Level 3 Entity Level 2 Entity Level 1 Entity Computation sliding window size Level 3 entities are created on top of Level 2’s Data (Decreased amount of data) Level 2 entities are created on top of Level 1’s Data (Decreased amount of data)

- 23. The Problem - Receiving DATA T = 20 Level 3 Entity Level 2 Entity Level 1 Entity Computation sliding window size Because of the sliding window’s back size, level 2 and 3 entities would not be created properly and there would be “Holes” in the Data Level 1 entity's data arriving late

- 24. Solution to the Problem ● Creating Dependent Micro services forming a data pipeline ◦ Mainly Apache Spark applications ◦ Services are only dependent on the Data - not the previous service’s run ● Forming a structure and scheduling of “Back Sliding Window” ◦ Know your data and its relevance through time ◦ Don’t try to foresee the future – it might Bias the results

- 25. How it looks like in the end... Level 3 Entity Level 2 Entity Level 1 Entity 6 Hour time slot 12 Hours of Data A Week of Data More than a Week of Data

- 26. Starting point & Infrastructure

- 27. How we started? ● Spark Standalone – via ec2 scripts ◦ Around 5 nodes (r3.xlarge instances) ◦ Didn’t want to keep a persistent HDFS – Costs a lot ◦ 100 GB (per day) => ~150 TB for 4 years ◦ Cost for server per year (r3.xlarge): - On demand: ~2900$ - Reserved: ~1750$ ● Know your costs: http://www.ec2instances.info/

- 28. Know Your Costs

- 29. Decision ● Working with S3 as the persistence layer ◦ Pay extra for - Put (0.005 per 1000 requests) - Get (0.004 per 10,000 requests) ◦ 150TB => ~210$ for 4 years of Data ● Same format as HDFS (CSV files) ◦ s3n://some-bucket/entity1/201412010000/part-00000 ◦ s3n://some-bucket/entity1/201412010000/part-00001 ◦ ……

- 30. What about the serving?

- 31. MongoDB for Serving Worker 1 Worker 2 …. …. … … Worker N MongoDB Replica Set Spark Cluster Master Write Read

- 32. Spark Slave - Server Specs ● Instance Type: r3.xlarge ● CPU’s: 4 ● RAM: 30.5GB ● Storage: ephemeral ● Amount: 10+

- 33. MongoDB - Server Specs ● MongoDB version: 2.6.1 ● Instance Type: m3.xlarge (AWS) ● CPU’s: 4 ● RAM: 15GB ● Storage: EBS ● DB Size: ~500GB ● Collection Indexes: 5 (4 compound)

- 34. The Problem ● Batch jobs ◦ Should run for 5-10 minutes in total ◦ Actual - runs for ~40 minutes ● Why? ◦ ~20 minutes to write with the Java mongo driver – Async (Unacknowledged) ◦ ~20 minutes to sync the journal ◦ Total: ~ 40 Minutes of the DB being unavailable ◦ No batch process response and no UI serving

- 35. Alternative Solutions ● Sharded MongoDB (With replica sets) ◦ Pros: - Increases Throughput by the amount of shards - Increases the availability of the DB ◦ Cons: - Very hard to manage DevOps wise (for a small team of developers) - High cost of servers – because each shared need 3 replicas

- 36. Workflow with MongoDB Worker 1 Worker 2 …. …. … … Worker N Spark Cluster Master Write Read Master

- 37. Our DevOps – After that solution We had no DevOps guy at that time at all ☹

- 38. Alternative Solutions ● Apache Cassandra ◦ Pros: - Very large developer community - Linearly scalable Database - No single master architecture - Proven working with distributed engines like Apache Spark ◦ Cons: - We had no experience at all with the Database - No Geo Spatial Index – Needed to implement by ourselves

- 39. The Solution ● Migration to Apache Cassandra ● Create easily a Cassandra cluster using DataStax Community AMI on AWS ◦ First easy step – Using the spark-cassandra-connector (Easy bootstrap move to Spark ⬄ Cassandra) ◦ Creating a monitoring dashboard to Cassandra ● Second phase: ◦ Creating a self managed and self provisioned Cassandra Cluster ◦ Tuning the hell out of it!!!

- 40. Workflow with Cassandra Worker 1 Worker 2 …. …. … … Worker N Cassandra Cluster Spark Cluster Write Read

- 41. Result ● Performance improvement ◦ Batch write parts of the job run in 3 minutes instead of ~ 40 minutes in MongoDB ● Took 2 weeks to go from “Zero to Hero”, and to ramp up a running solution that work without glitches

- 42. So (Again)?

- 43. Transferring the Heaviest Process ● Micro service that runs every 10 minutes ● Writes to Cassandra 30GB per iteration ◦ (Replication factor 3 => 90GB) ● At first took us 18 minutes to do all of the writes ◦ Not Acceptable in a 10 minute process

- 44. Cluster On OpsCenter - Before

- 45. Transferring the Heaviest Process ● Solutions ◦ We chose the i2.xlarge ◦ Optimization of the Cluster ◦ Changing the JDK to Java-8 - Changing the GC algorithm to G1 ◦ Tuning the Operation system - Ulimit, removing the swap ◦ Write time went down to ~5 minutes (For 30GB RF=3) Sounds good right? I don’t think so

- 46. Cloud Watch After Tuning

- 47. The Solution ● Taking the same Data Model that we held in Cassandra (All of the Raw data per 10 minutes) and put it on S3 ◦ Write time went down from ~5 minutes to 1.5 minutes ● Added another process, not dependent on the main one, happens every 15 minutes ◦ Reads from S3, downscales the amount and Writes them to Cassandra for serving

- 48. Parsed Raw Static / Aggregated Data Spark Analytics Layers UI Serving Downscaled Data Heavy Fusion Process How it looks after all?

- 49. Conclusion ● Always give an estimate to your data ◦ Frequency ◦ Volume ◦ Arrangement of the previous phase ● There is no “Best” persistence layer ◦ There is the right one for the job ◦ Don’t overload an existing solution

- 50. Conclusion ● Spark is a great framework for distributed collections ◦ Fully functional API ◦ Can perform imperative actions ● “With great power, comes lots of partitioning” ◦ Control your work and data distribution via partitions ● https://www.pinterest.com/pin/155514993354583499/ (Thanks)

- 51. Questions?

- 52. ● LinkedIn ● Twitter: @demibenari ● Blog: http://progexc.blogspot.com/ ● [email protected] ● “Big Things” Community Meetup, YouTube, Facebook, Twitter ● GDG Cloud