Session 04 pig - slides

- 1. Hadoop Developer Training Session 04 - PIG

- 2. Agenda (Classification: Restricted)
  - PIG
  - PIG - Overview
  - Installation and Running Pig
  - Load in Pig
  - Macros in Pig

- 3. What is Apache Pig?
  - Apache Pig is an abstraction over MapReduce: a platform for analyzing large data sets by expressing the analysis as data flows. Pig is generally used with Hadoop, and the data manipulation operations available in Hadoop can be performed through it.
  - Pig provides a high-level language, Pig Latin, for writing data analysis programs. Pig Latin offers built-in operators and also lets programmers develop their own functions for reading, writing, and processing data.
  - To analyze data, programmers write scripts in Pig Latin. The Pig Engine component accepts these scripts as input and converts them into MapReduce jobs (Map and Reduce tasks).

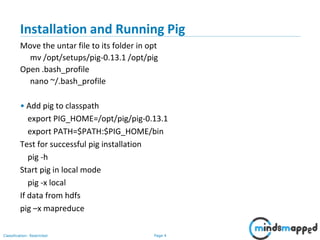

- 4. Installation and Running Pig
  - Pig is a data analytics framework in the Hadoop ecosystem. It can run in two modes:
    - Local mode: Pig runs on a single machine and uses the local file system for storage. Local mode is mainly used for debugging and testing Pig Latin scripts. Pass `-x local` to `pig` to start it in local mode.
    - MapReduce mode: the default mode. It requires a configured Hadoop cluster so that Pig can load files from HDFS and run Pig Latin scripts as MapReduce jobs.
  - Follow the steps below to configure Pig in your environment:
    - Download a Pig release tarball (these steps use `pig-0.13.0.tar.gz`).
    - Extract the tarball: `tar -xzvf pig-0.13.0.tar.gz`
    - Create a folder in /opt for the Pig installation: `mkdir /opt/pig`
    - Move the extracted directory into that folder: `mv /opt/setups/pig-0.13.0 /opt/pig`
    - Open .bash_profile: `nano ~/.bash_profile`

- 5. Installation and Running Pig
  - Add Pig to the PATH by appending these lines to .bash_profile:
    - `export PIG_HOME=/opt/pig/pig-0.13.0`
    - `export PATH=$PATH:$PIG_HOME/bin`
  - Test for a successful Pig installation: `pig -h`
  - Start Pig in local mode: `pig -x local`
  - If the data is in HDFS, start Pig in MapReduce mode: `pig -x mapreduce`

- 6. Installation and Running Pig
  - Apache Pig generally works on top of Hadoop. It is an analytical tool for large datasets that reside in the Hadoop File System (HDFS). To analyze data with Pig, the data must first be loaded into Pig. This part explains how to load data into Pig from HDFS.
  - Preparing HDFS: in MapReduce mode, Pig reads (loads) data from HDFS and stores the results back in HDFS. Therefore, start HDFS and create the following sample data in it.
  - The dataset contains the personal details (id, first name, last name, phone number, and city) of five students:

    | Student ID | First Name | Last Name | Phone | City |
    |---|---|---|---|---|
    | 1 | Peter | Burke | 4353521729 | Salt Lake City |
    | 2 | Aaron | Kimberlake | 8013528191 | Salt Lake City |
    | 3 | Danny | Jacob | 2958295582 | Salt Lake City |
    | 4 | Angela | Kouth | 2938811911 | Salt Lake City |
    | 5 | Peggy | Karter | 3202289119 | Salt Lake City |

- 7. Installation and Running Pig
  - Create a directory in HDFS: directories are created with the `mkdir` command of the HDFS shell (`hdfs dfs -mkdir`).
  - A Pig input file holds one tuple (record) per line, and the fields of a record are separated by a delimiter (a comma, ",", in this example).
  - In the local file system, create an input file student_data.txt containing the data shown below:
    - `1, Peter, Burke, 4353521729, Salt Lake City`
    - `2, Aaron, Kimberlake, 8013528191, Salt Lake City`
    - `3, Danny, Jacob, 2958295582, Salt Lake City`
    - `4, Angela, Kouth, 2938811911, Salt Lake City`
    - `5, Peggy, Karter, 3202289119, Salt Lake City`
  - Now move the file from the local file system to HDFS using the `put` command (`hdfs dfs -put`).
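
The file layout described on this slide (one record per line, fields separated by a delimiter) can be sketched in plain Python. This is only an illustration of the format, not part of Pig: the helper names `write_input_file` and `read_input_file` are hypothetical, and the fields are joined with a bare comma, whereas the slide shows a space after each comma (a space that a delimiter-based loader would keep as part of the field).

```python
from pathlib import Path
import tempfile

# Records copied from the slide: id, first name, last name, phone, city.
RECORDS = [
    (1, "Peter", "Burke", "4353521729", "Salt Lake City"),
    (2, "Aaron", "Kimberlake", "8013528191", "Salt Lake City"),
    (3, "Danny", "Jacob", "2958295582", "Salt Lake City"),
    (4, "Angela", "Kouth", "2938811911", "Salt Lake City"),
    (5, "Peggy", "Karter", "3202289119", "Salt Lake City"),
]


def write_input_file(path, records, delimiter=","):
    """Write one record per line, with fields joined by the delimiter."""
    lines = [delimiter.join(str(field) for field in record) for record in records]
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")


def read_input_file(path, delimiter=","):
    """Split every non-empty line back into its fields (all as strings)."""
    text = path.read_text(encoding="utf-8")
    return [line.split(delimiter) for line in text.splitlines() if line]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "student_data.txt"
        write_input_file(target, RECORDS)
        for fields in read_input_file(target):
            print(fields)
```

Reading the file back yields only strings; turning them into typed columns is the job of the schema given in the load statement.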

- 8. Load in Pig
  - The LOAD statement loads data into the specified relation in Pig. It consists of two parts divided by the "=" operator: the left-hand side names the relation that will hold the data, and the right-hand side defines how the data is loaded.
  - Syntax of the LOAD operator: `Relation_name = LOAD 'Input file path' USING function AS schema;`
  - Schema: the schema of the data is defined as `(column1:data_type, column2:data_type, column3:data_type)`.
  - Load the data from student_data.txt into Pig by executing the following Pig Latin statement in the Grunt shell:
    - `student = LOAD '/pig_data/student_data.txt' USING PigStorage(',') AS (id:int, firstname:chararray, lastname:chararray, phone:chararray, city:chararray);`
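
As a rough mental model of what the LOAD statement above does, the following plain-Python sketch splits each line on the delimiter and casts each field according to a schema. It is not Pig's implementation. The names `SCHEMA` and `load` are hypothetical, surrounding whitespace is stripped as a convenience of this sketch, and mapping missing or uncastable fields to `None` is a simplification standing in for Pig's null.

```python
# Schema from the slide: id:int, everything else chararray (modelled as str).
SCHEMA = [
    ("id", int),
    ("firstname", str),
    ("lastname", str),
    ("phone", str),
    ("city", str),
]


def load(lines, delimiter=",", schema=SCHEMA):
    """Turn delimited text lines into a list of typed tuples (a 'relation')."""
    relation = []
    for line in lines:
        raw = line.rstrip("\n").split(delimiter)
        record = []
        for position, (_name, cast) in enumerate(schema):
            # Assumption of this sketch: trim whitespace around each field.
            value = raw[position].strip() if position < len(raw) else None
            try:
                record.append(cast(value) if value is not None else None)
            except ValueError:
                # A field that cannot be cast becomes None.
                record.append(None)
        relation.append(tuple(record))
    return relation


sample = [
    "1, Peter, Burke, 4353521729, Salt Lake City",
    "2, Aaron, Kimberlake, 8013528191, Salt Lake City",
]
student = load(sample)

if __name__ == "__main__":
    for row in student:
        print(row)
```

The phone number stays a string here, matching the `phone:chararray` declaration on the slide; only `id` is converted to an integer.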

- 9. Load in Pig
  - The LOAD statement uses the PigStorage() function, which loads and stores data as structured text files. Its parameter is the delimiter that separates the fields of a tuple; the default is the tab character ('\t').
  - Once data has been loaded, it can be written to the file system with the STORE operator. Storing to an HDFS path, as in the example below, requires Pig to run in MapReduce mode; in local mode, STORE writes to the local file system.
  - Syntax: `STORE Relation_name INTO 'required_directory_path' [USING function];`
  - Example:
    - `student = LOAD '/pig_data/student_data.txt' USING PigStorage(',') AS (id:int, firstname:chararray, lastname:chararray, phone:chararray, city:chararray);`
    - `STORE student INTO 'hdfs://localhost:9000/pig_Output/' USING PigStorage(',');`
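
The idea behind STORE with a delimiter can be sketched in plain Python: the target is a directory, and the tuples are written as delimited text lines into a file inside it. This is an illustration under stated assumptions, not Pig's code. The function name `store` is hypothetical, the part-file name is illustrative, and writing `None` as an empty field is a modelling choice of this sketch.

```python
from pathlib import Path
import tempfile


def store(relation, directory, delimiter="\t"):
    """Write each tuple as one delimited line into a part file under directory."""
    out_dir = Path(directory)
    # The sketch refuses to reuse an existing output directory.
    out_dir.mkdir(parents=True, exist_ok=False)
    part_file = out_dir / "part-m-00000"  # illustrative file name
    with part_file.open("w", encoding="utf-8") as handle:
        for record in relation:
            fields = ("" if field is None else str(field) for field in record)
            handle.write(delimiter.join(fields) + "\n")
    return part_file


if __name__ == "__main__":
    rows = [(1, "Peter", "Burke", "4353521729", "Salt Lake City")]
    with tempfile.TemporaryDirectory() as tmp:
        written = store(rows, Path(tmp) / "pig_Output", delimiter=",")
        print(written.read_text(encoding="utf-8"), end="")
```

Leaving out the `delimiter` argument falls back to a tab, mirroring the default described for PigStorage().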

- 10. Load in Pig
  - Dump operator: runs the Pig Latin statements and displays the results on the screen.
    - Syntax: `grunt> Dump Relation_Name;`
    - Example: `grunt> Dump student;`
  - Describe operator: shows the schema of a relation.
    - Syntax: `grunt> Describe Relation_name;`
    - Example: `grunt> describe student;`

- 11. Load in Pig
  - Output: executing the describe statement produces the following output.
    - `student: {id: int,firstname: chararray,lastname: chararray,phone: chararray,city: chararray}`
  - Illustrate operator: gives the step-by-step execution of a sequence of statements.
    - Syntax: `grunt> illustrate Relation_name;`
    - Example, illustrating the relation named student: `grunt> illustrate student;`

- 12. Load in Pig
  - Assume that the HDFS directory /pig_data/ contains a file named student_details.txt with the following content (id, first name, last name, age, phone, city):
    - `1, Peter, Burke, 21, 4353521729, Salt Lake City`
    - `2, Aaron, Kimberlake, 22, 8013528191, Salt Lake City`
    - `3, Danny, Jacob, 22, 2958295582, Salt Lake City`
    - `4, Angela, Kouth, 21, 2938811911, Salt Lake City`
    - `5, Peggy, Karter, 23, 3202289119, Salt Lake City`
    - `6, King, Salmon, 23, 2398329282, Salt Lake City`
    - `7, Carolyn, Fisher, 24, 2293322829, Salt Lake City`
    - `8, John, Hopkins, 24, 2102392020, Salt Lake City`
  - Load the file into the relation student_details:
    - `grunt> student_details = LOAD '/pig_data/student_details.txt' USING PigStorage(',') AS (id:int, firstname:chararray, lastname:chararray, age:int, phone:chararray, city:chararray);`

- 13. Load in Pig
  - The GROUP operator groups the data in one or more relations by collecting the tuples that share the same key.
  - Syntax: `grunt> Group_data = GROUP Relation_name BY key;`
  - Example, grouping student_details by age:
    - `grunt> group_data = GROUP student_details BY age;`
    - `grunt> dump group_data;`

- 14. Load in Pig
  - Output: the dump displays the contents of the relation group_data, as shown below. The resulting schema has two columns: the first holds the age by which the relation was grouped (Pig names this column group), and the second is a bag containing the tuples (student records) with that age.
    - `(21,{(4, Angela, Kouth, 21, 2938811911, Salt Lake City), (1, Peter, Burke, 21, 4353521729, Salt Lake City)})`
    - `(22,{(3, Danny, Jacob, 22, 2958295582, Salt Lake City), (2, Aaron, Kimberlake, 22, 8013528191, Salt Lake City)})`
    - `(23,{(6, King, Salmon, 23, 2398329282, Salt Lake City), (5, Peggy, Karter, 23, 3202289119, Salt Lake City)})`
    - `(24,{(8, John, Hopkins, 24, 2102392020, Salt Lake City), (7, Carolyn, Fisher, 24, 2293322829, Salt Lake City)})`
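
The shape of the grouped result (a key plus a bag of complete records) can be reproduced with a small plain-Python sketch. This models the idea only and is not how Pig executes GROUP. The function name `group_by` is hypothetical, and the ages in `STUDENT_DETAILS` are taken from the group keys shown in the output on this slide.

```python
from collections import defaultdict

# (id, firstname, lastname, age, phone, city); ages follow the grouped output.
STUDENT_DETAILS = [
    (1, "Peter", "Burke", 21, "4353521729", "Salt Lake City"),
    (2, "Aaron", "Kimberlake", 22, "8013528191", "Salt Lake City"),
    (3, "Danny", "Jacob", 22, "2958295582", "Salt Lake City"),
    (4, "Angela", "Kouth", 21, "2938811911", "Salt Lake City"),
    (5, "Peggy", "Karter", 23, "3202289119", "Salt Lake City"),
    (6, "King", "Salmon", 23, "2398329282", "Salt Lake City"),
    (7, "Carolyn", "Fisher", 24, "2293322829", "Salt Lake City"),
    (8, "John", "Hopkins", 24, "2102392020", "Salt Lake City"),
]


def group_by(relation, key_index):
    """Collect whole records into one 'bag' (a list) per distinct key value."""
    bags = defaultdict(list)
    for record in relation:
        bags[record[key_index]].append(record)
    # Sorted by key only to make the printed result easy to compare.
    return sorted(bags.items())


group_data = group_by(STUDENT_DETAILS, key_index=3)

if __name__ == "__main__":
    for age, bag in group_data:
        print(age, bag)
```

Within each bag the sketch keeps input order, so the records may appear in a different order than in the slide's output; the order of tuples inside a bag should not be relied on.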

- 15. Load in Pig
  - customers.txt
    - `1, Peter, 32, Salt Lake City, 2000.00`
    - `2, Aaron, 25, Salt Lake City, 1500.00`
    - `3, Danny, 23, Salt Lake City, 2000.00`
    - `4, Angela, 25, Salt Lake City, 6500.00`
    - `5, Peggy, 27, Salt Lake City, 8500.00`
    - `6, King, 22, Salt Lake City, 4500.00`
    - `7, Carolyn, 24, Salt Lake City, 10000.00`
  - orders.txt
    - `102,2009-10-08 00:00:00,3,3000`
    - `100,2009-10-08 00:00:00,3,1500`
    - `101,2009-11-20 00:00:00,2,1560`
    - `103,2008-05-20 00:00:00,4,2060`

- 16. Topics to be covered in the next session (PIG)
  - Loads in Pig Continued
  - Verification
  - Filters
  - Macros in Pig
