Spark Cassandra Connector Dataframes

- 1. Cassandra And Spark Dataframes Russell Spitzer Software Engineer @ Datastax

- 2. Cassandra And Spark Dataframes

- 3. Cassandra And Spark Dataframes

- 4. Cassandra And Spark Dataframes

- 5. Cassandra And Spark Dataframes

- 6. Tungsten Gives Dataframes OffHeap Power! Can compare memory off-heap and bitwise! Code generation!

- 7. The Core is the Cassandra Source https://github.com/datastax/spark-cassandra-connector/tree/master/spark-cassandra- connector/src/main/scala/org/apache/spark/sql/cassandra /** * Implements [[BaseRelation]]]], [[InsertableRelation]]]] and [[PrunedFilteredScan]]]] * It inserts data to and scans Cassandra table. If filterPushdown is true, it pushs down * some filters to CQL * */ DataFrame source org.apache.spark.sql.cassandra

- 8. The Core is the Cassandra Source https://github.com/datastax/spark-cassandra-connector/tree/master/spark-cassandra- connector/src/main/scala/org/apache/spark/sql/cassandra /** * Implements [[BaseRelation]]]], [[InsertableRelation]]]] and [[PrunedFilteredScan]]]] * It inserts data to and scans Cassandra table. If filterPushdown is true, it pushs down * some filters to CQL * */ DataFrame CassandraSourceRelation CassandraTableScanRDDConfiguration

- 9. Configuration Can Be Done on a Per Source Level clusterName:keyspaceName/propertyName. Example Changing Cluster/Keyspace Level Properties val conf = new SparkConf() .set("ClusterOne/spark.cassandra.input.split.size_in_mb","32") .set("default:test/spark.cassandra.input.split.size_in_mb","128") val lastdf = sqlContext .read .format("org.apache.spark.sql.cassandra") .options(Map( "table" -> "words", "keyspace" -> "test" , "cluster" -> "ClusterOne" ) ).load()

- 10. Configuration Can Be Done on a Per Source Level clusterName:keyspaceName/propertyName. Example Changing Cluster/Keyspace Level Properties val conf = new SparkConf() .set("ClusterOne/spark.cassandra.input.split.size_in_mb","32") .set("default:test/spark.cassandra.input.split.size_in_mb","128") val lastdf = sqlContext .read .format("org.apache.spark.sql.cassandra") .options(Map( "table" -> "words", "keyspace" -> "test" , "cluster" -> "ClusterOne" ) ).load() Namespace: ClusterOne spark.cassandra.input.split.size_in_mb=32

- 11. Configuration Can Be Done on a Per Source Level clusterName:keyspaceName/propertyName. Example Changing Cluster/Keyspace Level Properties val conf = new SparkConf() .set("ClusterOne/spark.cassandra.input.split.size_in_mb","32") .set("default:test/spark.cassandra.input.split.size_in_mb","128") val lastdf = sqlContext .read .format("org.apache.spark.sql.cassandra") .options(Map( "table" -> "words", "keyspace" -> "test" , "cluster" -> "ClusterOne" ) ).load() Namespace: default Keyspace: test spark.cassandra.input.split.size_in_mb=128 Namespace: ClusterOne spark.cassandra.input.split.size_in_mb=32

- 12. Configuration Can Be Done on a Per Source Level clusterName:keyspaceName/propertyName. Example Changing Cluster/Keyspace Level Properties val conf = new SparkConf() .set("ClusterOne/spark.cassandra.input.split.size_in_mb","32") .set("default:test/spark.cassandra.input.split.size_in_mb","128") val lastdf = sqlContext .read .format("org.apache.spark.sql.cassandra") .options(Map( "table" -> "words", "keyspace" -> "test" , "cluster" -> "ClusterOne" ) ).load() Namespace: default Keyspace: test spark.cassandra.input.split.size_in_mb=128 Namespace: ClusterOne spark.cassandra.input.split.size_in_mb=32

- 13. Configuration Can Be Done on a Per Source Level clusterName:keyspaceName/propertyName. Example Changing Cluster/Keyspace Level Properties val conf = new SparkConf() .set("ClusterOne/spark.cassandra.input.split.size_in_mb","32") .set("default:test/spark.cassandra.input.split.size_in_mb","128") val lastdf = sqlContext .read .format("org.apache.spark.sql.cassandra") .options(Map( "table" -> "words", "keyspace" -> "test" , "cluster" -> "default" ) ).load() Namespace: default Keyspace: test spark.cassandra.input.split.size_in_mb=128 Namespace: ClusterOne spark.cassandra.input.split.size_in_mb=32

- 14. Configuration Can Be Done on a Per Source Level clusterName:keyspaceName/propertyName. Example Changing Cluster/Keyspace Level Properties val conf = new SparkConf() .set("ClusterOne/spark.cassandra.input.split.size_in_mb","32") .set("default:test/spark.cassandra.input.split.size_in_mb","128") val lastdf = sqlContext .read .format("org.apache.spark.sql.cassandra") .options(Map( "table" -> "words", "keyspace" -> "other" , "cluster" -> "default" ) ).load() Namespace: default Keyspace: test spark.cassandra.input.split.size_in_mb=128 Namespace: ClusterOne spark.cassandra.input.split.size_in_mb=32 Connector Default

- 15. Predicate Pushdown Is Automatic! Select * From cassandraTable where clusteringKey > 100

- 16. Predicate Pushdown Is Automatic! Select * From cassandraTable where clusteringKey > 100 DataFrame DataFromC* Filter clusteringKey > 100 Show

- 17. Predicate Pushdown Is Automatic! Select * From cassandraTable where clusteringKey > 100 DataFrame DataFromC* Filter clusteringKey > 100 Show Catalyst

- 18. Predicate Pushdown Is Automatic! Select * From cassandraTable where clusteringKey > 100 DataFrame DataFromC* Filter clusteringKey > 100 Show Catalyst https://github.com/datastax/spark-cassandra-connector/blob/master/spark-cassandra- connector/src/main/scala/org/apache/spark/sql/cassandra/PredicatePushDown.scala

- 19. Predicate Pushdown Is Automatic! Select * From cassandraTable where clusteringKey > 100 DataFrame DataFromC* AND add where clause to CQL "clusteringKey > 100" Show Catalyst https://github.com/datastax/spark-cassandra-connector/blob/master/spark-cassandra- connector/src/main/scala/org/apache/spark/sql/cassandra/PredicatePushDown.scala

- 20. What can be pushed down? 1. Only push down no-partition key column predicates with =, >, <, >=, <= predicate 2. Only push down primary key column predicates with = or IN predicate. 3. If there are regular columns in the pushdown predicates, they should have at least one EQ expression on an indexed column and no IN predicates. 4. All partition column predicates must be included in the predicates to be pushed down, only the last part of the partition key can be an IN predicate. For each partition column, only one predicate is allowed. 5. For cluster column predicates, only last predicate can be non-EQ predicate including IN predicate, and preceding column predicates must be EQ predicates. 6. If there is only one cluster column predicate, the predicates could be any non-IN predicate. There is no pushdown predicates if there is any OR condition or NOT IN condition. 7. We're not allowed to push down multiple predicates for the same column if any of them is equality or IN predicate.

- 21. What can be pushed down? If you could write in CQL it will get pushed down.

- 22. What are we Pushing Down To? CassandraTableScanRDD All of the underlying code is the same as with sc.cassandraTable so everything with Reading and Writing applies

- 23. What are we Pushing Down To? CassandraTableScanRDD All of the underlying code is the same as with sc.cassandraTable so everything with Reading and Writing applies https://academy.datastax.com/ Watch me talk about this in the privacy of your own home!

- 24. How the Spark Cassandra Connector Reads Data

- 25. Spark RDDs Represent a Large Amount of Data Partitioned into Chunks RDD 1 2 3 4 5 6 7 8 9Node 2 Node 1 Node 3 Node 4

- 26. Node 2 Node 1 Spark RDDs Represent a Large Amount of Data Partitioned into Chunks RDD 2 346 7 8 9 Node 3 Node 4 1 5

- 27. Node 2 Node 1 RDD 2 346 7 8 9 Node 3 Node 4 1 5 Spark RDDs Represent a Large Amount of Data Partitioned into Chunks

- 28. Cassandra Data is Distributed By Token Range

- 29. Cassandra Data is Distributed By Token Range 0 500

- 30. Cassandra Data is Distributed By Token Range 0 500 999

- 31. Cassandra Data is Distributed By Token Range 0 500 Node 1 Node 2 Node 3 Node 4

- 32. Cassandra Data is Distributed By Token Range 0 500 Node 1 Node 2 Node 3 Node 4 Without vnodes

- 33. Cassandra Data is Distributed By Token Range 0 500 Node 1 Node 2 Node 3 Node 4 With vnodes

- 34. Node 1 120-220 300-500 780-830 0-50 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb The Connector Uses Information on the Node to Make Spark Partitions

- 35. Node 1 120-220 300-500 0-50 The Connector Uses Information on the Node to Make Spark Partitions 1 780-830 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 36. 1 Node 1 120-220 300-500 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 37. 2 1 Node 1 300-500 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 38. 2 1 Node 1 300-500 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 39. 2 1 Node 1 300-400 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 400-500 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 40. 21 Node 1 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 400-500 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 41. 21 Node 1 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 400-500 3 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 42. 21 Node 1 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 3 400-500 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 43. 21 Node 1 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 3 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 44. 4 21 Node 1 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 3 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 45. 4 21 Node 1 0-50 The Connector Uses Information on the Node to Make Spark Partitions 780-830 3 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 46. 421 Node 1 The Connector Uses Information on the Node to Make Spark Partitions 3 spark.cassandra.input.split_size_in_mb 1 Reported density is 100 tokens per mb

- 47. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50780-830 Node 1

- 48. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50

- 49. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50

- 50. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows

- 51. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows

- 52. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows 50 CQL Rows

- 53. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows

- 54. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows

- 55. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows

- 56. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows 50 CQL Rows

- 57. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows 50 CQL Rows

- 58. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows

- 59. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 780 and token(pk) <= 830 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows

- 60. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows

- 61. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows

- 62. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows

- 63. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows

- 64. 4 spark.cassandra.input.page.row.size 50 Data is Retrieved Using the DataStax Java Driver 0-50 780-830 Node 1 SELECT * FROM keyspace.table WHERE token(pk) > 0 and token(pk) <= 50 50 CQL Rows50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows 50 CQL Rows

- 65. How The Spark Cassandra Connector Writes Data

- 66. Spark RDDs Represent a Large Amount of Data Partitioned into Chunks RDD 1 2 3 4 5 6 7 8 9Node 2 Node 1 Node 3 Node 4

- 67. Node 2 Node 1 Spark RDDs Represent a Large Amount of Data Partitioned into Chunks RDD 2 346 7 8 9 Node 3 Node 4 1 5

- 68. Node 2 Node 1 RDD 2 346 7 8 9 Node 3 Node 4 1 5 The Spark Cassandra Connector saveToCassandra method can be called on almost all RDDs rdd.saveToCassandra("Keyspace","Table")

- 69. Node 11 A Java Driver connection is made to the local node and a prepared statement is built for the target table Java Driver

- 70. Node 11 Batches are built from data in Spark partitions Java Driver 1,1,1 1,2,1 2,1,1 3,8,1 3,2,1 3,4,1 3,5,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 3,9,1

- 71. Node 11 By default these batches only contain CQL Rows which share the same partition key Java Driver 1,1,1 1,2,1 2,1,1 3,8,1 3,2,1 3,4,1 3,5,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1

- 72. Node 11 Java Driver 1,1,1 1,2,1 2,1,1 3,8,1 3,2,1 3,4,1 3,5,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 By default these batches only contain CQL Rows which share the same partition key PK=1

- 73. Node 11 When an element is not part of an existing batch, a new batch is started Java Driver 1,1,1 1,2,1 2,1,1 3,8,1 3,2,1 3,4,1 3,5,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 PK=1

- 74. Node 11 Java Driver 1,1,1 1,2,1 2,1,1 3,8,1 3,2,1 3,4,1 3,5,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 When an element is not part of an existing batch, a new batch is started PK=1 PK=2

- 75. Node 11 Java Driver 1,1,1 1,2,1 2,1,1 3,8,1 3,2,1 3,4,1 3,5,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 When an element is not part of an existing batch, a new batch is started PK=1 PK=2

- 76. Node 11 Java Driver 1,1,1 1,2,1 2,1,1 3,8,13,2,1 3,4,1 3,5,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 If a batch size reaches batch.size.rows or batch.size.bytes it is executed by the driver PK=1 PK=2 PK=3

- 77. Node 11 Java Driver 1,1,1 1,2,1 2,1,1 3,8,13,2,1 3,4,1 3,5,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 PK=1 PK=2 PK=3 If a batch size reaches batch.size.rows or batch.size.bytes it is executed by the driver

- 78. Node 11 Java Driver 1,1,1 1,2,1 2,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4,3,9,1 3,1,1 spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 If a batch size reaches batch.size.rows or batch.size.bytes it is executed by the driver PK=1 PK=2

- 79. Node 11 Java Driver 1,1,1 1,2,1 2,1,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4,3,9,1 spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 If a batch size reaches batch.size.rows or batch.size.bytes it is executed by the driver PK=1 PK=2 PK=3

- 80. Node 11 If more than batch.buffer.size batches are currently being made, the largest batch is executed by the Java Driver Java Driver 1,1,1 1,2,1 2,1,1 3,1,1 1,4,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 PK=1 PK=2 PK=3

- 81. Node 11 Java Driver 2,1,1 3,1,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 PK=2 PK=3 If more than batch.buffer.size batches are currently being made, the largest batch is executed by the Java Driver

- 82. Node 11 Java Driver 2,1,1 3,1,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 If more than batch.buffer.size batches are currently being made, the largest batch is executed by the Java Driver PK=2 PK=3 PK=5

- 83. Node 11 Java Driver 2,1,1 3,1,1 5,4,1 2,4,1 8,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,1 If more than batch.buffer.size batches are currently being made, the largest batch is executed by the Java Driver PK=2 PK=3 PK=5

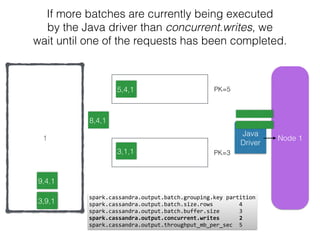

- 84. Node 11 If more batches are currently being executed by the Java driver than concurrent.writes, we wait until one of the requests has been completed. Java Driver 2,1,1 3,1,1 5,4,1 2,4,18,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,13,9,1 PK=2 PK=3 PK=5

- 85. Node 11 If more batches are currently being executed by the Java driver than concurrent.writes, we wait until one of the requests has been completed. Java Driver 2,1,1 3,1,1 5,4,1 2,4,18,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,9,13,9,1 Write Acknowledged PK=2 PK=3 PK=5

- 86. Node 11 If more batches are currently being executed by the Java driver than concurrent.writes, we wait until one of the requests has been completed. Java Driver 2,1,1 3,1,1 5,4,1 2,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 8,4,1 3,9,1 PK=2 PK=3 PK=5

- 87. Node 11 If more batches are currently being executed by the Java driver than concurrent.writes, we wait until one of the requests has been completed. Java Driver 3,1,1 5,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 8,4,1 3,9,1 PK=3 PK=5

- 88. Node 11 If more batches are currently being executed by the Java driver than concurrent.writes, we wait until one of the requests has been completed. Java Driver 3,1,1 5,4,1 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 8,4,1 3,9,1 PK=8 PK=3 PK=5

- 89. Node 11 If more batches are currently being executed by the Java driver than concurrent.writes, we wait until one of the requests has been completed. Java Driver 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,1,1 5,4,1 8,4,1 3,9,1 PK=8 PK=3 PK=5

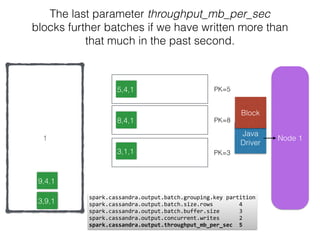

- 90. Node 11 The last parameter throughput_mb_per_sec blocks further batches if we have written more than that much in the past second. Java Driver 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,1,1 5,4,1 8,4,1 3,9,1 PK=8 PK=3 PK=5

- 91. Node 11 The last parameter throughput_mb_per_sec blocks further batches if we have written more than that much in the past second. Java Driver 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,1,1 5,4,1 8,4,1 3,9,1 PK=8 PK=3 PK=5 Write Acknowledged

- 92. Node 11 The last parameter throughput_mb_per_sec blocks further batches if we have written more than that much in the past second. Java Driver 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,1,1 5,4,1 8,4,1 3,9,1 PK=8 PK=3 PK=5

- 93. Node 11 The last parameter throughput_mb_per_sec blocks further batches if we have written more than that much in the past second. Java Driver 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,1,1 5,4,1 8,4,1 3,9,1 PK=8 PK=3 PK=5 Write Acknowledged

- 94. Node 11 The last parameter throughput_mb_per_sec blocks further batches if we have written more than that much in the past second. Java Driver 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 Block 3,1,1 5,4,1 8,4,1 3,9,1 PK=8 PK=3 PK=5

- 95. Node 11 The last parameter throughput_mb_per_sec blocks further batches if we have written more than that much in the past second. Java Driver 9,4,1 11,4, spark.cassandra.output.batch.grouping.key partition spark.cassandra.output.batch.size.rows 4 spark.cassandra.output.batch.buffer.size 3 spark.cassandra.output.concurrent.writes 2 spark.cassandra.output.throughput_mb_per_sec 5 3,1,1 5,4,1 8,4,1 3,9,1 PK=8 PK=3 PK=5

- 96. Thanks for Coming and I hope you Have a Great Time At C* Summit http://cassandrasummit-datastax.com/agenda/the-spark- cassandra-connector-past-present-and-future/ Also ask these guys really hard questions Jacek PiotrAlex

![The Core is the Cassandra Source

https://github.com/datastax/spark-cassandra-connector/tree/master/spark-cassandra-

connector/src/main/scala/org/apache/spark/sql/cassandra

/**

* Implements [[BaseRelation]]]], [[InsertableRelation]]]] and [[PrunedFilteredScan]]]]

* It inserts data to and scans Cassandra table. If filterPushdown is true, it pushs down

* some filters to CQL

*

*/

DataFrame

source

org.apache.spark.sql.cassandra](https://image.slidesharecdn.com/sparkcassandradataframes-150922055615-lva1-app6891/85/Spark-Cassandra-Connector-Dataframes-7-320.jpg)

![The Core is the Cassandra Source

https://github.com/datastax/spark-cassandra-connector/tree/master/spark-cassandra-

connector/src/main/scala/org/apache/spark/sql/cassandra

/**

* Implements [[BaseRelation]]]], [[InsertableRelation]]]] and [[PrunedFilteredScan]]]]

* It inserts data to and scans Cassandra table. If filterPushdown is true, it pushs down

* some filters to CQL

*

*/

DataFrame

CassandraSourceRelation

CassandraTableScanRDDConfiguration](https://image.slidesharecdn.com/sparkcassandradataframes-150922055615-lva1-app6891/85/Spark-Cassandra-Connector-Dataframes-8-320.jpg)