BDM25 - Spark runtime internal

- 1. Runtime Internal Nan Zhu (McGill University & Faimdata)

- 2. –Johnny Appleseed “Type a quote here.”

- 3. WHO AM I • Nan Zhu, PhD Candidate in School of Computer Science of McGill University • Work on computer networks (Software Defined Networks) and large-scale data processing • Work with Prof. Wenbo He and Prof. Xue Liu • PhD is an awesome experience in my life • Tackle real world problems • Keep thinking ! Get insights !

- 4. WHO AM I • Nan Zhu, PhD Candidate in School of Computer Science of McGill University • Work on computer networks (Software Defined Networks) and large-scale data processing • Work with Prof. Wenbo He and Prof. Xue Liu • PhD is an awesome experience in my life • Tackle real world problems • Keep thinking ! Get insights ! When will I graduate ?

- 5. WHO AM I • Do-it-all Engineer in Faimdata (http://www.faimdata.com) • Faimdata is a new startup located in Montreal • Build Customer-centric analysis solution based on Spark for retailers • My responsibility • Participate in everything related to data • Akka, HBase, Hive, Kafka, Spark, etc.

- 6. WHO AM I • My Contribution to Spark • 0.8.1, 0.9.0, 0.9.1, 1.0.0 • 1000+ code, 30 patches • Two examples: • YARN-like architecture in Spark • Introduce Actor Supervisor mechanism to DAGScheduler

- 7. WHO AM I • My Contribution to Spark • 0.8.1, 0.9.0, 0.9.1, 1.0.0 • 1000+ code, 30 patches • Two examples: • YARN-like architecture in Spark • Introduce Actor Supervisor mechanism to DAGScheduler I’m CodingCat@GitHub !!!!

- 8. WHO AM I • My Contribution to Spark • 0.8.1, 0.9.0, 0.9.1, 1.0.0 • 1000+ code, 30 patches • Two examples: • YARN-like architecture in Spark • Introduce Actor Supervisor mechanism to DAGScheduler I’m CodingCat@GitHub !!!!

- 10. What is Spark? • A distributed computing framework • Organize computation as concurrent tasks • Schedule tasks to multiple servers • Handle fault-tolerance, load balancing, etc, in automatic (and transparently)

- 11. Advantages of Spark • More Descriptive Computing Model • Faster Processing Speed • Unified Pipeline

- 12. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce)

- 13. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce)

- 14. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce) Map function, read each line of the input file and transform each word into <word, 1> pair

- 15. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce) Map function, read each line of the input file and transform each word into <word, 1> pair

- 16. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce) Map function, read each line of the input file and transform each word into <word, 1> pair

- 17. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce) Map function, read each line of the input file and transform each word into <word, 1> pair Reduce function, collect the <word, 1> pairs generated by Map function and merge them by accumulation

- 18. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce) Map function, read each line of the input file and transform each word into <word, 1> pair Reduce function, collect the <word, 1> pairs generated by Map function and merge them by accumulation

- 19. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce) Map function, read each line of the input file and transform each word into <word, 1> pair Reduce function, collect the <word, 1> pairs generated by Map function and merge them by accumulation

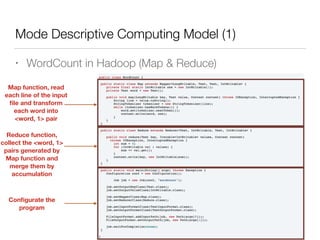

- 20. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce) Map function, read each line of the input file and transform each word into <word, 1> pair Reduce function, collect the <word, 1> pairs generated by Map function and merge them by accumulation Configurate the program

- 21. Mode Descriptive Computing Model (1) • WordCount in Hadoop (Map & Reduce) Map function, read each line of the input file and transform each word into <word, 1> pair Reduce function, collect the <word, 1> pairs generated by Map function and merge them by accumulation Configurate the program

- 22. DESCRIPTIVE COMPUTING MODEL (2) • WordCount in Spark Scala: Java:

- 23. DESCRIPTIVE COMPUTING MODEL (2) • Closer look at WordCount in Spark Scala: Organize Computation into Multiple Stages in a Processing Pipeline: transformation to get the intermediate results with expected schema action to get final output Computation is expressed with more high-level APIs, which simplify the logic in original Map & Reduce and define the computation as a processing pipeline

- 24. DESCRIPTIVE COMPUTING MODEL (2) • Closer look at WordCount in Spark Scala: Organize Computation into Multiple Stages in a Processing Pipeline: transformation to get the intermediate results with expected schema action to get final output Transformation Computation is expressed with more high-level APIs, which simplify the logic in original Map & Reduce and define the computation as a processing pipeline

- 25. DESCRIPTIVE COMPUTING MODEL (2) • Closer look at WordCount in Spark Scala: Organize Computation into Multiple Stages in a Processing Pipeline: transformation to get the intermediate results with expected schema action to get final output Transformation Action Computation is expressed with more high-level APIs, which simplify the logic in original Map & Reduce and define the computation as a processing pipeline

- 26. MUCH BETTER PERFORMANCE • PageRank Algorithm Performance Comparison Matei Zaharia, et al, Resilient Distributed Datasets: A Fault-Tolerant Abstraction for In-Memory Cluster Computing, NSDI 2012 0" 20" 40" 60" 80" 100" 120" 140" 160" 180" Hadoop"" Basic"Spark" Spark"with"Controlled; par<<on" Time%per%Itera+ons%(s)%

- 27. Unified pipeline Diverse APIs, Operational Cost, etc.

- 28. Unified pipeline

- 29. Unified pipeline • With a Single Spark Cluster • Batching Processing: Spark Core • Query: Shark & Spark SQL & BlinkDB • Streaming: Spark Streaming • Machine Learning: MLlib • Graph: GraphX

- 30. Understanding Distributed Computing Framework

- 31. Understand a distributed computing framework • DataFlow • e.g. Hadoop family utilizes HDFS to transfer data within a job and share data across jobs/applications HDFS Daemon MapTask HDFS Daemon MapTask HDFS Daemon MapTask

- 32. Understand a distributed computing framework • DataFlow • e.g. Hadoop family utilizes HDFS to transfer data within a job and share data across jobs/applications

- 33. Understand a distributed computing framework • DataFlow • e.g. Hadoop family utilizes HDFS to transfer data within a job and share data across jobs/applications

- 34. Understanding a distributed computing engine • Task Management • How the computation is executed within multiple servers • How the tasks are scheduled • How the resources are allocated

- 35. Spark Data Abstraction Model

- 36. Basic Structure of Spark program • A Spark Program val sc = new SparkContext(…) ! val points = sc.textFile("hdfs://...") .map(_.split.map(_.toDouble)).splitAt(1) .map { case (Array(label), features) => LabeledPoint(label, features) } ! val model = Model.train(points)

- 37. Basic Structure of Spark program • A Spark Program val sc = new SparkContext(…) ! val points = sc.textFile("hdfs://...") .map(_.split.map(_.toDouble)).splitAt(1) .map { case (Array(label), features) => LabeledPoint(label, features) } ! val model = Model.train(points) Includes the components driving the running of computing tasks (will introduce later)

- 38. Basic Structure of Spark program • A Spark Program val sc = new SparkContext(…) ! val points = sc.textFile("hdfs://...") .map(_.split.map(_.toDouble)).splitAt(1) .map { case (Array(label), features) => LabeledPoint(label, features) } ! val model = Model.train(points) Includes the components driving the running of computing tasks (will introduce later) Load data from HDFS, forming a RDD (Resilient Distributed Datasets) object

- 39. Basic Structure of Spark program • A Spark Program val sc = new SparkContext(…) ! val points = sc.textFile("hdfs://...") .map(_.split.map(_.toDouble)).splitAt(1) .map { case (Array(label), features) => LabeledPoint(label, features) } ! val model = Model.train(points) Includes the components driving the running of computing tasks (will introduce later) Load data from HDFS, forming a RDD (Resilient Distributed Datasets) object Transformations to generate RDDs with expected element(s)/ format

- 40. Basic Structure of Spark program • A Spark Program val sc = new SparkContext(…) ! val points = sc.textFile("hdfs://...") .map(_.split.map(_.toDouble)).splitAt(1) .map { case (Array(label), features) => LabeledPoint(label, features) } ! val model = Model.train(points) Includes the components driving the running of computing tasks (will introduce later) Load data from HDFS, forming a RDD (Resilient Distributed Datasets) object Transformations to generate RDDs with expected element(s)/ format All Computations are around RDDs

- 41. Resilient Distributed Dataset • RDD is a distributed memory abstraction which is • data collection • immutable • created by either loading from stable storage system (e.g. HDFS) or through transformations on other RDD(s) • partitioned and distributed sc.textFile(…) filter() map()

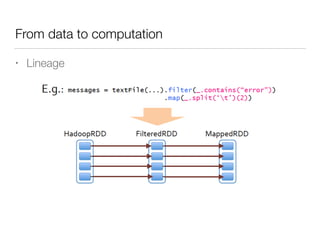

- 42. From data to computation • Lineage

- 43. From data to computation • Lineage

- 44. From data to computation • Lineage Where do I come from? (dependency)

- 45. From data to computation • Lineage Where do I come from? (dependency) How do I come from? (save the functions calculating the partitions)

- 46. From data to computation • Lineage Where do I come from? (dependency) How do I come from? (save the functions calculating the partitions) Computation is organized as a DAG (Lineage)

- 47. From data to computation • Lineage Where do I come from? (dependency) How do I come from? (save the functions calculating the partitions) Computation is organized as a DAG (Lineage) Lost data can be recovered in parallel with the help of the lineage DAG

- 48. Cache • Frequently accessed RDDs can be materialized and cached in memory • Cached RDD can also be replicated for fault tolerance (Spark scheduler takes cached data locality into account) • Manage the cache space with LRU algorithm

- 49. Benefits Brought Cache • Example (Log Mining)

- 50. Benefits Brought Cache • Example (Log Mining) Count is an action, for the first time, it has to calculate from the start of the DAG Graph (textFile)

- 51. Benefits Brought Cache • Example (Log Mining) Count is an action, for the first time, it has to calculate from the start of the DAG Graph (textFile) Because the data is cached, the second count does not trigger a “start-from-zero” computation, instead, it is based on “cachedMsgs” directly

- 52. Summary • Resilient Distributed Datasets (RDD) • Distributed memory abstraction in Spark • Keep computation run in memory with best effort • Keep track of the “lineage” of data • Organize computation • Support fault-tolerance • Cache

- 53. RDD brings much better performance by simplifying the data flow • Share Data among Applications A typical data processing pipeline

- 54. RDD brings much better performance by simplifying the data flow • Share Data among Applications A typical data processing pipeline Overhead Overhead

- 55. RDD brings much better performance by simplifying the data flow • Share Data among Applications A typical data processing pipeline Overhead Overhead

- 56. RDD brings much better performance by simplifying the data flow • Share Data among Applications A typical data processing pipeline

- 57. RDD brings much better performance by simplifying the data flow • Share Data among Applications A typical data processing pipeline

- 58. RDD brings much better performance by simplifying the data flow • Share data in Iterative Algorithms • Certain amount of predictive/machine learning algorithms are iterative • e.g. K-Means Step 1: Place randomly initial group centroids into the space. Step 2: Assign each object to the group that has the closest centroid. Step 3: Recalculate the positions of the centroids. Step 4: If the positions of the centroids didn't change go to the next step, else go to Step 2. Step 5: End.

- 59. RDD brings much better performance by simplifying the data flow • Share data in Iterative Algorithms • Certain amount of predictive/machine learning algorithms are iterative • e.g. K-Means

- 60. RDD brings much better performance by simplifying the data flow • Share data in Iterative Algorithms • Certain amount of predictive/machine learning algorithms are iterative • e.g. K-Means Assign group (Step 2) Recalculate Group (Step 3 & 4) Output (Step 5) HDFS Read Write Write

- 61. RDD brings much better performance by simplifying the data flow • Share data in Iterative Algorithms • Certain amount of predictive/machine learning algorithms are iterative • e.g. K-Means

- 62. RDD brings much better performance by simplifying the data flow • Share data in Iterative Algorithms • Certain amount of predictive/machine learning algorithms are iterative • e.g. K-Means Write Assign group (Step 2) Recalculate Group (Step 3 & 4) Output (Step 5) HDFS

- 63. RDD brings much better performance by simplifying the data flow • Share data in Iterative Algorithms • Certain amount of predictive/machine learning algorithms are iterative • e.g. K-Means

- 64. Spark Scheduler

- 65. Worker Worker The Structure of Spark Cluster Driver Program Spark Context Cluster Manager DAG Sche Task Sche Cluster Sche

- 66. Worker Worker The Structure of Spark Cluster Driver Program Spark Context Cluster Manager Each SparkContext creates a Spark application DAG Sche Task Sche Cluster Sche

- 67. Worker Worker The Structure of Spark Cluster Driver Program Spark Context Cluster Manager Each SparkContext creates a Spark application DAG Sche Task Sche Cluster Sche

- 68. Worker Worker The Structure of Spark Cluster Driver Program Spark Context Cluster Manager Each SparkContext creates a Spark application Submit Application to Cluster Manager DAG Sche Task Sche Cluster Sche

- 69. Worker Worker The Structure of Spark Cluster Driver Program Spark Context Cluster Manager Each SparkContext creates a Spark application Submit Application to Cluster Manager The Cluster Manager can be the master of standalone mode in Spark, Mesos and YARN DAG Sche Task Sche Cluster Sche

- 70. Worker Worker Executor Cache Executor Cache The Structure of Spark Cluster Driver Program Spark Context Cluster Manager Each SparkContext creates a Spark application Submit Application to Cluster Manager The Cluster Manager can be the master of standalone mode in Spark, Mesos and YARN DAG Sche Task Sche Cluster Sche

- 71. Worker Worker Executor Cache Executor Cache The Structure of Spark Cluster Driver Program Spark Context Cluster Manager Each SparkContext creates a Spark application Submit Application to Cluster Manager The Cluster Manager can be the master of standalone mode in Spark, Mesos and YARN Start Executors for the application in Workers; Executors registers with ClusterScheduler; DAG Sche Task Sche Cluster Sche

- 72. Worker Worker Executor Cache Executor Cache The Structure of Spark Cluster Driver Program Spark Context Cluster Manager Each SparkContext creates a Spark application Submit Application to Cluster Manager The Cluster Manager can be the master of standalone mode in Spark, Mesos and YARN Start Executors for the application in Workers; Executors registers with ClusterScheduler; DAG Sche Task Sche Cluster Sche Driver program schedules tasks for the application TaskTask Task Task

- 73. Scheduling Process join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Split DAG DAGScheduler RDD objects are connected together with a DAG Submit each stage as a TaskSet TaskScheduler TaskSetManagers ! (monitor the progress of tasks and handle failed stages) Failed Stages ClusterScheduler Submit Tasks to Executors

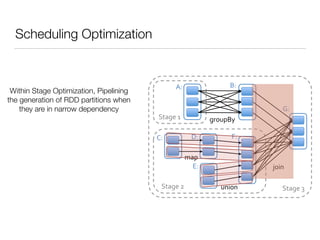

- 74. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:%

- 75. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Within Stage Optimization, Pipelining the generation of RDD partitions when they are in narrow dependency

- 76. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Within Stage Optimization, Pipelining the generation of RDD partitions when they are in narrow dependency

- 77. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Within Stage Optimization, Pipelining the generation of RDD partitions when they are in narrow dependency

- 78. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Within Stage Optimization, Pipelining the generation of RDD partitions when they are in narrow dependency

- 79. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Within Stage Optimization, Pipelining the generation of RDD partitions when they are in narrow dependency

- 80. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Within Stage Optimization, Pipelining the generation of RDD partitions when they are in narrow dependency

- 81. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Within Stage Optimization, Pipelining the generation of RDD partitions when they are in narrow dependency Partitioning-based join optimization, avoid whole-shuffle with best-efforts

- 82. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Within Stage Optimization, Pipelining the generation of RDD partitions when they are in narrow dependency Partitioning-based join optimization, avoid whole-shuffle with best-efforts

- 83. Scheduling Optimization join% union% groupBy% map% Stage%3% Stage%1% Stage%2% A:% B:% C:% D:% E:% F:% G:% Within Stage Optimization, Pipelining the generation of RDD partitions when they are in narrow dependency Partitioning-based join optimization, avoid whole-shuffle with best-efforts Cache-aware to avoid duplicate computation

- 84. Summary • No centralized application scheduler • Maximize Throughput • Application specific schedulers (DAGScheduler, TaskScheduler, ClusterScheduler) are initialized within SparkContext • Scheduling Abstraction (DAG, TaskSet, Task) • Support fault-tolerance, pipelining, auto-recovery, etc. • Scheduling Optimization • Pipelining, join, caching

- 85. We are hiring ! http://www.faimdata.com [email protected] [email protected]

- 86. Thank you! Q & A Credits to my friend, LianCheng@Databricks, his slides inspired me a lot